It's nothing new for voice-activated devices to behave badly when they misinterpret dialogue -- just ask anyone watching a Microsoft gaming event with a Kinect-equipped Xbox One nearby. However, Amazon's Echo devices are causing more of that chaos than usual. It started when a 6-year-old Dallas girl inadvertently ordered cookies and a dollhouse from Amazon by saying what she wanted. It was a costly goof ($170), but nothing too special by itself. However, the response to that story sent things over the top. When San Diego's CW6 discussed the snafu on a morning TV show, one of the hosts made the mistake of saying that he liked when the girl said "Alexa ordered me a dollhouse." You can probably guess what happened next.

Sticky Postings

All 242 fabric | rblg updated tags | #fabric|ch #wandering #reading

By fabric | ch

-----

As we continue to lack a decent search engine on this blog and as we don't use a "tag cloud", this post could help you navigate through the updated content on | rblg (as of 09.2023) via all its tags!

FIND BELOW ALL THE TAGS THAT CAN BE USED TO NAVIGATE IN THE CONTENTS OF | RBLG BLOG:

(to be seen just below if you're navigating on the blog's html pages or here for rss readers)

--

Note that we had to hit the "pause" button on our reblogging activities a while ago (mainly because we ran out of time, but also because we received complaints from a major image stock company about some images that were displayed on | rblg, an activity that we felt was still "fair use"; we've never made any money or advertised on this site).

Nevertheless, we continue to publish from time to time information on the activities of fabric | ch, or content directly related to its work (documentation).

Wednesday, March 12. 2025

Summoning the Ghosts of Modernity at MAMM (Medellin) | #exhibition #digital #algorithmic #matter #nonmatter

Note: fabric | ch is part of the exhibition Summoning the Ghosts of Modernity at the Museo de Arte Moderno de Medellin (MAMM), in Colombia.

The show constitutes a continuation of Beyond Matter that took place at the ZKM in 2022/23, and is curated by Lívia Nolasco-Rószás and Esteban Guttiérez Jiménez.

The exhibition will be open between the 13th of March and 15th of June 2025.

-----

By fabric | ch

Atomized (re-)Staging (2022), by fabric | ch. Exhibited during Summoning the Ghosts of Modernity at the Museo de Arte Moderno de Medellin (MAMM). March 13 to June 15, 2025.

More pictures of the exhibition on Pixelfed.

Friday, March 09. 2018

The Generator Project | #smart? #protosmart #architecture

Note: a proto-smart-architecture project by Cedric Price dating back to the 1970s, which sounds much more interesting than almost all contemporary smart architecture/city proposals.

The latter are, in most cases, stuck in highly functional approaches driven by "paths of least resistance/friction", supported if not financed by data-hungry corporations. That's not a desirable future from my point of view.

"(...). If not changed, the building would have become “bored” and proposed alternative arrangements for evaluation (...)"

Via Interactive Architecture Lab (at the Bartlett)

-----

Cedric Price’s proposal for the Gilman Corporation was a series of relocatable structures on a permanent grid of foundation pads on a site in Florida.

Cedric Price asked John and Julia Frazer to work as computer consultants for this project. They produced a computer program to organize the layout of the site in response to changing requirements, and in addition suggested that a single-chip microprocessor should be embedded in every component of the building, to make it the controlling processor.

This would result in an “intelligent” building which controlled its own organisation in response to use. If not changed, the building would have become “bored” and proposed alternative arrangements for evaluation, learning how to improve its own organisation on the basis of this experience.

The Brief

Generator (1976-79) sought to create conditions for shifting, changing personal interactions in a reconfigurable and responsive architectural project.

It followed this open-ended brief:

"A building which will not contradict, but enhance, the feeling of being in the middle of nowhere; has to be accessible to the public as well as to private guests; has to create a feeling of seclusion conducive to creative impulses, yet…accommodate audiences; has to respect the wildness of the environment while accommodating a grand piano; has to respect the continuity of the history of the place while being innovative."

The proposal consisted of an orthogonal grid of foundation bases, tracks and linear drains, in which a mobile crane could place a kit of parts comprised of cubical module enclosures and infill components (i.e. timber frames to be filled with modular components ranging from movable cladding wall panels to furniture, services and fittings), screening posts, decks and circulation components (i.e. walkways on the ground level and suspended at roof level) in multiple arrangements.

When Cedric Price approached John and Julia Frazer he wrote:

"The whole intention of the project is to create an architecture sufficiently responsive to the making of a change of mind constructively pleasurable."

Generator Project

They proposed four programs that would use input from sensors attached to Generator’s components. The first three were a “perpetual architect” drawing program that held the data and rules for Generator’s design, an inventory program that offered feedback on utilisation, and an interface for “interactive interrogation” that let users model and prototype Generator’s layout before committing the design.

The powerful and curious boredom program served to provoke Generator’s users. “In the event of the site not being re-organized or changed for some time the computer starts generating unsolicited plans and improvements,” the Frazers wrote. These plans would then be handed off to Factor, the mobile crane operator, who would move the cubes and other elements of Generator. “In a sense the building can be described as being literally ‘intelligent’,” wrote John Frazer—Generator “should have a mind of its own.” It would not only challenge its users, facilitators, architect and programmer—it would challenge itself.

The Frazers’ research and techniques

The first proposal, associated with a level of ‘interactive’ relationship between ‘architect/machine’, would assist in drawing and with the production of additional information, somewhat implicit in the other parallel developments/proposals.

The second proposal, related to the level of ‘interactive/semiautomatic’ relationship of ‘client–user/machine’, was ‘a perpetual architect for carrying out instructions from the Polorizer’ and for providing, for instance, operative drawings to the crane operator/driver; and the third proposal consisted of a ‘[. . .] scheduling and inventory package for the Factor [. . .] it could act as a perpetual functional critic or commentator.’

The fourth proposal, relating to the third level of relationship, enabled the permanent actions of the users, while the fifth proposal consisted of a ‘morphogenetic program which takes suggested activities and arranges the elements on the site to meet the requirements in accordance with a set of rules.’
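The "morphogenetic" program quoted above (taking suggested activities and arranging the elements on the site according to a set of rules) can be illustrated with a very small sketch. It is not the Frazers' program: the grid size, the activity names, the hypothetical `arrange` function and its single rule (each activity's cubes must be contiguous, and no foundation pad may hold two cubes) are assumptions chosen for illustration.

```python
from typing import Dict, List, Tuple

Cell = Tuple[int, int]  # (x, y) position of a foundation pad on the grid


def neighbours(cell: Cell, size: int) -> List[Cell]:
    """Orthogonal neighbours of a pad that stay on the square grid."""
    x, y = cell
    candidates = [(x + 1, y), (x - 1, y), (x, y + 1), (x, y - 1)]
    return [(a, b) for a, b in candidates if 0 <= a < size and 0 <= b < size]


def arrange(activities: Dict[str, int], size: int) -> Dict[str, List[Cell]]:
    """Greedily place each activity as a contiguous cluster of cubes.

    Hypothetical rule set: an activity's cubes must touch each other
    orthogonally, and a pad can carry only one cube.
    """
    occupied: Dict[Cell, str] = {}
    layout: Dict[str, List[Cell]] = {}
    # Largest activities first, so they still find enough free room.
    for name, cubes in sorted(activities.items(), key=lambda kv: -kv[1]):
        free = [(x, y) for y in range(size) for x in range(size) if (x, y) not in occupied]
        placed = None
        for seed in free:
            # Breadth-first growth from the seed pad keeps the cluster contiguous.
            cluster, frontier, claimed = [seed], [seed], {seed}
            while frontier and len(cluster) < cubes:
                for n in neighbours(frontier.pop(0), size):
                    if n not in occupied and n not in claimed and len(cluster) < cubes:
                        cluster.append(n)
                        claimed.add(n)
                        frontier.append(n)
            if len(cluster) == cubes:
                placed = cluster
                break
        if placed is None:
            raise ValueError(f"no room on the grid for {name!r}")
        for cell in placed:
            occupied[cell] = name
        layout[name] = placed
    return layout
```

For example, `arrange({"studio": 3, "concert": 4, "guest": 2}, size=4)` returns one contiguous cluster of pads per activity. Placing the largest activity first is only a simple greedy heuristic; a rule set closer to the brief could add constraints such as views, services or circulation.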

Finally, the last proposal was ‘[. . .] an extension [. . .] to generate unsolicited plans, improvements and modifications in response to users’ comments, records of activities, or even by building in a boredom concept so that the site starts to make proposals about rearrangements of itself if no changes are made. The program could be heuristic and improve its own strategies for site organisation on the basis of experience and feedback of user response.’
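The boredom concept and its heuristic feedback loop can be pictured as a toy model. Everything in it is hypothetical: the `BoredSite` class, the 30-day patience, swapping two modules as the "unsolicited plan", and adjusting patience from accept/reject responses as a crude stand-in for "improving its own strategies".

```python
import random
from dataclasses import dataclass, field
from typing import Dict, Optional, Tuple

Cell = Tuple[int, int]


@dataclass
class BoredSite:
    """Toy model of Generator's "boredom" idea (all parameters hypothetical)."""
    layout: Dict[str, Cell]      # module name -> foundation pad it sits on
    patience: float = 30.0       # days without change before the site gets bored
    idle_days: float = 0.0
    rng: random.Random = field(default_factory=lambda: random.Random(0))

    def user_changed_layout(self, layout: Dict[str, Cell]) -> None:
        """Any reorganisation by the users resets the boredom clock."""
        self.layout = dict(layout)
        self.idle_days = 0.0

    def tick(self, days: float) -> Optional[Dict[str, Cell]]:
        """Advance time; once bored, return an unsolicited rearrangement."""
        self.idle_days += days
        if self.idle_days < self.patience or len(self.layout) < 2:
            return None
        a, b = self.rng.sample(sorted(self.layout), 2)
        proposal = dict(self.layout)
        proposal[a], proposal[b] = proposal[b], proposal[a]  # swap two modules
        return proposal

    def feedback(self, accepted: bool, proposal: Dict[str, Cell]) -> None:
        """Crude heuristic learning: tune patience from the users' response."""
        if accepted:
            self.user_changed_layout(proposal)
            self.patience = max(7.0, self.patience * 0.8)  # users welcome change
        else:
            self.idle_days = 0.0
            self.patience *= 1.25                          # back off for a while
```

Accepted proposals make the site propose sooner next time, while rejected ones make it wait longer; a real heuristic could learn far richer preferences from the records of activity mentioned in the quote.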

Self Builder Kit and the Cal Build Kit, Working Models

In a certain way, the idea of a computational aid in the Generator project also acknowledged and intended to promote some degree of unpredictability. Generator, even if unbuilt, has acquired a notable position as the first intelligent building project. The collaboration between Cedric Price and the Frazers constituted an outstanding exchange between architecture and computational systems. The Generator experience explored the impact of the new techno-cultural order of the Information Society in terms of participatory design and responsive building. At an early date, it took responsiveness further; and postulates like those behind Generator, where the influence of new computational technologies reaches the level of experience and an aesthetics of interactivity, seem interesting and productive.

Resources

- John Frazer, An Evolutionary Architecture, Architectural Association Publications, London 1995. http://www.aaschool.ac.uk/publications/ea/exhibition.html

- Frazer to C. Price, (Letter mentioning ‘Second thoughts but using the same classification system as before’), 11 January 1979. Generator document folio DR1995:0280:65 5/5, Cedric Price Archives (Montreal: Canadian Centre for Architecture).

Thursday, January 26. 2017

How do they consume? | #algo #bots

Note: I just read this piece of news the other day about Echo (Amazon's "robot assistant"), which accidentally attempted to buy large amounts of toys by (always) listening to and misunderstanding a phrase spoken on TV by a presenter (and therefore captured by Echo in the living room, and so on)... It is so "stupid" (we can see how the act of buying through these so-called "A.I."s is automated by the default configuration), but it is revealing of the kind of feedback loops that can happen when automated decisions are delegated to bots and machines.

An interesting word appearing in this context is, btw, "accidentally".

Via Engadget

-----

By Jon Fingas

Amazon's Echo attempted a TV-fueled shopping spree

Sure enough, the channel received multiple reports from viewers whose Echo devices tried to order dollhouses when they heard the TV broadcast. It's not clear that any of the purchases went through, but it no doubt caused some panic among people who weren't planning to buy toys that day.

It's easy to avoid this if you're worried: you can require a PIN code to make purchases through the Echo or turn off ordering altogether. You can also change the wake word so that TV personalities won't set off your speaker in the first place. However, this comedy of errors also suggests that there's a lot of work to be done on smart speakers before they're truly trustworthy. They may need to disable purchases by default, for example, and learn to recognize individual voices so that they won't respond to everyone who says the magic words. Until then, you may see repeats in the future.
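The safeguards listed above (purchasing off by default, a PIN, and recognition of individual voices) amount to a small policy check run before any order is placed. The sketch below is hypothetical and is not Amazon's API; it assumes some speaker-recognition step has already produced a `speaker_id`, and `PurchasePolicy` is an illustrative name.

```python
from dataclasses import dataclass, field
from typing import Optional, Set


@dataclass
class PurchasePolicy:
    """Hypothetical purchase gate for a voice assistant (not Amazon's API)."""
    purchasing_enabled: bool = False   # off by default, as the article suggests
    pin: Optional[str] = None          # spoken confirmation code, if configured
    enrolled_voices: Set[str] = field(default_factory=set)

    def allow(self, speaker_id: Optional[str], spoken_pin: Optional[str]) -> bool:
        """Return True only if every configured safeguard is satisfied."""
        if not self.purchasing_enabled:
            return False
        # A TV presenter's voice should not match any enrolled household profile.
        if self.enrolled_voices and speaker_id not in self.enrolled_voices:
            return False
        if self.pin is not None and spoken_pin != self.pin:
            return False
        return True
```

A spoken PIN on its own could in principle also be broadcast, which is one argument for combining it with voice matching rather than relying on either check alone.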

Thursday, June 23. 2016

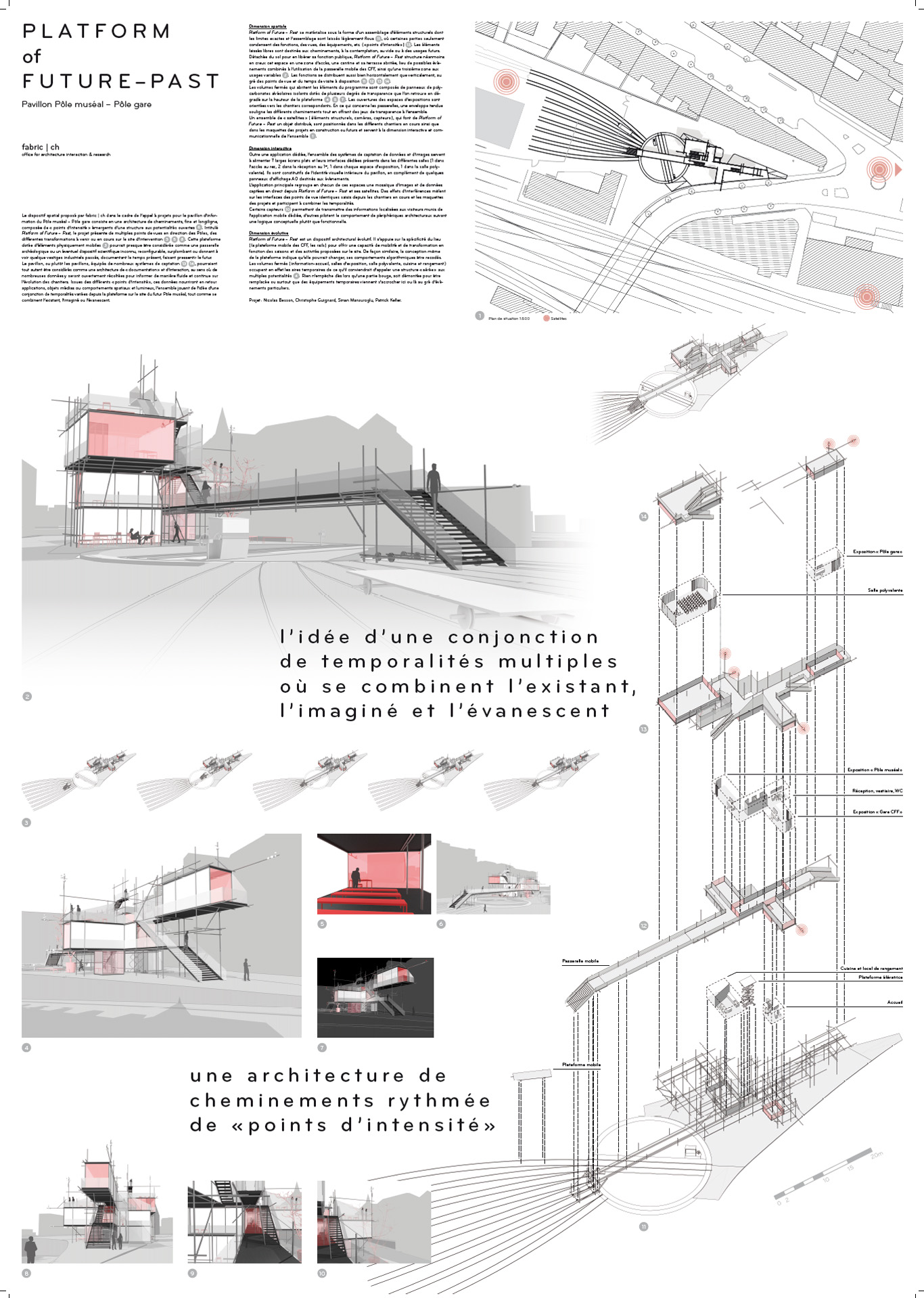

Platform of Future-Past by fabric | ch, architecture competition, (ex)1st prize | #data #architecture #experimentation

Note: we've been working recently at fabric | ch on a project that we couldn't publish or talk about for contractual reasons... It concerned a relatively large information pavilion we had to create for three new museums in Switzerland (in Lausanne) and a renewed public space (the railway station square). This pavilion was supposed to last for a decade, or a bit longer. The process was challenging, the work was good (we believed), but it finally didn't get built...

Sounds sad but common, doesn't it?

...

We'll see where these many "..." will lead us, but in the meantime and as a matter of documentation, let's stick to the interesting part and publish a first report about this project.

It was an evolution of a prior spatial installation entitled Heterochrony (pdf). A second post will soon follow with the developments of this competition proposal. Both posts will show how we try to combine small-scale experiments (exhibitions) with more permanent ones (architecture) in our work. It also marks our desire at fabric | ch to confront our ideas and research more regularly with architectural programs.

This first post only consists of a few snapshots of the competition documents, while the following one should present the "final" project and the ideas linked to it.

By fabric | ch

-----


On the jury's paper, under "prize" (as we didn't get paid for the 1st prize itself), was written: "Réalisation" (realization).

Just below in the same letter: "according to point 1.5 of the competition", no realization would be attributed... How ironic! We did work further on an extended study though.

A few words about the project taken from its presentation:

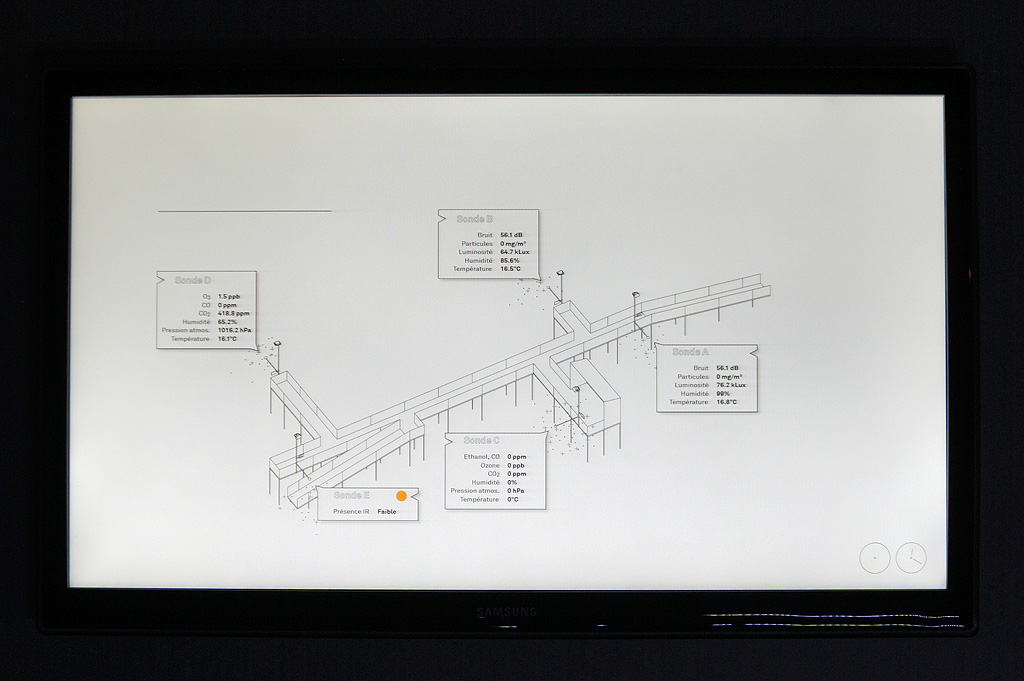

" (...) This platform with physically moving parts could almost be considered an archaeological footbridge or an unknown scientific device, reconfigurable and shiftable, overlooking and giving to see some past industrial remains, allowing to document the present, making foresee the future.

The pavilion, or rather pavilions, equipped with numerous sensor systems, could equally be considered an "architecture of documentation" and interaction, in the sense that extensive data will be collected to inform, in an open and fluid manner, about the continuous changes on the construction and transformation sites. Taken from the various "points of interest" on the platform, these data will feed back into applications ("architectural intelligence"?), media objects, and spatial and lighting behaviors. The ensemble will play with the idea of combining various time frames and will combine the existing, the imagined and the evanescent. (...) "

Heterochrony, a previous installation that followed similar goals, back in 2012. http://www.heterochronie.cc

Download pdf (14 mb).

-----

Project: fabric | ch

Team: Patrick Keller, Christophe Guignard, Sinan Mansuroglu, Nicolas Besson.

Sunday, December 14. 2014

I&IC workshop #3 at ECAL: output > Networked Data Objects & Devices | #data #things

Via iiclouds.org

-----

The third workshop we ran in the frame of I&IC with our guest researcher Matthew Plummer-Fernandez (Goldsmiths University) and the 2nd & 3rd year BA students in Media & Interaction Design (ECAL) ended last Friday (| rblg note: on the 21st of Nov.) with interesting results. The workshop focused on small situated computing technologies that could collect, aggregate and/or “manipulate” data in automated ways (bots) and which would certainly need to rely heavily on cloud technologies due to their low storage and computing capacities. In other words, “networked data objects” that will soon become very common, thanks to cheap new small computing devices (e.g. Raspberry Pis for DIY applications), microcontroller boards (e.g. Arduino) and sensors. The title of the workshop was “Botcave”, whose objective was explained by Matthew in a previous post.

The choice of this context of work was made in accordance with our overall research objective, even though we knew that it wouldn’t directly address the “cloud computing” apparatus (something we learned to be a difficult approach during the second workshop), but that it would nonetheless question its interfaces and the way we experience the whole service, especially the evolution of this apparatus through new types of everyday interactions and data generation.

Matthew Plummer-Fernandez (#Algopop) during the final presentation at the end of the research workshop.

Through this workshop, Matthew and the students definitely raised the following points and questions:

1° Small situated technologies that will soon spread everywhere will become heavy users of cloud-based computing and data storage, as they have low storage and computing capacities. While they might just use and manipulate existing data (like some of the workshop projects, e.g. #Good vs. #Evil or Moody Printer), they will mainly contribute to producing very large additional quantities of data (e.g. Robinson Miner). Yet the amount of meaningful data to be “pushed” to and “treated” in the cloud remains a big question mark: as there will be (too) huge amounts of such data (Lucien will probably post something later about this subject: “fog computing”), interdisciplinary teams might end up having to rethink cloud architectures.

2° Stored data become “alive” or significant only when “manipulated”. This can be done by “analog users” of course, but in general it is now rather done by rules and algorithms of different sorts (in the frame of this workshop: automated bots). Are these rules “situated” as well and possibly context aware (context intelligent), e.g. Robinson Miner? Or are they somehow more abstract and located anywhere in the cloud? Both?

3° These “Networked Data Objects” (and soon “Network Data Everything”) will contribute to “babelize” users’ interactions and interfaces in all directions, paving the way for new types of combinations and experiences (creolization processes); The Beast, The Like Hotline, Simon Coins and The Wifi Cracker could be considered starting phases of such processes. Cloud interfaces and computing will then become everyday “things” and, when at “home”, new domestic objects with which we’ll have totally different interactions (this last point must still be discussed though, as domesticity might not exist anymore according to Space Caviar).
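The data-volume concern in the first point, and the “fog computing” idea, can be illustrated by a device that summarizes its readings locally and only uploads when something has meaningfully changed. This is a minimal sketch under assumptions: the `EdgeAggregator` name, the window size, the 0.5 change threshold and the `upload` callback (standing in for any cloud endpoint) are all hypothetical.

```python
from statistics import mean
from typing import Callable, Dict, List, Optional


class EdgeAggregator:
    """Buffer raw sensor readings on the device and upload only summaries.

    `upload` stands in for whatever cloud endpoint a device would call
    (hypothetical here); it receives one small dict per window.
    """

    def __init__(self, window: int, upload: Callable[[Dict[str, float]], None],
                 min_change: float = 0.5) -> None:
        self.window = window
        self.upload = upload
        self.min_change = min_change
        self.buffer: List[float] = []
        self.last_sent: Optional[float] = None

    def add(self, reading: float) -> None:
        self.buffer.append(reading)
        if len(self.buffer) < self.window:
            return
        summary = {"mean": mean(self.buffer), "min": min(self.buffer),
                   "max": max(self.buffer), "n": float(len(self.buffer))}
        self.buffer.clear()
        # Skip the upload entirely if the average barely moved.
        if self.last_sent is None or abs(summary["mean"] - self.last_sent) >= self.min_change:
            self.upload(summary)
            self.last_sent = summary["mean"]
```

With a window of 4, twelve temperature readings turn into at most three small uploads, and fewer if the room stays stable; how much to keep local versus push to the cloud is exactly the architectural question raised above.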

Moody Printer – (Alexia Léchot, Benjamin Botros)

Moody Printer remains a basic conceptual proposal at this stage: a hacked printer, connected to a Raspberry Pi that stays hidden (it would be located inside the printer), has access to weather information. Similarly to human beings, its “mood” can be affected by such inputs following some basic rules (good/bad, hot/cold, sunny/cloudy/rainy, etc.). The automated process then searches Google Images according to its current “mood” (a direct link between “mood”, weather conditions and an exhaustive list of words) and autonomously starts to print the results.

A different kind of printer combined with weather monitoring.
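The Moody Printer's rule chain (weather, then mood, then word list, then image search) can be sketched as plain lookups. The moods, the 15 °C threshold and the keyword lists below are invented for illustration, and the students' actual rules are not documented here; the image search and the printing step are deliberately left out.

```python
from typing import Dict, List

# Hypothetical word lists linking each "mood" to image-search keywords.
MOOD_WORDS: Dict[str, List[str]] = {
    "cheerful": ["sunflower", "beach", "ice cream"],
    "sluggish": ["sofa", "blanket", "fog"],
    "grumpy": ["thunder", "puddle", "broken umbrella"],
}


def mood_from_weather(condition: str, temperature_c: float) -> str:
    """Basic rules in the spirit of good/bad, hot/cold, sunny/cloudy/rainy."""
    if condition == "rainy":
        return "grumpy"
    if condition == "sunny" and temperature_c >= 15:
        return "cheerful"
    return "sluggish"


def search_terms(condition: str, temperature_c: float) -> List[str]:
    """Keywords the printer would send to an image search before printing."""
    return MOOD_WORDS[mood_from_weather(condition, temperature_c)]
```

Keeping the mood as an explicit intermediate step means the same weather feed could drive other "moody" behaviors, not only the choice of images.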

The Beast – (Nicolas Nahornyj)

Top: Nicolas Nahornyj is presenting his project to the assembly. Bottom: the laptop and “the beast”.

The Beast is a device that asks to be fed with money at random times… It is your new laptop companion. To calm it down for a while, you must insert a coin in the slot provided for that purpose. If you don’t comply, not only will it continue to ask for money on a more frequent basis, but it will also randomly pick an image lying around on your hard drive, post it on a popular social network (e.g. Facebook, Pinterest, etc.) and then erase this image from your local disk. Slowly, The Beast will remove all images from your hard drive and post them online…

A different kind of slot machine combined with private file theft.
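The Beast's behavior can be described as a small set of rules: random demands, escalation when ignored, and a reset when fed. The simulation below only moves file names between two lists (nothing is posted or deleted); the `Beast` class, the 60-minute base interval and the halving rule are assumptions for illustration, not the student's implementation.

```python
import random
from typing import List, Optional


class Beast:
    """Simulation of The Beast's rules; posting and deleting are only simulated."""

    def __init__(self, images: List[str], base_interval: float = 60.0,
                 seed: int = 0) -> None:
        self.local_images = list(images)   # stand-in for images on the hard drive
        self.posted: List[str] = []        # stand-in for the social network
        self.base_interval = base_interval
        self.interval = base_interval      # minutes until the next demand
        self.rng = random.Random(seed)

    def next_demand_in(self) -> float:
        """Demands arrive at random times around the current interval."""
        return self.rng.uniform(0.5, 1.5) * self.interval

    def demand(self, coin_inserted: bool) -> Optional[str]:
        """Handle one demand; return the image "posted" if the user refused."""
        if coin_inserted:
            self.interval = self.base_interval     # calmed down for a while
            return None
        self.interval = max(1.0, self.interval / 2)  # ask more and more often
        if not self.local_images:
            return None
        victim = self.rng.choice(self.local_images)
        self.local_images.remove(victim)   # "erase" it from the local disk
        self.posted.append(victim)         # "post" it online
        return victim
```

Halving the interval on every refusal is one simple way to make the demands grow "more frequent"; any escalating schedule would fit the description.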

Robinson – (Anne-Sophie Bazard, Jonas Lacôte, Pierre-Xavier Puissant)

Top: Pierre-Xavier Puissant is looking at the autonomous “minecrafting” of his bot. Bottom: the proposed bot container, which takes up the idea of cubic construction. It could be placed in your garden, in one of your rooms, then in your fridge, etc.

Robinson automates the procedural construction of Minecraft environments. To do so, the bot uses local weather information monitored by a weather sensor inside the cubic box, attached to a Raspberry Pi that is also located within the box. The sensor looks for changes in temperature, humidity, etc., which then change the building blocks and construction rules inside Minecraft (put your cube inside your fridge and it will start to build icy blocks, put it in a wet environment and it will build with grass, etc.).

A different kind of thermometer combined with a construction game.
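Robinson's core idea, sensor readings selecting the building material, can be sketched as a mapping from temperature and humidity to a block name. The thresholds and the hypothetical `block_for` and `build_column` functions are assumptions; the block names are ordinary Minecraft block IDs, and actually sending blocks to a Minecraft world is out of scope here.

```python
from typing import Dict, List, Tuple


def block_for(temperature_c: float, humidity_pct: float) -> str:
    """Map one sensor reading to a Minecraft block name (thresholds assumed)."""
    if temperature_c <= 4:        # e.g. the cube has been put in the fridge
        return "ice"
    if humidity_pct >= 70:        # a damp environment builds with grass
        return "grass_block"
    if temperature_c >= 28:
        return "sand"
    return "stone"


def build_column(readings: List[Tuple[float, float]]) -> Dict[int, str]:
    """Stack one block per reading, returning a height -> block record."""
    return {height: block_for(t, h) for height, (t, h) in enumerate(readings)}
```

Because each reading adds one block, the resulting structure becomes a kind of physical log of where the cube has been kept.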

Note: Matthew Plummer-Fernandez also produced two (auto)Minecraft bots during the workshop week. The first one builds an environment according to fluctuations in different market indexes, while the second one tries to build “shapes” to escape this first environment. These two bots can be downloaded from the GitHub repository that was created during the workshop.

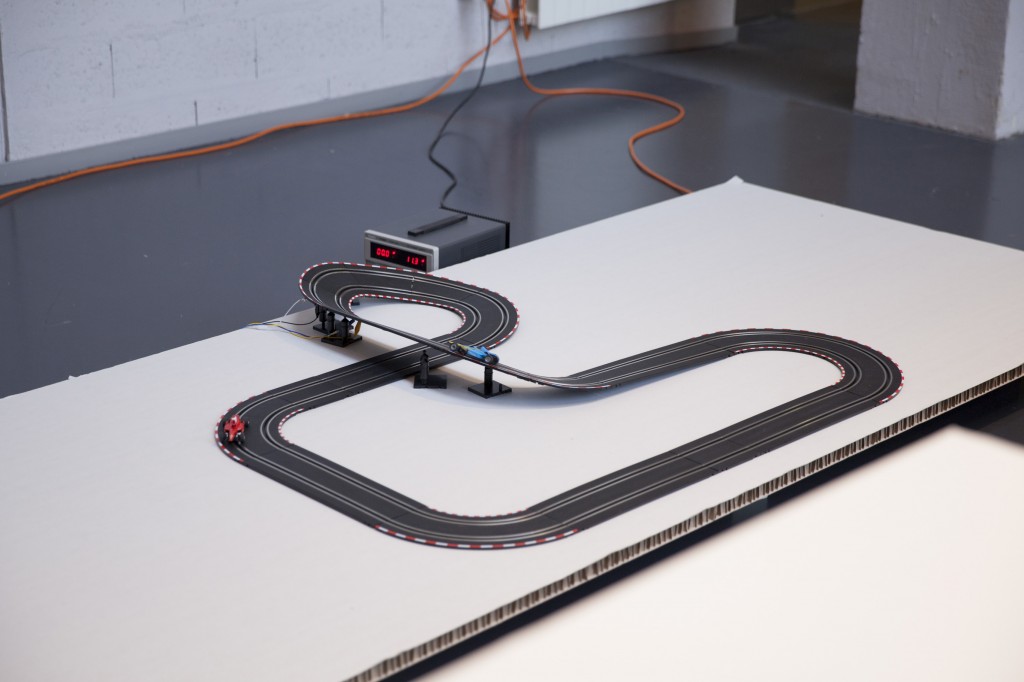

#Good vs. #Evil – (Maxime Castelli)

Top: a transformed car racing game. Bottom: a race is going on between two Twitter hashtags, materialized by two cars.

#Good vs. #Evil is a quite straightforward project. It is also a hack of an existing two-car racing game. In this case, though, the bot counts occurrences of two hashtags on Twitter: #Good and #Evil. At each new occurrence of one or the other word, the device gives an electric input to the associated car. The result is a slow and perpetual car race between “good” and “evil” through their online hashtag occurrences.

A different kind of data visualization combined with racing cars.
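The counting logic behind the race can be sketched independently of Twitter: each matching hashtag in a stream of messages advances the corresponding car. Here tweets are plain strings and one occurrence moves a car by an assumed 1 cm; the hypothetical `race` function does not reproduce how the device actually read Twitter or drove the cars.

```python
import re
from typing import Dict, Iterable

TRACKED = ("#good", "#evil")


def race(tweets: Iterable[str], step_cm: float = 1.0) -> Dict[str, float]:
    """Advance each hashtag's car by `step_cm` per occurrence (values assumed)."""
    position = {tag: 0.0 for tag in TRACKED}
    for text in tweets:
        # Whole hashtags only, case-insensitive: "#goodness" is not "#good".
        for tag in re.findall(r"#\w+", text.lower()):
            if tag in position:
                position[tag] += step_cm   # one electric pulse to that car
    return position
```

Matching whole hashtags keeps near-misses such as "#goodness" from moving the car, one of the small decisions any such data visualization has to make.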

The “Like” Hotline – (Mylène Dreyer, Caroline Buttet, Guillaume Cerdeira)

Top: Caroline Buttet and Mylène Dreyer are explaining their project. The laptop screen, showing a Facebook account, is projected on the left outer part of the image. Bottom: Caroline Buttet is using a hacked phone to “like” pages.

The “Like” Hotline proposes to hack a regular phone and install a hotline bot on it. Connected to an online Facebook account that follows a few personalities and the posts they make, the bot asks the caller questions, which can be answered using the phone’s keypad. After navigating through a few choices, the hotline bot helps you like a post on the social network.

A different kind of hotline combined with a social network.
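A hotline of this kind is essentially a menu tree navigated with keypad digits, with an action at each leaf. The menu contents and the `like:` action labels below are hypothetical, and no Facebook call is made; the sketch only shows how key presses resolve to an action.

```python
from typing import Dict, List, Union

# A node is either a submenu (digit -> node) or a leaf naming the action.
Menu = Union[Dict[str, "Menu"], str]

HOTLINE: Menu = {
    "1": {"1": "like:latest_post_personality_A", "2": "like:latest_post_personality_B"},
    "2": {"1": "like:most_commented_post"},
}


def dial(keys: List[str], menu: Menu = HOTLINE) -> str:
    """Follow keypad presses through the menu; return the action reached."""
    node = menu
    for key in keys:
        if isinstance(node, str):
            break                   # already at an action: ignore extra keys
        if key not in node:
            return "repeat_menu"    # invalid key: the bot asks again
        node = node[key]
    return node if isinstance(node, str) else "awaiting_choice"
```

For example, pressing 1 then 2 resolves to liking personality B's latest post, while an unknown digit makes the bot repeat its question, much like a conventional phone hotline.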

Simoncoin – (Romain Cazier)

Top: Romain Cazier introducing his “coin” project. Bottom: the device combines an old “Simon” memory game with the production of digital coins.

Simoncoin was unfortunately not finished at the end of the workshop week, but it was thought out in great detail, too much to explain in this short presentation. The main idea was to use the game logic of Simon to generate coins. In a parallel to Bitcoins, which are harder and harder to mine, Simon Coins also become more and more difficult to generate because of the game logic.

Another different kind of money combined with a memory game.
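One way to read "more and more difficult to generate" is that every coin already minted lengthens the next Simon sequence. The sketch assumes exactly that rule (start at 3 colours, one more per coin); it is an analogy only, since Bitcoin adjusts difficulty through a hash target, and `SimonMint` is a hypothetical name, not the student's design.

```python
import random
from typing import List

COLOURS = ("green", "red", "yellow", "blue")


class SimonMint:
    """Each minted coin makes the next Simon sequence one step longer (assumed rule)."""

    def __init__(self, start_length: int = 3, seed: int = 0) -> None:
        self.start_length = start_length
        self.coins = 0
        self.rng = random.Random(seed)
        self.challenge: List[str] = []

    def new_challenge(self) -> List[str]:
        """Draw a fresh colour sequence whose length grows with the coin supply."""
        length = self.start_length + self.coins
        self.challenge = [self.rng.choice(COLOURS) for _ in range(length)]
        return self.challenge

    def submit(self, answer: List[str]) -> bool:
        """Mint a coin only if the player repeats the whole sequence."""
        if answer and answer == self.challenge:
            self.coins += 1
            self.challenge = []   # a challenge can only be redeemed once
            return True
        return False
```

Because human memory, not computing power, is the bottleneck here, difficulty rises much faster for the player than the linear growth in sequence length suggests.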

The Wifi Cracker – (Bastien Girshig, Martin Hertig)

Top: Bastien Girshig and Martin Hertig (left of Matthew Plummer-Fernandez) presenting. Middle and bottom: the wifi password cracker slowly displays the letters of the wifi password.

The Wifi Cracker is an object that you can leave on its own in a space. Discreetly, it looks a little like a clock, but it won’t display the time. Instead, it looks for available wifi networks in the area and starts trying to find their protected password (Bastien and Martin found a ready-made process for that). The bot tests all possible combinations, which takes time. Once the device has found the working password, it uses its round display to transmit the password, letter by letter and slowly as well.

A different kind of cuckoo clock combined with a password cracker.
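Why "it will take time" follows from simple arithmetic: testing all combinations of a password of length L over an alphabet of A symbols means up to A^L attempts. The sketch below only computes that search space; the guessing rate is an assumed parameter, and the ready-made process the students used may not be an exhaustive search at all.

```python
def keyspace(alphabet_size: int, length: int) -> int:
    """Number of candidate passwords of exactly `length` characters."""
    return alphabet_size ** length


def worst_case_days(alphabet_size: int, length: int, tries_per_second: float) -> float:
    """Days needed to test every candidate at an assumed guessing rate."""
    return keyspace(alphabet_size, length) / tries_per_second / 86_400
```

At an assumed 1,000 tries per second, an 8-digit numeric password (10^8 candidates) takes at most about 1.2 days, while 8 lowercase letters (26^8, roughly 2 × 10^11 candidates) would take thousands of years, which is why the clock-like display can afford to be slow.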

Acknowledgments:

Many thanks to Matthew Plummer-Fernandez for his involvement and great workshop direction; Lucien Langton for his involvement, technical digging into Raspberry Pis, pictures and documentation; Nicolas Nova and Charles Chalas (from HEAD) as well as Christophe Guignard, Christian Babski and Alain Bellet for taking part or helping during the final presentation. A special thanks to the students from ECAL involved in the project and the energy they put into it: Anne-Sophie Bazard, Benjamin Botros, Maxime Castelli, Romain Cazier, Guillaume Cerdeira, Mylène Dreyer, Bastien Girshig, Jonas Lacôte, Alexia Léchot, Nicolas Nahornyj, Pierre-Xavier Puissant.

From left to right: Bastien Girshig, Martin Hertig (The Wifi Cracker project), Nicolas Nova, Matthew Plummer-Fernandez (#Algopop), a “mystery girl”, Christian Babski (in the background), Patrick Keller, Sebastian Vargas, Pierre-Xavier Puissant (Robinson Miner), Alain Bellet and Lucien Langton (taking the pictures…) during the final presentation on Friday.

Thursday, July 03. 2014

The Emerging Threat from Twitter's Social Capitalists | #social #networks

Note: I'm happy to learn that I'm not a "social capitalist"! I am not a "regular capitalist" either...

Via MIT Technology Review

-----

Social capitalists on Twitter are inadvertently ruining the network for ordinary users, say network scientists.

A couple of years ago, network scientists began to study the phenomenon of “link farming” on Twitter and other social networks. This is the process in which spammers gather as many links or followers as possible to help spread their messages.

What these researchers discovered on Twitter was curious. They found that link farming was common among spammers. However, most of the people who followed the spam accounts came from a relatively small pool of human users on Twitter.

These people turn out to be individuals who are themselves trying to amass social capital by gathering as many followers as possible. The researchers called these people social capitalists.

That raises an interesting question: how do social capitalists emerge and what kind of influence do they have on the network? Today we get an answer of sorts, thanks to the work of Vincent Labatut at Galatasaray University in Turkey and a couple of pals who have carried out the first detailed study of social capitalists and how they behave.

These guys say that social capitalists fall into at least two different categories that reflect their success and the roles they play in linking together diverse communities. But they warn that social capitalists have a dark side too.

First, a bit of background. Twitter has around 600 million users who send 60 million tweets every day. On average, each Twitter user has around 200 followers and follows a similar number, creating a dynamic social network in which messages percolate through the network of links.

Many of these people use Twitter to connect with friends, family, news organizations, and so on. But a few, the social capitalists, use the network purely to maximize their own number of followers.

Social capitalists essentially rely on two kinds of reciprocity to amass followers. The first is to reassure other users that if they follow this user, then he or she will follow them back, a process called Follow Me and I Follow You or FMIFY. The second is to follow anybody and hope they follow back, a process called I Follow You, Follow Me or IFYFM.

This process takes place regardless of the content of messages, which is how they get mixed up with spammers, a point that turns out to be significant later.

Clearly, social capitalists are different from Twitter users who choose to follow people based on the content they tweet. The question that Labatut and co set out to answer is how to automatically identify social capitalists in Twitter and to work out how they sit within the Twitter network.

A clear feature of the reciprocity mechanism is that there will be a large overlap between the friends and followers of social capitalists. It’s possible to measure this overlap and categorize users accordingly. Social capitalists tend to have an overlap much closer to 100 percent than ordinary users.

Having identified social capitalists, another important measure is the ratio of friends to followers. Labatut and co say that those using the FMIFY strategy have a ratio smaller than 1, while those using IFYFM have a ratio greater than 1 (FMIFY users only follow back those who follow them, so they end up with more followers than friends; IFYFM users follow first, so they end up with more friends than followers).

One final way to categorize them is by their level of success. Here, Labatut and others set an arbitrary threshold of 10,000 followers. Social capitalists with more than this are obviously more successful than those with less.
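The three measures just described (friend/follower overlap, friends-to-followers ratio, and the 10,000-follower success threshold) can be combined into a simple classifier. Two assumptions to flag: overlap is computed here as the shared accounts divided by the size of the smaller set, which is one common choice but not necessarily the paper's exact formula, and the 0.7 overlap cut-off is invented for illustration.

```python
from dataclasses import dataclass
from typing import Set


@dataclass
class Account:
    friends: Set[str]     # accounts this user follows
    followers: Set[str]   # accounts following this user


def overlap(a: Account) -> float:
    """Share of the smaller set that also appears in the other one (0 to 1)."""
    smaller = min(len(a.friends), len(a.followers))
    return len(a.friends & a.followers) / smaller if smaller else 0.0


def ratio(a: Account) -> float:
    """Friends-to-followers ratio: below 1 suggests FMIFY, above 1 IFYFM."""
    return len(a.friends) / len(a.followers) if a.followers else float("inf")


def classify(a: Account, overlap_threshold: float = 0.7,
             success_threshold: int = 10_000) -> str:
    """Label an account using the three measures (thresholds partly assumed)."""
    if overlap(a) < overlap_threshold:
        return "regular user"
    strategy = "FMIFY" if ratio(a) < 1 else "IFYFM"
    size = "high" if len(a.followers) > success_threshold else "low"
    return f"social capitalist ({strategy}, {size})"
```

An account that follows back 90 of its 100 followers gets an overlap of 1.0 and a ratio of 0.9, so it is labelled an FMIFY social capitalist below the success threshold; a ratio of exactly 1 falls into the IFYFM branch here, an arbitrary tie-break.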

To study these groups, Labatut and co analyze an anonymized dataset of 55 million Twitter users with two billion links between them. They find some 160,000 users who fit the description of social capitalist.

In particular, the team is interested in how social capitalists are linked to communities within Twitter, that is groups of users who are more strongly interlinked than average.

It turns out that there is a surprisingly large variety of social capitalists playing different roles. “We find out the different kinds of social capitalists occupy very specific roles,” say Labatut and co.

For example, social capitalists with fewer than 10,000 followers tend not to have large numbers of links within a single community but links to lots of different communities. By contrast, those with more than 10,000 followers can have a strong presence in single communities as well as link disparate communities together. In both cases, social capitalists are significant because their messages travel widely across the entire Twitter network.
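Whether a user's links stay inside one community or spread across many can be quantified with Guimerà and Amaral's participation coefficient, P = 1 - Σ_s (k_s / k)^2, where k_s is the number of links into community s and k the total degree. Treat this as an illustrative assumption: the article does not spell out which measures the study uses, and the community assignment is taken here as given.

```python
from collections import Counter
from typing import Dict, Iterable


def participation(neighbours: Iterable[str], community: Dict[str, int]) -> float:
    """Participation coefficient P = 1 - sum over s of (k_s / k) ** 2.

    P is 0 when all of a node's links stay in one community and approaches 1
    when they are spread evenly over many communities.
    """
    counts = Counter(community[n] for n in neighbours)
    k = sum(counts.values())
    if k == 0:
        return 0.0
    return 1.0 - sum((k_s / k) ** 2 for k_s in counts.values())
```

A user linked to two accounts of the same community scores 0, while one linked to four accounts in four different communities scores 0.75, the profile the study associates with social capitalists under 10,000 followers.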

That has important consequences for the Twitter network. Labatut and co say there is a clear dark side to the role of social capitalists. “Because of this lack of interest in the content produced by the users they follow, social capitalists are not healthy for a service such as Twitter,” they say.

That’s because they provide an indiscriminate conduit for spammers to peddle their wares. “[Social capitalists’] behavior helps spammers gain influence, and more generally makes the task of finding relevant information harder for regular users,” say Labatut and co.

That’s an interesting insight that raises a tricky question for Twitter and other social networks. Finding social capitalists should be straightforward now that Labatut and others have found a way to spot them automatically. But if social capitalists are detrimental, should their activities be restricted?

Ref:

http://arxiv.org/abs/1406.6611 : Identifying the Community Roles of Social Capitalists in the Twitter Network.

http://www.mpi-sws.org/~farshad/TwitterLinkfarming.pdf : Understanding and Combating Link Farming in the Twitter Social Network

Friday, April 25. 2014

ECAL students create bizarre smart home objects in Milan | #smart?

Before you start reading, let me add a missing piece of information: the projects were developed during a full semester by 2nd year bachelor students at ECAL, under the direction of Profs. Chris Kabel (product design) and Alain Bellet (interaction design).

Via It's Nice That

-----

By Rob Alderson

Léa Pereyre, Claire Pondard, Tom Zambaz: Mr Time (Image By ECAL/Axel Crettenand & Sylvain Aebischer)

It’s laudable that designers are working on worthy projects that will have a practical impact on building a better future, but we’re big believers that creatives should be engaged in making tomorrow a bit more fun too. Luckily for us, there are institutions like the Ecole cantonale d’art de Lausanne (ECAL).

At this year’s Milan Salone, ECAL’s Industrial Design and Media & Interaction Design students unveiled a series of weird and wonderful objects that presented “a playful interpretation take on the concept of the smart home.” These included a clock that mimics the gestures of those looking at it, cacti that respond musically to being caressed, a pair of chairs one of which reacts to the movements of the sitter in the other, a tea spoon that won’t be separated from its mug and a fan that is powered by the amplified breath of the homeowner.

It’s fair to say that some of these creations are completely impractical, but they all raise questions about our future interaction with household objects and they do so in the quirkiest way possible.


Thursday, April 24. 2014

Les-tuh skwair | #algorithm

-----

“As algorithmic systems become more prevalent, I’ve begun to notice a variety of emergent behaviors evolving to work around these constraints, to deal with the insufficiency of these black box systems…The first behavior is adaptation. These are situations where I bend to the system’s will. For example, adaptations to the shortcomings of voice UI systems — mispronouncing a friend’s name to get my phone to call them; overenunciating; or speaking in a different accent because of the cultural assumptions built into voice recognition. We see people contort their behavior to perform for the system so that it responds optimally.”

Alexis Lloyd (NYTimes R&D) shares some interesting views under the title In the Loop: Designing Conversations with Algorithms.

Friday, September 20. 2013

How to make players sick in your virtual reality game

Via Joystiq

-----

There is a great, undiscovered potential in virtual reality development. Sure, you can create lifelike virtual worlds, but you can also make players sick. Oculus VR founder Palmer Luckey and VP of product Nate Mitchell hosted a panel at GDC Europe last week, instructing developers on how to avoid the VR development pitfalls that make players uncomfortable. It was a lovely service for VR developers, but we saw a much greater opportunity. Inadvertently, the panel explained how to make players as queasy and uncomfortable as possible.

And so, we now present the VR developer's guide to manipulating your players right down to the vestibular level. Just follow these tips and your players will be tossing their cookies in minutes.

Note: If you'd rather not make your players horribly ill and angry, just do the opposite of everything below.

Include lots of small, tight spaces

In virtual reality, small and closed-off areas truly feel small, said Luckey. "Small corridors are really claustrophobic. It's actually one of the worst things you can do for most people in VR, is to put them in a really small corridor with the walls and the ceiling closing in on them, and then tell them to move rapidly through it."

Meanwhile, open spaces are a "relief," he said, so you'll want to avoid those.

Possible applications: Air duct exploration game.

Create a user interface that neglects depth and head-tracking

Virtual reality is all about depth and immersion, said Mitchell. So, if you want to break that immersion, your ideal user interface should be as traditional and flat as possible.

For example, put targeting reticles on a 2D plane in the center of a player's field of view. Maybe set it up so the reticle floats a couple of feet away from the player's face. "That is pretty uncomfortable for most players and they'll just try to grapple with what do they converge on: That near-field reticle or that distant mech that they're trying to shoot at?" To sample this effect yourself, said Mitchell, you can hold your thumb in front of your eyes. When you focus on a distant object, your thumb will appear to split in two. Now just imagine that happening to something as vital as a targeting reticle!

You might think that setting the reticle closer to the player will make things even worse, and you're right. "The sense of personal space can make people actually feel uncomfortable, like there's this TV floating right in front of their face that they try to bat out of the way." Mitchell said a dynamic reticle that paints itself onto in-game surfaces feels much more natural, so don't do that.

You can use similar techniques to create an intrusive, annoying heads-up display. Place a traditional HUD directly in front of the player's face. Again, they'll have to deal with double vision as their eyes struggle to focus on different elements of the game. Another option, since VR has a much wider field of view than monitors, is to put your HUD elements in the far corners of the display, effectively putting it into a player's peripheral vision. "Suddenly it's too far for the player to glance at, and they actually can't see pretty effectively." What's more, when players try to turn their head to look at it, the HUD will turn with them. Your players will spin around wildly as they desperately try to look at their ammo counter.

Possible applications: Any menu or user interface from Windows 3.1.

Disable head-tracking or take control away from the player

"Simulator sickness," when players become sick in a VR game, is actually the inverse of motion sickness, said Mitchell. Motion sickness is caused by feeling motion without being able to see it; Mitchell cited riding on a boat rocking in the ocean as an example. "There's all this motion, but visually you don't perceive that the floor, ceiling and walls are moving. And that's what that sensory disconnect (mainly in your vestibular senses) is what creates that conflict that makes you dizzy." Simulator sickness, he said, is the opposite. "You're in an environment where you perceive there to be motion, visually, but there is no motion. You're just sitting in a chair."

If you disable head-tracking in part of your game, it artificially creates just that sort of sensory disconnect. Furthermore, if you move the camera without player input, say to display a cut-scene, it can be very disorienting. When you turn your head in VR, you expect the world to turn with you. When it doesn't, you can have an uncomfortable reaction.

Possible applications: Frequent, Unskippable Cutscenes: The Game.

Feature plenty of backwards and lateral movement

Forward movement in a VR game tends not to cause problems, but many users have trouble dealing with backwards movement, said Mitchell. "You can imagine sometimes if you sit on a train and you perceive no motion, and the train starts moving backwards very quickly, or you see another car pulling off, all of those different sensations are very similar to that discomfort that comes from moving backwards in space." Lateral movement (i.e. sideways movement) has a similar effect, Mitchell said. "Being able to sort of strafe on a dime doesn't always cause the most comfortable experience."

Possible applications: Backwards roller coaster simulator.

Quick changes in altitude

"Quick changes in altitude do seem to cause disorientation," said Mitchell. Exactly why that happens isn't really understood, but it seems to hold true among VR developers. This means that implementing stairs or ramps into your games can throw players for a loop, which, remember, is exactly what we're after. Don't use closed elevators: they prevent users from perceiving the change in altitude and are generally much more comfortable.

Possible applications: A VR version of the last level from Ghostbusters on NES. Also: Backwards roller coaster simulator.

Don't include visual points of reference

When players look down in VR, they expect to see their character's body. Likewise, in a space combat or mech game, they expect to see the insides of the cockpit when they look around. "Having a visual identity is really crucial to VR. People don't want to look down and be a disembodied head." For the purposes of this guide, that makes a disembodied head the ideal avatar for aggravating your players.

Possible applications: Disembodied Heads ... in ... Spaaaaaace. Also: Disembodied head in a backwards roller coaster.

Shift the horizon line

Okay, this is probably one of the most devious ways to manipulate your players. Mitchell imagines a simulation of sitting on a beach, watching the sunset. "If you subtly tilt the horizon line very, very minimally, a couple degrees, the player will start to become dizzy and disoriented and won't know why."

Possible applications: Drunk at the Beach.

Shoot for a low frame rate, disable V-sync

"With VR, having the world tear non-stop is miserable." Enough said. Furthermore, a low frame rate can be disorienting as well. When players move their heads and the world doesn't keep pace, it's jarring to their natural senses.

Possible applications: Limitless.

In Closing

Virtual reality is still a fledgling technology and, as Luckey and Mitchell explained, there's still a long way to go before both players and developers fully understand it. There are very few points of reference, and there is no widely established design language that developers can draw from.

What Luckey and Mitchell have detailed - and what we've decided to ignore - is a basic set of guidelines on maintaining player comfort in the VR space. Fair warning though, if you really want to design a game that makes players sick, the developers of AaaaaAAaaaAAAaaAAAAaAAAAA!!! already beat you to it.

fabric | rblg

This blog is the survey website of fabric | ch - studio for architecture, interaction and research.

We curate and reblog articles, research, writings, exhibitions and projects that we notice and find interesting during our everyday practice and readings.

Most articles concern the intertwined fields of architecture, territory, art, interaction design, thinking and science. From time to time, we also publish documentation about our own work and research, immersed among these related resources and inspirations.

This website is used by fabric | ch as archive, references and resources. It is shared with all those interested in the same topics as we are, in the hope that they will also find valuable references and content in it.
