Thursday, July 25. 2013

Via MIT Technology Review via @chrstphggnrd

-----

New tricks will enable a life-logging app called Saga to figure out not only where you are, but what you’re doing.

By Tom Simonite

Having mobile devices closely monitoring our behavior could make them more useful, and open up new business opportunities.

Many of us already record the places we go and things we do by using our smartphone to diligently snap photos and videos, and to update social media accounts. A company called ARO is building technology that automatically collects a more comprehensive record of your life.

ARO is behind an app called Saga that automatically records every place that a person goes. Now ARO’s engineers are testing ways to use the barometer, cameras, and microphones in a device, along with a phone’s location sensors, to figure out where someone is and what they are up to. That approach should debut in the Saga app in late summer or early fall.

The current version of Saga, available for Apple and Android phones, automatically logs the places a person visits; it can also collect data on daily activity from other services, including the exercise-tracking apps FitBit and RunKeeper, and can pull in updates from social media accounts like Facebook, Instagram, and Twitter. Once the app has been running on a person’s phone for a little while, it produces infographics about his or her life; for example, charting the variation in times when they leave for work in the morning.

Software running on ARO’s servers creates and maintains a model of each user’s typical movements. Those models power Saga’s life-summarizing features, and help the app to track a person all day without requiring sensors to be always on, which would burn too much battery life.

“If I know that you’re going to be sitting at work for nine hours, we can power down our collection policy to draw as little power as possible,” says Andy Hickl, CEO of ARO. Saga will wake up and check a person’s location if, for example, a phone’s accelerometer suggests he or she is on the move; and there may be confirmation from other clues, such as the mix of Wi-Fi networks in range of the phone. Hickl says that Saga typically consumes around 1 percent of a device’s battery, significantly less than many popular apps for e-mail, mapping, or social networking.
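The wake-up logic described above can be pictured with a small sketch. This is not ARO's code: the `Context` fields, the thresholds and the use of a set-overlap score for Wi-Fi networks are all assumptions made for illustration. It only shows the general idea of leaving the location sensors off until cheap signals suggest that something has changed.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Context:
    """Cheap signals sampled while the location sensors stay off (hypothetical fields)."""
    accel_variance: float      # recent accelerometer variance, arbitrary units
    wifi_seen: frozenset       # network identifiers currently in range
    wifi_expected: frozenset   # networks usually seen at the predicted place


def wifi_overlap(seen, expected):
    """Jaccard similarity between two sets of network identifiers."""
    if not seen and not expected:
        return 1.0
    return len(seen & expected) / len(seen | expected)


def should_check_location(ctx, accel_threshold=0.5, overlap_threshold=0.3):
    """Return True when the cheap signals suggest the user has moved.

    Both thresholds are illustrative assumptions, not values from the article.
    """
    moving = ctx.accel_variance > accel_threshold
    wifi_changed = wifi_overlap(ctx.wifi_seen, ctx.wifi_expected) < overlap_threshold
    return moving or wifi_changed


office = frozenset({"office-a", "office-b"})
at_desk = Context(0.01, office, office)
walking = Context(1.2, office, office)
print(should_check_location(at_desk))   # False: still, familiar networks
print(should_check_location(walking))   # True: accelerometer suggests movement
```

In this sketch either signal alone triggers a location check; a stricter policy could require both, at the cost of reacting later.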

That consumption is low enough, says Hickl, that Saga can afford to ramp up the information it collects by accessing additional phone sensors. He says that occasionally sampling data from a phone’s barometer, cameras, and microphones will enable logging of details like when a person walked into a conference room for a meeting, or when they visit Starbucks, either alone or with company.

The Android version of Saga recently began using the barometer present in many smartphones to distinguish locations close to one another. “Pressure changes can be used to better distinguish similar places,” says Ian Clifton, who leads development of the Android version of ARO. “That might be first floor versus third floor in the same building, but also inside a vehicle versus outside it, even in the same physical space.”
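As a rough illustration of why a barometer can separate floors, the sketch below converts two pressure readings into a height difference with the standard international barometric formula. It is not taken from Saga: the function names and the 3-metre floor height are assumptions, and real readings would also drift with the weather.

```python
SEA_LEVEL_HPA = 1013.25  # standard reference pressure in hectopascals


def pressure_to_altitude_m(pressure_hpa, reference_hpa=SEA_LEVEL_HPA):
    """Approximate altitude in metres from pressure (international barometric formula)."""
    return 44330.0 * (1.0 - (pressure_hpa / reference_hpa) ** (1.0 / 5.255))


def floors_between(p_a_hpa, p_b_hpa, floor_height_m=3.0):
    """Estimate how many floors reading B is above reading A.

    floor_height_m is an assumed typical storey height, not a measured value.
    """
    delta_m = pressure_to_altitude_m(p_b_hpa) - pressure_to_altitude_m(p_a_hpa)
    return round(delta_m / floor_height_m)


# A drop of about 0.7 hPa corresponds to roughly six metres of height.
print(floors_between(1013.25, 1012.53))
```

Because only the difference between two readings taken close together in time matters, the choice of reference pressure has little effect on the result.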

ARO is internally testing versions of Saga that sample light and sound from a person’s environment. Clifton says that using a phone’s microphone to collect short acoustic fingerprints of different places can be a valuable additional signal of location, and allow inferences about what a person is doing. “Sometimes we’re not sure if you’re in Starbucks or the bar next door,” says Clifton. “With acoustic fingerprints, even if the [location] sensor readings are similar, we can distinguish that.”

Occasionally sampling the light around a phone using its camera provides another kind of extra signal of a person’s activity. “If you go from ambient light to natural light, that would say to us your context has changed,” says Hickl, and it should be possible for Saga to learn the difference between, say, the different areas of an office.

The end result of sampling light, sound, and pressure data will be Saga’s machine learning models being able to fill in more details of a user’s life, says Hickl. “[When] I go home today and spend 12 hours there, to Saga that looks like a wall of nothing,” he says, noting that Saga could use sound or light cues to infer when during that time at home he was, say, watching TV, playing with his kids, or eating dinner.

Andrew Campbell, who leads research into smartphone sensing at Dartmouth College, says that adding more detailed, automatic life-logging features is crucial for Saga or any similar app to have a widespread impact. “Automatic sensing relieves the user of the burden of inputting lots of data,” he says. “Automatic and continuous sensing apps that minimize user interaction are likely to win out.”

Campbell says that automatic logging coupled with machine learning should allow apps to learn more about users’ health and welfare, too. He recently started analyzing data from a trial in which 60 students used a life-logging app that Campbell developed called Biorhythm. It uses various data collection tricks, including listening for nearby voices to determine when a student is in a conversation. “We can see many interesting patterns related to class performance, personality, stress, sociability, and health,” says Campbell. “This could translate into any workplace performance situation, such as a startup, hospital, large company, or the home.”

Campbell’s project may shape how he runs his courses, but it doesn’t have to make money. ARO, funded by Microsoft cofounder Paul Allen, ultimately needs to make life-logging pay. Hickl says that he has already begun to rent out some of ARO’s technology to other companies that want to be able to identify their users’ location or activities. Aggregate data from Saga users should also be valuable, he says.

“Now we’re getting a critical mass of users in some areas and we’re able to do some trend-spotting,” he says. “The U.S. national soccer team was in Seattle, and we were able to see where activity was heating up around the city.” Hickl says the data from that event could help city authorities or businesses plan for future soccer events in Seattle or elsewhere. He adds that Saga could provide similar insights into many other otherwise invisible patterns of daily life.

Personal comment:

Or how to build up knowledge and minable data from low end "sensors". Finally, how some trivial inputs from low cost sensors can, combined with others, reveal deeper patterns in our everyday habits.

But who's ARO? Who founded it? Who are the "business angels" behind it and what are they up to? What exactly does the technology do? Where are its legal headquarters located (under which law)? Those are the first questions you should ask yourself before eventually giving your data to a private company... (I know, these are the usual suspects these days). But that's pretty hard to find! The CEO is Mr Andy Hickl, based in Seattle, with 1096 followers on Twitter and a "Sorry, this page isn't available" on Facebook; you can start to dig from there and mine for him on Google...

-

We are in need of some sort of efficient Creative Commons equivalent for data, one that would be respected by companies, as well as some open source equivalent for Facebook, Google, Dropbox, etc. (but also MS, Apple, etc.), located in countries that support these efforts through their laws and where these "Creative Commons" profiles and data would be implemented. Then, at least, we would have some choice.

In Switzerland, we have a term to describe how the landscape has been progressively used since the 60s to build small individual or holiday houses: "mitage du territoire" ("urban sprawl" seems to be the equivalent in English, but "mitage" is related to "moths" to be precise, so rather a "mothed" landscape, if I could say so, which says what it says), and we had the opportunity to vote against it recently, with success. I believe that the same thing is now happening with the personal and/or public sphere, with our lives: it is sprawled or "mothed" by private interests.

So, it is time to ask for the opportunity for everybody to "vote" (against it) and to have the choice between keeping ownership of your data, releasing it as public or being paid for it (like a share in the company('s product))!

Wednesday, June 19. 2013

Via MIT Technology Review

-----

By Ben Waber

A new line of research examines what happens in an office where the positions of the cubicles and walls—even the coffee pot—are all determined by data.

Can we use data about people to alter physical reality, even in real time, and improve their performance at work or in life? That is the question being asked by a developing field called augmented social reality.

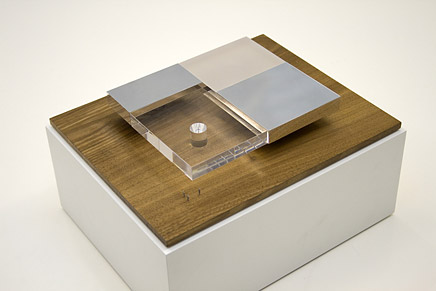

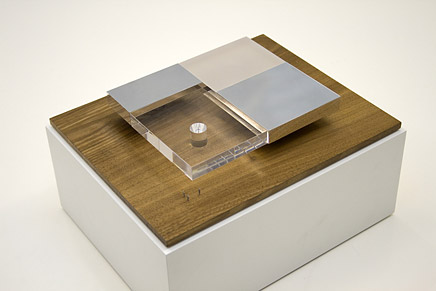

Here’s a simple example. A few years ago, with Sandy Pentland’s human dynamics research group at MIT’s Media Lab, I created what I termed an “augmented cubicle.” It had two desks separated by a wall of plexiglass with an actuator-controlled window blind in the middle. Depending on whether we wanted different people to be talking to each other, the blinds would change position at night every few days or weeks.

The augmented cubicle was an experiment in how to influence the social dynamics of a workplace. If a company wanted engineers to talk more with designers, for example, it wouldn’t set up new reporting relationships or schedule endless meetings. Instead, the blinds in the cubicles between the groups would go down. Now as engineers passed the designers it would be easier to have a quick chat about last night’s game or a project they were working on.

Human social interaction is rapidly becoming more measurable at a large scale, thanks to always-on sensors like cell phones. The next challenge is to use what we learn from this behavioral data to influence or enhance how people work with each other. The Media Lab spinoff company I run uses ID badges packed with sensors to measure employees’ movements, their tone of voice, where they are in an office, and whom they are talking to. We use data we collect in offices to advise companies on how to change their organizations, often through actual physical changes to the work environment. For instance, after we found that people who ate in larger lunch groups were more productive, Google and other technology companies that depend on serendipitous interaction to spur innovation installed larger cafeteria tables.

In the future, some of these changes could be made in real time. At the Media Lab, Pentland’s group has shown how tone of voice, fluctuation in speaking volume, and speed of speech can predict things like how persuasive a person will be in, say, pitching a startup idea to a venture capitalist. As part of that work, we showed that it’s possible to digitally alter your voice so that you sound more interested and more engaged, making you more persuasive.

Another way we can imagine using behavioral data to augment social reality is a system that suggests who should meet whom in an organization. Traditionally that’s an ad hoc process that occurs during meetings or with the help of mentors. But we might be able to draw on sensor and digital communication data to compare actual communication patterns in the workplace with an organizational ideal, then prompt people to make introductions to bridge the gaps. This isn’t the LinkedIn model, where people ask to connect to you, but one where an analytical engine would determine which of your colleagues or friends to introduce to someone else. Such a system could be used to stitch together entire organizations.
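A minimal sketch of that comparison, assuming both the organizational ideal and the observed communication can be reduced to lists of pairs of people: the function below lists the ideal links that were never observed and, for each one, the colleagues who already talk to both sides and could make the introduction. The names and the data layout are hypothetical, and a real system would work from weighted sensor and message data rather than plain pairs.

```python
from collections import defaultdict


def suggest_introductions(ideal_pairs, observed_pairs):
    """Return (person_a, person_b, possible_introducers) for each missing ideal link.

    Pairs are treated as undirected and are assumed to join two different people.
    """
    observed = {frozenset(pair) for pair in observed_pairs}

    # Build each person's set of observed contacts.
    contacts = defaultdict(set)
    for pair in observed:
        a, b = tuple(pair)
        contacts[a].add(b)
        contacts[b].add(a)

    suggestions = []
    for a, b in sorted({tuple(sorted(pair)) for pair in ideal_pairs}):
        if frozenset((a, b)) in observed:
            continue  # this link already exists in practice
        mutual = sorted(contacts[a] & contacts[b])
        suggestions.append((a, b, mutual))
    return suggestions


ideal = [("ana", "raj"), ("ana", "li")]
observed = [("ana", "li"), ("ana", "mo"), ("raj", "mo")]
print(suggest_introductions(ideal, observed))  # [('ana', 'raj', ['mo'])]
```

An empty list of introducers would mean that nobody currently bridges the two people, which is the harder case to repair.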

Unlike augmented reality, which layers information on top of video or your field of view to provide extra information about the world, augmented social reality is about systems that change reality to meet the social needs of a group.

For instance, what if office coffee machines moved around according to the social context? When a coffee-pouring robot appeared as a gag in a TV commercial two years ago, I thought seriously about the uses of a coffee machine with wheels. By positioning the coffee robot in between two groups, for example, we could increase the likelihood that certain coworkers would bump into each other. Once we detected, using smart badges or some other sensor, that the right conversations were occurring between the right people, the robot could move on to another location. Vending machines, bowls of snacks: all could migrate their way around the office on the basis of social data. One demonstration of these ideas came from a team at Plymouth University in the United Kingdom. In their “Slothbots” project, slow-moving robotic walls subtly change their position over time to alter the flow of people in a public space, constantly tuning their movement in response to people’s behavior.

The large amount of behavioral data that we can collect by digital means is starting to converge with technologies for shaping the world in response. Will we notify people when their environment is being subtly transformed? Is it even ethical to use data-driven techniques to persuade and influence people this way? These questions remain unanswered as technology leads us toward this augmented world.

Ben Waber is cofounder and CEO of Sociometric Solutions and the author of People Analytics: How Social Sensing Technology Will Transform Business, published by FT Press.

Personal comment:

Following my previous posts about data, monitoring or data centers: or when your "ashtray" will come close to you and your interlocutor, at the "right place", after having suggested to "meet" him...

Besides this trivial (as well as uninteresting and boring) functional example, there are undoubtedly tremendous implications and stakes around the fact that we might come to algorithmically negotiated social interactions. In fact, we might increase these types of interactions, including physically, as we are already into algorithmic social interactions.

Which rules and algorithms, to do what? Again, we come back to the point when architects will have to start to design algorithms and implement them in close collaboration with developers.

Friday, March 23. 2012

Via Pasta&Vinegar

-----

- reaDIYmate: Wi-Fi paper companions

"reaDIYmates are fun Wi-fi paper companions that move and play sounds depending on what's happening in your digital life. Assemble them in 10 minutes with no tools or glue, then choose what you want them to do through a simple web interface. Link them to your digital life (Gmail, Facebook, Twitter, Foursquare, RSS feeds, SoundCloud, If This Then That, and more to come) or control them remotely in real time from your iPhone."

Monday, September 05. 2011

A project by French artist Etienne Cliquet: a small and fragile folded architecture (in fact a small origami made out of porous paper) that opens itself under the effect of water. It would be nice to experience it, just as fragile but at a bigger size.

Flottille (detail) from Etienne Cliquet on Vimeo.

Friday, July 29. 2011

Three new experiments highlight the power of optogenetics—a type of genetic engineering that allows scientists to control brain cells with light.

Karl Deisseroth and colleagues at Stanford University used light to trigger and then alleviate social deficits in mice that resemble those seen in autism. Researchers targeted a highly evolved part of the brain called the prefrontal cortex, which is well connected to other brain regions and involved in planning, execution, personality and social behavior. They engineered cells to become either hyperactive or underactive in response to specific wavelengths of light.

According to a report from Stanford:

The experimental mice exhibited no difference from the normal mice in tests of their anxiety levels, their tendency to move around or their curiosity about new objects. But, the team observed, the animals in whose medial prefrontal cortex excitability had been optogenetically stimulated lost virtually all interest in engaging with other mice to whom they were exposed. (The normal mice were much more curious about one another.)

The findings support one of the theories behind the neurodevelopmental deficits of autism and schizophrenia: that in these disorders, the brain is wired in a way that makes it hyperactive, or overly susceptible to overstimulation. That may explain why many autistic children are very sensitive to loud noises or other environmental stimuli.

"Boosting their excitatory nerve cells largely abolished their social behavior," said Deisseroth, [associate professor of psychiatry and behavioral sciences and of bioengineering and the study's senior author]. In addition, these mice's brains showed the same gamma-oscillation pattern that is observed among many autistic and schizophrenic patients. "When you raise the firing likelihood of excitatory cells in the medial prefrontal cortex, you see an increased gamma oscillation right away, just as one would predict it would if this change in the excitatory/inhibitory balance were in fact relevant."

In a second study, from Japan, researchers used optogenetics to make mice fall asleep by engineering a specific type of neuron in the hypothalamus, part of the brain that regulates sleep. Shining light on these neurons inhibited their activity, sending the mice into dreamless (or non-REM) sleep. The research, published this month in the Journal of Neuroscience, might shed light on narcolepsy, a disorder of sudden sleep attacks.

Rather than making mice fall asleep, a third group of researchers used optogenetics to disrupt sleep in mice, which in turn affected their memory. Previous research has shown that sleep is important for consolidating, or storing, memories, and that diseases characterized by sleep deficits, such as sleep apnea, often have memory deficits as well. But it has been difficult to analyze the effect of more subtle disruptions to sleep.

The new study shows that "regardless of the total amount of sleep, a minimal unit of uninterrupted sleep is crucial for memory consolidation," the authors write in the study published online July 25 in the Proceedings of the National Academy of Sciences.

They genetically engineered a group of neurons involved in switching between sleep and wake to be sensitive to light. Stimulating these cells with 10-second bursts of light fragmented the animals' sleep without affecting total sleep time or quality and composition of sleep.

According to a press release from Stanford:

After manipulating the mice's sleep, the researchers had the animals undergo a task during which they were placed in a box with two objects: one to which they had previously been exposed, and another that was new to them. Rodents' natural tendency is to explore novel objects, so if they spent more time with the new object, it would indicate that they remembered the other, now familiar object. In this case, the researchers found that the mice with fragmented sleep didn't explore the novel object longer than the familiar one — as the control mice did — showing that their memory was affected.

The findings "point to a specific characteristic of sleep, continuity, as being critical for memory," said [H. Craig Heller, professor of biology at Stanford and one of the authors of the study].

Friday, February 05. 2010

London-based Steven Chilton of Marks Barfield Architects, the designers of the London Eye, has sent us fascinating images of Villa Hush Hush, an innovative high end residence they are currently developing. The project has been privately commissioned and currently has planning permission.

Images + project description after the jump.

Villa Hush-Hush - by Steven Chilton of Marks Barfield Architects, designers of the London Eye

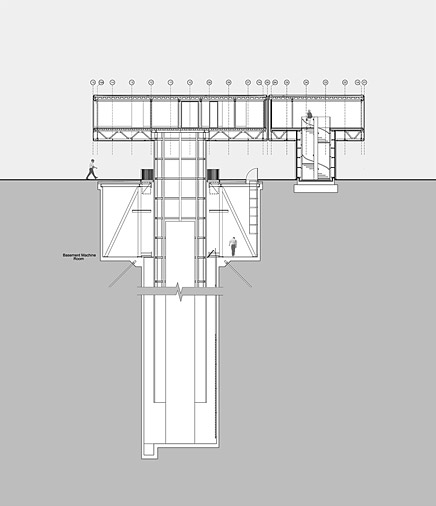

Specifically created for sensitive sites and affording sensational views, Villa Hush-Hush is designed as a spectacular new home concept that can disappear into a landscape, but at the touch of a button be lifted above the treetops to provide wonderful panoramic views.

Project Architect and designer Steven Chilton said: “The inspiration comes from a fascinating brief to create an individually crafted and beautiful home. The design derives from a simple cubic composition inspired by the work of Donald Judd. Unique in design and function, and unlike any other home in the world, it will offer a memorable, moving, living experience.”

In plan, the villa is divided into four clearly defined zones, of which it is possible to elevate two, depending on the internal arrangement and client’s requirements. The clean simplicity of the forms concentrates the relationship between the villa, the viewer and its environment.

Externally the moving element transforms the villa into a kinetic sculpture creating a unique spectacle with an assured quality. Inside, bespoke designs by interior specialists Candy & Candy will frame spectacular views that would slowly be unveiled as part of the villa rises up above the surroundings bringing the horizon into view, creating a unique, memorable, and heightened feeling.

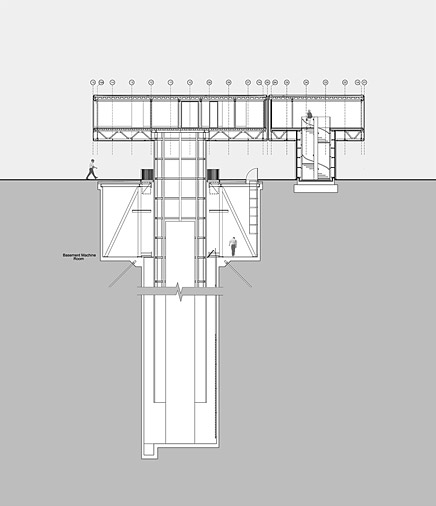

Engineers Atelier One designed the lifting mechanism which pushes a support column up out of the ground raising the moving element of the villa from its lowered position to the required height. The lifting mechanism has been designed such that the lifting, at around 10cm per second, is gentle and steady. The moving element of the villa can be lowered more quickly as it is easier to drop the structure than lift it. This means it would take about five minutes to reach its full height above ground and about three minutes to descend.

The support column and the moving element of the villa are balanced by 260 tonnes of steel plate acting as a counter-weight suspended in a cradle and guided within a central inner steel tube structure, and are driven by eight 22kW drive motors, equivalent in total to an energy efficient family sized vehicle. Redundancy is designed into the system of motors and gearboxes such that in the event of failure of a single drive unit the mechanism will function as normal, with no reduction in performance.

Working with dynamic specialists Motioneering, dampers have been designed into the structure to limit the dynamic response of the structure at various heights and wind speeds to ensure the highest levels of comfort.

To maximise the economic efficiency of the structure, it is restricted from being elevated during very high winds. The high wind limit for this is around Beaufort Scale 7, which is described as when whole trees are in motion and effort is needed to walk against the wind. In these cases the moving element of the villa would remain comfortably in its lowered position.

About Steven Chilton, Associate Director MBA

Born in London in 1971, Steven first made his mark on the environment as a graffiti artist prior to pursuing a career in architecture. After studying at Manchester University where he received a Distinction in design, he joined MBA in 1997 and spent the first three years working on the design and construction of the London Eye.

Since then he has designed the award-winning Millbank Millennium Pier outside Tate Britain, collaborating with acclaimed conceptual artist Angela Bulloch, and recently created the competition-winning sculpture to mark the entry point into Wales, called the Red Cloud.

-----

Via Archinect
