Wednesday, March 12. 2025

Summoning the Ghosts of Modernity at MAMM (Medellin) | #exhibition #digital #algorithmic #matter #nonmatter

Note: fabric | ch is part of the exhibition Summoning the Ghosts of Modernity at the Museo de Arte Moderno de Medellin (MAMM), in Colombia.

The show constitutes a continuation of Beyond Matter, which took place at the ZKM in 2022/23, and is curated by Lívia Nolasco-Rózsás and Esteban Guttiérez Jiménez.

The exhibition will be open between the 13th of March and 15th of June 2025.

-----

By fabric | ch

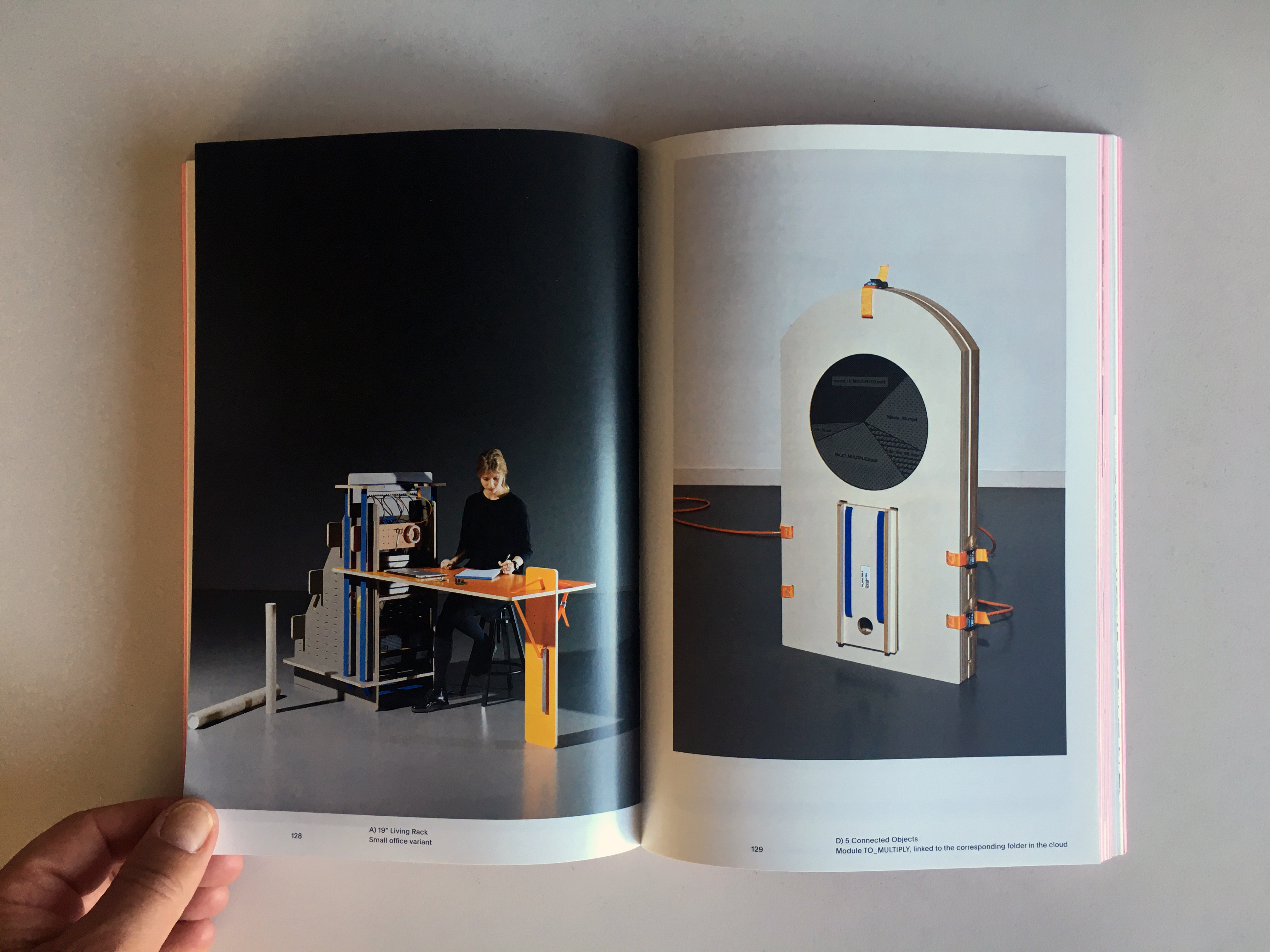

Atomized (re-)Staging (2022), by fabric | ch. Exhibited during Summoning the Ghosts of Modernity at the Museo de Arte Moderno de Medellin (MAMM). March 13 to June 15, 2025.

More pictures of the exhibition on Pixelfed.

Tuesday, February 27. 2024

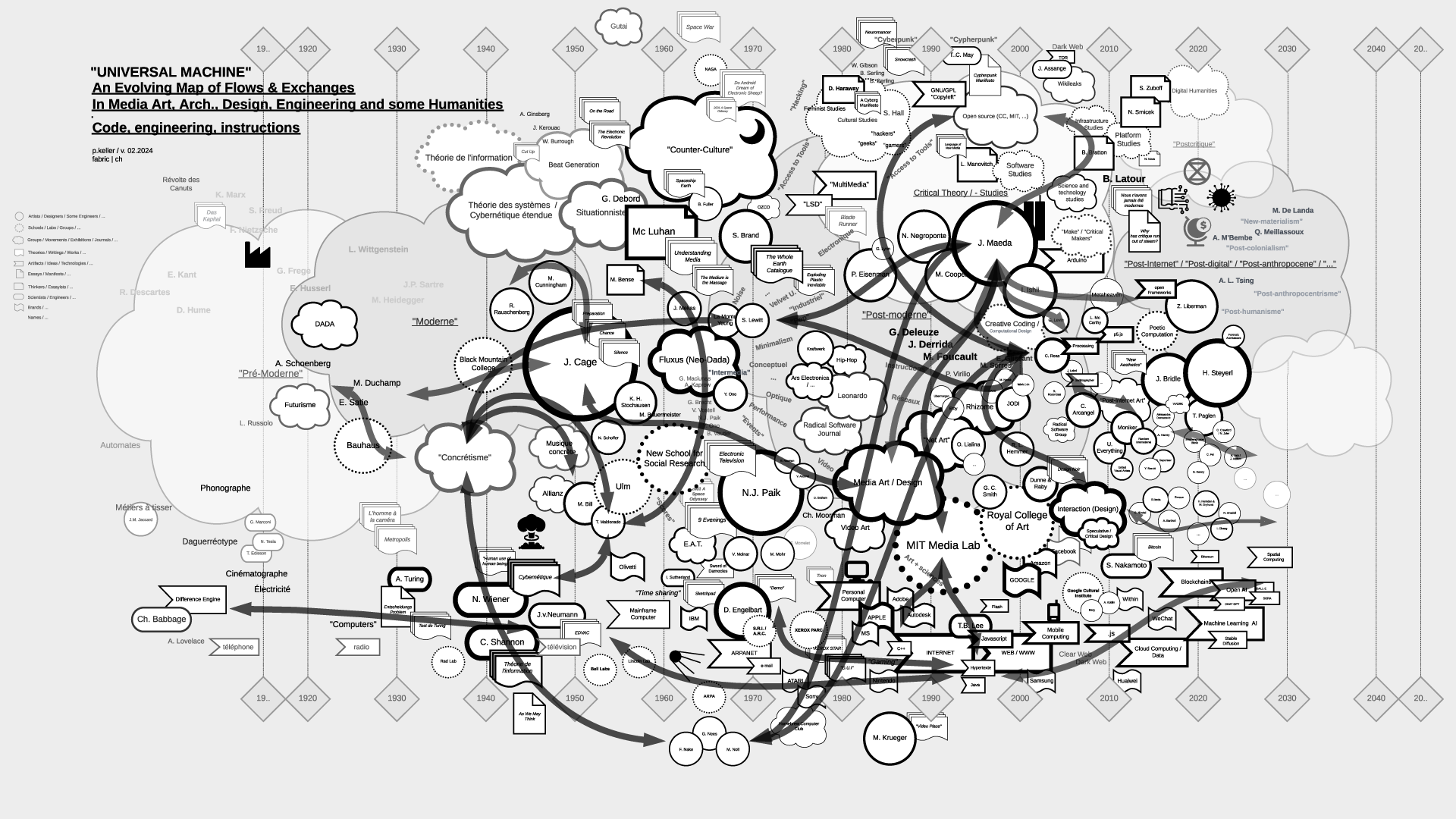

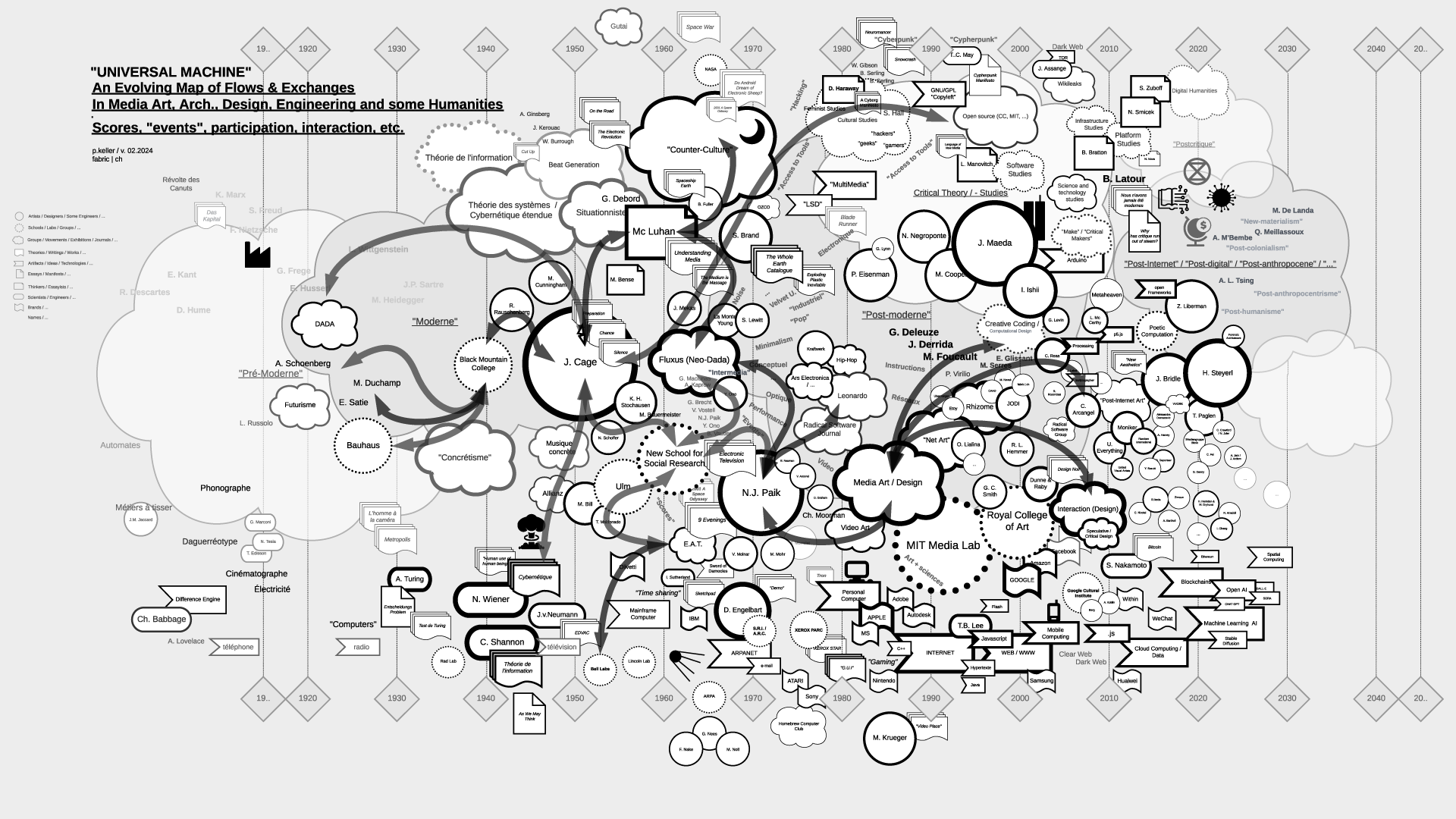

"Universal Machine": historical graphs on the relations and fluxes between art, architecture, design, and technology (19.. - 20..) | #art&sciences #history #graphs

Note (03.2024): The contents of the files (maps) have been updated as of 02.2024.

-

Note (07.2021): As part of my teaching at ECAL / University of Art and Design Lausanne (HES-SO), I've delved into the historical ties between art and science. This ongoing exploration focuses on the connection between creative processes in art, architecture, and design, and the information sciences, particularly the computer, also known as the "Universal Machine" as coined by A. Turing. This informs the title of the graphs below and this post.

Through my work at fabric | ch, and previously as an assistant at EPFL followed by a professorship at ECAL, I've had the opportunity to experience firsthand some of these massive transformations in society and culture.

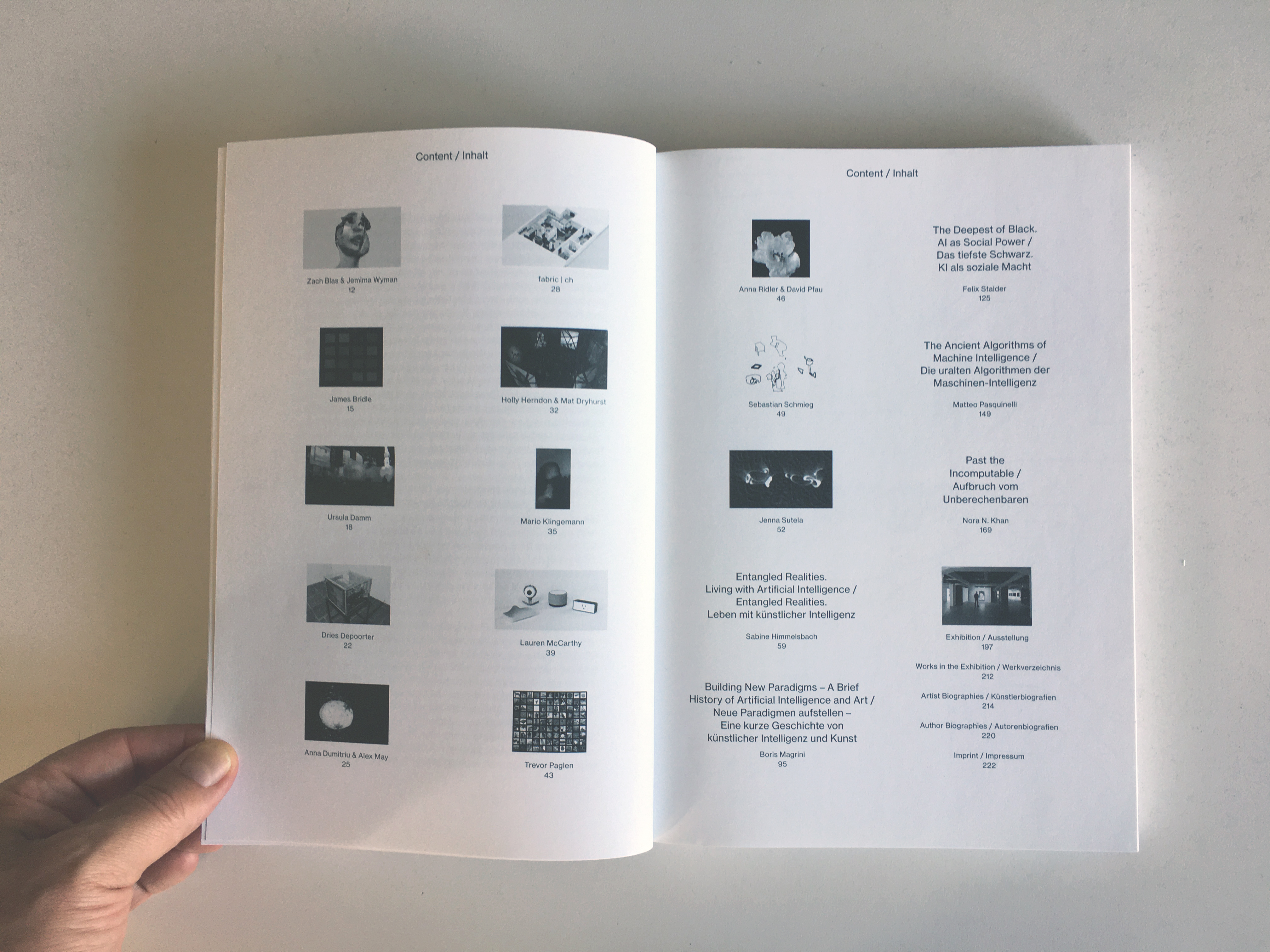

Thus, in my theory courses, I've aimed to create "maps" that aid in comprehending, visualizing, and elucidating the flux and timelines of interactions among individuals, artifacts, and disciplines. These maps, imperfect and constrained by size, are continuously evolving and open to interpretation beyond my own. I regularly update them as part of the process.

Yet, in the absence of a comprehensive written, visual, or sensitive history of these techno-cultural phenomena as a whole, these maps serve as valuable approximation tools for grasping the flows and exchanges that either unite or divide them. They offer a starting point for constructing personal knowledge and delving deeper into these subjects.

This is precisely why, despite their inherent fuzziness - or perhaps because of it - I choose to share them on this blog (fabric | rblg), in an informal manner. It's an invitation for other artists, designers, researchers, teachers, students, and so forth, to begin building upon them, to depict different flows, to develop pre-existing or subsequent ideas, or even more intriguingly, to diverge from them. If such advancements occur, I'm keen on featuring them on this platform. Feel free to reach out for suggestions, comments, or to share new developments.

...

It's worth mentioning that the maps are structured horizontally along a linear timeline, spanning from the late 18th century to the mid-21st century, predominantly focusing on the industrial period. Vertically, they are organized around disciplines, with the bottom representing engineering, the middle encompassing art and design, and the top relating to humanities, social events, or movements.

Certainly, one might question this linear timeline, echoing the sentiments of writer B. Latour. What about considering a spiral timeline, for instance? Such a representation would still depict both the past and the future, while also illustrating the historical proximities of topics, connecting past centuries and subjects with our contemporary context in a circular manner. However, for the time being, and while recognizing its limitations, I adhere to the simplicity of the linear approach.

Countless narratives can emerge from the graphs as inherent properties, which underscores that the narratives are products of the graphs rather than their origins.

...

The selection of topics (code, scores-instructions, countercultural, network-related, interaction, "post-...") currently aligns with the themes of my teaching but is subject to expansion, possibly toward an underlying layer revealing the material conditions that underpinned and facilitated the entire process.

In any case, this could serve as a fruitful starting point for some further readings or perhaps a new "Where's Waldo/Wally" kind of game!

Via fabric | ch

-----

By Patrick Keller

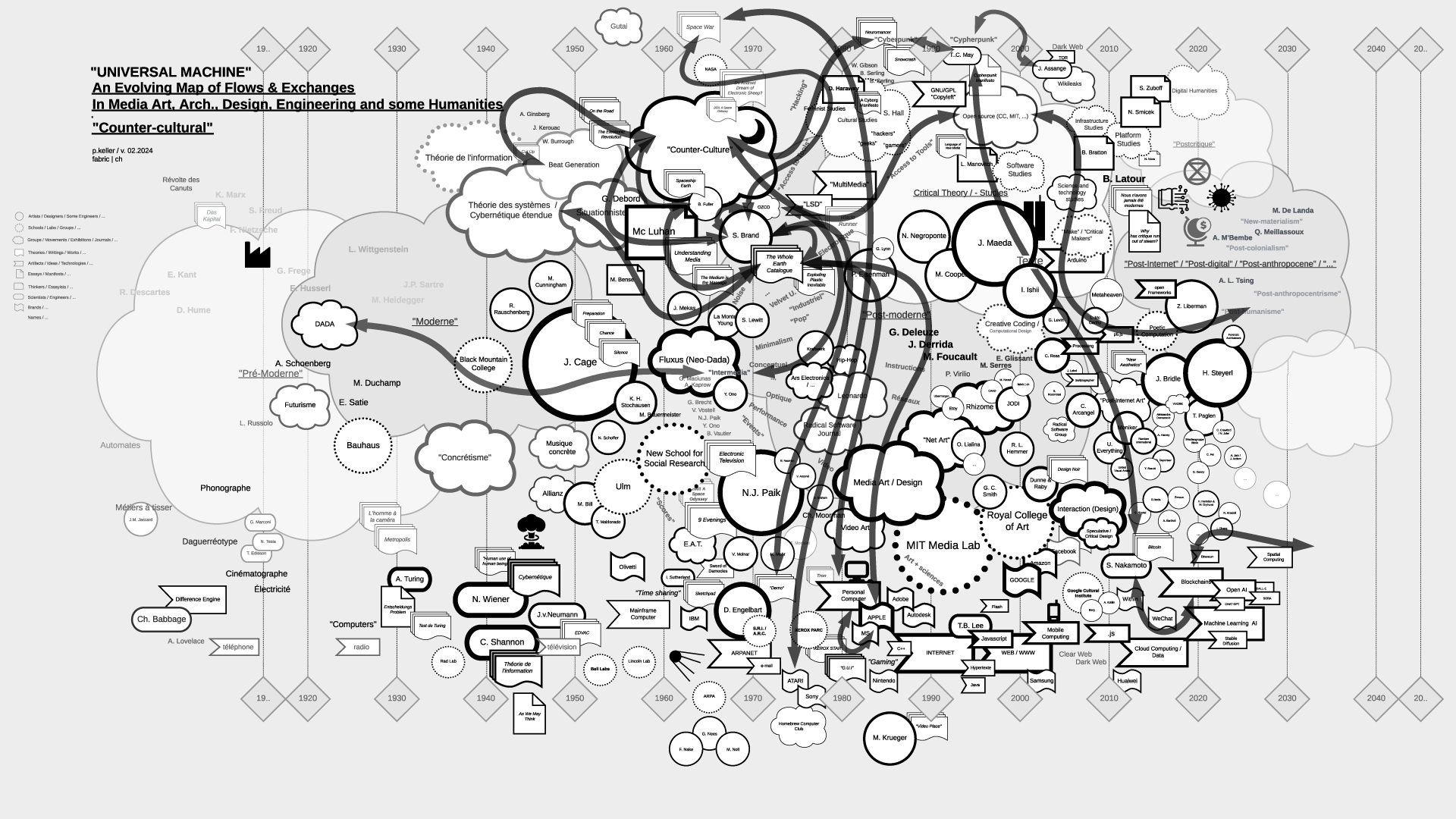

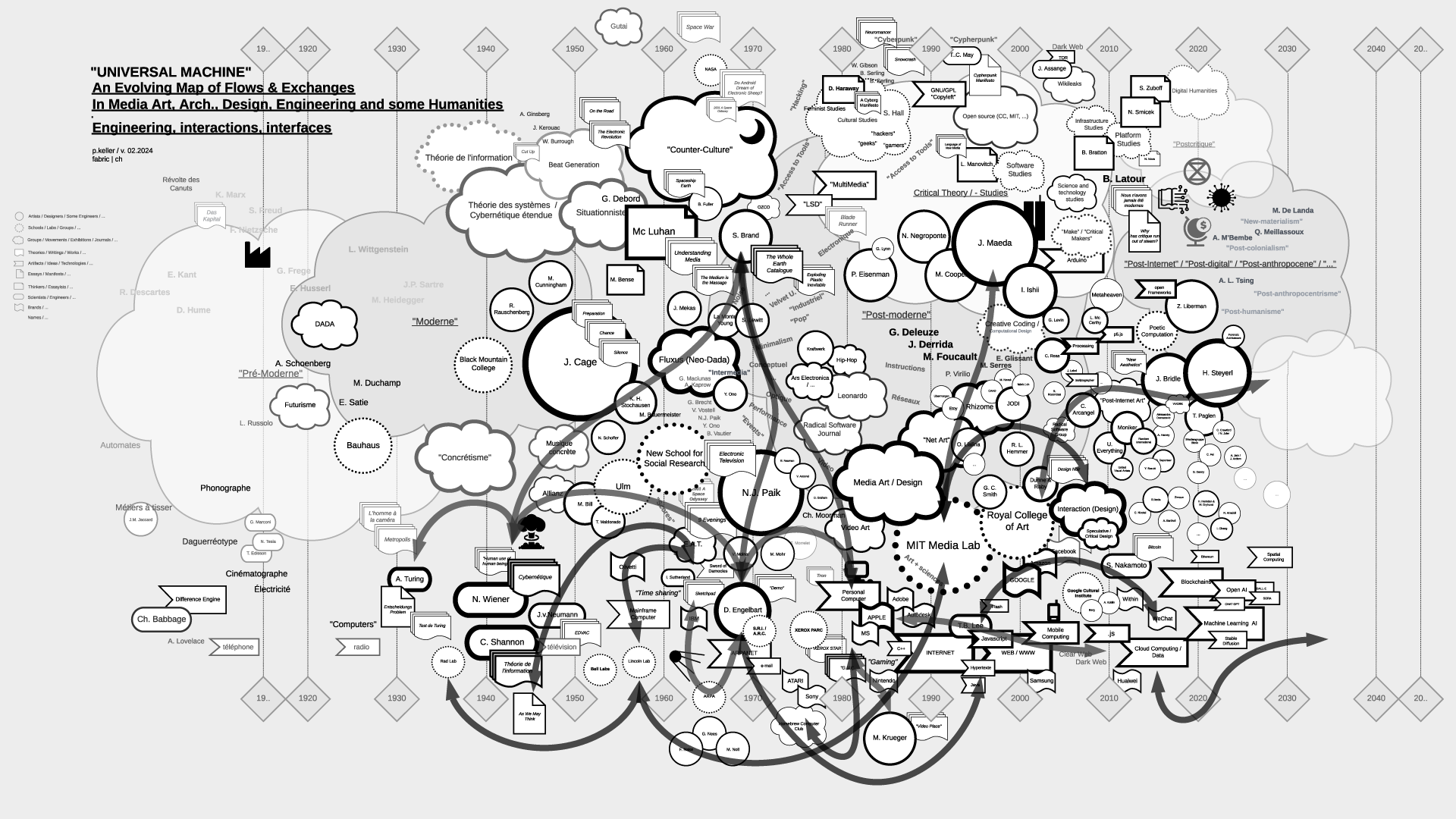

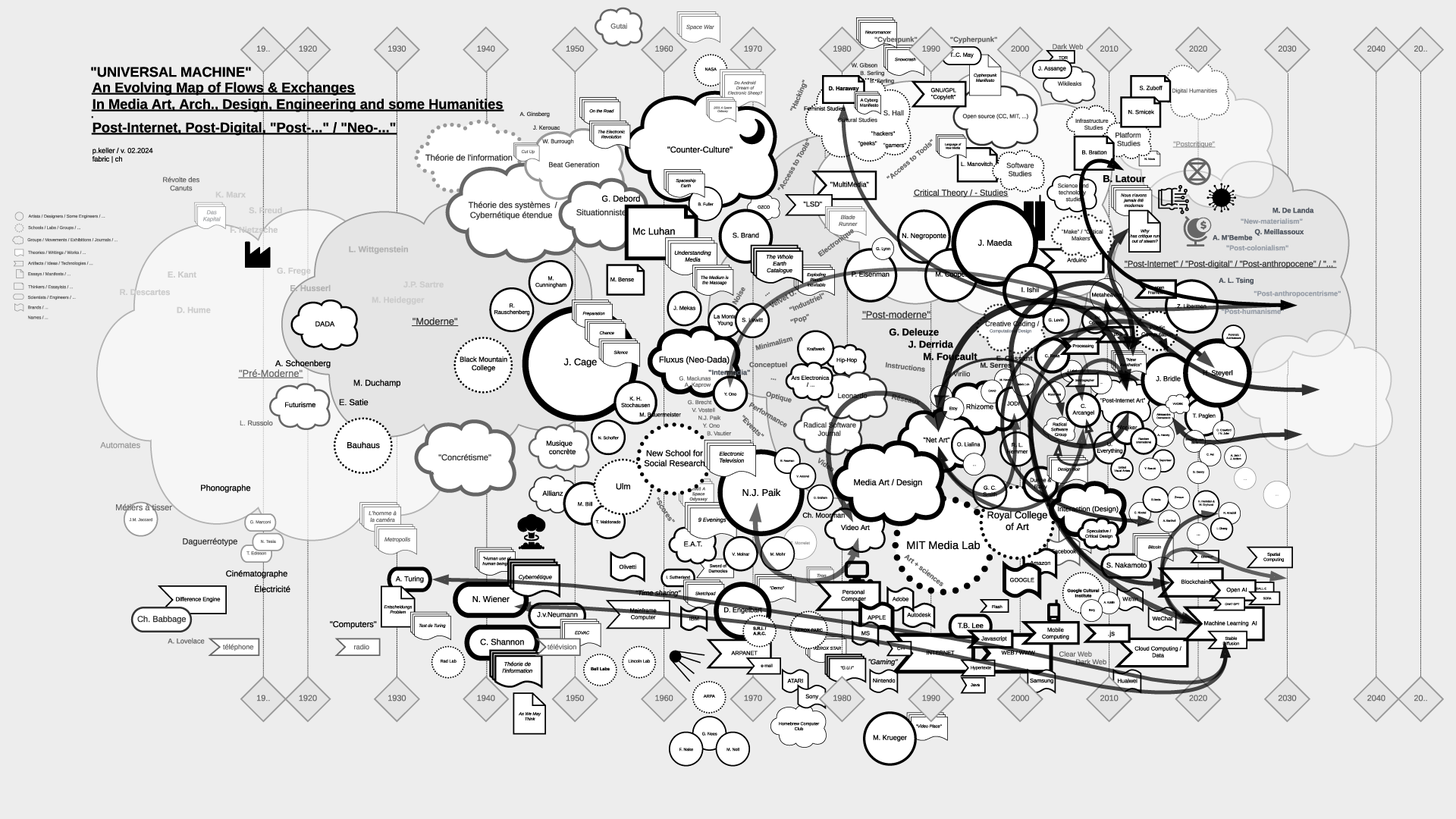

Rem.: By clicking on the thumbnails below you'll get access to HD versions.

"Universal Machine", main map (late 18th to mid 21st centuries):

Flows in the map > "Code":

Flows in the map > "Scores, Partitions, ...":

Flows in the map > "Countercultural, Subcultural, ...":

Flows in the map > "Network Related":

Flows in the map > "Interaction":

Flows in the map > "Post-Internet/Digital", "Post-...", "Neo-...", ML/AI:

...

To be continued (& completed) ...

Monday, October 02. 2023

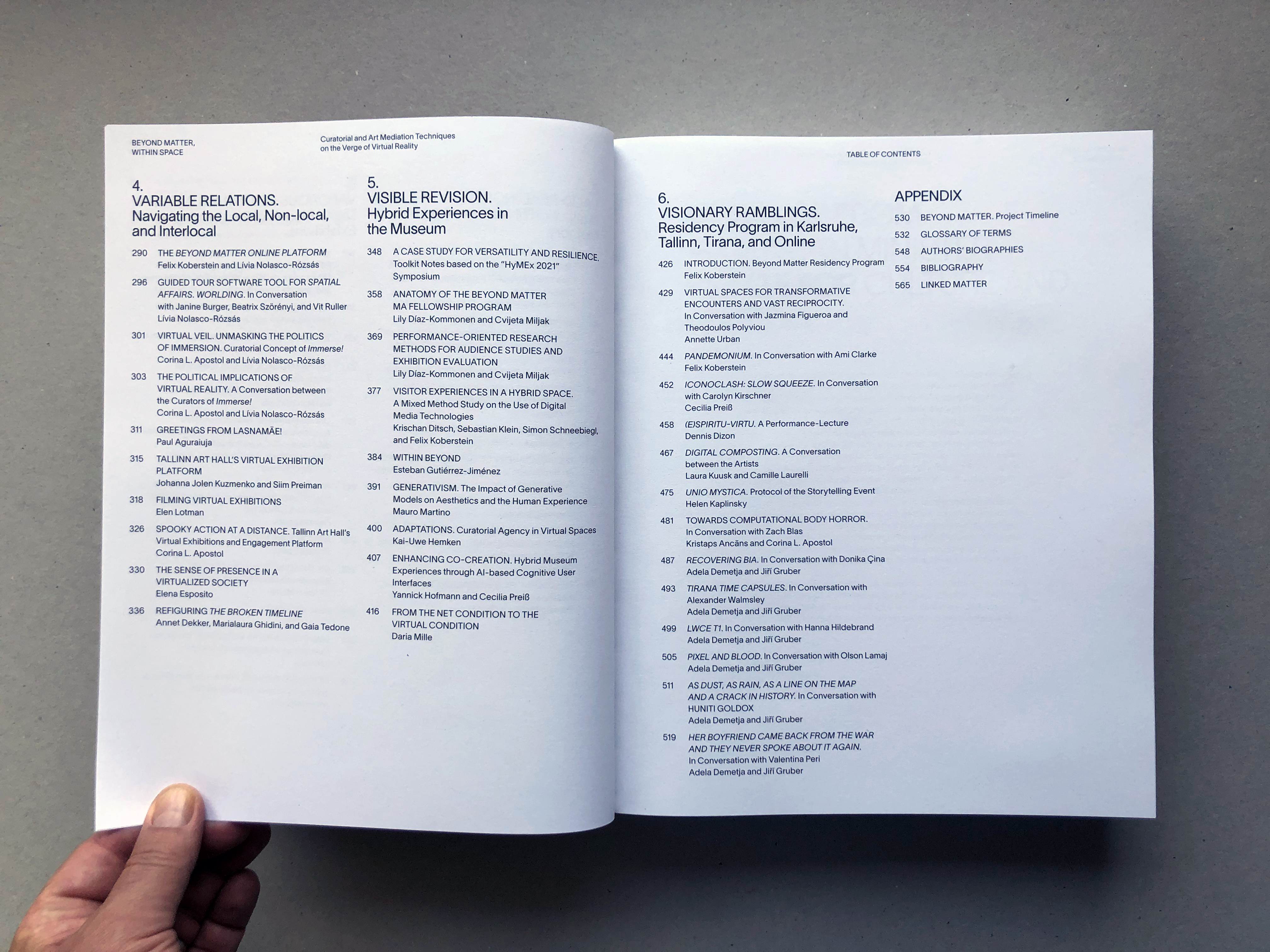

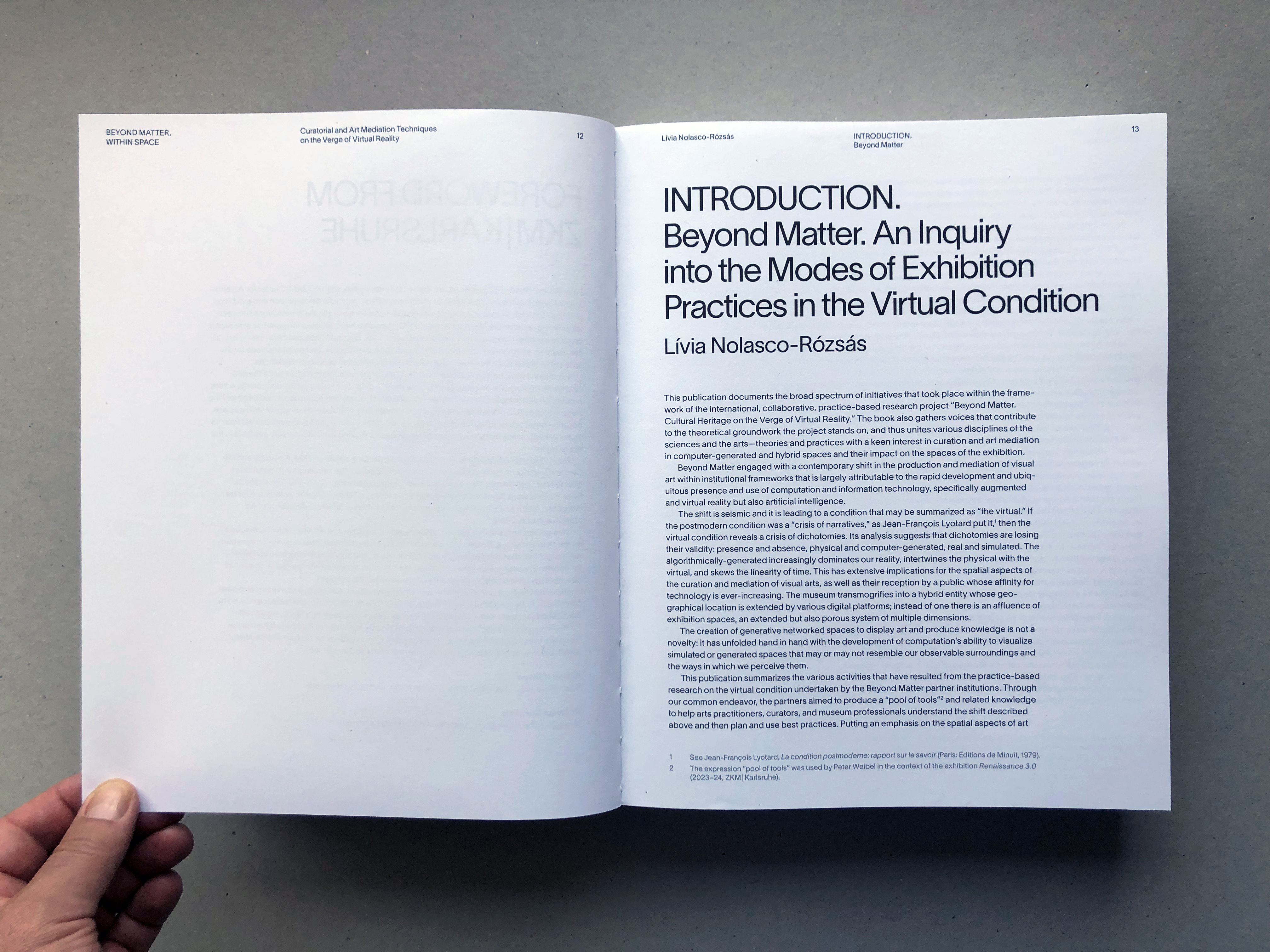

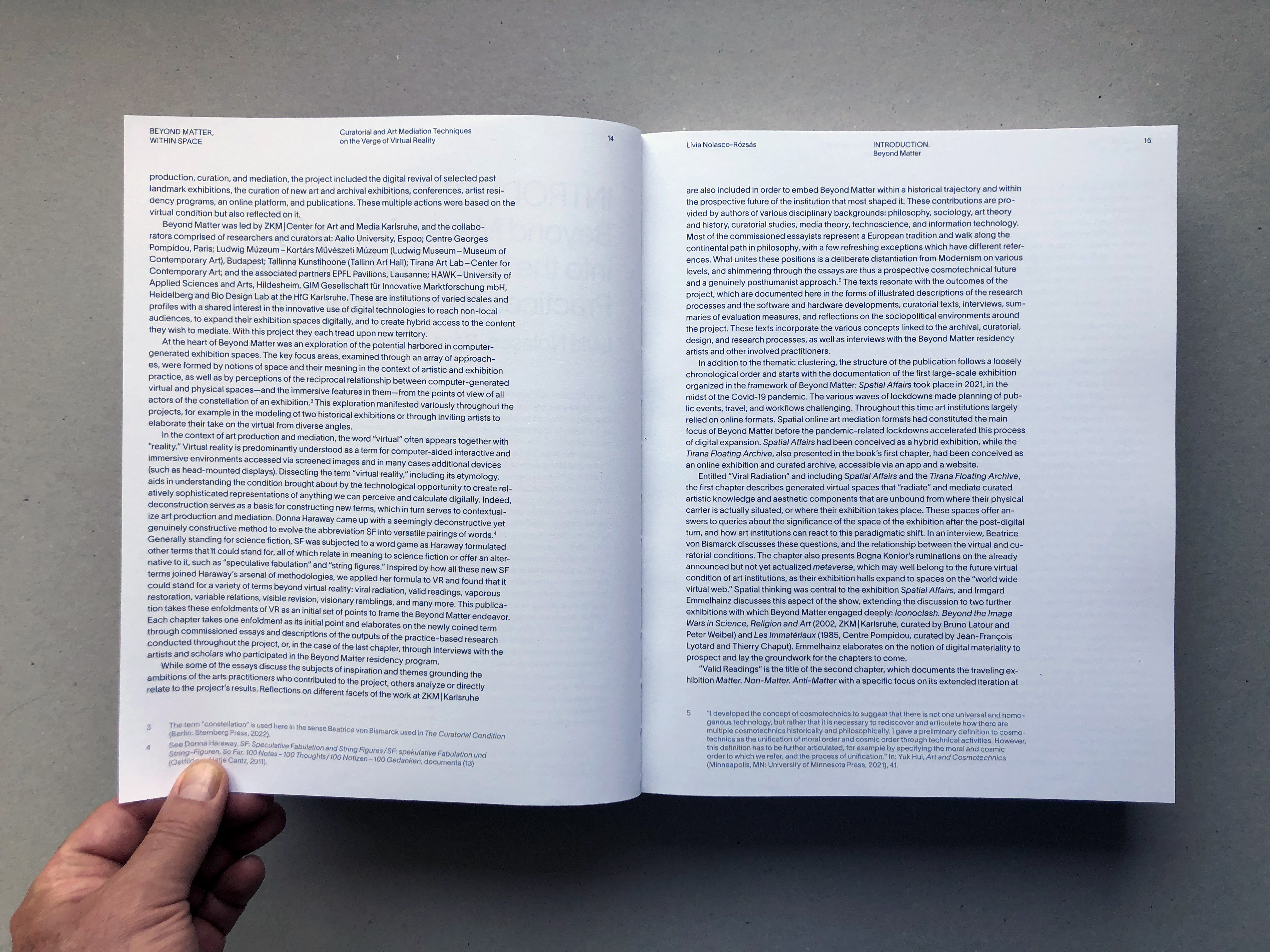

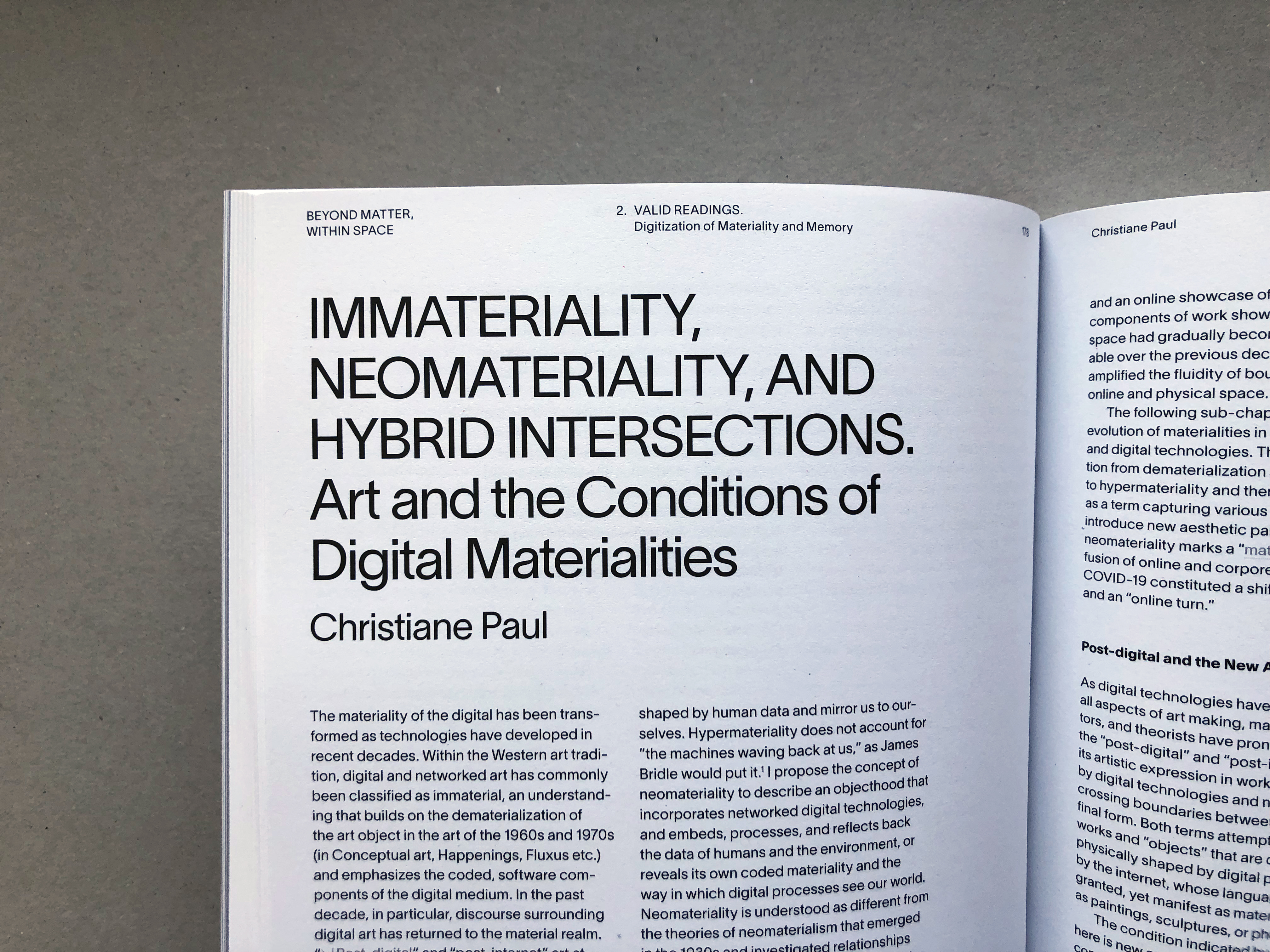

Beyond Matter – Within Space, ZKM exhibition catalogue (eds. L. Nolasco-Rószás & M. Schädler), Hatje Cantz (Berlin, 2023) | #AI #AR #VR #XR #art #digital #exhibition #curation

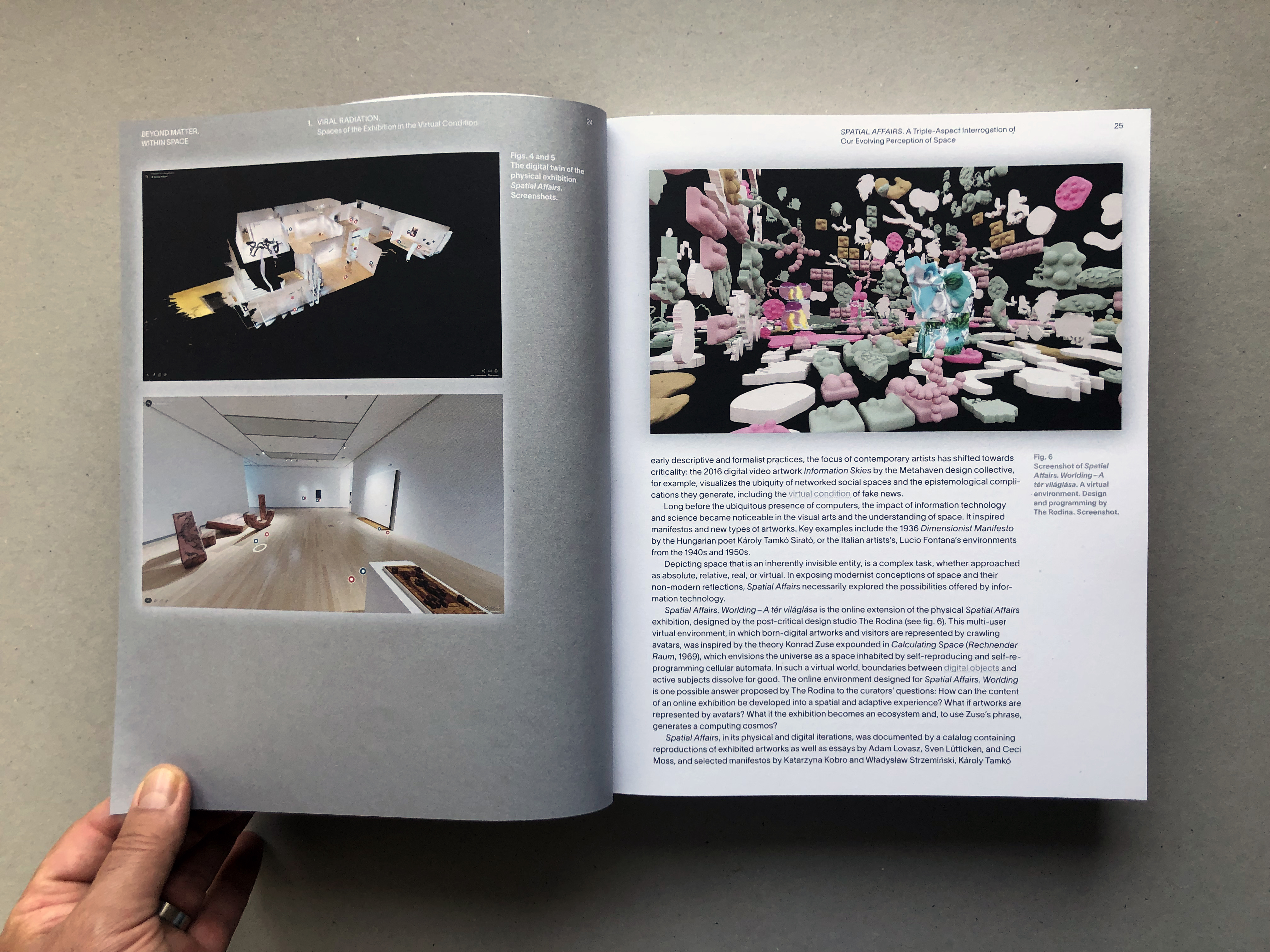

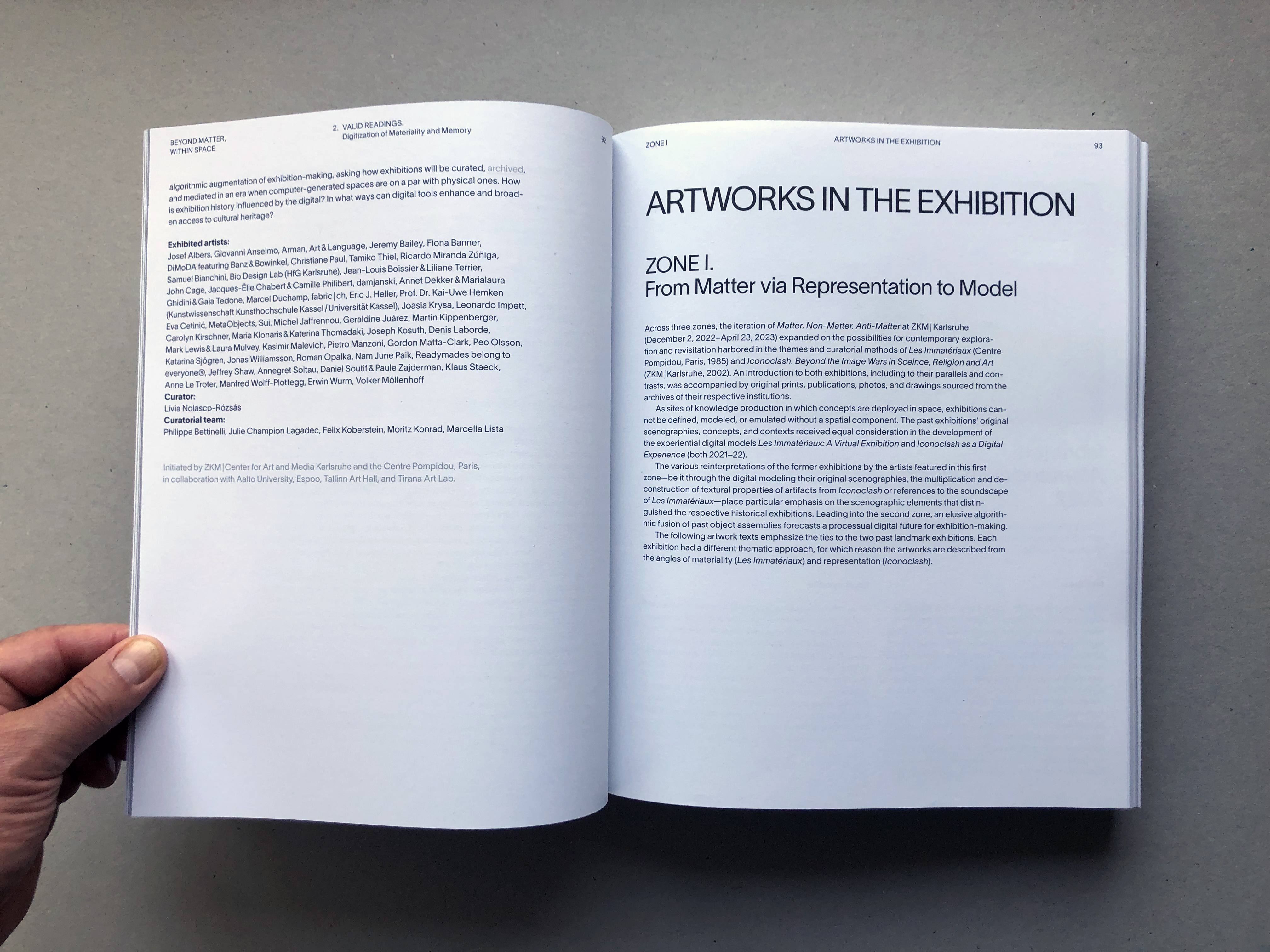

Note: this well-documented publication, complete with essays, also serves as a comprehensive exhibition catalogue. It was released to coincide with the end of the European research project Beyond Matter, led by the ZKM (Zentrum für Kunst und Medien, Karlsruhe) and the Centre Georges Pompidou in Paris. The publication accompanied two exhibitions held in 2023, at the ZKM and at the Centre Pompidou, on the research objectives.

Beyond Matter – Within Space is certainly destined to become a central work on the issue of digital art exhibition in our time.

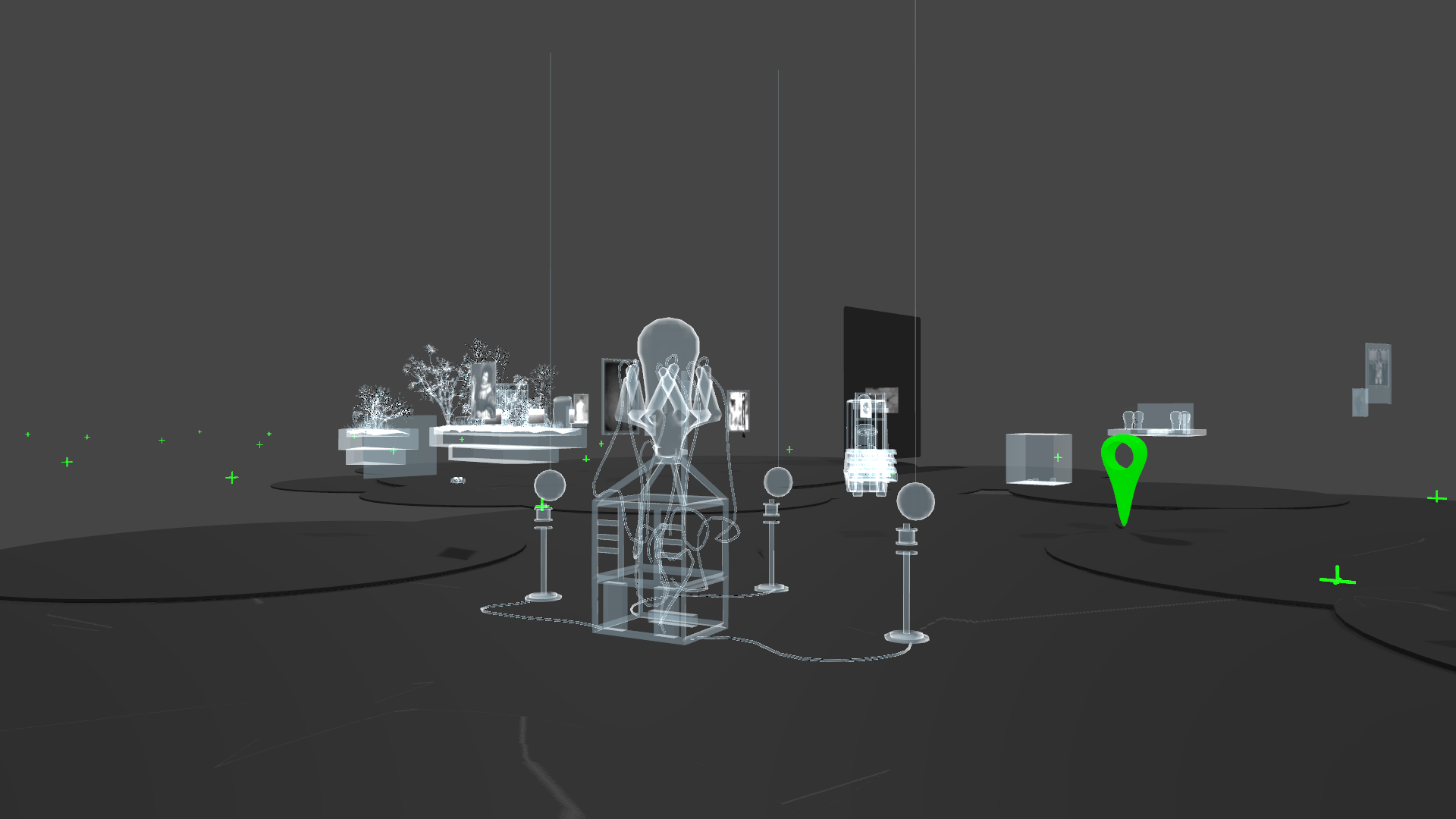

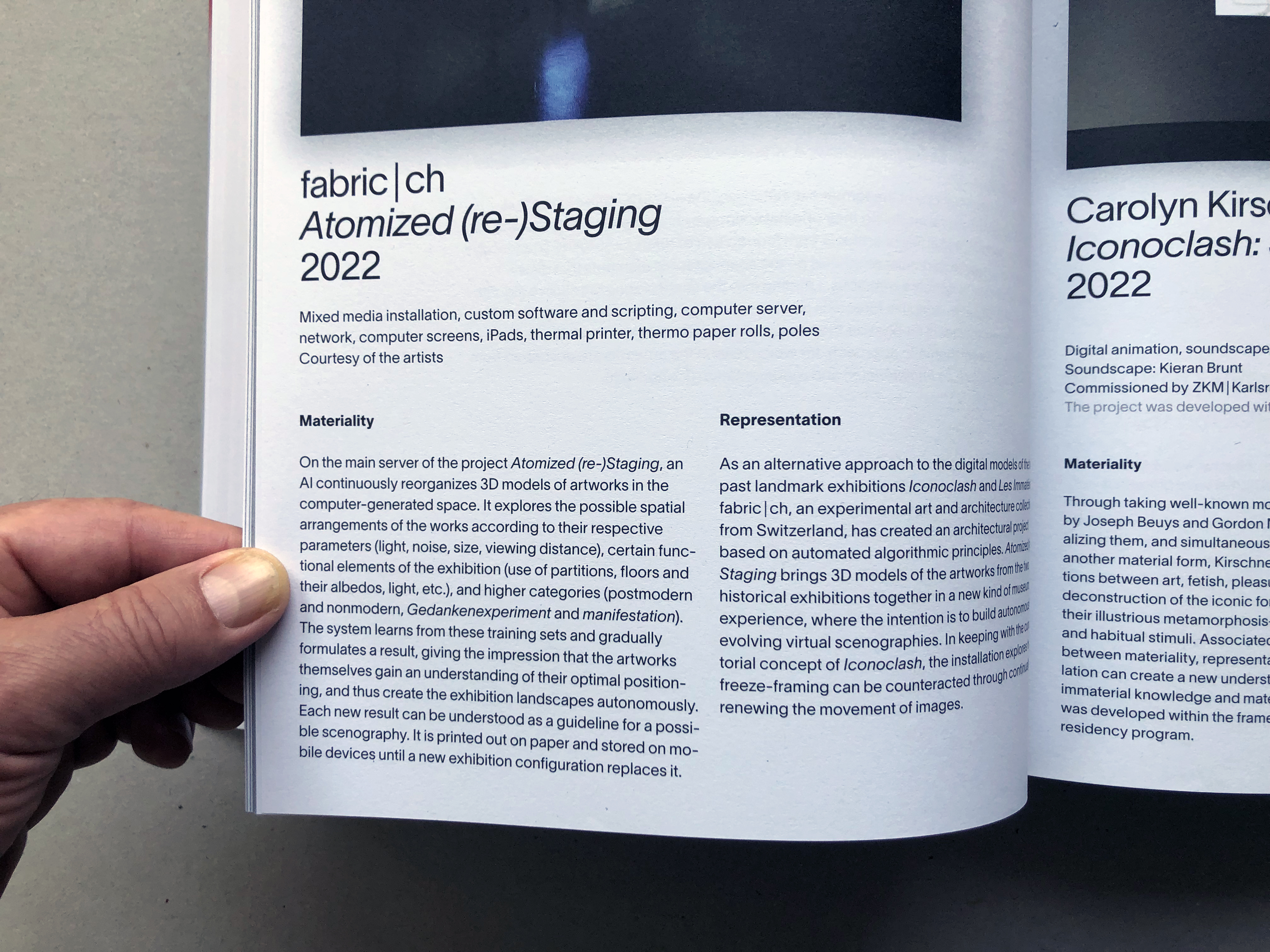

In this context, fabric | ch – studio for architecture, interaction & research had the chance to be involved in the exhibition at the ZKM and to work with around 200 digitized artworks (of historical significance) provided by the research team. The "experimental architecture" project that fabric | ch created on this occasion, a new work, was entitled Atomized (re-)Staging.

-----

By Patrick Keller

Wednesday, November 30. 2022

Past exhibitions as Digital Experiences @ZKM, fabric | ch | #digital #exhibition #experimentation

Note:

The exhibition related to the project and European research Beyond Matter - Past Exhibitions as Digital Experiences will open next week at ZKM, with the digital versions (or should I rather say "versioning"?) of two past and renowned exhibitions: Iconoclash, at ZKM in 2002 (with Bruno Latour among the multiple curators) and Les Immatériaux, at Beaubourg in 1985 (in this case with Jean-François Lyotard, not so long after the release of his Postmodern Condition). An unusual combination from two different times and perspectives.

The title of the exhibition will be Matter. Non-Matter. Anti-Matter, with an amazing contemporary and historic lineup of works and artists, as well as documentation material from both past shows.

Working with digitized variants of iconic artworks from these past exhibitions (digitization work under the supervision of Matthias Heckel), fabric | ch has been invited by Lívia Nolasco-Rózsás, ZKM curator and head of the research, to present its own digital take on these two historic shows in the form of a combination of both, using the digital models produced and made available by the research team.

The result, a new fabric | ch project entitled Atomized (re-)Staging, will be presented at the ZKM in Karlsruhe from this Saturday on (03.12.2022 - 23.04.2023).

Via ZKM

-----

Opening: Matter. Non-Matter. Anti-Matter.

© ZKM | Center for Art and Media Karlsruhe, Visual: AKU Collective / Mirjam Reili

Past exhibitions as Digital Experiences

Fri, December 02, 2022 7 pm CET, Opening

---

Free entry

---

When past exhibitions come to life digitally, the past becomes a virtual experience. What this novel experience can look like in concrete terms is shown by the exhibition »Matter. Non-Matter. Anti-Matter«.

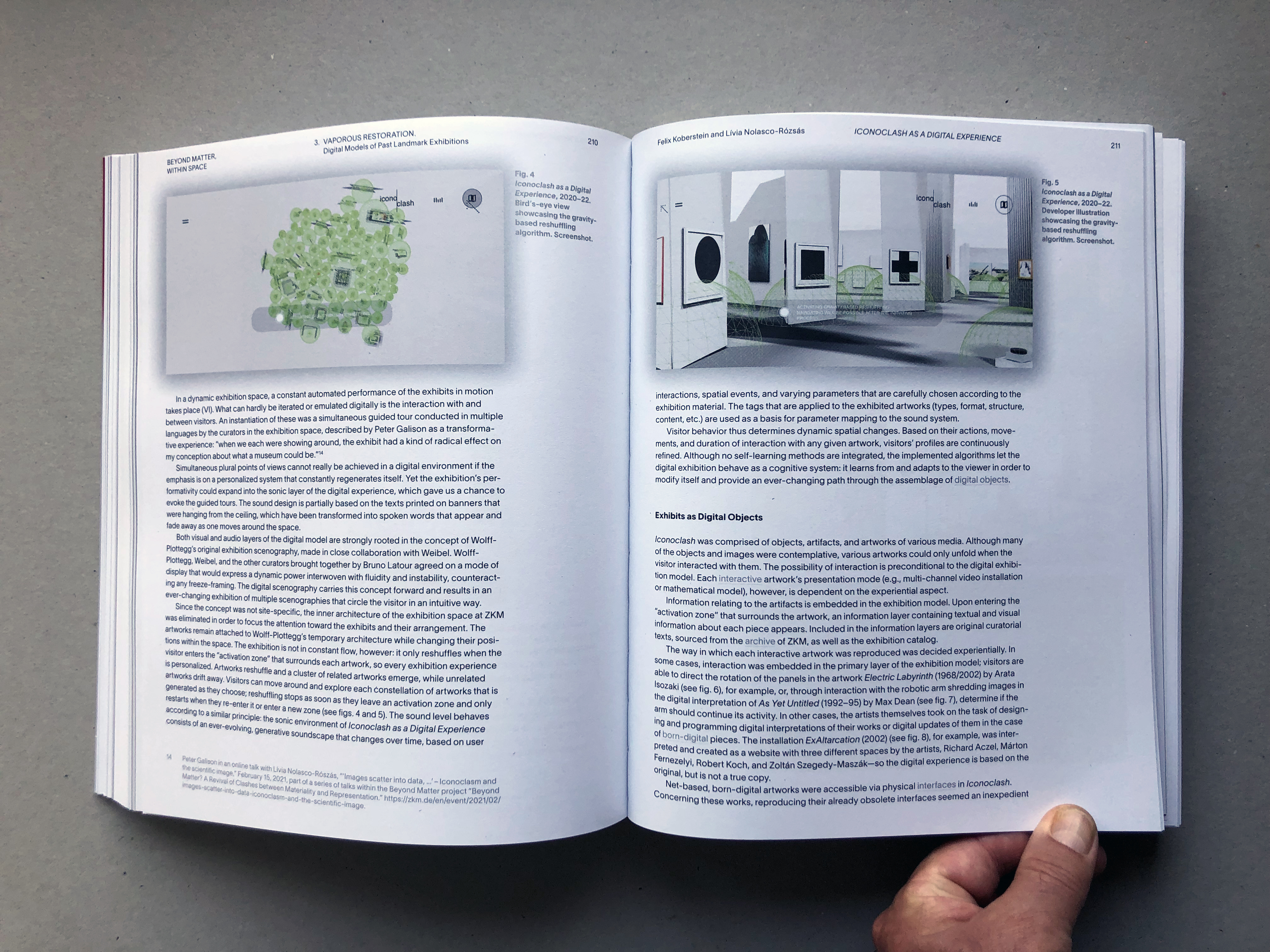

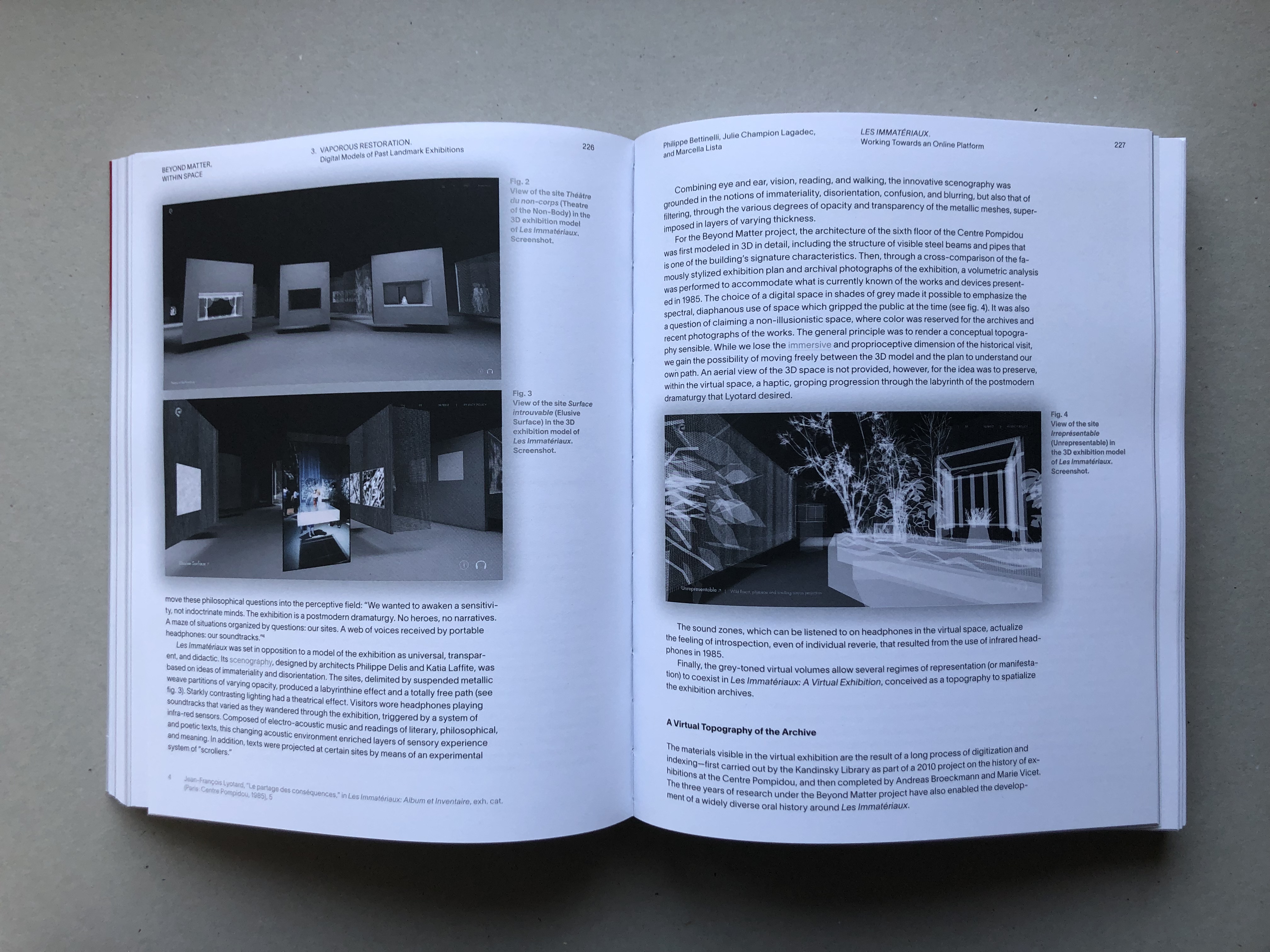

As part of the project »Beyond Matter. Cultural Heritage on the Verge of Virtual Reality«, the ZKM | Karlsruhe and the Centre Pompidou, Paris, use the case studies »Les Immatériaux« (Centre Pompidou, 1985) and »Iconoclash« (ZKM | Karlsruhe, 2002) to investigate the possibility of reviving exhibitions through experiential methods of digital and spatial modeling.

The digital model as an interactive presentation of exhibition concepts is a novel approach to exploring exhibition history, curatorial methods, and representation and mediation. The goal is not to create »digital twins«, that is, virtual copies of past assemblages of artifacts and their surrounding architecture, but to provide an independent sensory experience.

On view will be digital models of past exhibitions, artworks and artifacts from those exhibitions, and accompanying contemporary commentary integrated via augmented reality. The exhibition will be accompanied by a conference on virtualizing exhibition histories.

The exhibition will be accompanied by numerous events, such as specialist workshops, webinars, online and offline guided tours, and a conference.

---

Program

7 – 7:30 p.m. Media Theater

Short lectures by

Sybille Krämer, Professor (emer.) at the Free University of Berlin

Siegfried Zielinski, media theorist with a focus on archaeology and variantology of arts and media, curator, author

Moderation: Lívia Nolasco-Rózsás, curator

7:30 – 8:15 p.m. Media Theater

Welcome

Olga Sismanidi, Representative Creative Europe Program of the European Commission (EACEA)

Arne Braun, State Secretary in the Ministry of Science, Research and the Arts of Baden-Württemberg

Frank Mentrup, Lord Mayor of the City of Karlsruhe

Peter Weibel, Director of the ZKM | Karlsruhe

Xavier Rey, Director of the Centre Pompidou, Paris

8:15 – 8:30 p.m. Improvisation on the piano

Hymn Controversy by Bardo Henning

8:30 p.m.

Curator guided tour of the exhibition

---

The exhibition will be open from 8 to 10 p.m.

The mint Café is also looking forward to your visit.

---

Matter. Non-Matter. Anti-Matter.

Past Exhibitions as Digital Experiences.

Sat, December 03, 2022 – Sun, April 23, 2023

The EU project »Beyond Matter: Cultural Heritage on the Verge of Virtual Reality« researches ways to reexperience past exhibitions using digital and spatial modeling methods. The exhibition »Matter. Non-Matter. Anti-Matter.« presents the current state of the research project at ZKM | Karlsruhe.

At the core of the event is the digital revival of the iconic exhibitions »Les Immatériaux« of the Centre Pompidou Paris in 1985 and »Iconoclash: Beyond the Image Wars in Science, Religion, and Art« of the ZKM | Karlsruhe in 2002.

Based on the case studies of »Les Immatériaux« (Centre Pompidou, 1985) and »Iconoclash: Beyond the Image Wars in Science, Religion, and Art« (ZKM, 2002), ZKM | Karlsruhe and the Centre Pompidou Paris investigate possibilities of reviving exhibitions through experiential methods of digital and spatial modeling. Central to this is also the question of the particular materiality of the digital.

At the heart of the Paris exhibition »Les Immatériaux« in the mid-1980s was the question of what impact new technologies and materials could have on artistic practice. When philosopher Jean-François Lyotard joined as cocurator, the project's focus eventually shifted to exploring the changes in the postmodern world that were driven by a flood of new technologies.

The exhibition »Iconoclash« at ZKM | Karlsruhe focused on the theme of representation and its multiple forms of expression, as well as the social turbulence it generates. As emphasized by curators Bruno Latour and Peter Weibel, the exhibition was not intended to be iconoclastic in its approach, but rather to present a synopsis of scholarly exhibits, documents, and artworks about iconoclasms – a thought experiment that took the form of an exhibition – a so-called »thought exhibition.«

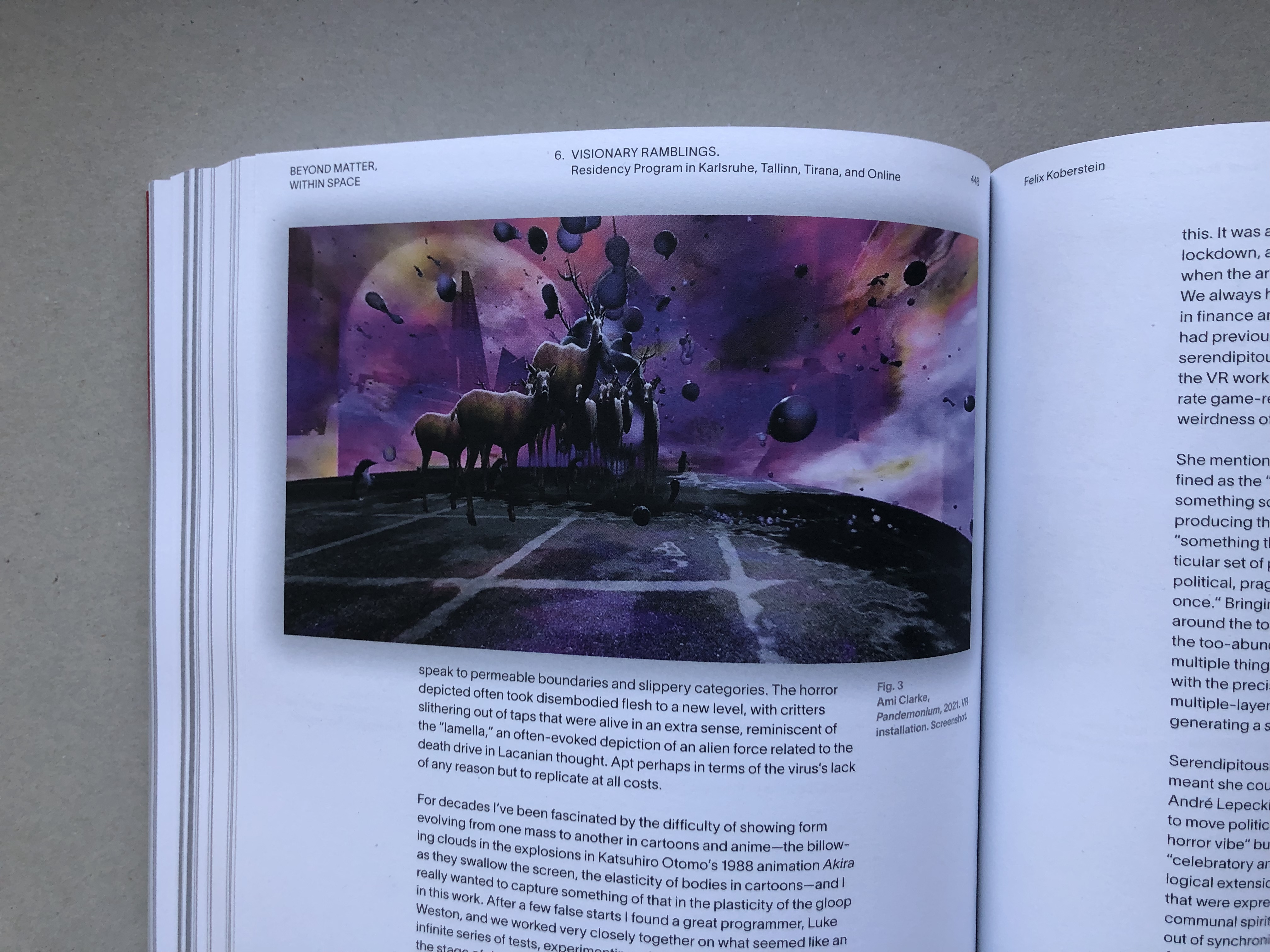

»Matter. Non-Matter. Anti-Matter.« now presents in the 21st century the digital models of the two projects on the Immaterial Display, hardware that has been specially developed for exploring virtual exhibitions. On view are artworks and artifacts from the past exhibitions, as well as contemporary reflections and artworks created or expanded for this exhibition. These include works by Jeremy Bailey, damjanski, fabric|ch, Geraldine Juárez, Carolyn Kirschner, and Anne Le Troter that echo the 3D models of the two landmark exhibitions. They bear witness to the current digitization trend in the production, collection, and presentation of art.

Case studies and examples of the application of digital curatorial reconstruction techniques that were created as part of the Beyond Matter project complement the presentation.

The exhibition »Matter. Non-Matter. Anti-Matter.« is accompanied by an extensive program of events: A webinar series aimed at museum professionals and cultural practitioners will present examples of work in digital or hybrid museums; two workshops, coorganized with Andreas Broeckmann from Leuphana University Lüneburg, will focus on interdisciplinary curating and methods for researching historical exhibitions; workshops on »Performance-Oriented Design Methods for Audience Studies and Exhibition Evaluation« (PORe) will be held by Lily Díaz-Kommonen and Cvijeta Miljak from Aalto University.

After the exhibition ends at the ZKM, a new edition of »Matter. Non-Matter. Anti-Matter.« will be on view at the Centre Pompidou in Paris from May to July 2023.

>>>

Artists

Josef Albers, Giovanni Anselmo, Arman, Art & Language, Jeremy Bailey, Fiona Banner, DiMoDa featuring Banz & Bowinkel, Christiane Paul, Tamiko Thiel, Ricardo Miranda Zúñiga, Samuel Bianchini, Bio Design Lab (HfG Karlsruhe), Jean-Louis Boissier & Liliane Terrier, John Cage, Jacques-Élie Chabert & Camille Philibert, damjanski, Annet Dekker & Marialaura Ghidini & Gaia Tedone, Marcel Duchamp, fabric | ch, Eric J. Heller, Prof. Dr. Kai-Uwe Hemken (Art Studies Kunsthochschule Kassel / University of Kassel), Joasia Krysa, Leonardo Impett, Eva Cetinić, MetaObjects, Sui, Michel Jaffrennou, Geraldine Juárez, Martin Kippenberger, Carolyn Kirschner, Maria Klonaris & Katerina Thomadaki, Joseph Kosuth, Denis Laborde, Mark Lewis & Laura Mulvey, Kasimir Malevich, Pietro Manzoni, Gordon Matta-Clark, Peo Olsson, Katarina Sjögren, Jonas Williamsson, Roman Opalka, Nam June Paik, Readymades belong to everyone®, Jeffrey Shaw, Annegret Soltau, Daniel Soutif & Paule Zajderman, Klaus Staeck, Anne Le Troter, Manfred Wolff-Plottegg, Erwin Wurm

-

Curator

Lívia Nolasco-Rózsás

-

Curatorial team

Felix Koberstein (ZKM)

Moritz Konrad (ZKM)

Marcella Lista (Centre Pompidou, Paris)

Philippe Bettinelli (Centre Pompidou, Paris)

Julie Champion (Centre Pompidou, Paris)

-

Beyond Matter project team at ZKM

Marianne Schädler (managing editor)

Aurora Bertolli (communication)

-

Exhibition team

Matthias Gommel (Scenography)

Janine Burger (Participation)

Thomas Schwab (Technical project management)

-

Graphic design

AKU.co

-

Museum and Exhibition Technology

Martin Mangold

-

Organization / Institution

Initiated by the ZKM | Center for Art and Media Karlsruhe and the Centre Pompidou, Paris, in collaboration with the Aalto University, Espoo, the Tallinn Art Hall, and the Tirana Art Lab.

>>>

Further locations and dates:

| Dates | Location |
| --- | --- |
| Mar 14, 2022 – Mar 29, 2022 | Väre, Aalto University, Espoo |
| Apr 20, 2022 – Apr 25, 2022 | The Cube / Helsinki Central Library Oodi |
| Apr 25, 2022 – May 5, 2022 | Väre, Aalto University, Espoo |
| May 16, 2022 – May 22, 2022 | Design Museum Helsinki |
| Jun 25, 2022 – Aug 28, 2022 | Tirana Art Lab, Albania |
| Dec 3, 2022 – Apr 23, 2023 | ZKM |
| Summer 2023 | Centre Pompidou, Paris |

---


Atomized (re-)Staging, new work by fabric | ch on @beyondmatter and @zkm | #exhibition #digital #autonomous

Via

-----

Friday, October 28. 2022

Art x Science Dialogues: Curation In The Context of Artificial Intelligence - fabric | ch & Aiiiii Art Center Shanghai | #AI #exhibition #architecture

Note:

fabric | ch had the pleasure to present our recent works at the invitation of Swissnex China, during an online panel along with Ms. Xi Li, curator and director of the Aiiiii Art Center in Shanghai.

This was in particular the occasion to introduce Platform of Future-Past, a new installation and environmental device realized for the exhibition at How Art Center (Shanghai).

The panel was moderated by Cissy Sun, Head of Art-Science, Swissnex in China.

Via Swissnex China

-----

Art x Science Dialogues: Curation In The Context of Artificial Intelligence

Join the upcoming Art x Science Dialogue, exploring the topic of curation and artificial intelligence with Mr. Patrick Keller, Architect and Co-founder of fabric | ch and Ms. Xi Li, Curator and Director of Aiiiii Art Center.

---


While the question of whether AI will take over the art world has been raised repeatedly, there seems to be a consensus in the art world that AI is a creative tool and that we should make better use of it. And it is the same with curation. Using algorithms in content curation and spatial design, implementing AI systems in museums, and curating generative artworks are among the new issues facing today’s curators.

In the upcoming edition of Art x Science Dialogue, we’ll invite Mr. Patrick Keller, Architect and Co-founder of fabric | ch, and Ms. Xi Li, Curator and Director of Aiiiii Art Center, to join the conversation on the topic of curation and artificial intelligence.

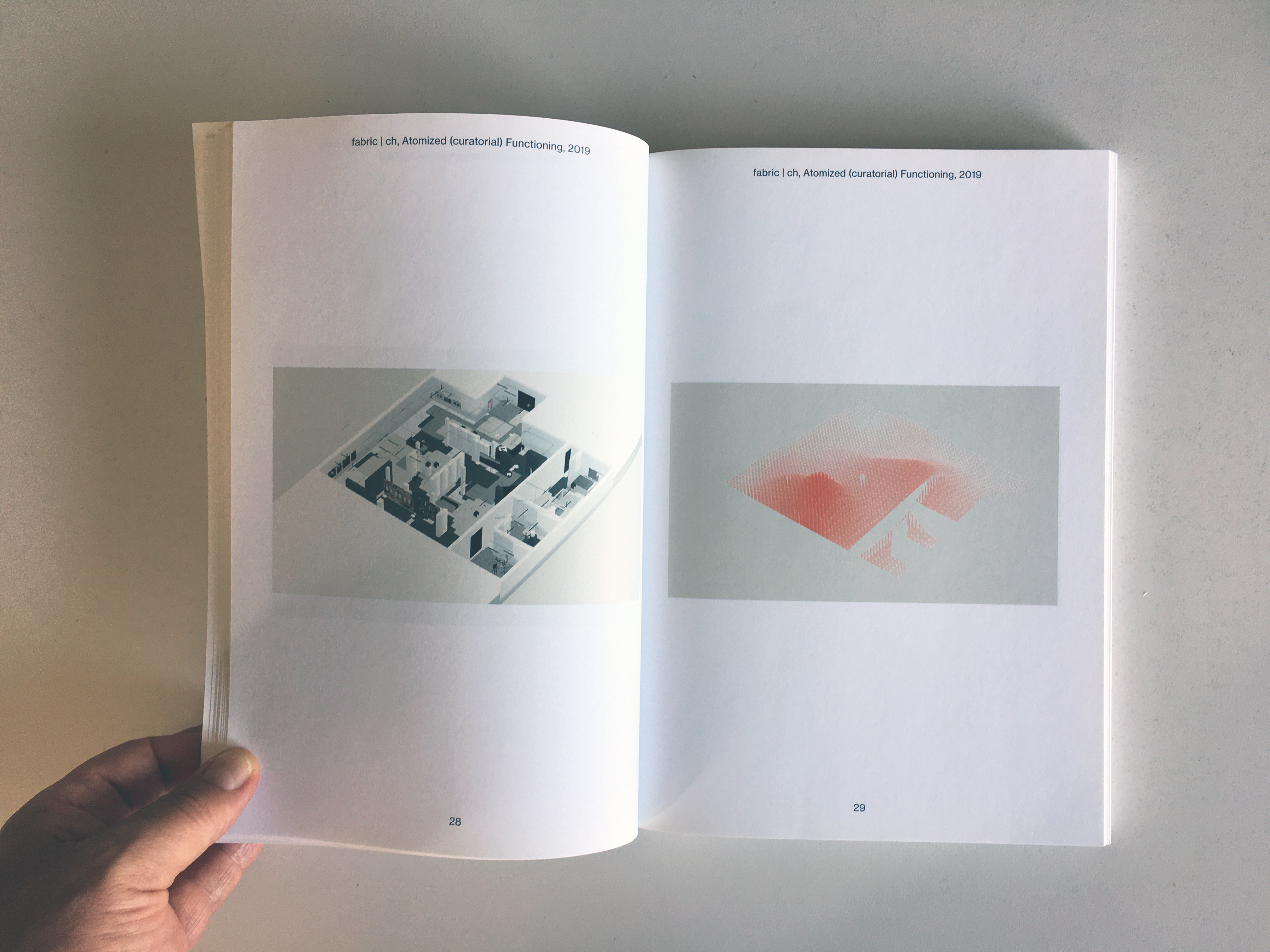

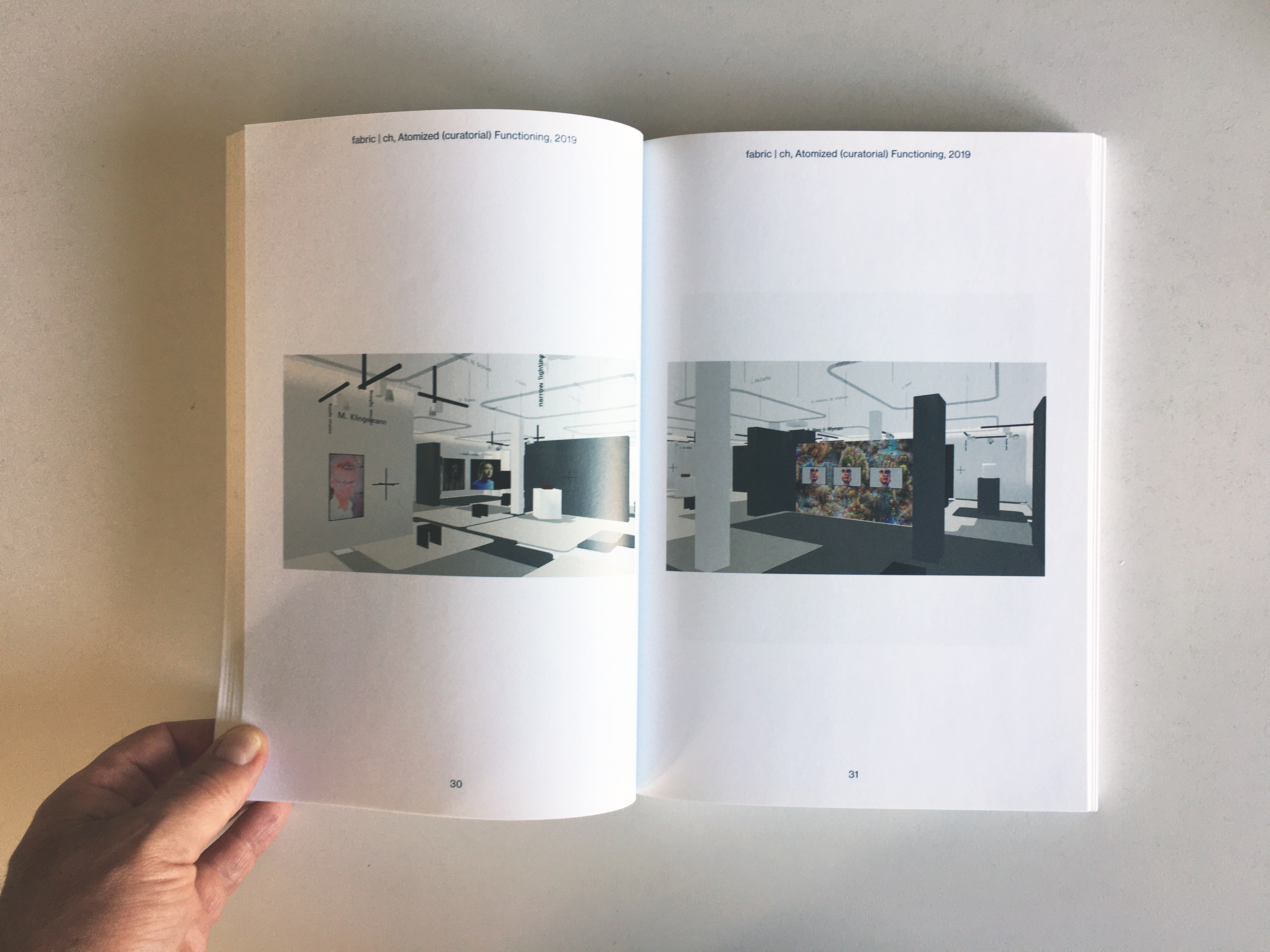

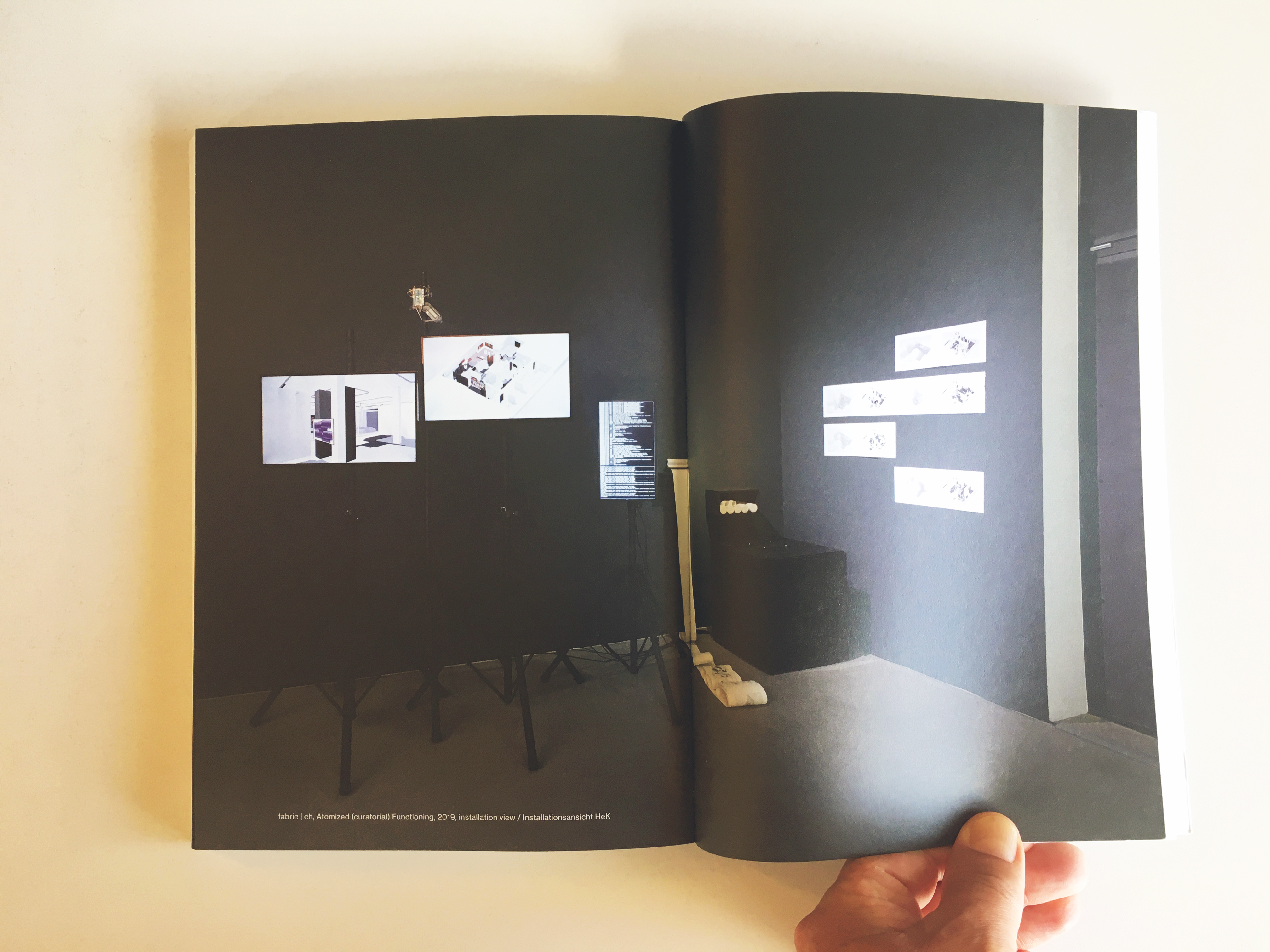

Patrick Keller will present works by the collective fabric | ch, featuring Atomized (curatorial) Functioning, an architectural project based on automated algorithmic principles, to which a machine learning layer can be added as required. It is a software piece that endlessly creates and saves new spatial configurations for a given situation and converges towards a “solution”, in real-time 3D and according to dynamic data and constraints. Xi Li will present her research on AI art curation and her perspectives and practices in curating the exhibitions at Aiiiii Art Center, a brand new artificial intelligence art institution based in Shanghai that aims to offer insight into the many challenges, practices, and creative modes of artificial intelligence-based art.

Atomized (curatorial) Functioning, by fabric | ch
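To give a rough feel for what "creating and saving configurations while converging towards a solution" can mean in code, here is a minimal sketch in Python. It is not the fabric | ch software: it swaps in plain hill climbing, and every name in it (`spacing_penalty`, `search_layouts`, the square room, the single minimum-distance constraint) is a hypothetical stand-in chosen for illustration.

```python
import random


def spacing_penalty(layout, min_distance):
    """Sum of how far each pair of items falls short of the minimum distance."""
    penalty = 0.0
    for i in range(len(layout)):
        for j in range(i + 1, len(layout)):
            (x1, y1), (x2, y2) = layout[i], layout[j]
            distance = ((x1 - x2) ** 2 + (y1 - y2) ** 2) ** 0.5
            penalty += max(0.0, min_distance - distance)
    return penalty


def search_layouts(n_items, room_size, min_distance, steps, seed=0):
    """Hill climbing over item positions in a square room (illustrative only).

    Returns the saved history of (layout, penalty) pairs, one per step.
    """
    rng = random.Random(seed)
    current = [
        (rng.uniform(0, room_size), rng.uniform(0, room_size))
        for _ in range(n_items)
    ]
    current_penalty = spacing_penalty(current, min_distance)
    history = [(current, current_penalty)]

    for _ in range(steps):
        # Propose a new configuration by nudging one randomly chosen item.
        candidate = list(current)
        k = rng.randrange(n_items)
        x, y = candidate[k]
        candidate[k] = (
            min(room_size, max(0.0, x + rng.gauss(0, 1))),
            min(room_size, max(0.0, y + rng.gauss(0, 1))),
        )
        candidate_penalty = spacing_penalty(candidate, min_distance)
        # Keep the proposal only if it violates the constraint no more than before.
        if candidate_penalty <= current_penalty:
            current, current_penalty = candidate, candidate_penalty
        history.append((current, current_penalty))
    return history
```

Calling `search_layouts(5, 10.0, 3.0, 200)` returns 201 saved configurations whose penalty never increases from one step to the next. A real system would presumably work in 3D, with many more constraints and with live data feeding the scoring.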

---

PROGRAM

16:00 – 16:05 Opening Remarks

Cissy Sun, Head of Art-Science, Swissnex in China

16:05 – 16:25 Presentation

Patrick Keller, Architect, Co-founder, fabric | ch

16:25 – 16:45 Presentation

Xi Li, Curator, Director, Aiiiii Art Center

16:45 – 17:00 Discussion and Q&A

SPEAKERS

---

fabric | ch – studio for architecture, interaction & research

Combining experimentation, exhibition, and production, fabric | ch formulates new architectural proposals and produces singular livable spaces that bind localized and distributed landscapes, algorithmic behaviors, atmospheres, and technologies.

Since the foundation of the studio, the architects and scientists of fabric | ch have investigated the field of contemporary space in its interaction with technologies. From network-related environments that blend physical and digital properties to algorithmic recombination of locations, temporalities, and dimensions based on the objective sensing of their environmental data, it is the nature of our relationship to the environment and to contemporary space that is rephrased.

The work of fabric | ch deals with issues related to the mediation of our relationship to place and distance, to automated climatic, informational, and energetic exchanges, to mobility and post-globalization, all inscribed in a perspective of creolization, spatial interbreeding, new materialism, and sustainability.

fabric | ch is currently composed of Christian Babski, Stéphane Carion, Christophe Guignard and Patrick Keller (cofounders of the collective), Keumok Kim and Michaël Chablais.

Patrick Keller is an architect and founding member of the collective fabric | ch, composed of architects, artists, and engineers, based in Lausanne, Switzerland.

In this context, he has contributed to the creation, development, and exhibition of numerous experimental works dealing with the intersection of architecture, networks, algorithms, and data, along with livable environments.

---

Aiiiii Art Center

Aiiiii Art Center (Est. 2021) is an artificial intelligence art institution based in Shanghai. The organization seeks to support, promote, as well as incubate both international and domestic artists and projects related to intelligent algorithms. Aiiiii Art Center is committed to becoming a pioneer of artificial intelligence through the discovery of exciting possibilities afforded by the intersections of creativity and technology.

Aiiiii Art Center aims to offer insight into the many challenges, practices, and creative modes of artificial intelligence-based art. Such aims will be achieved through academic conferences and published research efforts conducted either independently or in collaboration with domestic and international institutions and organizations. This organization will also actively promote and showcase the exploratory uses of artificial intelligence-based art in practice.

Xi Li, curator, director of Aiiiii Art Center. Graduated from Central Saint Martins MA in Narrative Environments and Central Academy of Fine Arts with a BA in Art Management.

Programs: Book of Sand: The Inaugural Exhibition of Aiiiii Art Center (2021); aai International Conference on AI Art (2021, 2022); Ritual of Signs and Metamorphosis (2018); Olafur Eliasson: The Unspeakable Openness of Things (2017); Captive of Love: Danwen Xing (2017); Over Time: The Poetic Image of the Urbanscape (2016).

---

ART X SCIENCE DIALOGUES

Art x Science Dialogues is a new webinar series initiated by Swissnex in China in 2020. The webinar series not only presents artistic projects where scientists and research engineers are deeply involved but also looks into new interdisciplinary initiatives and trends. Through the dialogues between artists and scientists, we try to stimulate the exchange of ideas between the two different worlds and explore opportunities for collaboration.

Tuesday, August 31. 2021

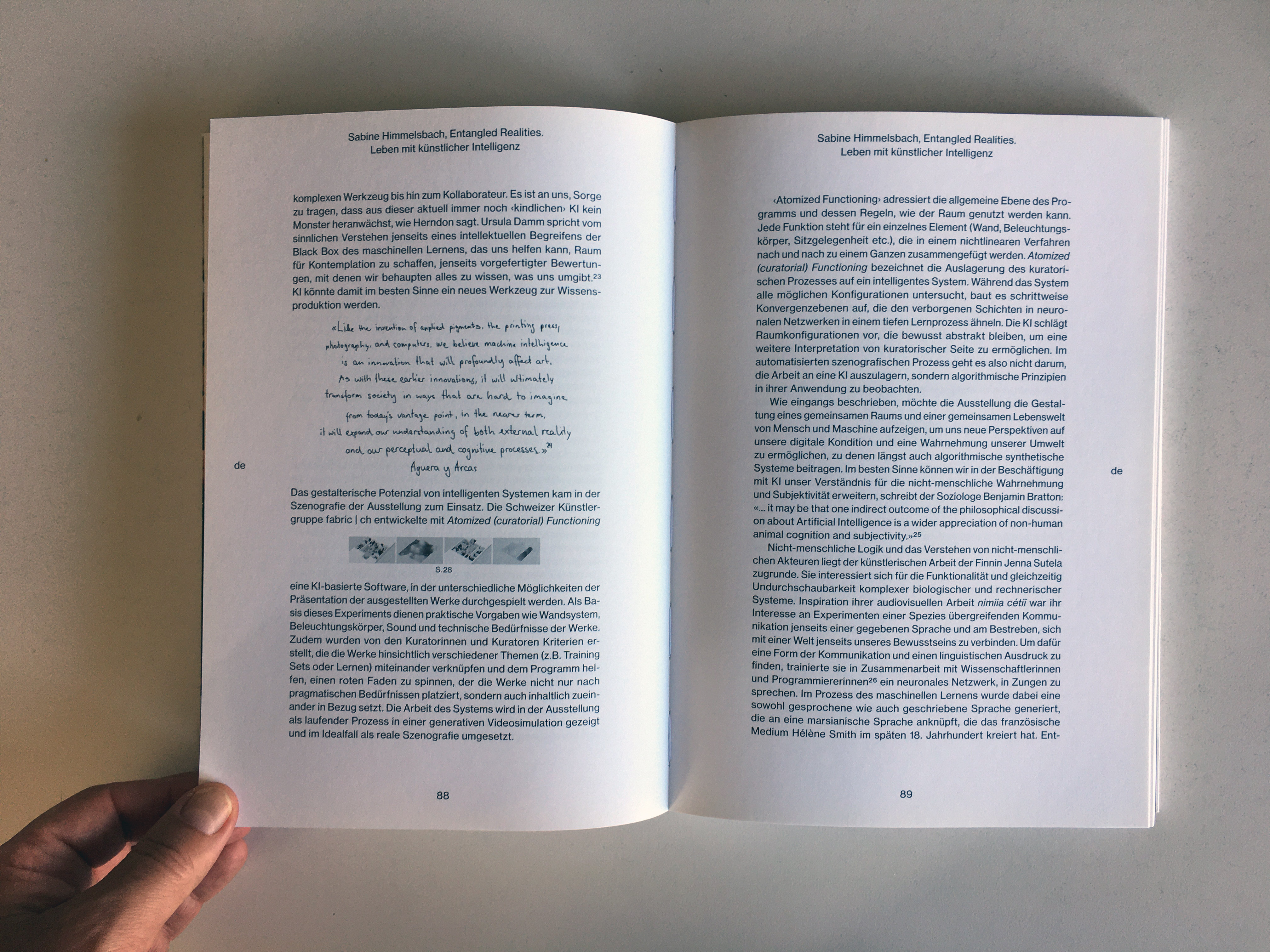

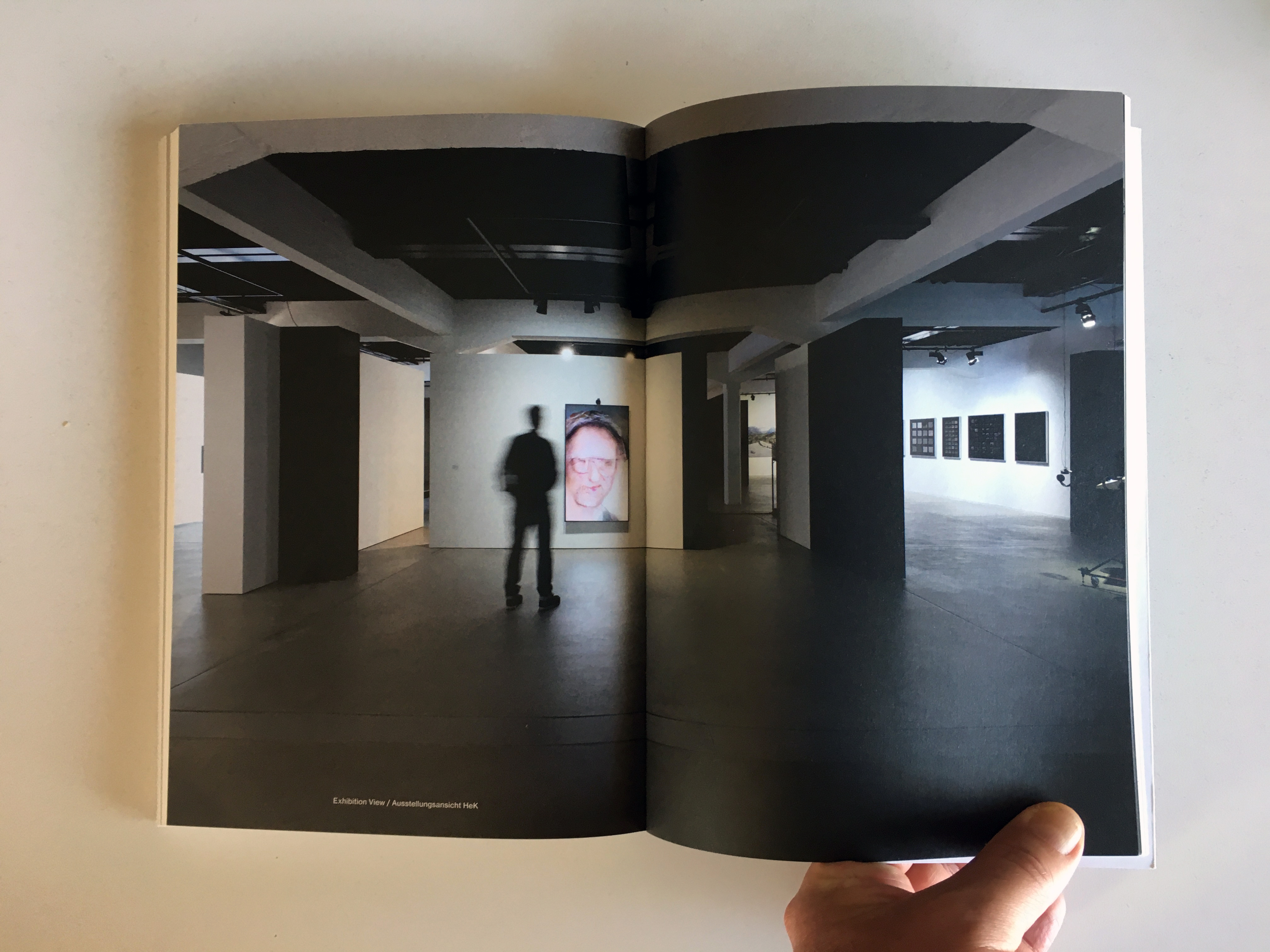

Entangled Realities, HeK exhibition catalogue (eds. S. Himmelsbach & B. Magrini), C. Merian Verlag (Basel, 2021) | #AI #art #environments

Note: this publication was released on the occasion of the exhibition Entangled Realities - Living with Artificial Intelligence, curated by Sabine Himmelsbach & Boris Magrini at Haus der elektronischen Künste, in Basel.

The project Atomized (curatorial) Functioning (pdf), part of the Atomized (*) Functioning series, was presented, used and debated in this context.

-----

By Patrick Keller

Wednesday, August 25. 2021

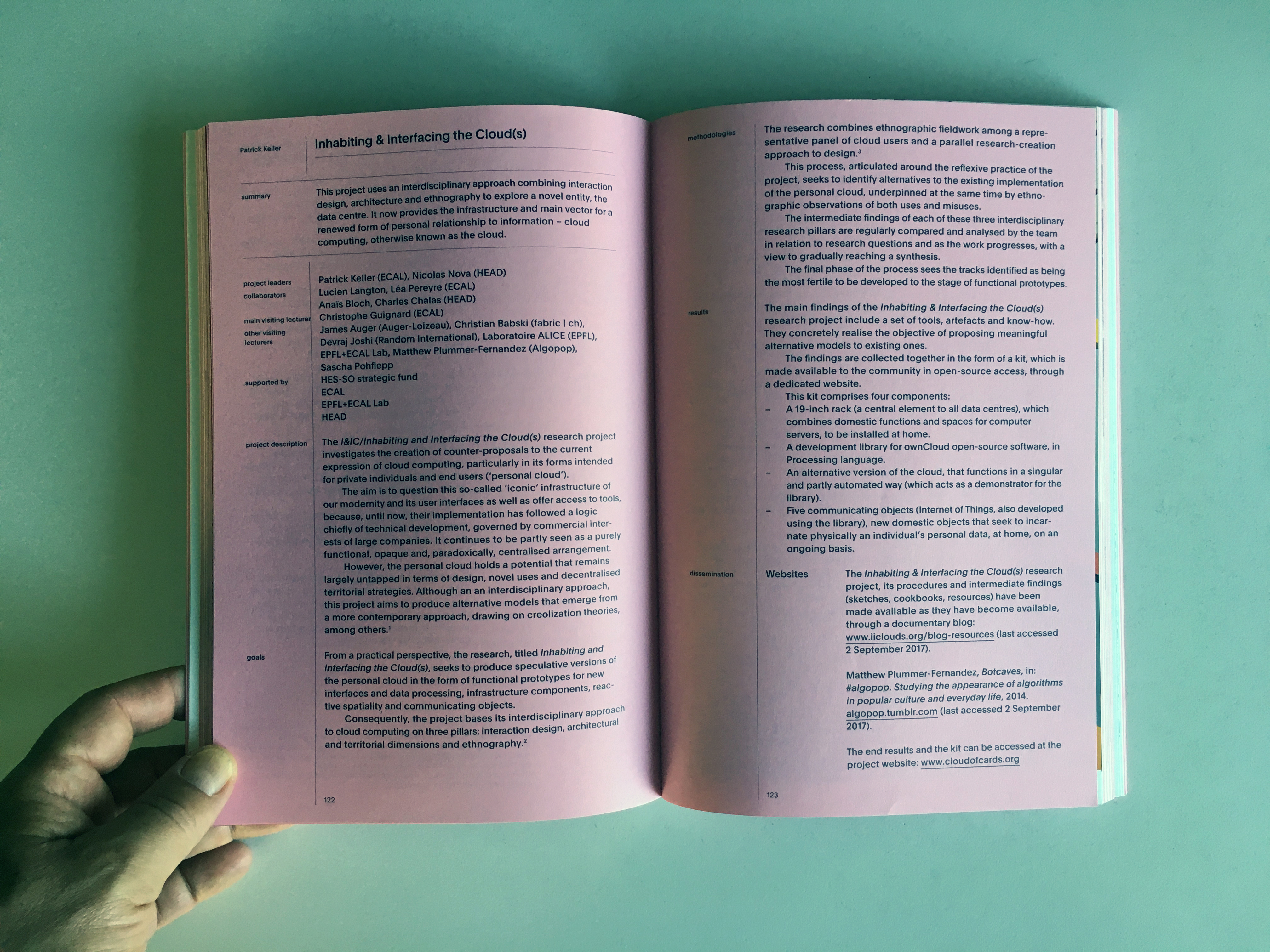

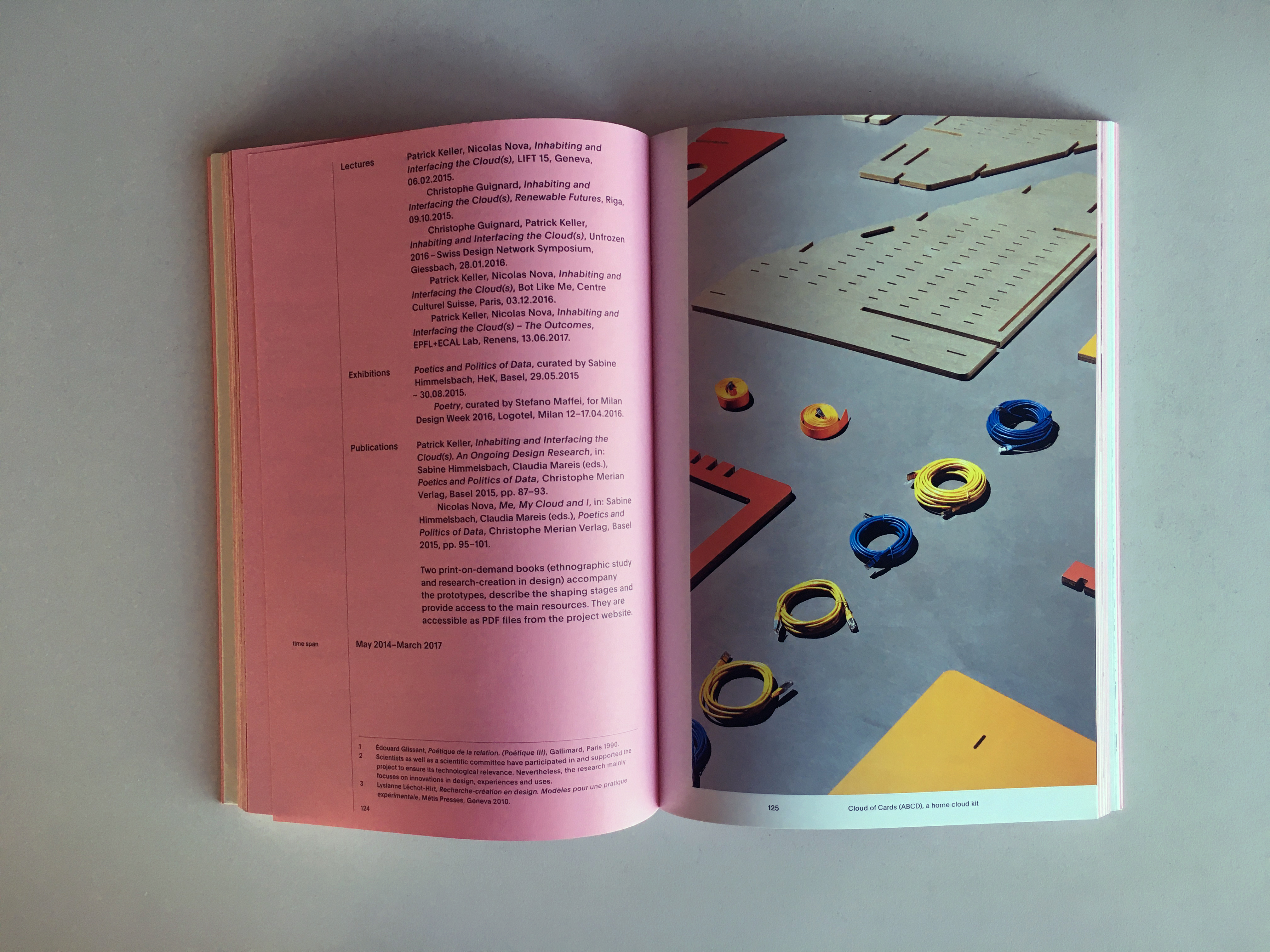

Cloud of Cards – A design research publication, ed. ECAL, Hemisphere & Frame magazines (2019 – 2020) | #design #research #datacenters #infrastructure

Note: still catching up on past publications, these ones (Cloud of Cards and related) are from "pre-covid times", available in Print-on-Demand, and related to the design research on data and the cloud led jointly by ECAL / University of Art & Design, Lausanne and HEAD - Genève (with Prof. Nicolas Nova). It mainly concerns new propositions for data hosting infrastructure, envisioned as "personal", domestic (decentralized) and small-scale alternatives. Many "recipes" were published to describe how to creatively host your data yourself.

It can also be accessed through my academia account, along with its accompanying publication by Nicolas Nova: Cloud of Practices.

-----

By Patrick Keller

--

The same research was briefly presented in the Swiss journal Hemispheres, as well as in the international magazine Frame:

--

Making Sense – A publication about design research, (ed. D. Fornari), ECAL (Lausanne, 2017) | #iiclouds #datacenter #research

Note: to catch up on time and work with the documentation of our past publications, this one was published already some time ago by ECAL / University of Art and Design, Lausanne (HES-SO), but still a topical issue (> how to redesign/codesign datacenters and the access to personal data in both a sustainable and "fair" way for the end user?)

We're currently working on an evolution of this project that involves the decentralized technologies that have emerged since then (a.k.a. "blockchains", "NFT", etc.). In the meantime, we are preparing academic talks on the subject with the media sociologist Joël Vacheron, who will be involved in the next phases of the research -- should they happen... --

-----

By Patrick Keller

(sorry for the strange colors on these 3 img. below...)

Friday, February 01. 2019

The Yoda of Silicon Valley | #NewYorkTimes #Algorithms

Note: Yet another dive into the history of computer programming and algorithms, used for visual outputs ...

Via The New York Times (on Medium)

-----

By Siobhan Roberts

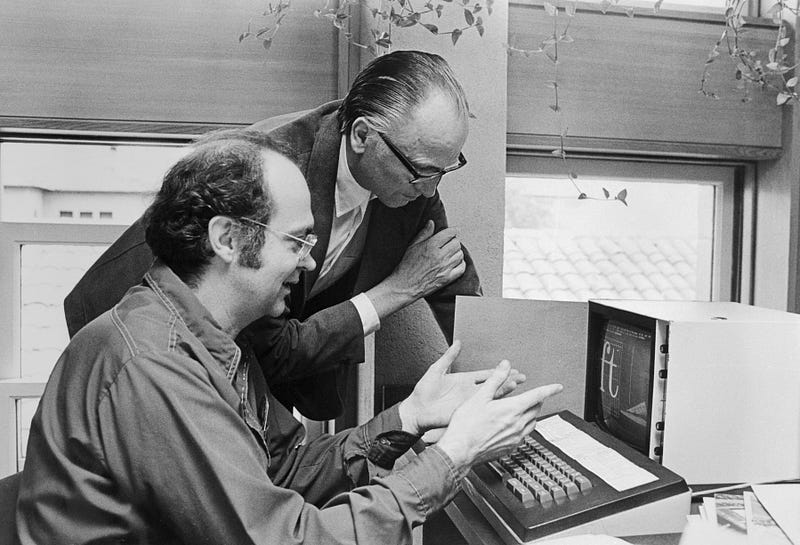

Donald Knuth at his home in Stanford, Calif. He is a notorious perfectionist and has offered to pay a reward to anyone who finds a mistake in any of his books. Photo: Brian Flaherty

For half a century, the Stanford computer scientist Donald Knuth, who bears a slight resemblance to Yoda — albeit standing 6-foot-4 and wearing glasses — has reigned as the spirit-guide of the algorithmic realm.

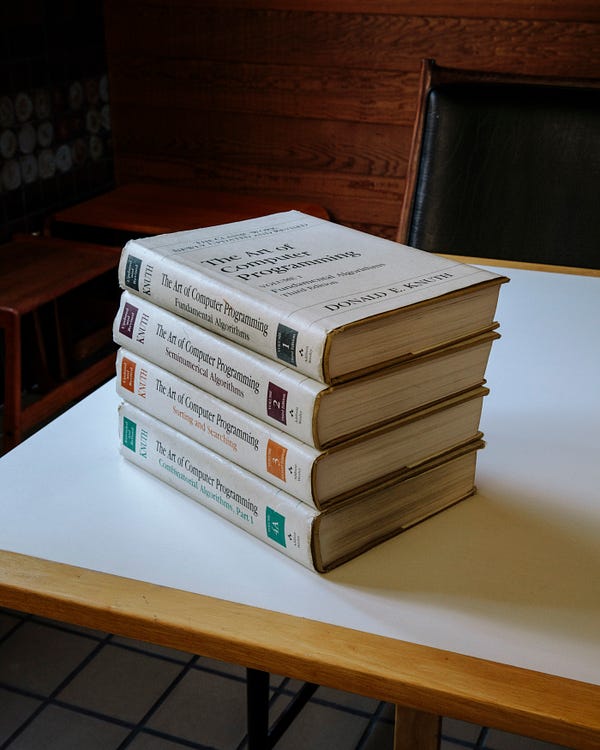

He is the author of “The Art of Computer Programming,” a continuing four-volume opus that is his life’s work. The first volume debuted in 1968, and the collected volumes (sold as a boxed set for about $250) were included by American Scientist in 2013 on its list of books that shaped the last century of science — alongside a special edition of “The Autobiography of Charles Darwin,” Tom Wolfe’s “The Right Stuff,” Rachel Carson’s “Silent Spring” and monographs by Albert Einstein, John von Neumann and Richard Feynman.

With more than one million copies in print, “The Art of Computer Programming” is the Bible of its field. “Like an actual bible, it is long and comprehensive; no other book is as comprehensive,” said Peter Norvig, a director of research at Google. After 652 pages, volume one closes with a blurb on the back cover from Bill Gates: “You should definitely send me a résumé if you can read the whole thing.”

The volume opens with an excerpt from “McCall’s Cookbook”:

Here is your book, the one your thousands of letters have asked us to publish. It has taken us years to do, checking and rechecking countless recipes to bring you only the best, only the interesting, only the perfect.

Inside are algorithms, the recipes that feed the digital age — although, as Dr. Knuth likes to point out, algorithms can also be found on Babylonian tablets from 3,800 years ago. He is an esteemed algorithmist; his name is attached to some of the field’s most important specimens, such as the Knuth-Morris-Pratt string-searching algorithm. Devised in 1970, it finds all occurrences of a given word or pattern of letters in a text — for instance, when you hit Command+F to search for a keyword in a document.
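As a rough illustration of the string-searching idea described above, here is a minimal Python sketch of the Knuth-Morris-Pratt approach. The function names (`build_failure_table`, `kmp_find_all`) are made up for this example and do not come from the article or from Dr. Knuth's books.

```python
def build_failure_table(pattern: str) -> list[int]:
    """For each position, length of the longest proper prefix of the
    pattern that is also a suffix of the pattern up to that position."""
    table = [0] * len(pattern)
    k = 0  # length of the currently matched prefix
    for i in range(1, len(pattern)):
        while k > 0 and pattern[i] != pattern[k]:
            k = table[k - 1]  # fall back to a shorter prefix
        if pattern[i] == pattern[k]:
            k += 1
        table[i] = k
    return table


def kmp_find_all(text: str, pattern: str) -> list[int]:
    """Return the start index of every occurrence of pattern in text."""
    if not pattern:
        return []
    table = build_failure_table(pattern)
    matches = []
    k = 0  # number of pattern characters matched so far
    for i, ch in enumerate(text):
        while k > 0 and ch != pattern[k]:
            k = table[k - 1]  # reuse what is already known about the text
        if ch == pattern[k]:
            k += 1
        if k == len(pattern):
            matches.append(i - k + 1)
            k = table[k - 1]  # keep going to catch overlapping matches
    return matches
```

For example, `kmp_find_all("abracadabra", "abra")` returns `[0, 7]`. The point of the failure table is that the search never steps backwards in the text, so each character of the document is read only once.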

Now 80, Dr. Knuth usually dresses like the youthful geek he was when he embarked on this odyssey: long-sleeved T-shirt under a short-sleeved T-shirt, with jeans, at least at this time of year. In those early days, he worked close to the machine, writing “in the raw,” tinkering with the zeros and ones.

“Knuth made it clear that the system could actually be understood all the way down to the machine code level,” said Dr. Norvig. Nowadays, of course, with algorithms masterminding (and undermining) our very existence, the average programmer no longer has time to manipulate the binary muck, and works instead with hierarchies of abstraction, layers upon layers of code — and often with chains of code borrowed from code libraries. But an elite class of engineers occasionally still does the deep dive.

“Here at Google, sometimes we just throw stuff together,” Dr. Norvig said, during a meeting of the Google Trips team, in Mountain View, Calif. “But other times, if you’re serving billions of users, it’s important to do that efficiently. A 10-per-cent improvement in efficiency can work out to billions of dollars, and in order to get that last level of efficiency, you have to understand what’s going on all the way down.”

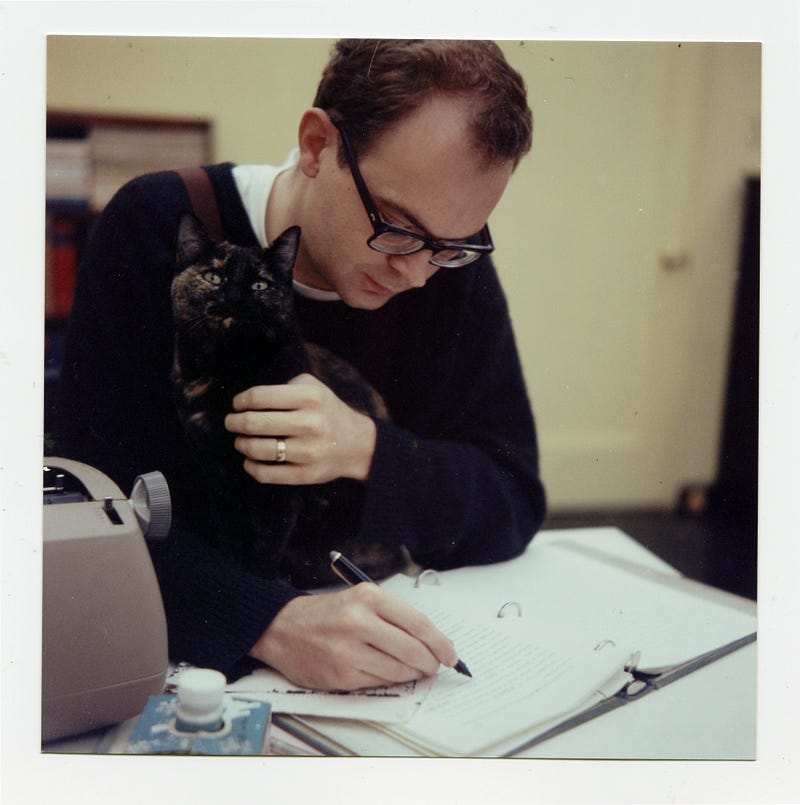

Dr. Knuth at the California Institute of Technology, where he received his Ph.D. in 1963. Photo: Jill Knuth

Or, as Andrei Broder, a distinguished scientist at Google and one of Dr. Knuth’s former graduate students, explained during the meeting: “We want to have some theoretical basis for what we’re doing. We don’t want a frivolous or sloppy or second-rate algorithm. We don’t want some other algorithmist to say, ‘You guys are morons.’”

The Google Trips app, created in 2016, is an “orienteering algorithm” that maps out a day’s worth of recommended touristy activities. The team was working on “maximizing the quality of the worst day” — for instance, avoiding sending the user back to the same neighborhood to see different sites. They drew inspiration from a 300-year-old algorithm by the Swiss mathematician Leonhard Euler, who wanted to map a route through the Prussian city of Königsberg that would cross each of its seven bridges only once. Dr. Knuth addresses Euler’s classic problem in the first volume of his treatise. (He once applied Euler’s method in coding a computer-controlled sewing machine.)
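Euler's bridge problem lends itself to a very small example. The Python sketch below applies the degree-counting criterion usually attributed to his analysis: a connected network has a route crossing every bridge exactly once only if zero or two land masses touch an odd number of bridges. The land-mass labels and function names are assumptions made for this illustration, and this is not the method used by Google Trips.

```python
from collections import Counter, defaultdict

# The seven bridges of Königsberg as pairs of land masses.
# The labels "north", "south", "east" and "island" are our own naming.
KONIGSBERG_BRIDGES = [
    ("north", "island"), ("north", "island"),
    ("south", "island"), ("south", "island"),
    ("east", "island"),
    ("north", "east"),
    ("south", "east"),
]


def odd_degree_nodes(edges):
    """Land masses touched by an odd number of bridges."""
    degree = Counter()
    for a, b in edges:
        degree[a] += 1
        degree[b] += 1
    return sorted(node for node, d in degree.items() if d % 2 == 1)


def is_connected(edges):
    """True if every land mass can be reached from any other."""
    adjacency = defaultdict(set)
    for a, b in edges:
        adjacency[a].add(b)
        adjacency[b].add(a)
    if not adjacency:
        return True
    start = next(iter(adjacency))
    seen, stack = {start}, [start]
    while stack:
        node = stack.pop()
        for neighbour in adjacency[node]:
            if neighbour not in seen:
                seen.add(neighbour)
                stack.append(neighbour)
    return len(seen) == len(adjacency)


def has_euler_trail(edges):
    """True if some route crosses every bridge exactly once."""
    return is_connected(edges) and len(odd_degree_nodes(edges)) in (0, 2)
```

With the seven historical bridges, all four land masses have an odd number of bridges, so `has_euler_trail(KONIGSBERG_BRIDGES)` is `False`. Dropping any single bridge leaves exactly two odd land masses, and a route becomes possible.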

Following Dr. Knuth’s doctrine helps to ward off moronry. He is known for introducing the notion of “literate programming,” emphasizing the importance of writing code that is readable by humans as well as computers — a notion that nowadays seems almost twee. Dr. Knuth has gone so far as to argue that some computer programs are, like Elizabeth Bishop’s poems and Philip Roth’s “American Pastoral,” works of literature worthy of a Pulitzer.

He is also a notorious perfectionist. Randall Munroe, the xkcd cartoonist and author of “Thing Explainer,” first learned about Dr. Knuth from computer-science people who mentioned the reward money Dr. Knuth pays to anyone who finds a mistake in any of his books. As Mr. Munroe recalled, “People talked about getting one of those checks as if it was computer science’s Nobel Prize.”

Dr. Knuth’s exacting standards, literary and otherwise, may explain why his life’s work is nowhere near done. He has a wager with Sergey Brin, the co-founder of Google and a former student (to use the term loosely), over whether Mr. Brin will finish his Ph.D. before Dr. Knuth concludes his opus.

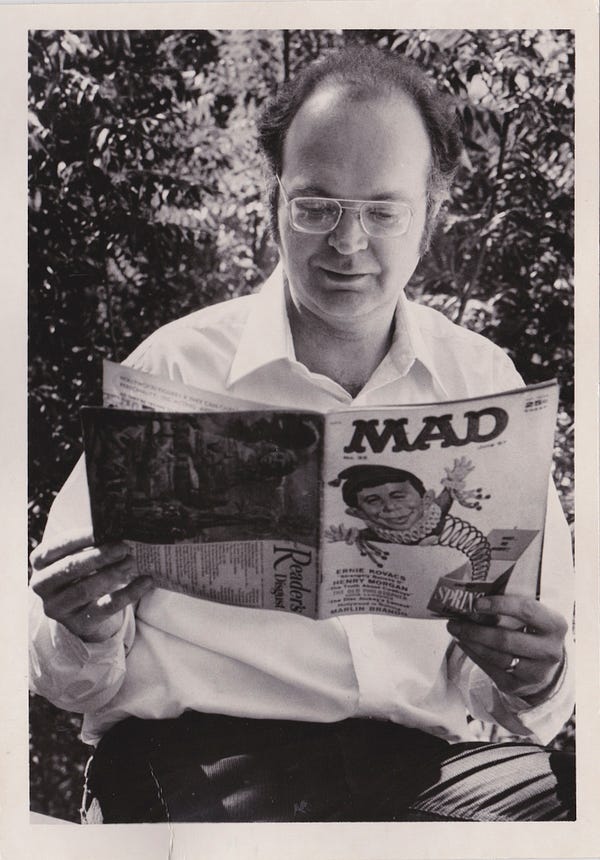

The dawn of the algorithm

At age 19, Dr. Knuth published his first technical paper, “The Potrzebie System of Weights and Measures,” in Mad magazine. He became a computer scientist before the discipline existed, studying mathematics at what is now Case Western Reserve University in Cleveland. He looked at sample programs for the school’s IBM 650 mainframe, a decimal computer, and, noticing some inadequacies, rewrote the software as well as the textbook used in class. As a side project, he ran stats for the basketball team, writing a computer program that helped them win their league — and earned a segment by Walter Cronkite called “The Electronic Coach.”

During summer vacations, Dr. Knuth made more money than professors earned in a year by writing compilers. A compiler is like a translator, converting a high-level programming language (resembling algebra) to a lower-level one (sometimes arcane binary) and, ideally, improving it in the process. In computer science, “optimization” is truly an art, and this is articulated in another Knuthian proverb: “Premature optimization is the root of all evil.”
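To make the translator analogy concrete, here is a toy sketch, not any compiler Dr. Knuth wrote. It uses Python's standard `ast` module to turn an algebra-like expression into instructions for an imaginary stack machine. The instruction names (`PUSH`, `LOAD`, `ADD`, `SUB`, `MUL`) and the function names are assumptions made for this example. The one "improvement" it performs is constant folding: arithmetic on known numbers is done at compile time.

```python
import ast

# Supported operators: instruction name plus a function used for folding.
_OPS = {
    ast.Add: ("ADD", lambda a, b: a + b),
    ast.Sub: ("SUB", lambda a, b: a - b),
    ast.Mult: ("MUL", lambda a, b: a * b),
}


def _is_single_push(code):
    """True if the instruction list is exactly one PUSH of a constant."""
    return len(code) == 1 and code[0][0] == "PUSH"


def _emit(node):
    """Recursively translate one expression node into stack instructions."""
    if isinstance(node, ast.Constant):
        return [("PUSH", node.value)]
    if isinstance(node, ast.Name):
        return [("LOAD", node.id)]
    if isinstance(node, ast.BinOp) and type(node.op) in _OPS:
        name, fold = _OPS[type(node.op)]
        left, right = _emit(node.left), _emit(node.right)
        # Constant folding: both operands are known, so compute the result now.
        if _is_single_push(left) and _is_single_push(right):
            return [("PUSH", fold(left[0][1], right[0][1]))]
        return left + right + [(name,)]
    raise ValueError("unsupported expression")


def compile_expr(source):
    """Translate an arithmetic expression string into stack instructions."""
    return _emit(ast.parse(source, mode="eval").body)


if __name__ == "__main__":
    print(compile_expr("x * (2 + 3)"))
```

For `x * (2 + 3)` the sketch emits a load of `x`, a push of the folded constant 5, and a multiply, rather than carrying the addition into the output.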

Eventually Dr. Knuth became a compiler himself, inadvertently founding a new field that he came to call the “analysis of algorithms.” A publisher hired him to write a book about compilers, but it evolved into a book collecting everything he knew about how to write for computers — a book about algorithms.

Left: Dr. Knuth in 1981, looking at the 1957 Mad magazine issue that contained his first technical article. He was 19 when it was published. Photo: Jill Knuth. Right: “The Art of Computer Programming,” volumes 1–4. “Send me a résumé if you can read the whole thing,” Bill Gates wrote in a blurb. Photo: Brian Flaherty

“By the time of the Renaissance, the origin of this word was in doubt,” the book began. “And early linguists attempted to guess at its derivation by making combinations like algiros [painful] + arithmos [number].” In fact, Dr. Knuth continued, the namesake is the 9th-century Persian textbook author Abū ‘Abd Allāh Muhammad ibn Mūsā al-Khwārizmī, Latinized as Algorithmi. Never one for half measures, Dr. Knuth went on a pilgrimage in 1979 to al-Khwārizmī’s ancestral homeland in Uzbekistan.

When Dr. Knuth started out, he intended to write a single work. Soon after, computer science underwent its Big Bang, so he reimagined and recast the project in seven volumes. Now he metes out sub-volumes, called fascicles. The next installment, “Volume 4, Fascicle 5,” covering, among other things, “backtracking” and “dancing links,” was meant to be published in time for Christmas. It is delayed until next April because he keeps finding more and more irresistible problems that he wants to present.
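For readers unfamiliar with the term, backtracking means building a solution one choice at a time and undoing a choice as soon as it leads to a dead end. The sketch below is a generic textbook illustration, not an excerpt from the fascicle, and it does not implement dancing links. It counts the ways to place n queens on an n-by-n chessboard so that none attacks another; the function name is an assumption made for this example.

```python
def count_n_queens(n):
    """Count placements of n mutually non-attacking queens by backtracking."""
    cols, diag_down, diag_up = set(), set(), set()

    def place(row):
        # Every row holds a queen: one complete solution found.
        if row == n:
            return 1
        total = 0
        for col in range(n):
            if col in cols or (row - col) in diag_down or (row + col) in diag_up:
                continue  # square is attacked, so skip this choice
            # Make the choice...
            cols.add(col)
            diag_down.add(row - col)
            diag_up.add(row + col)
            total += place(row + 1)
            # ...then undo it (the "backtrack" step) before trying the next column.
            cols.remove(col)
            diag_down.remove(row - col)
            diag_up.remove(row + col)
        return total

    return place(0)


if __name__ == "__main__":
    print(count_n_queens(8))
```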

In order to optimize his chances of getting to the end, Dr. Knuth has long guarded his time. He retired at 55, restricted his public engagements and quit email (officially, at least). Andrei Broder recalled that time management was his professor’s defining characteristic even in the early 1980s.

Dr. Knuth typically held student appointments on Friday mornings, until he started spending his nights in the lab of John McCarthy, a founder of artificial intelligence, to get access to the computers when they were free. Horrified by what his beloved book looked like on the page with the advent of digital publishing, Dr. Knuth had gone on a mission to create the TeX computer typesetting system, which remains the gold standard for all forms of scientific communication and publication. Some consider it Dr. Knuth’s greatest contribution to the world, and the greatest contribution to typography since Gutenberg.

This decade-long detour took place back in the age when computers were shared among users and ran faster at night while most humans slept. So Dr. Knuth switched day into night, shifted his schedule by 12 hours and mapped his student appointments to Fridays from 8 p.m. to midnight. Dr. Broder recalled, “When I told my girlfriend that we can’t do anything Friday night because Friday night at 10 I have to meet with my adviser, she thought, ‘This is something that is so stupid it must be true.’”

When Dr. Knuth chooses to be physically present, however, he is 100 percent there in the moment. “It just makes you happy to be around him,” said Jennifer Chayes, a managing director of Microsoft Research. “He’s a maximum in the community. If you had an optimization function that was in some way a combination of warmth and depth, Don would be it.”

Dr. Knuth discussing typefaces with Hermann Zapf, the type designer. Many consider Dr. Knuth’s work on the TeX computer typesetting system to be the greatest contribution to typography since Gutenberg. Photo: Bettmann/Getty Images

Sunday with the algorithmist

Dr. Knuth lives in Stanford, and allowed for a Sunday visitor. That he spared an entire day was exceptional — usually his availability is “modulo nap time,” a sacred daily ritual from 1 p.m. to 4 p.m. He started early, at Palo Alto’s First Lutheran Church, where he delivered a Sunday school lesson to a standing-room-only crowd. Driving home, he got philosophical about mathematics.

“I’ll never know everything,” he said. “My life would be a lot worse if there was nothing I knew the answers about, and if there was nothing I didn’t know the answers about.” Then he offered a tour of his “California modern” house, which he and his wife, Jill, built in 1970. His office is littered with piles of U.S.B. sticks and adorned with Valentine’s Day heart art from Jill, a graphic designer. Most impressive is the music room, built around his custom-made, 812-pipe pipe organ. The day ended over beer at a puzzle party.

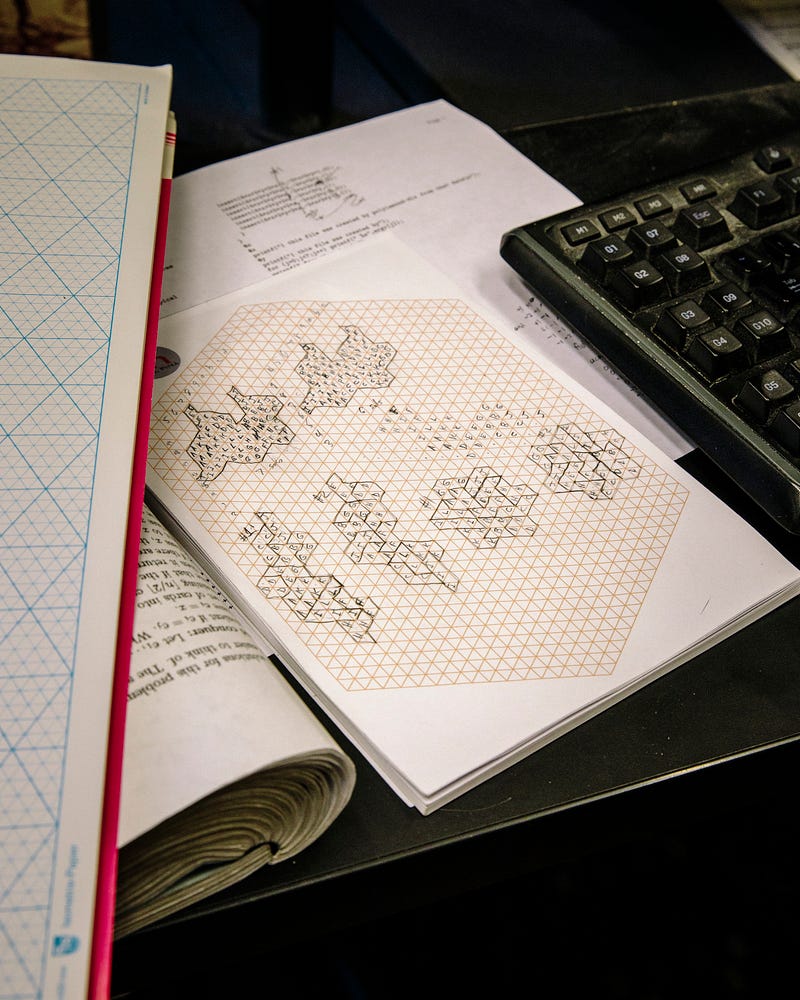

Puzzles and games — and penning a novella about surreal numbers, and composing a 90-minute multimedia musical pipe-dream, “Fantasia Apocalyptica” — are the sorts of things that really tickle him. One section of his book is titled, “Puzzles Versus the Real World.” He emailed an excerpt to the father-son team of Martin Demaine, an artist, and Erik Demaine, a computer scientist, both at the Massachusetts Institute of Technology, because Dr. Knuth had included their “algorithmic puzzle fonts.”

“I was thrilled,” said Erik Demaine. “It’s an honor to be in the book.” He mentioned another Knuth quotation, which serves as the inspirational motto for the biannual “FUN with Algorithms” conference: “Pleasure has probably been the main goal all along.”

But then, Dr. Demaine said, the field went and got practical. Engineers and scientists and artists are teaming up to solve real-world problems — protein folding, robotics, airbags — using the Demaines’s mathematical origami designs for how to fold paper and linkages into different shapes.

Of course, all the algorithmic rigmarole is also causing real-world problems. Algorithms written by humans — tackling harder and harder problems, but producing code embedded with bugs and biases — are troubling enough. More worrisome, perhaps, are the algorithms that are not written by humans, algorithms written by the machine, as it learns.

Programmers still train the machine, and, crucially, feed it data. (Data is the new domain of biases and bugs, and here the bugs and biases are harder to find and fix.) However, as Kevin Slavin, a research affiliate at M.I.T.’s Media Lab, said, “We are now writing algorithms we cannot read. That makes this a unique moment in history, in that we are subject to ideas and actions and efforts by a set of physics that have human origins without human comprehension.” As Slavin has often noted, “It’s a bright future, if you’re an algorithm.”

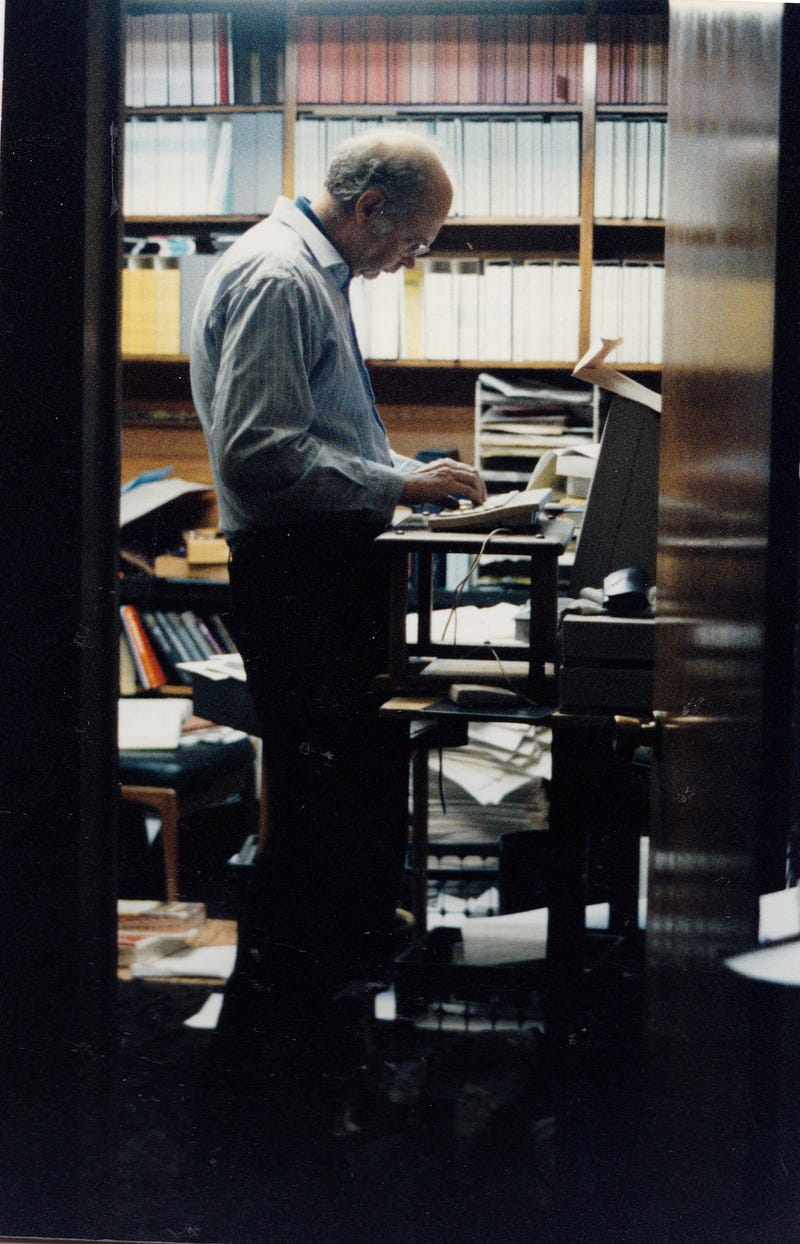

Dr. Knuth at his desk at home in 1999. Photo: Jill Knuth

A few notes. Photo: Brian Flaherty

All the more so if you’re an algorithm versed in Knuth. “Today, programmers use stuff that Knuth, and others, have done as components of their algorithms, and then they combine that together with all the other stuff they need,” said Google’s Dr. Norvig.

“With A.I., we have the same thing. It’s just that the combining-together part will be done automatically, based on the data, rather than based on a programmer’s work. You want A.I. to be able to combine components to get a good answer based on the data. But you have to decide what those components are. It could happen that each component is a page or chapter out of Knuth, because that’s the best possible way to do some task.”

Lucky, then, Dr. Knuth keeps at it. He figures it will take another 25 years to finish “The Art of Computer Programming,” although that time frame has been a constant since about 1980. Might the algorithm-writing algorithms get their own chapter, or maybe a page in the epilogue? “Definitely not,” said Dr. Knuth.

“I am worried that algorithms are getting too prominent in the world,” he added. “It started out that computer scientists were worried nobody was listening to us. Now I’m worried that too many people are listening.”

fabric | rblg

This blog is the survey website of fabric | ch - studio for architecture, interaction and research.

We curate and reblog articles, research, writings, exhibitions and projects that we notice and find interesting during our everyday practice and readings.

Most articles concern the intertwined fields of architecture, territory, art, interaction design, thinking and science. From time to time, we also publish documentation about our own work and research, immersed among these related resources and inspirations.

This website is used by fabric | ch as an archive and as a source of references and resources. It is shared with all those interested in the same topics as we are, in the hope that they will also find valuable references and content in it.
