Monday, December 17. 2018

In 1968, computers got personal | #demo #newdemo?

Note: we went a bit historical recently on | rblg, digging into the history of computing in relation to design/architecture/art. And the following one is certainly far from being the least known... History of computing, or rather personal computing. Yet the article by Margaret O'Mara brings new insights about Engelbart's "mother of all demos", asking in particular for a new "demo".

It is also interesting to consider how some topics that we tend to believe are very contemporary were in fact already popping up pretty early in the history of the media.

Questions about the status of the machine in relation to us, humans, or about the collection of data, etc. If you go back to the early texts by Wiener and Turing (popularized texts... for my part), you might see that the questions we still have were already present.

Via The Conversation

-----

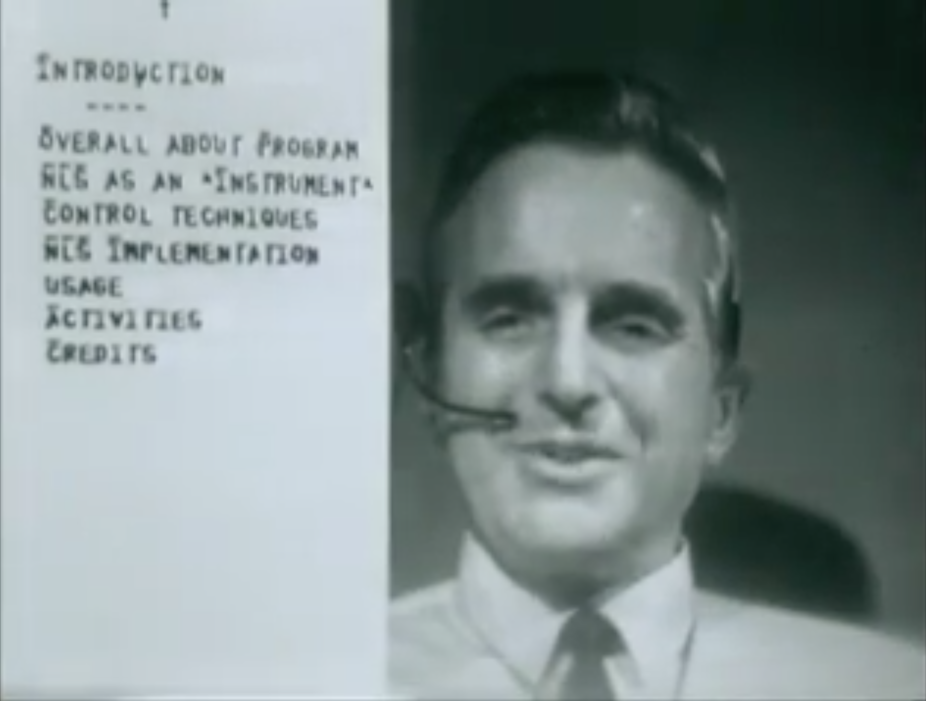

On a crisp California afternoon in early December 1968, a square-jawed, mild-mannered Stanford researcher named Douglas Engelbart took the stage at San Francisco’s Civic Auditorium and proceeded to blow everyone’s mind about what computers could do. Sitting down at a keyboard, this computer-age Clark Kent calmly showed a rapt audience of computer engineers how the devices they built could be utterly different kinds of machines – ones that were “alive for you all day,” as he put it, immediately responsive to your input, and which didn’t require users to know programming languages in order to operate.

The prototype computer mouse Doug Engelbart used in his demo. Michael Hicks, CC BY

Engelbart typed simple commands. He edited a grocery list. As he worked, he skipped the computer cursor across the screen using a strange wooden box that fit snugly under his palm. With small wheels underneath and a cord dangling from its rear, Engelbart dubbed it a “mouse.”

The 90-minute presentation went down in Silicon Valley history as the “mother of all demos,” for it previewed a world of personal and online computing utterly different from 1968’s status quo. It wasn’t just the technology that was revelatory; it was the notion that a computer could be something a non-specialist individual user could control from their own desk.

The first part of the "mother of all demos."

Shrinking the massive machines

In the America of 1968, computers weren’t at all personal. They were refrigerator-sized behemoths that hummed and blinked, calculating everything from consumer habits to missile trajectories, cloistered deep within corporate offices, government agencies and university labs. Their secrets were accessible only via punch card and teletype terminals.

The Vietnam-era counterculture already had made mainframe computers into ominous symbols of a soul-crushing Establishment. Four years before, the student protesters of Berkeley’s Free Speech Movement had pinned signs to their chests that bore a riff on the prim warning that appeared on every IBM punch card: “I am a UC student. Please don’t bend, fold, spindle or mutilate me.”

Hear Prof. O'Mara discuss this topic on our Heat and Light podcast.

Earlier in 1968, Stanley Kubrick’s trippy “2001: A Space Odyssey” mined moviegoers’ anxieties about computers run amok with the tale of a malevolent mainframe that seized control of a spaceship from its human astronauts.

Voices rang out on Capitol Hill about the uses and abuses of electronic data-gathering, too. Missouri Senator Ed Long regularly delivered floor speeches he called “Big Brother updates.” North Carolina Senator Sam Ervin declared that mainframe power posed a threat to the freedoms guaranteed by the Constitution. “The computer,” Ervin warned darkly, “never forgets.” As the Johnson administration unveiled plans to centralize government data in a single national database, New Jersey Congressman Cornelius Gallagher declared that it was just another grim step toward scientific thinking taking over modern life, “leaving as an end result a stack of computer cards where once were human beings.”

The zeitgeist of 1968 helps explain why Engelbart’s demo so quickly became a touchstone and inspiration for a new, enduring definition of technological empowerment. Here was a computer that didn’t override human intelligence or stomp out individuality, but instead could, as Engelbart put it, “augment human intellect.”

While Engelbart’s vision of how these tools might be used was rather conventionally corporate – a computer on every office desk and a mouse in every worker’s palm – his overarching notion of an individualized computer environment hit exactly the right note for the anti-Establishment technologists coming of age in 1968, who wanted to make technology personal and information free.

The second part of the "mother of all demos."

Over the next decade, technologists from this new generation would turn what Engelbart called his “wild dream” into a mass-market reality – and profoundly transform Americans’ relationship to computer technology.

Government involvement

In the decade after the demo, the crisis of Watergate and revelations of CIA and FBI snooping further seeded distrust in America’s political leadership and in the ability of large government bureaucracies to be responsible stewards of personal information. Economic uncertainty and an antiwar mood slashed public spending on high-tech research and development – the same money that once had paid for so many of those mainframe computers and for training engineers to program them.

Enabled by the miniaturizing technology of the microprocessor, the size and price of computers plummeted, turning them into affordable and soon indispensable tools for work and play. By the 1980s and 1990s, instead of being seen as machines made and controlled by government, computers had become ultimate expressions of free-market capitalism, hailed by business and political leaders alike as examples of what was possible when government got out of the way and let innovation bloom.

There lies the great irony in this pivotal turn in American high-tech history. For even though “the mother of all demos” provided inspiration for a personal, entrepreneurial, government-is-dangerous-and-small-is-beautiful computing era, Doug Engelbart’s audacious vision would never have made it to keyboard and mouse without government research funding in the first place.

Engelbart was keenly aware of this, flashing credits up on the screen at the presentation’s start listing those who funded his research team: the Defense Department’s Advanced Research Projects Agency, later known as DARPA; the National Aeronautics and Space Administration; the U.S. Air Force. Only the public sector had the deep pockets, the patience and the tolerance for blue-sky ideas without any immediate commercial application.

Although government funding played a less visible role in the high-tech story after 1968, it continued to function as critical seed capital for next-generation ideas. Marc Andreessen and his fellow graduate students developed their groundbreaking web browser in a government-funded university laboratory. DARPA and NASA money helped fund the graduate research project that Sergey Brin and Larry Page would later commercialize as Google. Driverless car technology got a jump-start after a government-sponsored competition; so have nanotechnology, green tech and more. Government hasn’t gotten out of Silicon Valley’s way; it has remained there all along, quietly funding the next generation of boundary-pushing technology.

The third part of the "mother of all demos."

Today, public debate rages once again on Capitol Hill about computer-aided invasions of privacy. Hollywood spins apocalyptic tales of technology run amok. Americans spend days staring into screens, tracked by the smartphones in our pockets, hooked on social media. Technology companies are among the biggest and richest in the world. It’s a long way from Engelbart’s humble grocery list.

But perhaps the current moment of high-tech angst can once again gain inspiration from the mother of all demos. Later in life, Engelbart described his life’s work as a quest to “help humanity cope better with complexity and urgency.” His solution was a computer that was remarkably different from the others of that era, one that was humane and personal, that augmented human capability rather than boxing it in. And he was able to bring this vision to life because government agencies funded his work.

Now it’s time for another mind-blowing demo of the possible future, one that moves beyond the current adversarial moment between big government and Big Tech. It could inspire people to enlist public and private resources and minds in crafting the next audacious vision for our digital future.

-

Our new podcast “Heat and Light” features Prof. O'Mara discussing this story in depth.


Thursday, November 22. 2018

Programmed: Rules, Codes, and Choreographies in Art, 1965–2018 | #algorithms #creation

Note: open since last September and seen here and there, this exhibition at the Whitney looks at the uses of rules and code in art. It follows a similar, and equally historical, exhibition this year at the MOMA, Thinking Machines. This certainly demonstrates an increasing desire and interest in the historicization of six decades (five in the context of this show) of "art & technologies" (not yet "design & technologies", while "architecture and digital" was done at the CCA).

Those six decades remained almost under the radar for a long time, and there will obviously be a lot of work to do to write this epic!

Interesting in the context of the Whitney exhibition are the many sub-topics developed:

- Rule, Instruction, Algorithm: Ideas as Form /

- Rule, Instruction, Algorithm: Generative Measures /

- Rule, Instruction, Algorithm: Collapsing Instruction and Form /

- Signal, Sequence, Resolution: Image Resequenced /

- Signal, Sequence, Resolution: Liberating the Signal /

- Signal, Sequence, Resolution: Realities Encoded /

- Augmented Reality: Tamiko Thiel

Via Whitney Museum of American Art

-----


Programmed: Rules, Codes, and Choreographies in Art, 1965–2018 establishes connections between works of art based on instructions, spanning over fifty years of conceptual, video, and computational art. The pieces in the exhibition are all “programmed” using instructions, sets of rules, and code, but they also address the use of programming in their creation. The exhibition links two strands of artistic exploration: the first examines the program as instructions, rules, and algorithms with a focus on conceptual art practices and their emphasis on ideas as the driving force behind the art; the second strand engages with the use of instructions and algorithms to manipulate the TV program, its apparatus, and signals or image sequences. Featuring works drawn from the Whitney’s collection, Programmed looks back at predecessors of computational art and shows how the ideas addressed in those earlier works have evolved in contemporary artistic practices. At a time when our world is increasingly driven by automated systems, Programmed traces how rules and instructions in art have both responded to and been shaped by technologies, resulting in profound changes to our image culture.

The exhibition is organized by Christiane Paul, Adjunct Curator of Digital Art, and Carol Mancusi-Ungaro, Melva Bucksbaum Associate Director for Conservation and Research, with Clémence White, curatorial assistant.

Friday, July 13. 2018

Thinking Machines at MOMA | #computing #art&design&architecture #history #avantgarde

Note: following the exhibition Thinking Machines: Art and Design in the Computer Age, 1959–1989, which ran until last April at MOMA, images of the show appeared on the museum's website, with many references to projects. After Archeology of the Digital at CCA in Montreal between 2013 and 2017, this is another good contribution to the history of the field and to the intricate relations between art, design, architecture and computing.

How cultural fields contributed to the shaping of this "mass stacked media" that is now built upon the combinations of computing machines, networks, interfaces, services, data, data centers, people, crowds, etc. is certainly largely underestimated.

Literature is starting to emerge, but it will take time to uncover what remained "under the radar" for a very long period. These fields acted in fact as some sort of "avant-garde", not well estimated or identified enough, even by specialized institutions and at a time when the name "avant-garde" almost became an "s-word"... or was considered "dead".

Unfortunately, no publication seems to have been released in relation to the exhibition, unlike the one at CCA, which is accompanied by two well-documented books.

Via MOMA

-----

Thinking Machines: Art and Design in the Computer Age, 1959–1989

November 13, 2017–

Drawn primarily from MoMA's collection, Thinking Machines: Art and Design in the Computer Age, 1959–1989 brings artworks produced using computers and computational thinking together with notable examples of computer and component design. The exhibition reveals how artists, architects, and designers operating at the vanguard of art and technology deployed computing as a means to reconsider artistic production. The artists featured in Thinking Machines exploited the potential of emerging technologies by inventing systems wholesale or by partnering with institutions and corporations that provided access to cutting-edge machines. They channeled the promise of computing into kinetic sculpture, plotter drawing, computer animation, and video installation. Photographers and architects likewise recognized these technologies' capacity to reconfigure human communities and the built environment.

Thinking Machines includes works by John Cage and Lejaren Hiller, Waldemar Cordeiro, Charles Csuri, Richard Hamilton, Alison Knowles, Beryl Korot, Vera Molnár, Cedric Price, and Stan VanDerBeek, alongside computers designed by Tamiko Thiel and others at Thinking Machines Corporation, IBM, Olivetti, and Apple Computer. The exhibition combines artworks, design objects, and architectural proposals to trace how computers transformed aesthetics and hierarchies, revealing how these thinking machines reshaped art making, working life, and social connections.

Organized by Sean Anderson, Associate Curator, Department of Architecture and Design, and Giampaolo Bianconi, Curatorial Assistant, Department of Media and Performance Art.

-

More images HERE.

Friday, June 22. 2018

The empty brain | #neurosciences #nometaphor

Via Aeon

-----

Your brain does not process information, retrieve knowledge or store memories. In short: your brain is not a computer

Img. by Jan Stepnov (Twenty20).

No matter how hard they try, brain scientists and cognitive psychologists will never find a copy of Beethoven’s 5th Symphony in the brain – or copies of words, pictures, grammatical rules or any other kinds of environmental stimuli. The human brain isn’t really empty, of course. But it does not contain most of the things people think it does – not even simple things such as ‘memories’.

Our shoddy thinking about the brain has deep historical roots, but the invention of computers in the 1940s got us especially confused. For more than half a century now, psychologists, linguists, neuroscientists and other experts on human behaviour have been asserting that the human brain works like a computer.

To see how vacuous this idea is, consider the brains of babies. Thanks to evolution, human neonates, like the newborns of all other mammalian species, enter the world prepared to interact with it effectively. A baby’s vision is blurry, but it pays special attention to faces, and is quickly able to identify its mother’s. It prefers the sound of voices to non-speech sounds, and can distinguish one basic speech sound from another. We are, without doubt, built to make social connections.

A healthy newborn is also equipped with more than a dozen reflexes – ready-made reactions to certain stimuli that are important for its survival. It turns its head in the direction of something that brushes its cheek and then sucks whatever enters its mouth. It holds its breath when submerged in water. It grasps things placed in its hands so strongly it can nearly support its own weight. Perhaps most important, newborns come equipped with powerful learning mechanisms that allow them to change rapidly so they can interact increasingly effectively with their world, even if that world is unlike the one their distant ancestors faced.

Senses, reflexes and learning mechanisms – this is what we start with, and it is quite a lot, when you think about it. If we lacked any of these capabilities at birth, we would probably have trouble surviving.

But here is what we are not born with: information, data, rules, software, knowledge, lexicons, representations, algorithms, programs, models, memories, images, processors, subroutines, encoders, decoders, symbols, or buffers – design elements that allow digital computers to behave somewhat intelligently. Not only are we not born with such things, we also don’t develop them – ever.

We don’t store words or the rules that tell us how to manipulate them. We don’t create representations of visual stimuli, store them in a short-term memory buffer, and then transfer the representation into a long-term memory device. We don’t retrieve information or images or words from memory registers. Computers do all of these things, but organisms do not.

Computers, quite literally, process information – numbers, letters, words, formulas, images. The information first has to be encoded into a format computers can use, which means patterns of ones and zeroes (‘bits’) organised into small chunks (‘bytes’). On my computer, each byte contains 8 bits, and a certain pattern of those bits stands for the letter d, another for the letter o, and another for the letter g. Side by side, those three bytes form the word dog. One single image – say, the photograph of my cat Henry on my desktop – is represented by a very specific pattern of a million of these bytes (‘one megabyte’), surrounded by some special characters that tell the computer to expect an image, not a word.
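The byte-level encoding described above can be made concrete with a minimal sketch. It assumes the ASCII character encoding, which the article does not name, so the specific bit patterns are only one possible illustration.

```python
# Assumption: ASCII encoding; the article does not say which encoding is used.
word = "dog"

# Each letter becomes one byte (8 bits) under ASCII.
encoded = word.encode("ascii")

# Render each byte as a pattern of ones and zeroes.
bit_patterns = [format(byte, "08b") for byte in encoded]

for letter, bits in zip(word, bit_patterns):
    print(letter, bits)

# Reading the three bytes side by side recovers the word.
decoded = encoded.decode("ascii")
print(decoded)
```

Under a different encoding the patterns would differ, but the principle of one fixed pattern per letter would be the same.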

Computers, quite literally, move these patterns from place to place in different physical storage areas etched into electronic components. Sometimes they also copy the patterns, and sometimes they transform them in various ways – say, when we are correcting errors in a manuscript or when we are touching up a photograph. The rules computers follow for moving, copying and operating on these arrays of data are also stored inside the computer. Together, a set of rules is called a ‘program’ or an ‘algorithm’. A group of algorithms that work together to help us do something (like buy stocks or find a date online) is called an ‘application’ – what most people now call an ‘app’.

Forgive me for this introduction to computing, but I need to be clear: computers really do operate on symbolic representations of the world. They really store and retrieve. They really process. They really have physical memories. They really are guided in everything they do, without exception, by algorithms.

Humans, on the other hand, do not – never did, never will. Given this reality, why do so many scientists talk about our mental life as if we were computers?

In his book In Our Own Image (2015), the artificial intelligence expert George Zarkadakis describes six different metaphors people have employed over the past 2,000 years to try to explain human intelligence.

In the earliest one, eventually preserved in the Bible, humans were formed from clay or dirt, which an intelligent god then infused with its spirit. That spirit ‘explained’ our intelligence – grammatically, at least.

The invention of hydraulic engineering in the 3rd century BCE led to the popularity of a hydraulic model of human intelligence, the idea that the flow of different fluids in the body – the ‘humours’ – accounted for both our physical and mental functioning. The hydraulic metaphor persisted for more than 1,600 years, handicapping medical practice all the while.

By the 1500s, automata powered by springs and gears had been devised, eventually inspiring leading thinkers such as René Descartes to assert that humans are complex machines. In the 1600s, the British philosopher Thomas Hobbes suggested that thinking arose from small mechanical motions in the brain. By the 1700s, discoveries about electricity and chemistry led to new theories of human intelligence – again, largely metaphorical in nature. In the mid-1800s, inspired by recent advances in communications, the German physicist Hermann von Helmholtz compared the brain to a telegraph.

"The mathematician John von Neumann stated flatly that the function of the human nervous system is ‘prima facie digital’, drawing parallel after parallel between the components of the computing machines of the day and the components of the human brain"

Each metaphor reflected the most advanced thinking of the era that spawned it. Predictably, just a few years after the dawn of computer technology in the 1940s, the brain was said to operate like a computer, with the role of physical hardware played by the brain itself and our thoughts serving as software. The landmark event that launched what is now broadly called ‘cognitive science’ was the publication of Language and Communication (1951) by the psychologist George Miller. Miller proposed that the mental world could be studied rigorously using concepts from information theory, computation and linguistics.

This kind of thinking was taken to its ultimate expression in the short book The Computer and the Brain (1958), in which the mathematician John von Neumann stated flatly that the function of the human nervous system is ‘prima facie digital’. Although he acknowledged that little was actually known about the role the brain played in human reasoning and memory, he drew parallel after parallel between the components of the computing machines of the day and the components of the human brain.

Propelled by subsequent advances in both computer technology and brain research, an ambitious multidisciplinary effort to understand human intelligence gradually developed, firmly rooted in the idea that humans are, like computers, information processors. This effort now involves thousands of researchers, consumes billions of dollars in funding, and has generated a vast literature consisting of both technical and mainstream articles and books. Ray Kurzweil’s book How to Create a Mind: The Secret of Human Thought Revealed (2013) exemplifies this perspective, speculating about the ‘algorithms’ of the brain, how the brain ‘processes data’, and even how it superficially resembles integrated circuits in its structure.

The information processing (IP) metaphor of human intelligence now dominates human thinking, both on the street and in the sciences. There is virtually no form of discourse about intelligent human behaviour that proceeds without employing this metaphor, just as no form of discourse about intelligent human behaviour could proceed in certain eras and cultures without reference to a spirit or deity. The validity of the IP metaphor in today’s world is generally assumed without question.

But the IP metaphor is, after all, just another metaphor – a story we tell to make sense of something we don’t actually understand. And like all the metaphors that preceded it, it will certainly be cast aside at some point – either replaced by another metaphor or, in the end, replaced by actual knowledge.

Just over a year ago, on a visit to one of the world’s most prestigious research institutes, I challenged researchers there to account for intelligent human behaviour without reference to any aspect of the IP metaphor. They couldn’t do it, and when I politely raised the issue in subsequent email communications, they still had nothing to offer months later. They saw the problem. They didn’t dismiss the challenge as trivial. But they couldn’t offer an alternative. In other words, the IP metaphor is ‘sticky’. It encumbers our thinking with language and ideas that are so powerful we have trouble thinking around them.

The faulty logic of the IP metaphor is easy enough to state. It is based on a faulty syllogism – one with two reasonable premises and a faulty conclusion. Reasonable premise #1: all computers are capable of behaving intelligently. Reasonable premise #2: all computers are information processors. Faulty conclusion: all entities that are capable of behaving intelligently are information processors.
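Why the inference fails can be shown with a small counterexample. The sketch below uses a toy universe invented purely for illustration (the names `laptop`, `mainframe` and `organism` are hypothetical): both premises hold, yet the conclusion does not. It only demonstrates that the form of the argument is invalid; it does not by itself settle what organisms actually are.

```python
# Hypothetical toy universe, invented purely for illustration.
computers = {"laptop", "mainframe"}
intelligent = {"laptop", "mainframe", "organism"}
information_processors = {"laptop", "mainframe"}

# Premise 1: all computers are capable of behaving intelligently.
premise_1 = computers <= intelligent

# Premise 2: all computers are information processors.
premise_2 = computers <= information_processors

# Conclusion: all intelligent entities are information processors.
conclusion = intelligent <= information_processors


def syllogism_is_refuted() -> bool:
    """True when both premises hold but the conclusion fails."""
    return premise_1 and premise_2 and not conclusion


print(premise_1, premise_2, conclusion)
print(syllogism_is_refuted())
```

A single universe in which the premises are true and the conclusion false is enough to show that the conclusion does not follow from the premises.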

Setting aside the formal language, the idea that humans must be information processors just because computers are information processors is just plain silly, and when, some day, the IP metaphor is finally abandoned, it will almost certainly be seen that way by historians, just as we now view the hydraulic and mechanical metaphors as silly.

If the IP metaphor is so silly, why is it so sticky? What is stopping us from brushing it aside, just as we might brush aside a branch that was blocking our path? Is there a way to understand human intelligence without leaning on a flimsy intellectual crutch? And what price have we paid for leaning so heavily on this particular crutch for so long? The IP metaphor, after all, has been guiding the writing and thinking of a large number of researchers in multiple fields for decades. At what cost?

In a classroom exercise I have conducted many times over the years, I begin by recruiting a student to draw a detailed picture of a dollar bill – ‘as detailed as possible’, I say – on the blackboard in front of the room. When the student has finished, I cover the drawing with a sheet of paper, remove a dollar bill from my wallet, tape it to the board, and ask the student to repeat the task. When he or she is done, I remove the cover from the first drawing, and the class comments on the differences.

Because you might never have seen a demonstration like this, or because you might have trouble imagining the outcome, I have asked Jinny Hyun, one of the student interns at the institute where I conduct my research, to make the two drawings. Here is her drawing ‘from memory’ (notice the metaphor):

And here is the drawing she subsequently made with a dollar bill present:

Jinny was as surprised by the outcome as you probably are, but it is typical. As you can see, the drawing made in the absence of the dollar bill is horrible compared with the drawing made from an exemplar, even though Jinny has seen a dollar bill thousands of times.

What is the problem? Don’t we have a ‘representation’ of the dollar bill ‘stored’ in a ‘memory register’ in our brains? Can’t we just ‘retrieve’ it and use it to make our drawing?

Obviously not, and a thousand years of neuroscience will never locate a representation of a dollar bill stored inside the human brain for the simple reason that it is not there to be found.

"The idea that memories are stored in individual neurons is preposterous: how and where is the memory stored in the cell?"

A wealth of brain studies tells us, in fact, that multiple and sometimes large areas of the brain are often involved in even the most mundane memory tasks. When strong emotions are involved, millions of neurons can become more active. In a 2016 study of survivors of a plane crash by the University of Toronto neuropsychologist Brian Levine and others, recalling the crash increased neural activity in ‘the amygdala, medial temporal lobe, anterior and posterior midline, and visual cortex’ of the passengers.

The idea, advanced by several scientists, that specific memories are somehow stored in individual neurons is preposterous; if anything, that assertion just pushes the problem of memory to an even more challenging level: how and where, after all, is the memory stored in the cell?

So what is occurring when Jinny draws the dollar bill in its absence? If Jinny had never seen a dollar bill before, her first drawing would probably not have resembled the second drawing at all. Having seen dollar bills before, she was changed in some way. Specifically, her brain was changed in a way that allowed her to visualise a dollar bill – that is, to re-experience seeing a dollar bill, at least to some extent.

The difference between the two diagrams reminds us that visualising something (that is, seeing something in its absence) is far less accurate than seeing something in its presence. This is why we’re much better at recognising than recalling. When we re-member something (from the Latin re, ‘again’, and memorari, ‘be mindful of’), we have to try to relive an experience; but when we recognise something, we must merely be conscious of the fact that we have had this perceptual experience before.

Perhaps you will object to this demonstration. Jinny had seen dollar bills before, but she hadn’t made a deliberate effort to ‘memorise’ the details. Had she done so, you might argue, she could presumably have drawn the second image without the bill being present. Even in this case, though, no image of the dollar bill has in any sense been ‘stored’ in Jinny’s brain. She has simply become better prepared to draw it accurately, just as, through practice, a pianist becomes more skilled in playing a concerto without somehow inhaling a copy of the sheet music.

From this simple exercise, we can begin to build the framework of a metaphor-free theory of intelligent human behaviour – one in which the brain isn’t completely empty, but is at least empty of the baggage of the IP metaphor.

As we navigate through the world, we are changed by a variety of experiences. Of special note are experiences of three types: (1) we observe what is happening around us (other people behaving, sounds of music, instructions directed at us, words on pages, images on screens); (2) we are exposed to the pairing of unimportant stimuli (such as sirens) with important stimuli (such as the appearance of police cars); (3) we are punished or rewarded for behaving in certain ways.

We become more effective in our lives if we change in ways that are consistent with these experiences – if we can now recite a poem or sing a song, if we are able to follow the instructions we are given, if we respond to the unimportant stimuli more like we do to the important stimuli, if we refrain from behaving in ways that were punished, if we behave more frequently in ways that were rewarded.

Misleading headlines notwithstanding, no one really has the slightest idea how the brain changes after we have learned to sing a song or recite a poem. But neither the song nor the poem has been ‘stored’ in it. The brain has simply changed in an orderly way that now allows us to sing the song or recite the poem under certain conditions. When called on to perform, neither the song nor the poem is in any sense ‘retrieved’ from anywhere in the brain, any more than my finger movements are ‘retrieved’ when I tap my finger on my desk. We simply sing or recite – no retrieval necessary.

A few years ago, I asked the neuroscientist Eric Kandel of Columbia University – winner of a Nobel Prize for identifying some of the chemical changes that take place in the neuronal synapses of the Aplysia (a marine snail) after it learns something – how long he thought it would take us to understand how human memory works. He quickly replied: ‘A hundred years.’ I didn’t think to ask him whether he thought the IP metaphor was slowing down neuroscience, but some neuroscientists are indeed beginning to think the unthinkable – that the metaphor is not indispensable.

A few cognitive scientists – notably Anthony Chemero of the University of Cincinnati, the author of Radical Embodied Cognitive Science (2009) – now completely reject the view that the human brain works like a computer. The mainstream view is that we, like computers, make sense of the world by performing computations on mental representations of it, but Chemero and others describe another way of understanding intelligent behaviour – as a direct interaction between organisms and their world.

My favourite example of the dramatic difference between the IP perspective and what some now call the ‘anti-representational’ view of human functioning involves two different ways of explaining how a baseball player manages to catch a fly ball – beautifully explicated by Michael McBeath, now at Arizona State University, and his colleagues in a 1995 paper in Science. The IP perspective requires the player to formulate an estimate of various initial conditions of the ball’s flight – the force of the impact, the angle of the trajectory, that kind of thing – then to create and analyse an internal model of the path along which the ball will likely move, then to use that model to guide and adjust motor movements continuously in time in order to intercept the ball.

That is all well and good if we functioned as computers do, but McBeath and his colleagues gave a simpler account: to catch the ball, the player simply needs to keep moving in a way that keeps the ball in a constant visual relationship with respect to home plate and the surrounding scenery (technically, in a ‘linear optical trajectory’). This might sound complicated, but it is actually incredibly simple, and completely free of computations, representations and algorithms.

Two determined psychology professors at Leeds Beckett University in the UK – Andrew Wilson and Sabrina Golonka – include the baseball example among many others that can be looked at simply and sensibly outside the IP framework. They have been blogging for years about what they call a ‘more coherent, naturalised approach to the scientific study of human behaviour… at odds with the dominant cognitive neuroscience approach’. This is far from a movement, however; the mainstream cognitive sciences continue to wallow uncritically in the IP metaphor, and some of the world’s most influential thinkers have made grand predictions about humanity’s future that depend on the validity of the metaphor.

One prediction – made by the futurist Kurzweil, the physicist Stephen Hawking and the neuroscientist Randal Koene, among others – is that, because human consciousness is supposedly like computer software, it will soon be possible to download human minds to a computer, in the circuits of which we will become immensely powerful intellectually and, quite possibly, immortal. This concept drove the plot of the dystopian movie Transcendence (2014) starring Johnny Depp as the Kurzweil-like scientist whose mind was downloaded to the internet – with disastrous results for humanity.

Fortunately, because the IP metaphor is not even slightly valid, we will never have to worry about a human mind going amok in cyberspace; alas, we will also never achieve immortality through downloading. This is not only because of the absence of consciousness software in the brain; there is a deeper problem here – let’s call it the uniqueness problem – which is both inspirational and depressing.

Because neither ‘memory banks’ nor ‘representations’ of stimuli exist in the brain, and because all that is required for us to function in the world is for the brain to change in an orderly way as a result of our experiences, there is no reason to believe that any two of us are changed the same way by the same experience. If you and I attend the same concert, the changes that occur in my brain when I listen to Beethoven’s 5th will almost certainly be completely different from the changes that occur in your brain. Those changes, whatever they are, are built on the unique neural structure that already exists, each structure having developed over a lifetime of unique experiences.

This is why, as Sir Frederic Bartlett demonstrated in his book Remembering (1932), no two people will repeat a story they have heard the same way and why, over time, their recitations of the story will diverge more and more. No ‘copy’ of the story is ever made; rather, each individual, upon hearing the story, changes to some extent – enough so that when asked about the story later (in some cases, days, months or even years after Bartlett first read them the story) – they can re-experience hearing the story to some extent, although not very well (see the first drawing of the dollar bill, above).

This is inspirational, I suppose, because it means that each of us is truly unique, not just in our genetic makeup, but even in the way our brains change over time. It is also depressing, because it makes the task of the neuroscientist daunting almost beyond imagination. For any given experience, orderly change could involve a thousand neurons, a million neurons or even the entire brain, with the pattern of change different in every brain.

Worse still, even if we had the ability to take a snapshot of all of the brain’s 86 billion neurons and then to simulate the state of those neurons in a computer, that vast pattern would mean nothing outside the body of the brain that produced it. This is perhaps the most egregious way in which the IP metaphor has distorted our thinking about human functioning. Whereas computers do store exact copies of data – copies that can persist unchanged for long periods of time, even if the power has been turned off – the brain maintains our intellect only as long as it remains alive. There is no on-off switch. Either the brain keeps functioning, or we disappear. What’s more, as the neurobiologist Steven Rose pointed out in The Future of the Brain (2005), a snapshot of the brain’s current state might also be meaningless unless we knew the entire life history of that brain’s owner – perhaps even about the social context in which he or she was raised.

Think how difficult this problem is. To understand even the basics of how the brain maintains the human intellect, we might need to know not just the current state of all 86 billion neurons and their 100 trillion interconnections, not just the varying strengths with which they are connected, and not just the states of more than 1,000 proteins that exist at each connection point, but how the moment-to-moment activity of the brain contributes to the integrity of the system. Add to this the uniqueness of each brain, brought about in part because of the uniqueness of each person’s life history, and Kandel’s prediction starts to sound overly optimistic. (In a recent op-ed in The New York Times, the neuroscientist Kenneth Miller suggested it will take ‘centuries’ just to figure out basic neuronal connectivity.)

Meanwhile, vast sums of money are being raised for brain research, based in some cases on faulty ideas and promises that cannot be kept. The most blatant instance of neuroscience gone awry, documented recently in a report in Scientific American, concerns the $1.3 billion Human Brain Project launched by the European Union in 2013. Convinced by the charismatic Henry Markram that he could create a simulation of the entire human brain on a supercomputer by the year 2023, and that such a model would revolutionise the treatment of Alzheimer’s disease and other disorders, EU officials funded his project with virtually no restrictions. Less than two years into it, the project turned into a ‘brain wreck’, and Markram was asked to step down.

We are organisms, not computers. Get over it. Let’s get on with the business of trying to understand ourselves, but without being encumbered by unnecessary intellectual baggage. The IP metaphor has had a half-century run, producing few, if any, insights along the way. The time has come to hit the DELETE key.

Wednesday, June 06. 2018

What Happened When Stephen Hawking Threw a Cocktail Party for Time Travelers (2009) | #time #dimensions

Note: speaking about time, not in time, out of time, etc., and as a late tribute to Stephen Hawking, here is his mischievous experiment on the possibility of time travel.

To be seen on Open Culture.

Via Open Culture

-----

Who among us has never fantasized about traveling through time? But then, who among us hasn't traveled through time? Every single one of us is a time traveler, technically speaking, moving as we do through one second per second, one hour per hour, one day per day. Though I never personally heard the late Stephen Hawking point out that fact, I feel almost certain that he did, especially in light of one particular piece of scientific performance art he pulled off in 2009: throwing a cocktail party for time travelers — the proper kind, who come from the future.

"Hawking’s party was actually an experiment on the possibility of time travel," writes Atlas Obscura's Anne Ewbank. "Along with many physicists, Hawking had mused about whether going forward and back in time was possible. And what time traveler could resist sipping champagne with Stephen Hawking himself?" "

By publishing the party invitation in his mini-series Into the Universe With Stephen Hawking, Hawking hoped to lure futuristic time travelers. ‘You are cordially invited to a reception for Time Travellers,’ the invitation read, along with the date, time, and coordinates for the event. The theory, Hawking explained, was that only someone from the future would be able to attend."

Alas, no time travelers turned up. Since someone possessed of that technology at any point in the future would theoretically be able to attend, does Hawking's lonely party, which you can see in the clip above, prove that time travel will never become possible? Maybe — or maybe the potential time-travelers of the future know something about the space-time-continuum-threatening risks of the practice that we don't. As for Dr. Hawking, I have to imagine that he came away satisfied from the shindig, even though his hoped-for Ms. Universe from the future never walked through the door. “I like simple experiments… and champagne,” he said, and this champagne-laden simple experiment will continue to remind the rest of us to enjoy our time on Earth, wherever in that time we may find ourselves.

-

Related Content:

The Lighter Side of Stephen Hawking: The Physicist Cracks Jokes and a Smile with John Oliver

Professor Ronald Mallett Wants to Build a Time Machine in this Century … and He’s Not Kidding

Based in Seoul, Colin Marshall writes and broadcasts on cities and culture. His projects include the book The Stateless City: a Walk through 21st-Century Los Angeles and the video series The City in Cinema. Follow him on Twitter at @colinmarshall or on Facebook.

Tuesday, May 29. 2018

A Big Leap for an Artificial Leaf | #artificial #leaf #material

Note: some progressive news... Published almost two years ago (I find it interesting to bring things back, out of their "buzz time", and possibly check what happened to them next), the article presents some advances on the "bionic leaf". One step closer to the creation of artificial leaves, so to say.

The interesting thing is that the research has deepened and continues towards agriculture: on-site soil enrichment to boost growth, rather than treating the soil with fertilizers and chemicals transported from afar. Behind this, though, lies some genetic manipulation (for good? for bad?): "Expanding the reach of the bionic leaf".

-----

A new system for making liquid fuel from sunlight, water, and air is a promising step for solar fuels.

The bionic leaf is one step closer to reality.

Daniel Nocera, a professor of energy science at Harvard who pioneered the use of artificial photosynthesis, says that he and his colleague Pamela Silver have devised a system that completes the process of making liquid fuel from sunlight, carbon dioxide, and water. And they’ve done it at an efficiency of 10 percent, using pure carbon dioxide—in other words, one-tenth of the energy in sunlight is captured and turned into fuel. That is much higher than natural photosynthesis, which converts about 1 percent of solar energy into the carbohydrates used by plants, and it could be a milestone in the shift away from fossil fuels. The new system is described in a new paper in Science.

“Bill Gates has said that to solve our energy problems, someday we need to do what photosynthesis does, and that someday we might be able to do it even more efficiently than plants,” says Nocera. “That someday has arrived.”

In nature, plants use sunlight to make carbohydrates from carbon dioxide and water. Artificial photosynthesis seeks to use the same inputs—solar energy, water, and carbon dioxide—to produce energy-dense liquid fuels. Nocera and Silver’s system uses a pair of catalysts to split water into oxygen and hydrogen, and feeds the hydrogen to bacteria along with carbon dioxide. The bacteria, a microörganism that has been bioengineered to specific characteristics, converts the carbon dioxide and hydrogen into liquid fuels.

Several companies, including Joule Unlimited and LanzaTech, are working to produce biofuels from carbon dioxide and hydrogen, but they use bacteria that consume carbon monoxide or carbon dioxide, rather than hydrogen. Nocera’s system, he says, can operate at lower temperatures, higher efficiency, and lower costs.

Nocera’s latest work “is really quite amazing,” says Peidong Yang of the University of California, Berkeley. Yang has developed a similar system with much lower efficiency. “The high performance of this system is unparalleled” in any other artificial photosynthesis system reported to date, he says.

The new system can use pure carbon dioxide in gas form, or carbon dioxide captured from the air—which means it could be carbon-neutral, introducing no additional greenhouse gases into the atmosphere. “The 10 percent number, that’s using pure CO2,” says Nocera. Allowing the bacteria themselves to capture carbon dioxide from the air, he adds, results in an efficiency of 3 to 4 percent—still significantly higher than natural photosynthesis. “That’s the power of biology: these bioörganisms have natural CO2 concentration mechanisms.”

Nocera’s research is distinct from the work being carried out by the Joint Center for Artificial Photosynthesis, a U.S. Department of Energy-funded program that seeks to use inorganic catalysts, rather than bacteria, to convert hydrogen and carbon dioxide to liquid fuel. According to Dick Co, who heads the Solar Fuels Institute at Northwestern University, the innovation of the new system lies not only in its superior performance but also in its fusing of two usually separate fields: inorganic chemistry (to split water) and biology (to convert hydrogen and carbon dioxide into fuel). “What’s really exciting is the hybrid approach” to artificial photosynthesis, says Co. “It’s exciting to see chemists pairing with biologists to advance the field.”

Commercializing the technology will likely take years. In any case, the prospect of turning sunlight into liquid fuel suddenly looks a lot closer.

Wednesday, April 18. 2018

A Turing Machine Handmade Out of Wood | #history #computing #openculture

Note: Turing Machines are now undoubtedly part of pop culture, aren't they?

Via Open Culture (via Boing Boing)

-----

It took Richard Ridel six months of tinkering in his workshop to create this contraption--a mechanical Turing machine made out of wood. The silent video above shows how the machine works. But if you're left hanging, wanting to know more, I'd recommend reading Ridel's fifteen page paper where he carefully documents why he built the wooden Turing machine, and what pieces and steps went into the construction.

If this video prompts you to ask, what exactly is a Turing Machine?, also consider adding this short primer by philosopher Mark Jago to your media diet.
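For readers who prefer code to woodwork, here is a minimal sketch of the same idea in Python. It is not a model of Ridel's machine or of anything in his paper: the function name, the rule table format and the binary-increment example are all assumptions chosen for illustration. A Turing machine is simply a tape, a read/write head, a current state, and a table saying what to write, where to move and which state comes next.

```python
from collections import defaultdict


def run_turing_machine(rules, tape, state="start", blank="_", max_steps=1000):
    """Run a one-tape Turing machine and return the non-blank part of the tape.

    rules maps (state, symbol) -> (symbol_to_write, move, next_state),
    where move is -1 (one cell left) or +1 (one cell right).
    The machine stops when it enters the state "halt" (or after max_steps).
    """
    # The tape is unbounded in both directions; unseen cells read as blank.
    cells = defaultdict(lambda: blank, enumerate(tape))
    head = 0
    for _ in range(max_steps):
        if state == "halt":
            break
        write, move, state = rules[(state, cells[head])]
        cells[head] = write
        head += move
    used = [i for i, symbol in cells.items() if symbol != blank]
    if not used:
        return ""
    return "".join(cells[i] for i in range(min(used), max(used) + 1))


# Hypothetical example table: add one to a binary number.
# "start" walks right to the end of the number, "carry" adds one going left.
INCREMENT_RULES = {
    ("start", "0"): ("0", +1, "start"),
    ("start", "1"): ("1", +1, "start"),
    ("start", "_"): ("_", -1, "carry"),
    ("carry", "1"): ("0", -1, "carry"),
    ("carry", "0"): ("1", -1, "halt"),
    ("carry", "_"): ("1", -1, "halt"),
}

print(run_turing_machine(INCREMENT_RULES, "1011"))
```

Everything the machine "knows" sits in the rule table; swapping the table for another one turns the same loop into a different machine.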

Related Content:

Free Online Computer Science Courses

The Books on Young Alan Turing’s Reading List: From Lewis Carroll to Modern Chromatics

The LEGO Turing Machine Gives a Quick Primer on How Your Computer Works

The Enigma Machine: How Alan Turing Helped Break the Unbreakable Nazi Code

Thursday, April 12. 2018

Vlatko Vedral - Decoding Reality | #quantum #information #thermodynamics

More about Quantum Information by Vlatko Vedral and his book Decoding Reality.

Via Legalise Freedom

-----

Listen to the discussion online HERE (Youtube, 1h02).

...

Vlatko Vedral on Decoding Reality -- The Universe as Quantum Information. What is the nature of reality? Why is there something rather than nothing? These are the deepest questions that human beings have asked, that thinkers East and West have pondered over millennia. For a physicist, all the world is information. The Universe and its workings are the ebb and flow of information. We are all transient patterns of information, passing on the blueprints for our basic forms to future generations using a digital code called DNA.

Decoding Reality asks some of the deepest questions about the Universe and considers the implications of interpreting it in terms of information. It explains the nature of information, the idea of entropy, and the roots of this thinking in thermodynamics. It describes the bizarre effects of quantum behaviour such as 'entanglement', which Einstein called 'spooky action at a distance', and explores cutting-edge work on harnessing quantum effects in hyperfast quantum computers. It also shows how recent evidence suggests that the weirdness of the quantum world, once thought limited to the tiniest scales, may reach up into our reality.

The book concludes by considering the answer to the ultimate question: where did all of the information in the Universe come from? The answers considered are exhilarating and challenge our concept of the nature of matter, of time, of free will, and of reality itself.

Tuesday, April 10. 2018

I’m building a machine that breaks the rules of reality | #information #thermodynamics

Note: the title and beginning of the article are very promising, or teasing, so to say... But unfortunately it is not freely accessible without a subscription to the New Scientist. Yet as it promises an interesting read, I archive it on | rblg for the record and for future reading.

In the meantime, here is also an interesting interview (2010) about information with physicist Vlatko Vedral for The Guardian, from the time when he published his book Decoding Reality.

Via The Guardian

-----

...

And an extract from the article on the New Scientist:

I’m building a machine that breaks the rules of reality

We thought only fools messed with the cast-iron laws of thermodynamics – but quantum trickery is rewriting the rulebook, says physicist Vlatko Vedral.

Martin Leon Barreto

By Vlatko Vedral

A FEW years ago, I had an idea that may sound a little crazy: I thought I could see a way to build an engine that works harder than the laws of physics allow.

You would be within your rights to baulk at this proposition. After all, the efficiency of engines is governed by thermodynamics, the most solid pillar of physics. This is one set of natural laws you don’t mess with.

Yet if I leave my office at the University of Oxford and stroll down the corridor, I can now see an engine that pays no heed to these laws. It is a machine of considerable power and intricacy, with green lasers and ions instead of oil and pistons. There is a long road ahead, but I believe contraptions like this one will shape the future of technology.

Better, more efficient computers would be just the start. The engine is also a harbinger of a new era in science. To build it, we have had to uncover a field called quantum thermodynamics, one set to retune our ideas about why life, the universe – everything, in fact – are the way they are.

Thermodynamics is the theory that describes the interplay between temperature, heat, energy and work. As such, it touches on pretty much everything, from your brain to your muscles, car engines to kitchen blenders, stars to quasars. It provides a base from which we can work out what sorts of things do and don’t happen in the universe. If you eat a burger, you must burn off the calories – or …

Friday, December 15. 2017

New MoMA show plots the impact of computers on architecture and design | #design #computers #history

Note: with a bit of delay (delay can be an interesting attitude nowadays), but the show is still open... and the content still very interesting!

Via Archpaper

-----

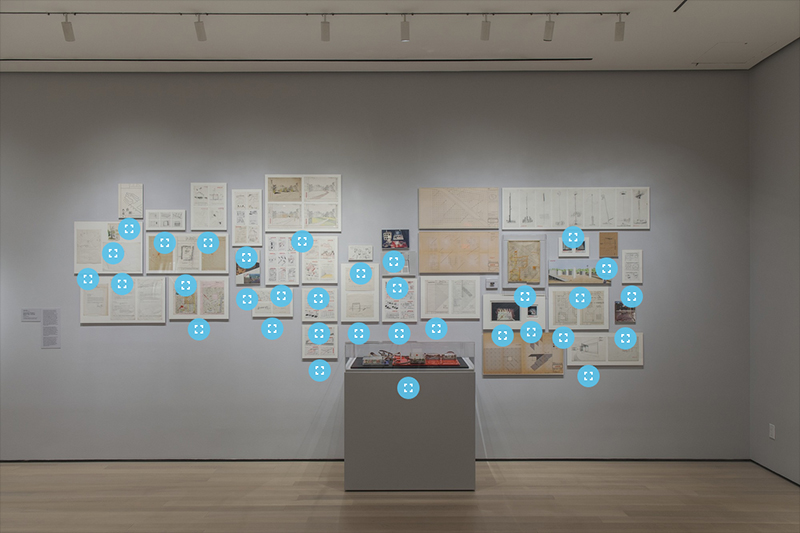

New MoMA show plots the impact of computers on architecture and design. Pictured here: “Menu 23” layout of Cedric Price's Generator Project. (Courtesy California College of the Arts archive)

The beginnings of digital drafting and computational design will be on display at the Museum of Modern Art (MoMA) starting November 13th, as the museum presents Thinking Machines: Art and Design in the Computer Age, 1959–1989. Spanning 30 years of works by artists, photographers, and architects, Thinking Machines captures the postwar period of reconciliation between traditional techniques and the advent of the computer age.

Organized by Sean Anderson, associate curator in the museum’s Department of Architecture and Design, and Giampaolo Bianconi, a curatorial assistant in the Department of Media and Performance Art, the exhibition examines how computer-aided design became permanently entangled with art, industrial design, and space planning.

Drawings, sketches, and models from Cedric Price’s 1978-80 Generator Project, the never-built “first intelligent building project,” will also be shown. A response to a prompt put out by the Gilman Paper Corporation for its White Oak, Florida, site to house theater and dance performances alongside travelling artists, Price’s Generator proposal sought to stimulate innovation by constantly shifting arrangements.

Ceding control of the floor plan to a master computer program and crane system, a series of 13-by-13-foot rooms would have been continuously rearranged according to the users’ needs. Only constrained by a general set of Price’s design guidelines, Generator’s program would even have been capable of rearranging rooms on its own if it felt the layout hadn’t been changed frequently enough. Raising important questions about the interaction between a space and its occupants, Generator House laid the groundwork for computational architecture and smart building systems.

R. Buckminster Fuller’s 1970 work for Radical Hardware magazine will also appear. (Courtesy PBS)

Thinking Machines: Art and Design in the Computer Age, 1959–1989 will be running from November 13th to April 8th, 2018. MoMA members can preview the show from November 10th through the 12th.

fabric | rblg

This blog is the survey website of fabric | ch - studio for architecture, interaction and research.

We curate and reblog articles, researches, writings, exhibitions and projects that we notice and find interesting during our everyday practice and readings.

Most articles concern the intertwined fields of architecture, territory, art, interaction design, thinking and science. From time to time, we also publish documentation about our own work and research, immersed among these related resources and inspirations.

This website is used by fabric | ch as archive, references and resources. It is shared with all those interested in the same topics as we are, in the hope that they will also find valuable references and content in it.
