Sticky Postings

All 242 fabric | rblg updated tags | #fabric|ch #wandering #reading

By fabric | ch

-----

As we continue to lack a decent search engine on this blog and as we don't use a "tag cloud" ... This post could help navigate through the updated content on | rblg (as of 09.2023), via all its tags!

FIND BELOW ALL THE TAGS THAT CAN BE USED TO NAVIGATE IN THE CONTENTS OF | RBLG BLOG:

(to be seen just below if you're navigating on the blog's html pages or here for rss readers)

--

Note that we had to hit the "pause" button on our reblogging activities a while ago (mainly because we ran out of time, but also because we received complaints from a major image stock company about some images that were displayed on | rblg, an activity that we felt was still "fair use" - we've never made any money or advertised on this site).

Nevertheless, we continue to publish from time to time information on the activities of fabric | ch, or content directly related to its work (documentation).

Thursday, June 29. 2023

Atomized (re-)Staging by fabric | ch at Centre Pompidou | #exhibitions #digital #revival #iconoclash #immatériaux

Note: At the invitation of Marcella Lista and Livia Nolasco-Roszas (curators), fabric | ch presents Atomized (re-)Staging during the Moviment festival-exhibition at the Centre Pompidou in Paris, as part of a weekend devoted to revisiting the landmark exhibition Les Immatériaux (which took place at Beaubourg in 1985).

Via @ptrckkllr and @beyondmatereu (research project & exhibition: Beyond Matter)

-----

Wednesday, January 26. 2022

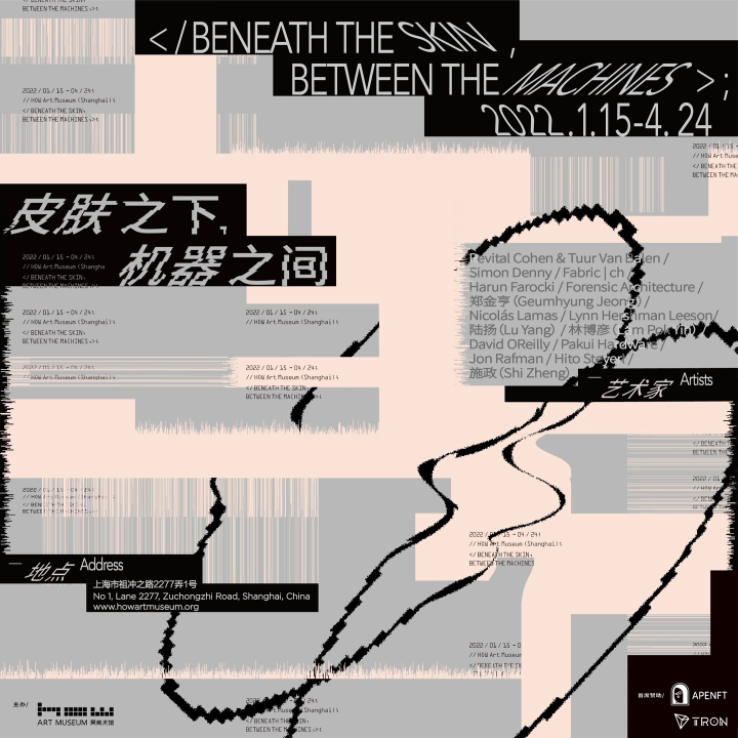

Platform of Future-Past (2022) at HOW Art Museum in Shanghai | #data #monitering #installation

Note:

The exhibition Beneath the Skin, Between the Machines just opened at HOW Art Museum (Hao Art Gallery), and fabric | ch was glad to be invited to create a large installation for the show. The installation is also intended to be used during a symposium that will be fully part of the exhibition (its panels and talks will take place within the installation). The exhibition will be open from January 15 to April 24, 2022 in Shanghai.

Along with a selection of Chinese and international artists, curator Liaoliao Fu asked us to develop a proposal based on a former architectural device, Public Platform of Future-Past, which in itself was inspired by an older installation of ours... Heterochrony.

This new work, entitled Platform of Future-Past, deals with the temporal oddity that can be produced and induced by the recording, accumulation and storage of monitoring data, which contributes to leaving partial traces of "reality", functioning as spectres of the past.

We are proud to present this work alongside artists such as Hito Steyerl, Geumhyung Jeong, Lu Yang, Jon Rafman, Forensic Architecture, Lynn Hershman Leeson and Harun Farocki.

...

Last but not least, and somehow a "sign of the times", this is the first exhibition in which we are participating whose main financial backers are a blockchain and crypto-finance company as well as an NFT platform, both based in China.

More information about the symposium will be published.

Via Pro Helvetia

-----


Curatorial Statement

By Fu Liaoliao and the curatorial team

“Man is only man at the surface. Remove the skin, dissect, and immediately you come to machinery.” When Paul Valéry wrote this down, he might not have foreseen that human beings – a biological organism – would indeed be incorporated into machinery at such a profound level in a highly informationized and computerized time and space. In a sense, it is just as Marx predicted: a conscious connection of machine[1]. Today, the machine is no longer confined to any material form; instead, it presents itself in the forms of data, coding and algorithm – virtually everything that is “operable”, “calculable” and “thinkable”. Ever since the idea of the cyborg emerged, the man-machine relation has always been intertwined with our imagination, vision and fear of the past, present and future.

In a sense, machine represents a projection of human beings. We human beings transfer ideas of slavery and freedom to other beings, namely a machine that could replace human beings as technical entities or tools. Opposite (and similar, in a sense,) to the “embodiment” of machine, organic beings such as human beings are hurrying to move towards “disembodiment”. Everything pertinent to our body and behavior can be captured and calculated as data. In the meantime, the social system that human beings have created never stops absorbing new technologies. During the process of trial and error, the difference and fortuity accompanying the “new” are taken in and internalized by the system. “Every accident, every impulse, every error is productive (of the social system),”[2] and hence is predictable and calculable. Within such a system, differences tend to be obfuscated and erased, but meanwhile due to highly professional complexities embedded in different disciplines/fields, genuine interdisciplinary communication is becoming increasingly difficult, if not impossible.

As a result, technologies today are highly centralized, homogenized, sophisticated and commonized. They penetrate deeply into our skin, but beyond knowing, sensing and thinking. On the one hand, the exhibition probes into the reconfiguration of man by technologies through what’s “beneath the skin”; and on the other, encourages people to rethink the position and situation we’re in under this context through what’s “between the machines”. As an art institute located at Shanghai Zhangjiang Hi-Tech Industrial Development Zone, one of the most important hi-tech parks in China, HOW Art Museum intends to carve out an open rather than enclosed field through the exhibition, inviting the public to immerse themselves and ponder upon the questions such as “How people touch machines?”, “What the machines think of us?” and “Where to position art and its practice in the face of the overwhelming presence of technology and the intricate technological reality?”

Departing from these issues, the exhibition presents a selection of recent works of Revital Cohen & Tuur Van Balen, Simon Denny, Harun Farocki, Nicolás Lamas, Lynn Hershman Leeson, Lu Yang, Lam Pok Yin, David OReilly, Pakui Hardware, Jon Rafman, Hito Steyerl, Shi Zheng and Geumhyung Jeong. In the meantime, it intends to set up a “panel installation”, specially created by fabric | ch for this exhibition, trying to offer a space and occasion for decentralized observation and participation in the above discussions. Conversations and actions are to be activated as well as captured, observed and archived at the same time.

[1] Karl Marx, “Fragment on Machines”, Foundations of a Critique of Political Economy

[2] Niklas Luhmann, Social Systems

-----

Schedule

Duration: January 15-April 24, 2022

Artists: Revital Cohen & Tuur Van Balen, Simon Denny, fabric | ch, Harun Farocki, Geumhyung Jeong, Nicolás Lamas, Lynn Hershman Leeson, Lu Yang, Lam Pok Yin, David OReilly, Pakui Hardware, Jon Rafman, Hito Steyerl, Shi Zheng

Curator: Fu Liaoliao

Organizer: HOW Art Museum, Shanghai

Lead Sponsor: APENFT Foundation

Swiss participation is supported by Pro Helvetia Shanghai, Swiss Arts Council.

(Swiss speakers and performers appearing in the educational events will be updated soon.)

-----

Work by fabric | ch

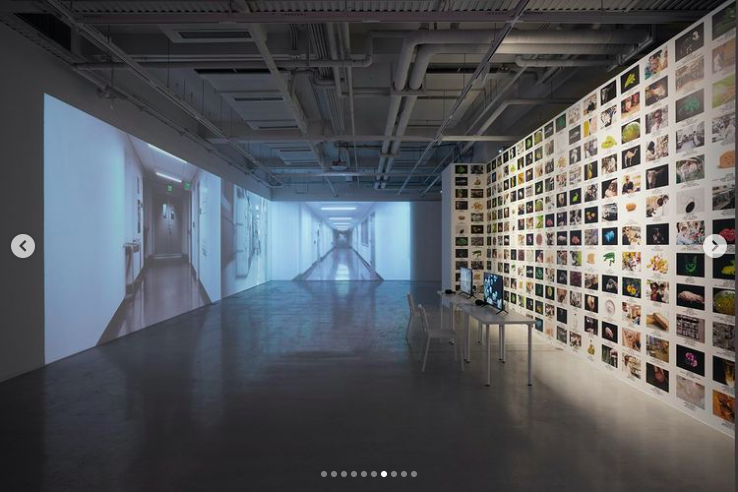

HOW Art Museum has invited Lausanne-based artist group fabric | ch to set up a “panel installation” based on their former project “Public Platform of Future Past” and adapted to the museum space, fostering insightful communication among practitioners from different fields and the audiences.

“Platform of Future-Past” is a temporary environmental device that consists of a twenty-meter-long walkway, or rather an almost archaeological observation deck: a platform that overlooks an exhibition space and that, paradoxically, directly links its entrance to its exit. It thus offers the possibility of crossing this space without really entering it and of becoming its observer, as from archaeological observation decks. The platform opens up contrasting atmospheres and offers affordances or potential uses on the ground.

The peculiarity of the work consists thus in the fact that it generates a dual perception and a potential temporal disruption, which leads to the title of the work, Platform of Future-Past: if the present time of the exhibition space and its visitors is, in fact, the “archeology” to be observed from the platform, and hence a potential “past,” then the present time of the walkway could be understood as a possible “future” viewed from the ground…

“Platform of Future-Past” is equipped in three zones with environmental monitoring devices. The sensors record as much data as possible over time, generated by the continuously changing conditions, presences and uses in the exhibition space. The data is then stored on the servers of Platform of Future-Past and replayed in a loop on its computers. It is a “recorded moment”, “frozen” on the data servers, that could potentially replay itself forever or that is waiting for someone to reactivate it. A “data center” on the deck, with its set of interfaces and visualization screens, lets the visitors-observers follow the ongoing process of recording.

The work could be seen as an architectural proposal built on the idea of massive data production from our environment. Every second, our world produces massive amounts of data, stored “forever” in remote data centers, like old gas bubbles trapped in millennial ice.

As such, the project is attempting to introduce doubt about its true nature: would it be possible, in fact, that what is observed from the platform is already a present recorded from the past? A phantom situation? A present regenerated from the data recorded during a scientific experiment that was left abandoned? Or perhaps replayed by the machine itself? Could it already, in fact, have been running on a loop for years?

Platform of Future-Past, Scaffolding, projection screens, sensors, data storage, data flows, plywood panels, textile partitions

-----

Platform of Future-Past (2022)

-----

Beneath the Skin, Between the Machines (exhibition, 01.22 - 04.22)

-----

Platform of Future-Past was realized with the support of Pro Helvetia.

Saturday, February 17. 2018

Environmental Devices retrospective exhibition by fabric | ch until today! | #fabric|ch #accrochage #book

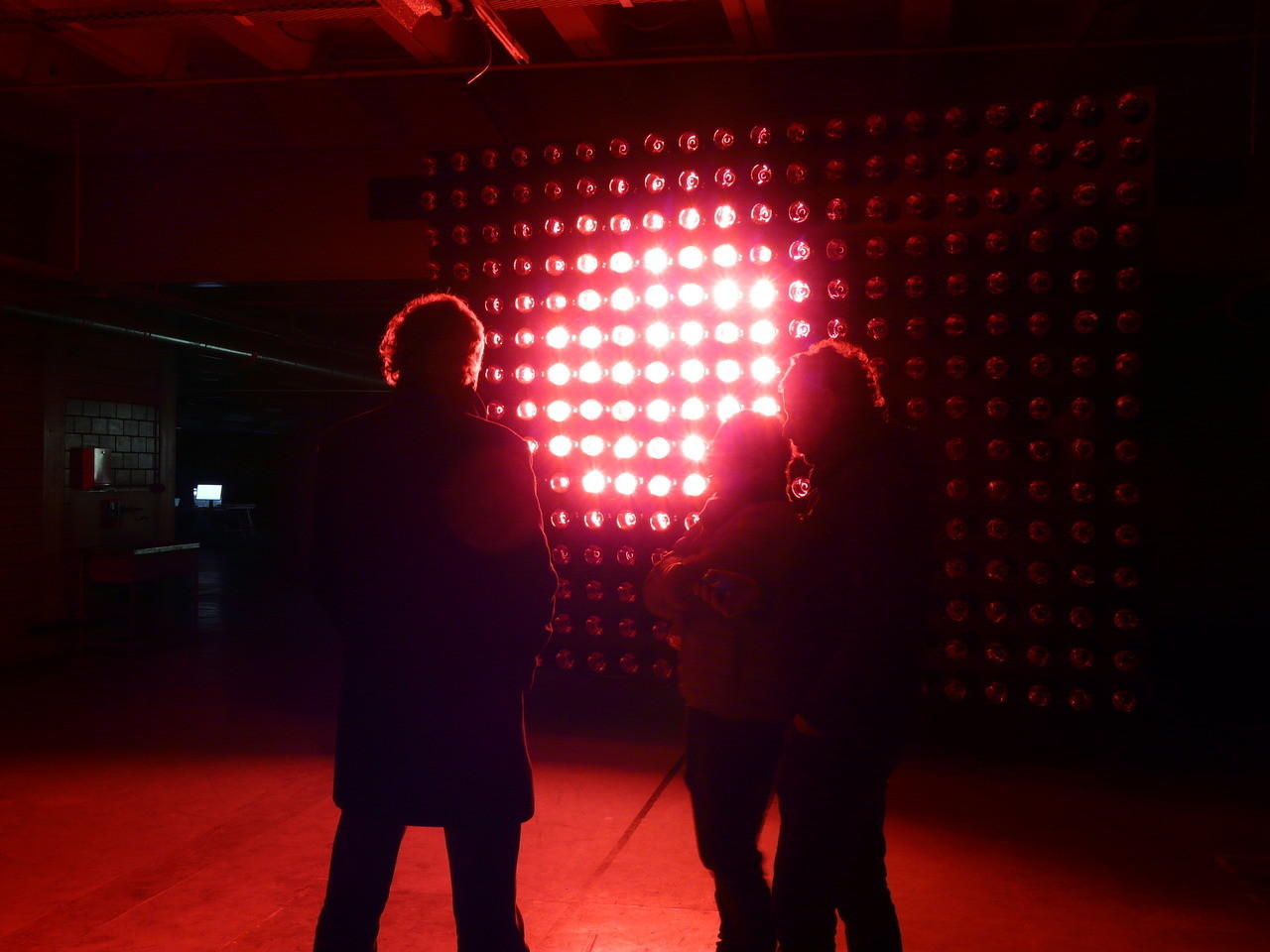

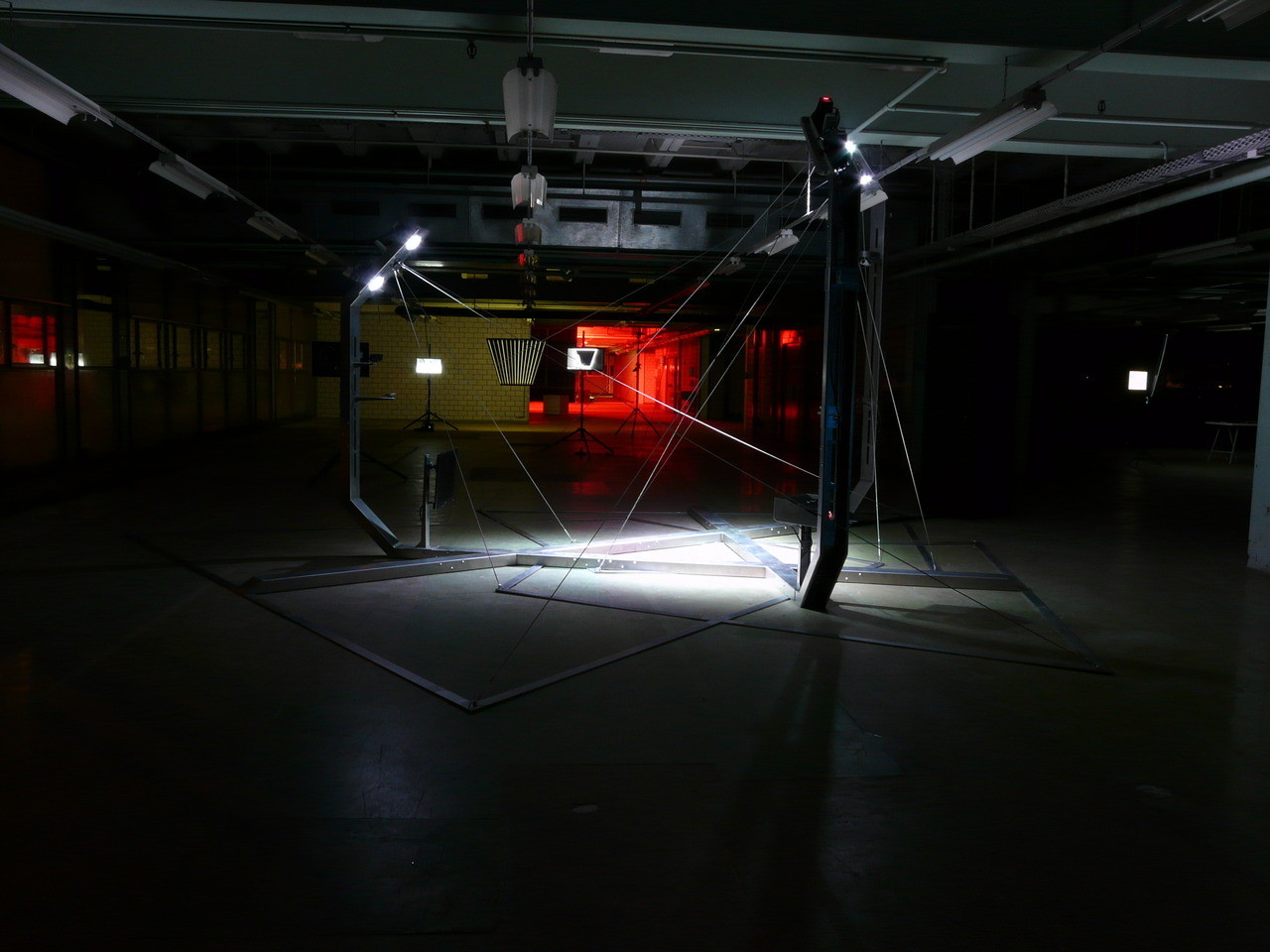

Note: a few pictures from the fabric | ch retrospective at #EphemeralKunsthalleLausanne (the disused Mayer & Soutter factory in Renens, near Lausanne).

The exhibition is being set up in the context of the production of a monographic book and is still open today (Saturday 17.02, 5.00-8.00 pm)!

By fabric | ch

-----


All images Ch. Guignard.

Wednesday, October 19. 2016

Le médium spirite ou la magie d’un corps hypermédiatique à l’ère de la modernité | #spirit #media #technology

Note: following the previous post, which mentioned the idea of spiritism in relation to personal data (or forgotten personal data) and to "beliefs" linked to contemporary technologies, here comes an interesting symposium (Machines, magie, médias) and a post on France Culture. The original post and the linked talk by researcher Mireille Berton (University of Lausanne, Department of Film History and Aesthetics) are in French.

Via France Culture

-----

Cerisy: Machines, magie, médias (August 20 to 28, 2016)

Magicians, from Robert-Houdin and Georges Méliès to Harry Houdini and Howard Thurston, followed by Abdul Alafrez, David Copperfield, Jim Steinmeyer, Marco Tempest and many others, have questioned the processes by which illusion is produced, in step with innovations in optics, acoustics, electricity and, more recently, computing and digital technology.

Yet any technology that plays with our senses, as long as it does not reveal all its secrets, as long as the techniques it conceals are not mastered, as long as it has not been taken up and formalized by a medium, remains at a stage that can be defined as a magical moment. Machines and magic indeed share secrecy, metamorphosis, the double, participation and mediation. This stance rests on the hypothesis put forward by Arthur C. Clarke: "Any sufficiently advanced technology is indistinguishable from magic" (1984, p. 36).

The very emergence of media can be analyzed in terms of the embodiment of magical thinking, the "pattern-model" (Edgar Morin, 1956) of the primary form of individual understanding (Marcel Mauss, 1950). De facto, from the phantasmagorias of the 18th century to the most current digital arts, by way of theater, the magic lantern, photography, the Théâtrophone, the phonograph, radio, television and cinema, the history of spectacular machinery intersects with that of magic and the experiments of its practitioners, who are on the lookout for any novelty that makes it possible to renew magical effects through the mechanization of performances. It is through the study of the illusion techniques specific to each medium, whose recurring principles have been brought to light by intermedial studies and media archaeology, that the encounter with the art of magic became essential.

This symposium proposes to analyze their technological cycle: the magical moment (belief and wonder), the magical mode (rhetoric), and secularization (the trivialization of the magical dimension). This cycle is analyzed across media in order to underline its intermedial dimensions. The papers are therefore grouped into seven sections: The art of magic; Magic and aesthetics of astonishment; Magic, television and video; The wonders of science; Magic of the image, image and magic; Magic of sound, sound and magic; From the tableau vivant to digital mimicry. The first brings historians and practitioners of magic into dialogue and presents the state of the archives on the subject. The following six sections report on the correlations between magic and media, and between media and magic.

Mireille Berton, who holds a doctorate in Letters, is a senior lecturer and researcher (maître d’enseignement et de recherche) in the Section d’Histoire et esthétique du cinéma at the University of Lausanne (UNIL).

Her work focuses mainly on the relationships between cinema and the sciences of the psyche (psychology, psychoanalysis, psychiatry, parapsychology), with a particular interest in an approach that combines cultural history, media epistemology and Gender Studies.

In addition to numerous studies, she has published a book drawn from her doctoral thesis, Le Corps nerveux des spectateurs. Cinéma et sciences du psychisme autour de 1900 (L’Âge d’Homme, 2015), and with Anne-Katrin Weber she co-edited a collective volume devoted to the history of television devices as grasped through discourses, practices, objects and representations (La Télévision du Téléphonoscope à YouTube. Pour une archéologie de l'audiovision, Antipodes, 2009). She is currently working on a manuscript devoted to representations of the spirit medium in contemporary films and television series (to be published by Georg in 2017).

Abstract of the talk:

The talk proposes to return to a question often addressed in the history of science and occultism, namely the role played by measuring and capturing instruments in the apprehension of paranormal phenomena. An analysis of spiritist sources published during the first decades of the 20th century brings to light the tensions caused by the optical and electrical devices that come to challenge the all-powerful body of the spirit medium on its own territory. The encounter between occultism and modernity then gives birth to the (discursive and fantasmatic) figure of the "hypermediatic" medium, who surpasses all the possibilities offered by scientific discoveries.


Thursday, January 28. 2016

I&IC at Unfrozen – Swiss Design Network 2016 Conference, SDN (Zürich, 2016) | #datacenter #infrastructures #research

Note: this afternoon I'll travel to Grandhotel Giessbach (sounds like a Wes Anderson movie) to present, later tonight, the interim results of the research I'm jointly leading with Nicolas Nova for ECAL & HEAD - Genève, in partnership with EPFL-ECAL Lab & EPFL: Inhabiting and Interfacing the Cloud(s). Looking forward to meeting the Swiss design research community (mainly) at the hotel...

Via iiclouds.org

-----

Christophe Guignard and I will have the pleasure of presenting the interim results of the design research Inhabiting & Interfacing the Cloud(s) next Thursday (28.01.2016) at the Swiss Design Network conference.

The conference will take place at Grandhotel Giessbach, above Lake Brienz, where we'll focus on a research process fully articulated around the practice of design (with the participation of students in the case of I&IC) and the project process.

This will apparently happen between "dinner" and "bar", as we'll present a "Fireside Talk" at 9pm. Can't wait to do and see that...

The full program and proceedings (pdf) of the conference can be accessed HERE.

As with previous events, we'll try to post a short "follow up" on this documentary blog after the event.

Wednesday, October 21. 2015

Create a Self-Destructing Website With This Open Source Code | #web #design

Note: suddenly speaking about web design, wouldn't it be time to start doing some interaction design on the web again? Aren't we in need of some "net art" approach, some weirder propositions than the too slick "responsive design" of a predictable "user-centered" or even "experience" design dogma? These kinds of complex web/interaction experiences have almost all vanished (remember Jodi?), to the point that there is now a vast experimental void for designers to tap into again!

Well, after the site that can only be browsed by one person at a time (with a poor visual design indeed), here comes the one that self-destructs. Could be a start... Btw, thinking about files, sites or contents, etc. that would self-destruct would probably help save lots of energy in data storage, hard drives and datacenters of all sorts, where these data sit like zombies.

Via GOOD

-----

By Isis Madrid

Former head of product at Flickr and Bitly, Matt Rothenberg recently caused an internet hubbub with his Unindexed project. The communal website continuously searched for itself on Google for 22 days, at which point, upon finding itself, it spontaneously combusted.

In addition to chasing its own tail on Google, Unindexed provided a platform for visitors to leave comments and encourage one another to spread the word about the website. According to Rothenberg, knowledge of the website was primarily passed on in the physical world via word of mouth.

“Part of the goal with the project was to create a sense of unease with the participants—if they liked it, they could and should share it with others, so that the conversation on the site could grow,” Rothenberg told Motherboard. “But by doing so they were potentially contributing to its demise via indexing, as the more the URL was out there, the faster Google would find it.”

When the website finally found itself on Google, the platform disappeared and this message replaced it:

“HTTP/1.1 410 Gone This web site is no longer here. It was automatically and permanently deleted after being indexed by Google. Prior to its deletion on Tue Feb 24 2015 21:01:14 GMT+0000 (UTC) it was active for 22 days and viewed 346 times. 31 of those visitors had added to the conversation.”

If you are interested in creating a similar self-destructing site, feel free to start with Rothenberg’s open source code.
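To see the mechanism at a glance before opening Rothenberg's repository, here is a minimal Python sketch of the behaviour described above. It is not Rothenberg's code: the class `SelfDestructingSite`, the `is_indexed` callback (standing in for whatever search query the real project ran) and the response texts are assumptions made for illustration only.

```python
from dataclasses import dataclass
from datetime import datetime, timezone
from typing import Callable, Optional, Tuple


@dataclass
class SelfDestructingSite:
    """Hypothetical model of a site that deletes itself once it is indexed."""

    created: datetime
    # Assumption: a callable that reports whether a search engine lists the site.
    is_indexed: Callable[[], bool]
    views: int = 0
    deleted_at: Optional[datetime] = None

    def handle_request(self, now: datetime) -> Tuple[int, str]:
        """Return (HTTP status, body) for one incoming request."""
        # The deletion is permanent: once set, deleted_at is never cleared.
        if self.deleted_at is None and self.is_indexed():
            self.deleted_at = now
        if self.deleted_at is not None:
            days = (self.deleted_at - self.created).days
            return 410, (
                "This web site is no longer here. "
                f"It was active for {days} days and viewed {self.views} times."
            )
        self.views += 1
        return 200, "Welcome. Share this address at your own risk."
```

The only design choice worth noting is that the "gone" state is sticky: even if the site later drops out of the index, every request keeps receiving 410 Gone, the standard HTTP status for a resource that was removed on purpose.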

Sunday, December 14. 2014

I&IC workshop #3 at ECAL: output > Networked Data Objects & Devices | #data #things

Via iiclouds.org

-----

The third workshop we ran in the frame of I&IC with our guest researcher Matthew Plummer-Fernandez (Goldsmiths University) and the 2nd and 3rd year students (Ba) in Media & Interaction Design (ECAL) ended last Friday (| rblg note: on the 21st of Nov.) with interesting results. The workshop focused on small situated computing technologies that could collect, aggregate and/or “manipulate” data in automated ways (bots) and that would certainly need to rely heavily on cloud technologies due to their low storage and computing capacities: so to speak, “networked data objects” that will soon become very common thanks to cheap new small computing devices (e.g. Raspberry Pis for DIY applications) or sensors (e.g. Arduino, etc.). The title of the workshop was “Botcave”, whose objective was explained by Matthew in a previous post.

This working context was chosen according to our overall research objective, even though we knew that it wouldn’t directly address the “cloud computing” apparatus (something we learned to be a difficult approach during the second workshop), but that it would nonetheless question its interfaces and the way we experience the whole service, especially the evolution of this apparatus through new types of everyday interactions and data generation.

Matthew Plummer-Fernandez (#Algopop) during the final presentation at the end of the research workshop.

Through this workshop, Matthew and the students definitely raised the following points and questions:

1° Small situated technologies that will soon spread everywhere will become heavy users of cloud-based computing and data storage, as they have low storage and computing capacities. While they might just use and manipulate existing data (like some of the workshop projects, e.g. #Good vs. #Evil or Moody Printer), they will mainly also contribute to producing very large additional quantities of it (e.g. Robinson Miner). Yet the amount of meaningful data to be “pushed” and “treated” in the cloud remains a big question mark, as there will be huge (too huge) amounts of such data. Lucien will probably post something later about this subject (“fog computing”). This might end up with the need for interdisciplinary teams to rethink cloud architectures.

2° Stored data become “alive” or significant only when “manipulated”. This can be done by “analog users” of course, but in general it is now operated by rules and algorithms of different sorts (in the frame of this workshop: automated bots). Are these rules “situated” as well and possibly context-aware (context-intelligent), as in Robinson Miner? Or are they somehow more abstract and located anywhere in the cloud? Both?

3° These “Networked Data Objects” (and soon “Network Data Everything”) will contribute to “babelizing” users' interactions and interfaces in all directions, paving the way for new types of combinations and experiences (creolization processes). The Beast, The Like Hotline, Simon Coins and The Wifi Cracker could be considered starting phases of such processes. Cloud interfaces and computing will then become everyday “things” and, at home, new domestic objects with which we’ll have totally different interactions (this last point must still be discussed though, as domesticity might not exist anymore according to Space Caviar).

Moody Printer – (Alexia Léchot, Benjamin Botros)

Moody Printer remains a basic conceptual proposal at this stage, where a hacked printer, connected to a Raspberry Pi that stays hidden (it would be located inside the printer), has access to weather information. Similarly to human beings, its “mood” can be affected by such inputs following some basic rules (good – bad, hot – cold, sunny – cloudy – rainy, etc.). The automated process then searches Google Images according to its defined “mood” (direct link between “mood”, weather conditions and an exhaustive list of words) and autonomously starts to print the results.

A different kind of printer combined with weather monitoring.
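The rule chain described for Moody Printer (weather input, then “mood”, then a word to search for) can be sketched in a few lines of Python. This is only an illustration: the mood names, the 10 °C threshold and the word lists are invented here, and the image search and printing steps are left out on purpose.

```python
import random

# Hypothetical word lists: the students' actual lists are not documented here.
MOOD_WORDS = {
    "cheerful": ["beach", "picnic", "sunflower"],
    "gloomy": ["fog", "empty street", "umbrella"],
    "grumpy": ["traffic jam", "storm", "broken glass"],
}


def mood_from_weather(temperature_c: float, sky: str) -> str:
    """Map a weather reading to a mood with basic rules (thresholds are assumptions)."""
    if sky == "rainy":
        return "grumpy" if temperature_c < 10 else "gloomy"
    if sky == "cloudy":
        return "gloomy"
    # Any other sky value is treated as sunny.
    return "cheerful" if temperature_c >= 10 else "gloomy"


def pick_search_term(mood: str, rng=random) -> str:
    """Pick one word from the list attached to the given mood."""
    return rng.choice(MOOD_WORDS[mood])
```

For instance, `pick_search_term(mood_from_weather(4, "rainy"))` returns one of the “grumpy” words, which would then be handed to an image search.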

The Beast – (Nicolas Nahornyj)

Top: Nicolas Nahornyj is presenting his project to the assembly. Bottom: the laptop and “the beast”.

The Beast is a device that asks to be fed with money at random times… It is your new laptop companion. To calm it down for a while, you must insert a coin in the slot provided for that purpose. If you don’t comply, not only will it continue to ask for money more and more frequently, but it will also randomly pick an image lying around on your hard drive, post it on a popular social network (e.g. Facebook, Pinterest, etc.) and then erase this image from your local disk. Slowly, The Beast will remove all images from your hard drive and post them online…

A different kind of slot machine combined with private files stealing.
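The escalation logic of The Beast can be sketched as a tiny state machine in Python. The halving of the waiting time, the default intervals and the class name `Beast` are assumptions for illustration; the sketch only selects an image and deliberately neither posts nor deletes anything.

```python
import random
from pathlib import Path
from typing import List, Optional


class Beast:
    """Hypothetical model of a companion that nags more often when ignored."""

    def __init__(self, base_interval_s: float = 3600.0, min_interval_s: float = 60.0):
        self.base_interval_s = base_interval_s
        self.min_interval_s = min_interval_s
        self.interval_s = base_interval_s  # current wait before the next demand

    def feed(self) -> None:
        """A coin was inserted: calm down and go back to the base interval."""
        self.interval_s = self.base_interval_s

    def ignore(self, images: List[Path], rng=random) -> Optional[Path]:
        """No coin: ask sooner next time and select one image as the 'victim'.

        Posting and erasing are intentionally left out of this dry-run sketch.
        """
        self.interval_s = max(self.min_interval_s, self.interval_s / 2)
        return rng.choice(images) if images else None
```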

Robinson – (Anne-Sophie Bazard, Jonas Lacôte, Pierre-Xavier Puissant)

Top: Pierre-Xavier Puissant is looking at the autonomous “minecrafting” of his bot. Bottom: the proposed bot container, which takes on the idea of cubic construction. It could be placed in your garden, in one of your rooms, then in your fridge, etc.

Robinson automates the procedural construction of MineCraft environments. To do so, the bot uses local weather information that is monitored by a weather sensor located inside the cubic box, attached to a Raspberry Pi located within the box as well. This sensor is looking for changes in temperature, humidity, etc. that then serve to change the building blocks and rules of constructions inside MineCraft (put your cube inside your fridge and it will start to build icy blocks, put it in a wet environment and it will construct with grass, etc.)

A different kind of thermometer combined with a construction game.
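Robinson's core rule (sensor readings decide which blocks get built) can be sketched as a single Python function. The thresholds and the default “stone” block are assumptions, and the block names are plain strings rather than calls to any real Minecraft API.

```python
def block_for_conditions(temperature_c: float, humidity_pct: float) -> str:
    """Choose a building block from local sensor readings (thresholds are assumptions)."""
    if temperature_c <= 5:
        return "ice"    # e.g. the cube has been placed inside a fridge
    if humidity_pct >= 70:
        return "grass"  # e.g. the cube sits in a wet environment
    return "stone"      # hypothetical default for ordinary room conditions
```

Temperature is checked first, so a cold and humid spot still produces ice; that ordering is an arbitrary choice made for this sketch.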

Note: Matthew Plummer-Fernandez also produced two (auto)MineCraft bots during the week of the workshop. The first one builds an environment according to fluctuations in different market indexes, while the second one tries to build “shapes” to escape this first environment. These two bots are downloadable from the Github repository that was set up during the workshop.

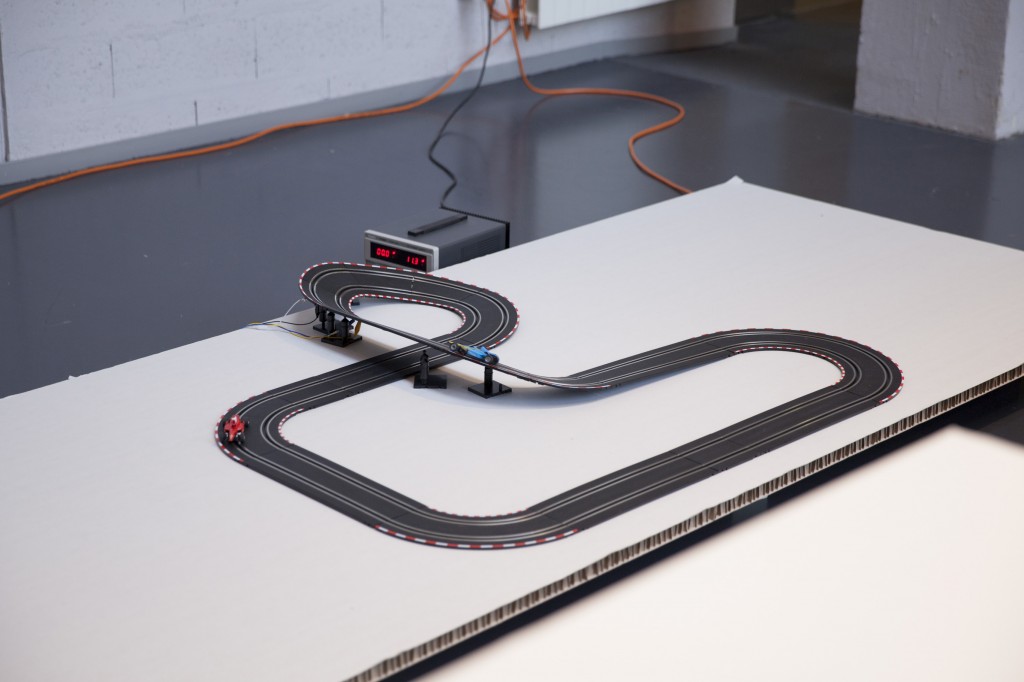

#Good vs. #Evil – (Maxime Castelli)

Top: a transformed car racing game. Bottom: a race is going on between two Twitter hashtags, materialized by two cars.

#Good vs. #Evil is quite a straightforward project. It is also a hack of an existing two-car racing game. In this case, the bot counts occurrences of two hashtags on Twitter: #Good and #Evil. At each new occurrence of one or the other word, the device sends an electric pulse to its associated car. The result is a slow and perpetual car race between “good” and “evil” through the occurrences of their online hashtags.

A different kind of data visualization combined with racing cars.
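The counting step of #Good vs. #Evil can be sketched in Python as follows. The function takes any iterable of message texts, so no Twitter API is assumed; how the messages are fetched and how a pulse reaches a car are outside the scope of this sketch, and the name `count_pulses` is hypothetical.

```python
import re
from collections import Counter
from typing import Iterable

HASHTAGS = ("#good", "#evil")
_TAG = re.compile(r"#\w+")


def count_pulses(messages: Iterable[str]) -> Counter:
    """Count one pulse per occurrence of each tracked hashtag (case-insensitive)."""
    pulses = Counter({tag: 0 for tag in HASHTAGS})
    for text in messages:
        for tag in _TAG.findall(text.lower()):
            # Whole-tag comparison: "#goodness" does not count as "#good".
            if tag in pulses:
                pulses[tag] += 1
    return pulses
```

Each unit in the returned counter would correspond to one electric pulse sent to the matching car.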

The “Like” Hotline – (Mylène Dreyer, Caroline Buttet, Guillaume Cerdeira)

Top: Caroline Buttet and Mylène Dreyer are explaining their project. The laptop screen, which shows a Facebook account, is projected on the left outer part of the image. Bottom: Caroline Buttet is using a hacked phone to “like” pages.

The “Like” Hotline proposes to hack a regular phone and install a hotline bot on it. Connected to its online Facebook account, which follows a few personalities and the posts they make, the bot asks the caller questions that can be answered by using the keypad on the phone. After navigating through a few choices, the hotline bot helps you like a post on the social network.

A different kind of hotline combined with a social network.

Simoncoin – (Romain Cazier)

Top: Romain Cazier introducing his “coin” project. Bottom: the device combines an old “Simon” memory game with the production of digital coins.

Simoncoin was unfortunately not finished at the end of the workshop week, but it was thought out in great detail that would take too long to explain in this short presentation. The main idea was to use the game logic of Simon to generate coins. In a parallel to Bitcoins, which are harder and harder to mine, Simon Coins also become more and more difficult to generate due to the game logic.

Another different kind of money combined with a memory game.
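Since Simoncoin was not finished, the following Python sketch is only one possible reading of its main idea: every correctly repeated sequence mints a coin and makes the next sequence one step longer, so each new coin is harder to obtain. The class name `SimonMint`, the four colors and the reset-on-mistake rule are all assumptions.

```python
import random
from typing import Optional, Sequence

COLORS = ("red", "green", "blue", "yellow")


class SimonMint:
    """Hypothetical coin generator driven by Simon-style memory rounds."""

    def __init__(self, rng: Optional[random.Random] = None):
        self.rng = rng or random.Random()
        self.sequence = [self.rng.choice(COLORS)]
        self.coins = 0

    def attempt(self, answer: Sequence[str]) -> bool:
        """Check the player's answer; mint a coin and lengthen the sequence on success."""
        if list(answer) == self.sequence:
            self.coins += 1
            self.sequence.append(self.rng.choice(COLORS))
            return True
        # A mistake restarts the game with a fresh one-step sequence; coins are kept.
        self.sequence = [self.rng.choice(COLORS)]
        return False
```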

The Wifi Cracker – (Bastien Girshig, Martin Hertig)

Top: Bastien Girshig and Martin Hertig (left of Matthew Plummer-Fernandez) presenting. Middle and Bottom: the wifi password cracker slowly displays the letters of the wifi password.

The Wifi Cracker is an object that you can leave on its own in a space. At first glance it looks a little bit like a clock, but it won’t display the time. Instead, it will look for available wifi networks in the area and start trying to find their passwords (Bastien and Martin found a ready-made process for that). The bot will test all possible combinations, which takes time. Once the device has found the working password, it will use its round display to transmit it, letter by letter and slowly as well.

A different kind of cuckoo clock combined with a password cracker.

Acknowledgments:

Many thanks to Matthew Plummer-Fernandez for his involvement and great workshop direction; to Lucien Langton for his involvement, technical digging into Raspberry Pis, pictures and documentation; and to Nicolas Nova and Charles Chalas (from HEAD) as well as Christophe Guignard, Christian Babski and Alain Bellet for taking part or helping during the final presentation. Special thanks to the students from ECAL involved in the project for the energy they’ve put into it: Anne-Sophie Bazard, Benjamin Botros, Maxime Castelli, Romain Cazier, Guillaume Cerdeira, Mylène Dreyer, Bastien Girshig, Jonas Lacôte, Alexia Léchot, Nicolas Nahornyj, Pierre-Xavier Puissant.

From left to right: Bastien Girshig, Martin Hertig (The Wifi Cracker project), Nicolas Nova, Matthew Plummer-Fernandez (#Algopop), a “mystery girl”, Christian Babski (in the background), Patrick Keller, Sebastian Vargas, Pierre-Xavier Puissant (Robinson Miner), Alain Bellet and Lucien Langton (taking the pictures…) during the final presentation on Friday.

Friday, November 21. 2014

I&IC workshop at ECAL: The birth of Botcaves | #iiclouds #designresearch #bots

Note: the workshop continues and should finish today. We'll document and publish results next week. As the workshop is all about small size and situated computing, Lucien Langton (assistant on the project) made a short tutorial about the way to set up your Pi. I'll also publish the Github repository that Matthew Plummer-Fernandez has set up.

Via iiclouds.org

-----

The Bots are running! The second workshop of I&IC’s research study started yesterday with Matthew’s presentation to the students. A video of the presentation might be included in the post later on, but for now here’s the [pdf]: Botcaves

The first prototypes set up by the students include bots playing Minecraft, bots cracking wifi networks and bots triggered by onboard IR cameras. So far, some groups have worked directly with Python scripts deployed via SSH onto the Pis, others have established a client-server connection between their Mac and their Pi by installing Processing on their Raspberry Pi, and finally some have decided to start by hacking hardware that they will connect to their bots later.

The research process will be continuously documented during the week.

The Wifi cracking Bot

Hacking a phone

Connecting to Pi via Proce55ing

Friday, October 17. 2014

“Hello, Computer” – Intel’s New Mobile Chips Are Always Listening | #monitoring #always

Note: are we all on our way, not to LA, but to HER... ?

-----

Tablets and laptops coming later this year will be able to constantly listen for voice commands thanks to new chips from Intel.

By Tom Simonite

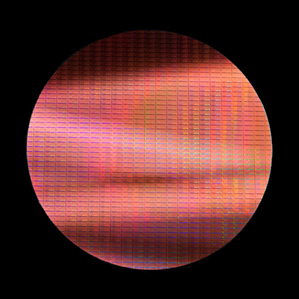

New processors: A silicon wafer etched with Intel’s Core M mobile chips.

A new line of mobile chips unveiled by Intel today makes it possible to wake up a laptop or tablet simply by saying “Hello, computer.” Once it has been awoken, the computer can operate as a voice-controlled virtual assistant. You might call out “Hello, computer, what is the weather forecast today?” while getting out of bed.

Tablets and lightweight laptops based on the new Core M line of chips will go on sale at the end of this year. They can constantly listen for voice instructions thanks to a component known as a digital signal processor core that’s dedicated to processing audio with high efficiency and minimal power use.

“It doesn’t matter what state the system will be in, it will be listening all the time,” says Ed Gamsaragan, an engineer at Intel. “You could be actively doing work or it could be in standby.”

It is possible to set any two- or three-word phrase to rouse a computer with a Core M chip. A device can also be trained to respond only to a specific voice. The voice-print feature isn’t accurate enough to replace a password, but it could prevent a device from being accidentally woken up, says Gamsaragan. If coupled with another biometric measure, such as webcam with facial recognition, however, a voice command could work as a security mechanism, he says.

Manufacturers will decide how to implement the voice features in Intel’s Core M chips in devices that will appear on shelves later this year.

The wake-on-voice feature is compatible with any operating system. That means it could be possible to summon Microsoft’s virtual assistant Cortana in Windows, or Google’s voice search functions in Chromebook devices.

The only mobile device on the market today that can constantly listen for commands is the Moto X smartphone from Motorola (see “The Era of Ubiquitous Listening Dawns”). It has a dedicated audio chip that constantly listens for the command “OK, Google,” which activates the Google search app.

Intel’s Core M chips are based on the company’s new generation of smaller transistors, with features as small as 14 nanometers. This new architecture makes chips more power efficient and cooler than earlier generations, so Core M devices don’t require cooling fans.

Intel says that the 14-nanometer architecture will make it possible to make laptops and tablets much thinner than they are today. This summer the company showed off a prototype laptop that is only 7.2 millimeters (0.28 inches) thick. That’s slightly thinner than Apple’s iPad Air, which is 7.5 millimeters thick, but Intel’s prototype packed considerably more computing power.

fabric | rblg

This blog is the survey website of fabric | ch - studio for architecture, interaction and research.

We curate and reblog articles, researches, writings, exhibitions and projects that we notice and find interesting during our everyday practice and readings.

Most articles concern the intertwined fields of architecture, territory, art, interaction design, thinking and science. From time to time, we also publish documentation about our own work and research, immersed among these related resources and inspirations.

This website is used by fabric | ch as archive, references and resources. It is shared with all those interested in the same topics as we are, in the hope that they will also find valuable references and content in it.
