Sticky Postings

By fabric | ch

-----

As this blog still lacks a decent search engine, and as we don't use a "tag cloud"... this post could help you navigate through the updated content on | rblg (as of 09.2023), via all its tags!

FIND BELOW ALL THE TAGS THAT CAN BE USED TO NAVIGATE IN THE CONTENTS OF | RBLG BLOG:

(visible just below if you're browsing the blog's HTML pages, or here for RSS readers)

--

Note that we had to hit the "pause" button on our reblogging activities a while ago (mainly because we ran out of time, but also because we received complaints from a major stock image company about some images that were displayed on | rblg, an activity that we felt was still "fair use" - we've never made any money or advertised on this site).

Nevertheless, we still publish, from time to time, information about the activities of fabric | ch, or content directly related to its work (documentation).

Friday, March 13. 2015

Via Rhizome

-----

"Computing has always been personal. By this I mean that if you weren't intensely involved in it, sometimes with every fiber in your body, you weren't doing computers, you were just a user."

Ted Nelson

Friday, April 25. 2014

Via ArchDaily via The European

-----

This article by Carlo Ratti originally appeared in The European under the title “The Sense-able City”. Ratti outlines the driving forces behind the Smart Cities movement and explains why we may be best off focusing on retrofitting existing cities with new technologies rather than building new ones.

What was empty space just a few years ago is now becoming New Songdo in Korea, Masdar in the United Arab Emirates or PlanIT in Portugal — new “smart cities”, built from scratch, are sprouting across the planet and traditional actors like governments, urban planners and real estate developers, are, for the first time, working alongside large IT firms — the likes of IBM, Cisco, and Microsoft.

The resulting cities are based on the idea of becoming “living labs” for new technologies at the urban scale, blurring the boundary between bits and atoms, habitation and telemetry. If 20th century French architect Le Corbusier advanced the concept of the house as a “machine for living in”, these cities could be imagined as inhabitable microchips, or “computers in open air”.


Wearable Computers and Smart Trash

The very idea of a smart city runs parallel to “ambient intelligence” — the dissemination of ubiquitous electronic systems in our living environments, allowing them to sense and respond to people. That fluid sensing and actuation is the logical conclusion of the liberation of computing: from mainframe solidity to desktop fixity, from laptop mobility to handheld ubiquity, to a final ephemerality as computing disappears into the environment and into humans themselves with development of wearable computers.

It is impossible to forget the striking side-by-side images of the past two Papal Inaugurations: the first, for Benedict XVI in 2005, shows the raised hands of a cheering crowd, while the second, for Pope Francis in 2013, shows a glimmering constellation of smartphone screens held aloft to take pictures. Smart cities are enabled by the atomization of technology, ushering in an age when the physical world is indistinguishable from its digital overlay.

The key mechanism behind ambient intelligence, then, is “sensing” — the ability to measure what happens around us and to respond dynamically. New means of sensing are suffusing every aspect of urban space, revealing its visible and invisible dimensions: we are learning more about our cities so that they can learn about us. As people talk, text, and browse, data collected from telecommunication networks is capturing urban flows in real time and crystallizing them as Google’s traffic congestion maps.

Like a tracer running through the veins of the city, networks of air quality sensors attached to bikes can help measure an individual’s exposure to pollution and draw a dynamic map of the urban air on a human scale, as in the case of the Copenhagen Wheel developed by new startup Superpedestrian. Even trash could become smarter: the deployment of geolocating tags attached to ordinary garbage could paint a surprising picture of the waste management system, as trash is shipped throughout the country in a maze-like disposal process — as we saw in Seattle with our own Trash Track project.

Afraid of Our Own Bed

Today, people themselves (equipped with smartphones, naturally) can be instruments of sensing. Over the past few years, a new universe of urban apps has appeared — allowing people to broadcast their location, information and needs — and facilitating new interactions with the city. Hail a taxi (“Uber”), book a table for dinner (“OpenTable”), or have physical encounters based on proximity and profiles (“Grindr” and “Blendr”): real-time information is sent out from our pockets, into the city, and right back to our fingertips.

In some cases, the very process of sensing becomes a deliberate civic action: citizens themselves are taking an increasingly active role in participatory data sharing. Users of Waze automatically upload detailed road and traffic information so that their community can benefit from it. 311-type apps allow people to report non-emergencies in their immediate neighborhood, from potholes to fallen tree branches, and subsequently organize a fix. OpenStreetMap does the same, enabling citizens to collaboratively draw maps of places that have never been systematically charted before, especially in developing countries not yet graced by a visit from Google.

These examples show the positive implications of ambient urban intelligence, but the data that emerges from fine-grained sensing is inherently neutral. It is a tool that can be used in many different applications, and to widely varying ends. As artist-turned-Xerox-PARC-pioneer Rich Gold once asked in an incisive (and humorous) essay: “How smart does your bed have to be, before you are afraid to go to sleep at night?” What might make our nights sleepless, in this case, is the sheer amount of data being generated by sensing. According to a famous quantification by Google’s Eric Schmidt, every 48 hours we produce as much data as all of humanity until 2003 (an estimation that is already three years old). Who has access to this data? How do we avoid the dystopian ending of Italo Calvino’s 1960s short story “The Memory of the World,” where humanity’s act of infinite recording unravels as intrigue, drama, and murder?

And finally, does this new pervasive data dimension require an entirely new city? Probably not. Of course, ambient intelligence might have architectural ramifications, like responsive building facades or occupant-targeted climates. But in each of the city-sensing examples above, technology does not necessarily call for new urban space — many IT-infused “smart city initiatives” feel less like a necessity and more like a justification of real estate operations on a massive scale – with a net result of bland spatial products.

Forget About Flying Cars

Ambient intelligence can indeed pervade new cities, but perhaps most importantly, it can also animate the rich, chaotic erstwhile urban spaces — like a new operating system for existing hardware. This was already noted by Bill Mitchell at the beginning of our digital era: “The gorgeous old city of Venice […] can integrate modern telecommunications infrastructure far more gracefully than it could ever have adapted to the demands of the industrial revolution.” Could ambient intelligence bring new life to the winding streets of Italian hill towns, the sweeping vistas of Santorini, or the empty husks of Detroit?

We might need to forget about the flying cars that zip through standard future cities discourse. Urban form has shown an impressive persistence over millennia; most elements of the modern city were already present in Greek and Roman times. Humans have always needed, and will continue to need, the same physical structures for their daily lives: horizontal planes and vertical walls (no offense, Frank O. Gehry). But the very lives that unfold inside those walls are now the subject of one of the most striking transformations in human history. Ambient intelligence and sensing networks will not change the container but the contained; not smart cities but smart citizens.

This article by Carlo Ratti originally appeared in The European Magazine

Wednesday, April 02. 2014

Via Wired

-----

By Kyle Vanhemert

The future we see in Her is one where technology has dissolved into everyday life.

A few weeks into the making of Her, Spike Jonze’s new flick about romance in the age of artificial intelligence, the director had something of a breakthrough. After poring over the work of Ray Kurzweil and other futurists trying to figure out how, exactly, his artificially intelligent female lead should operate, Jonze arrived at a critical insight: Her, he realized, isn’t a movie about technology. It’s a movie about people. With that, the film took shape. Sure, it takes place in the future, but what it’s really concerned with are human relationships, as fragile and complicated as they’ve been from the start.

Of course on another level Her is very much a movie about technology. One of the two main characters is, after all, a consciousness built entirely from code. That aspect posed a unique challenge for Jonze and his production team: They had to think like designers. Assuming the technology for AI was there, how would it operate? What would the relationship with its “user” be like? How do you dumb down an omniscient interlocutor for the human on the other end of the earpiece?

When AI is cheap, what does all the other technology look like?

For production designer KK Barrett, the man responsible for styling the world in which the story takes place, Her represented another sort of design challenge. Barrett’s previously brought films like Lost in Translation, Marie Antoinette, and Where the Wild Things Are to life, but the problem here was a new one, requiring more than a little crystal ball-gazing. The big question: In a world where you can buy AI off the shelf, what does all the other technology look like?

In Her, the future almost looks more like the past.

Technology Shouldn’t Feel Like Technology

One of the first things you notice about the “slight future” of Her, as Jonze has described it, is that there isn’t all that much technology at all. The main character Theo Twombly, a writer for the bespoke love letter service BeautifulHandwrittenLetters.com, still sits at a desktop computer when he’s at work, but otherwise he rarely has his face in a screen. Instead, he and his fellow future denizens are usually just talking, either to each other or to their operating systems via a discreet earpiece, itself more like a fancy earplug than anything resembling today’s cyborgian Bluetooth headsets.

In this “slight future” world, things are low-tech everywhere you look. The skyscrapers in this futuristic Los Angeles haven’t turned into towering video billboards a la Blade Runner; they’re just buildings. Instead of a flat screen TV, Theo’s living room just has nice furniture.

This is, no doubt, partly an aesthetic concern; a world mediated through screens doesn’t make for very rewarding mise en scene. But as Barrett explains it, there’s a logic to this technological sparseness. “We decided that the movie wasn’t about technology, or if it was, that the technology should be invisible,” he says. “And not invisible like a piece of glass.” Technology hasn’t disappeared, in other words. It’s dissolved into everyday life.

Here’s another way of putting it. It’s not just that Her, the movie, is focused on people. It also shows us a future where technology is more people-centric. The world Her shows us is one where the technology has receded, or one where we’ve let it recede. It’s a world where the pendulum has swung back the other direction, where a new generation of designers and consumers have accepted that technology isn’t an end in itself–that it’s the real world we’re supposed to be connecting to. (Of course, that’s the ideal; as we see in the film, in reality, making meaningful connections is as difficult as ever.)

Theo still has a desktop display at work and at home, but elsewhere technology is largely invisible.


Jonze had help in finding the contours of this slight future, including conversations with designers from New York-based studio Sagmeister & Walsh and an early meeting with Elizabeth Diller and Ricardo Scofidio, principals at architecture firm DS+R. As the film’s production designer, Barrett was responsible for making it a reality.

Throughout that process, he drew inspiration from one of his favorite books, a visual compendium of futuristic predictions from various points in history. Basically, the book reminded Barrett what not to do. “It shows a lot of things and it makes you laugh instantly, because you say, ‘those things never came to pass!’” he explains. “But often times, it’s just because they over-thought it. The future is much simpler than you think.”

That’s easy to say in retrospect, looking at images of Rube Goldbergian kitchens and scenes of commute by jet pack. But Jonze and Barrett had the difficult task of extrapolating that simplification forward from today’s technological moment.

Theo’s home gives us one concise example. You could call it a “smart house,” but there’s little outward evidence of it. What makes it intelligent isn’t the whizbang technology but rather simple, understated utility. Lights, for example, turn off and on as Theo moves from room to room. There’s no app for controlling them from the couch; no control panel on the wall. It’s all automatic. Why? “It’s just a smart and efficient way to live in a house,” says Barrett.

Today’s smartphones were another object of Barrett’s scrutiny. “They’re advanced, but in some ways they’re not advanced whatsoever,” he says. “They need too much attention. You don’t really want to be stuck engaging them. You want to be free.” In Barrett’s estimation, the smartphones just around the corner aren’t much better. “Everyone says we’re supposed to have a curved piece of flexible glass. Why do we need that? Let’s make it more substantial. Let’s make it something that feels nice in the hand.”

Theo’s smartphone was designed to be “substantial,” something that first and foremost “feels good in the hand.”

Theo’s phone in the film is just that–a handsome hinged device that looks more like an art deco cigarette case than an iPhone. He uses it far less frequently than we use our smartphones today; it’s functional, but it’s not ubiquitous. As an object, it’s more like a nice wallet or watch. In terms of industrial design, it’s an artifact from a future where gadgets don’t need to scream their sophistication–a future where technology has progressed to the point that it doesn’t need to look like technology.

All of these things contribute to a compelling, cohesive vision of the future–one that’s dramatically different from what we usually see in these types of movies. You could say that Her is, in fact, a counterpoint to that prevailing vision of the future–the anti-Minority Report. Imagining its world wasn’t about heaping new technology on society as we know it today. It was looking at those places where technology could fade into the background, integrate more seamlessly. It was about envisioning a future, perhaps, that looked more like the past. “In a way,” says Barrett, “my job was to undesign the design.”

The Holy Grail: A Discreet User Interface

The greatest act of undesigning in Her, technologically speaking, comes with the interface used throughout the film. Theo doesn’t touch his computer; in fact, while he has a desktop display at home and at work, neither has a keyboard. Instead, he talks to it. “We decided we didn’t want to have physical contact,” Barrett says. “We wanted it to be natural. Hence the elimination of software keyboards as we know them.”

Again, voice control had benefits simply on the level of moviemaking. A conversation between Theo and Sam, his artificially intelligent OS, is obviously easier for the audience to follow than anything involving taps, gestures, swipes or screens. But the voice-based UI was also a perfect fit for a film trying to explore what a less intrusive, less demanding variety of technology might look like.

The main interface in the film is voice: Theo communicates with his AI OS through a discreet earplug.

Indeed, if you’re trying to imagine a future where we’ve managed to liberate ourselves from screens, systems based around talking are hard to avoid. As Barrett puts it, the computers we see in Her “don’t ask us to sit down and pay attention” like the ones we have today. He compares it to the fundamental way music beats out movies in so many situations. Music is something you can listen to anywhere. It’s complementary. It lets you operate in 360 degrees. Movies require you to be locked into one place, looking in one direction. As we see in the film, no matter what Theo’s up to in real life, all it takes to bring his OS into the fold is to pop in his ear plug.

Looking at it that way, you can see the audio-based interface in Her as a novel form of augmented reality computing. Instead of overlaying our vision with a feed, as we’ve typically seen it, Theo gets one piped into his ear. At the same time, the other ear is left free to take in the world around him.

Barrett sees this sort of arrangement as an elegant end point to the trajectory we’re already on. Think about what happens today when we’re bored at the dinner table. We check our phones. At the same time, we realize that’s a bit rude, and as Barrett sees it, that’s one of the great promises of the smartwatch: discretion.

“They’re a little more invisible. A little sneakier,” he says. Still, they’re screens that require eyeballs. Instead, Barrett says, “imagine if you had an ear plug in and you were getting your feed from everywhere.” Your attention would still be divided, but not nearly as flagrantly.

Theo chops it up with a holographic videogame character.

Of course, a truly capable voice-based UI comes with other benefits. Conversational interfaces make everything easier to use. When every different type of device runs an OS that can understand natural language, it means that every menu, every tool, every function is accessible simply by requesting it.

That, too, is a trend that’s very much alive right now. Consider how today’s mobile operating systems, like iOS and ChromeOS, hide the messy business of file systems out of sight. Theo, with his voice-based valet as intermediary, is burdened with even less under-the-hood stuff than we are today. As Barrett puts it: “We didn’t want him fiddling with things and fussing with things.” In other words, Theo lives in a future where everything, not just his iPad, “just works.”


AI: the ultimate UX challenge

The central piece of invisible design in Her, however, is that of Sam, the artificially intelligent operating system and Theo’s eventual romantic partner. Their relationship is so natural that it’s easy to forget she’s a piece of software. But Jonze and company didn’t just write a girlfriend character, label it AI, and call it a day. Indeed, much of the film’s dramatic tension ultimately hinges not just on the ways artificial intelligence can be like us but the ways it cannot.

Much of Sam’s unique flavor of AI was written into the script by Jonze himself. But her inclusion led to all sorts of conversations among the production team about the nature of such a technology. “Anytime you’re dealing with trying to interact with a human, you have to think of humans as operating systems. Very advanced operating systems. Your highest goal is to try to emulate them,” Barrett says. Superficially, that might mean considering things like voice pattern and sensitivity and changing them based on the setting or situation.

Even more questions swirled when they considered how an artificially intelligent OS should behave. Is it a good listener? Is it intuitive? Does it adjust to your taste and line of questioning? Does it allow time for you to think? As Barrett puts it, “you don’t want a machine that’s always telling you the answer. You want one that approaches you like, ‘let’s solve this together.’”

In essence, it means that AI has to be programmed to dumb itself down. “I think it’s very important for OSes in the future to have a good bedside manner,” Barrett says. “As politicians have learned, you can’t talk at someone all the time. You have to act like you’re listening.”

AI’s killer app, as we see in the film, is the ability to adjust to the emotional state of its user.

As we see in the film, though, the greatest asset of AI might be that it doesn’t have one fixed personality. Instead, its ability to figure out what a person needs at a given moment emerges as the killer app.

Theo, emotionally desolate in the midst of a hard divorce, is having a hard time meeting people, so Sam goads him into going on a blind date. When Theo’s friend Amy splits up with her husband, her own artificially intelligent OS acts as a sort of therapist. “She’s helping me work through some things,” Amy says of her virtual friend at one point.

In our own world, we may be a long way from computers that are able to sense when we’re blue and help raise our spirits in one way or another. But we’re already making progress down this path. In something as simple as a responsive web layout or iOS 7’s “Do Not Disturb” feature, we’re starting to see designs that are more perceptive about the real-world context surrounding them: where, how or when they’re being used. Google Now and other types of predictive software are ushering in a new era of more personalized, more intelligent apps. And while Apple updating Siri with a few canned jokes about her Hollywood counterpart might not amount to a true sense of humor, it does serve as another example of how we’re making technology more human, a preoccupation that’s very much alive today.

Personal comment:

While I do agree with the idea that technology is becoming in some ways banal --or maybe, to use a better word, just common-- and that the future might not be about flying cars, fancy all-over hologram interfaces or backup video cities populated with personal clones, that it might be "in service of", might "vanish" or "recede" into our daily atmospheres, environments, architectures, furniture, clothes if not bodies or cells, we have to keep in mind that this could (will) make it even more intrusive.

When technology no longer sparks debate (when it has become common), when it is both ambient and invisible, passively accepted if not simply endured, then there will be plenty of room for ... (gap to be filled by many names) to fulfill their wildest dreams.

We also have to keep in mind that when technology enters an untapped domain (let's say, as an example, the social domain over the last ten years), it engineers what was, in some cases, previously a common good (you didn't have to pay, or trade some data, to talk with somebody before). In other words, engineering common goods (i.e. social relationships, but why not, in the future, love, air, the genome --already the case--, etc.) turns them into products: commodification. Not always a good thing, if I may say so... This definitely looks like a goal of the economy: how to turn everything into something you can sell, and information technology is quite good at helping to do that.

-

And btw... even if technology might "recede", I'm not so keen on the rather "skeuomorphic" design of the "AI cell phone" in the movie (haven't seen the movie yet)... ;) And oh my, I definitely hope this won't be our future. It looks like a Jobs design!

Friday, May 25. 2012

Via Owni.eu

-----

By Paule d'Atha

(...)

To finish, a little “data art” from Marcin Ignac, a Danish artist, programmer and designer. In his project Every Day of My Life, he visualises every time he has used his computer in the past 30 months.

Each line represents a day and each colour block, the main application opened during that time. The black gaps are times when the computer was switched off. It’s particularly interesting to see, year after year, the frequency of black-out areas.
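For readers curious how such a chart could be assembled, here is a minimal Python sketch; it is not Ignac's code. It assumes a hypothetical usage log of (day, time slot, application) samples: each day becomes one row, each slot shows the application seen most often in it, and slots with no samples are treated as the black "computer off" gaps. The `render` function is a text stand-in for the colour blocks.

```python
from collections import Counter
from typing import Optional

# Hypothetical log format (an assumption): (day, slot, app) samples recorded while
# the computer is on. A slot with no sample at all is treated as "computer off".
Sample = tuple[str, int, str]


def day_rows(log: list[Sample], slots_per_day: int = 24) -> dict[str, list[Optional[str]]]:
    """One row per day; each cell is the most-used app in that slot, or None if off."""
    per_slot: dict[tuple[str, int], Counter] = {}
    for day, slot, app in log:
        per_slot.setdefault((day, slot), Counter())[app] += 1

    rows: dict[str, list[Optional[str]]] = {}
    for day in sorted({day for day, _, _ in log}):
        row: list[Optional[str]] = []
        for slot in range(slots_per_day):
            counts = per_slot.get((day, slot))
            row.append(counts.most_common(1)[0][0] if counts else None)
        rows[day] = row
    return rows


def off_fraction(row: list[Optional[str]]) -> float:
    """Share of the day's slots that are black gaps (computer switched off)."""
    return sum(cell is None for cell in row) / len(row)


def render(row: list[Optional[str]], palette: dict[str, str]) -> str:
    """One character per slot instead of a colour block; '.' marks an off slot."""
    return "".join(palette.get(cell, "?") if cell else "." for cell in row)
```

Comparing `off_fraction` across rows from different years is one way to put a number on the changing frequency of black-out areas mentioned above.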

Friday, May 11. 2012

Via Creative Applications

-----

Feel Me is a project by Marco Triverio that explores the gap between synchronous and asynchronous communication using our mobile devices, in an attempt to “connect differently” and enrich digital communications. Whereas we draw lines between phone conversations and SMS messages, Feel Me looks for a space in between that would allow you to be intimate in real time, non-verbally, using touch.

Based on the finding that communication with a special person is not about content going back and forth but rather about perceiving the presence of the other person on the other side, Feel Me opens a real-time interactive channel.

Feel Me first appears to be a text messaging application. When two people are both looking at the conversation they are having, touches on the screen of one side are shown on the other side as small dots. Touching the same spot triggers a small reaction, such as a vibration or a sound, acknowledging that both parties are there at the same time. Feel Me creates a playful link with the person on the other side, opening a channel for a non-verbal and interactive connection.
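The "same spot" rule can be sketched in a few lines of Python. This is an illustration under our own assumptions, not Triverio's implementation: touches are hypothetical (x, y, t) records in normalized screen coordinates, and the radius and time window are made-up values, since the project description gives none.

```python
import math
from dataclasses import dataclass
from typing import Optional


@dataclass
class Touch:
    x: float  # normalized screen coordinates, 0.0 to 1.0 (assumption)
    y: float
    t: float  # timestamp in seconds


# Assumed thresholds; the project description does not specify any.
SAME_SPOT_RADIUS = 0.05
SAME_TIME_WINDOW = 0.3


def same_spot(a: Touch, b: Touch) -> bool:
    """True if two touches are close in space and (almost) simultaneous."""
    close = math.hypot(a.x - b.x, a.y - b.y) <= SAME_SPOT_RADIUS
    together = abs(a.t - b.t) <= SAME_TIME_WINDOW
    return close and together


def on_remote_touch(local: list[Touch], remote: Touch, both_viewing: bool) -> Optional[str]:
    """Decide the feedback for a touch arriving from the other phone."""
    if not both_viewing:
        return None  # the live channel only exists while both people have the conversation open
    if any(same_spot(mine, remote) for mine in local):
        return "vibrate"  # both touched the same spot: acknowledge mutual presence
    return "dot"  # otherwise just show the other person's touch as a small dot
```

A real app would also need a low-latency connection between the two phones; that part is deliberately left out here.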

“Feel Me” was awarded honors at CIID. Marco is currently working as an interaction designer at IDEO.


Monday, January 09. 2012

Via MIT Technology Review

-----

New system detects emotions based on variables ranging from typing speeds to the weather.

By Duncan Graham-Rowe

Researchers at Samsung have developed a smart phone that can detect people's emotions. Rather than relying on specialized sensors or cameras, the phone infers a user's emotional state based on how he's using the phone.

For example, it monitors certain inputs, such as the speed at which a user types, how often the "backspace" or "special symbol" buttons are pressed, and how much the device shakes. These measures let the phone postulate whether the user is happy, sad, surprised, fearful, angry, or disgusted, says Hosub Lee, a researcher with Samsung Electronics and the Samsung Advanced Institute of Technology's Intelligence Group, in South Korea. Lee led the work on the new system. He says that such inputs may seem to have little to do with emotions, but there are subtle correlations between these behaviors and one's mental state, which the software's machine-learning algorithms can detect with an accuracy of 67.5 percent.
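To make those inputs concrete, here is a small Python sketch that turns a raw typing session into the kind of features Lee describes: typing speed, backspace and special-symbol frequency, and shaking. The event format, the set of "special symbols" and the use of accelerometer spread as a shake measure are our assumptions, not details of Samsung's system.

```python
import statistics
from dataclasses import dataclass


@dataclass
class KeyEvent:
    t: float  # seconds since the start of the session
    key: str  # e.g. "a", "BACKSPACE", "!" (hypothetical encoding)


# Assumption: which characters count as "special symbols".
SPECIAL = set("!?@#$%&*")


def typing_features(events: list[KeyEvent], accel_magnitudes: list[float]) -> dict[str, float]:
    """Summarize one typing session as a few numeric features."""
    if len(events) < 2:
        raise ValueError("need at least two key events")
    n = len(events)
    duration = events[-1].t - events[0].t
    return {
        # keystrokes per second, measured over the intervals between key presses
        "keys_per_sec": (n - 1) / duration if duration > 0 else 0.0,
        "backspace_rate": sum(e.key == "BACKSPACE" for e in events) / n,
        "special_rate": sum(e.key in SPECIAL for e in events) / n,
        # spread of accelerometer readings as a crude proxy for how much the device shakes
        "shake": statistics.pstdev(accel_magnitudes) if accel_magnitudes else 0.0,
    }
```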

The prototype system, to be presented in Las Vegas next week at the Consumer Communications and Networking Conference, is designed to work as part of a Twitter client on an Android-based Samsung Galaxy S II. It enables people in a social network to view symbols alongside tweets that indicate that person's emotional state. But there are many more potential applications, says Lee. The system could trigger different ringtones on a phone to convey the caller's emotional state or cheer up someone who's feeling low. "The smart phone might show a funny cartoon to make the user feel better," he says.

Further down the line, this sort of emotion detection is likely to have a broader appeal, says Lee. "Emotion recognition technology will be an entry point for elaborate context-aware systems for future consumer electronics devices or services," he says. "If we know the emotion of each user, we can provide more personalized services."

Samsung's system has to be trained to work with each individual user. During this stage, whenever the user tweets something, the system records a number of easily obtained variables, including actions that might reflect the user's emotional state, as well as contextual cues, such as the weather or lighting conditions, that can affect mood, says Lee. The subject also records his or her emotion at the time of each tweet. This is all fed into a type of probabilistic machine-learning algorithm known as a Bayesian network, which analyzes the data to identify correlations between different emotions and the user's behavior and context.
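The article doesn't give the structure of Samsung's network, so the Python sketch below swaps in the simplest Bayesian network there is: naive Bayes, in which each (discretized) behaviour or context variable depends only on the self-reported emotion. Feature names, values and training rows are invented for illustration. The point is only to show how labelled tweets can become a per-user emotion guess.

```python
import math
from collections import Counter, defaultdict


class NaiveBayesEmotion:
    """Naive Bayes: a Bayesian network where every feature depends only on the emotion label."""

    def __init__(self, alpha: float = 1.0):
        self.alpha = alpha  # Laplace smoothing, so unseen feature values don't zero out a label
        self.label_counts: Counter = Counter()
        self.feature_counts: defaultdict = defaultdict(Counter)  # (feature, label) -> value counts
        self.feature_values: defaultdict = defaultdict(set)  # feature -> values seen in training

    def fit(self, samples: list[dict[str, str]], labels: list[str]) -> "NaiveBayesEmotion":
        """Training phase: each tweet contributes its features and the user's reported emotion."""
        for features, label in zip(samples, labels):
            self.label_counts[label] += 1
            for name, value in features.items():
                self.feature_counts[(name, label)][value] += 1
                self.feature_values[name].add(value)
        return self

    def predict(self, features: dict[str, str]) -> str:
        """Return the emotion with the highest (log) posterior probability."""
        total = sum(self.label_counts.values())
        best_label, best_score = None, -math.inf
        for label, count in self.label_counts.items():
            score = math.log(count / total)  # prior P(emotion)
            for name, value in features.items():
                counts = self.feature_counts[(name, label)]
                k = len(self.feature_values[name])
                # smoothed likelihood P(feature value | emotion)
                score += math.log((counts[value] + self.alpha) / (count + self.alpha * k))
            if score > best_score:
                best_label, best_score = label, score
        return best_label
```

With only a handful of labelled tweets per user, such a model stays rough. That is consistent with Lee's remark that more training data should improve the results.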

The accuracy is still pretty low, says Lee, but then the technology is still at a very early experimental stage, and has only been tested using inputs from a single user. Samsung won't say whether it plans to commercialize this technology, but Lee says that with more training data, the process can be greatly improved. "Through this, we will be able to discover new features related to emotional states of users or ways to predict other affective phenomena like mood, personality, or attitude of users," he says.

Reading emotion indirectly through normal cell phone use and context is a novel approach, and, despite the low accuracy, one worth pursuing, says Rosalind Picard, founder and director of MIT's Affective Computing Research Group, and cofounder of Affectiva, which last year launched a commercial product to detect human emotions. "There is a huge growing market for technology that can help businesses show higher respect for customer feelings," she says. "Recognizing when the customer is interested or bored, stressed, confused, or delighted is a vital first step for treating customers with respect," she says.

Copyright Technology Review 2012.

Personal comment:

While we may doubt a little how accurately so few inputs can determine someone's emotions, context-aware (a step further than "geography"-aware) devices seem to be the way to go for technology manufacturers.

Transposed into the field of environment design and architecture (sensors, probes), it could lead to designs where there is a sort of collaboration between the environment and its users (participants? an ecology of co-evolving functions?). We mentioned this in a previous post (The measured life): "could we think of a data based architecture (open data from health and Real Life monitoring --in addition to environmental sensors and online data--) that would collaborate with those health inputs?" And now with those emotional inputs? A sensitive environment?

We worked on something related a few years ago within the frame of a research project between EPFL (Swiss Federal Institute of Technology, Lausanne) and ECAL (University of Art and Design, Lausanne); it was a small-scale robotized proof of concept rather than a full environment, though: The Rolling Microfunctions. The idea in this case was not that "function follows context", but rather that "function understands context and triggers open, sometimes disruptive, patterns of usage, based on algorithmic and semi-autonomous rules"... Functions as a creative environment. Sort of... The result was a bit disappointing, of course, but we thought the idea behind it was interesting.

And of course, all these projects should be backed by a very rigorous policy on users', functions' and spaces' data: it belongs either to the user (privacy), to the public (open data) or, why not, to the space..., but not to a company (googlacy, facebookacy, twitteracy, etc.) unless the space belongs to them, as in a shop (see "La ville franchisée", by David Mangin).

Wednesday, April 20. 2011

Via @joelvacheron

-----

Documentary made after William Foote Whyte's seminal book "Street Corner Society".

Tuesday, March 29. 2011

Via GOOD

-----

by Alex Goldmark

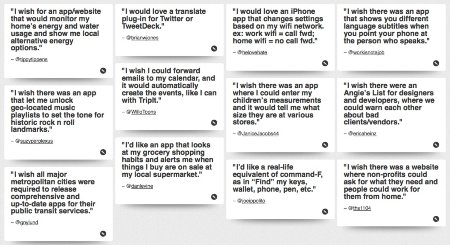

Sure, it seems like there's an app for everything. But we're not quite there yet. There are still many practical problems with seemingly simple solutions that have yet to materialize, like this:

I wish someone would use the foursquare API to build a cab-sharing app to help you split a ride home at the end of the night.

Or this:

I wish there was a website where non-profits could ask for what they need and people could work for them from home.

Meet the Internet Wishlist, a "suggestion box for the future of technology." Composed of hopeful tweets from people around the world, the site ends up reading like a mini-blog of requests for mobile apps, basic grand dreaming, and tech-focused humor posts. All kidding aside, though, creator Amrit Richmond hopes the list will ultimately lead to a bit of demand-driven design.

To contribute, people post an idea on Twitter and include #theiwl in their tweet. Richmond then collects the most "forward thinking" onto the Wishlist website. (Full disclosure, she's a former Art Editor here at GOOD and now a creative strategist for nonprofits and start-ups.)
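The collection step is simple enough to sketch. The following Python filter assumes the tweets are already available as plain strings; fetching them from Twitter is left out. It keeps only posts tagged #theiwl as a whole hashtag. Picking the most "forward thinking" ones remains Richmond's editorial job.

```python
import re

# "#theiwl" as a whole hashtag, any capitalization; "#theiwlfan" or "abc#theiwl" don't count.
HASHTAG = re.compile(r"(?<!\w)#theiwl\b", re.IGNORECASE)


def wishlist_candidates(tweets: list[str]) -> list[str]:
    """Keep only tweets that carry the wishlist hashtag."""
    return [tweet for tweet in tweets if HASHTAG.search(tweet)]
```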

"I hope the project inspires entrepreneurs, developers and designers to innovate and build the products and features that people want," Richmond says. "I see so many startups try and solve problems that don't need solving. ... I wanted to uncover and show what kinds of day-to-day problems people have that they want technology to solve for them."

The list already has an active community of posters, who are quick to point out when there's already an app or website fulfilling a poster's wish. For instance, several commenters pointed out that people can already connect with nonprofits and do micro-volunteering from home through Sparked.

To see the full wishlist or subscribe, go here.

What would you ask for?

Wednesday, March 16. 2011

Via TreeHugger

-----

Image: Gear Diary

The Digital Migration Continues to Change the Face of Consumption

A new study from the Poynter Institute reveals that by the end of 2010, more people were reading their news online than in traditional newspapers: 34% said they read news online, while 31% read the paper. A noted shift in advertising revenues supported the same claim -- more money went to online sources than to daily dead tree editions, too. This story serves to highlig...

Read the full story on TreeHugger
