Sticky Postings

By fabric | ch

-----

As we still lack a decent search engine on this blog and don't use a "tag cloud", this post could help you navigate through the updated content on | rblg (as of 09.2023), via all its tags!

FIND BELOW ALL THE TAGS THAT CAN BE USED TO NAVIGATE IN THE CONTENTS OF | RBLG BLOG:

(to be seen just below if you're navigating on the blog's html pages or here for rss readers)

--

Note that we had to hit the "pause" button on our reblogging activities a while ago, mainly because we ran out of time, but also because we received complaints from a major image stock company about some images that were displayed on | rblg. We felt this activity was still "fair use": we've never made any money or advertised on this site.

Nevertheless, we continue to publish from time to time information on the activities of fabric | ch, or content directly related to its work (documentation).

Thursday, January 19. 2017

Note: let's "start" this new (delusional?) year with this short video about the ways "they" see things, and us. They? The "machines" of course, the bots, the algorithms...

An interesting reassembled trailer posted by Matthew Plummer-Fernandez on his Tumblr #algopop, which documents the "appearance of algorithms in popular culture". Matthew was with us back in 2014 to collaborate on a research project at ECAL that worked around this idea of bots in design (and that will soon end, by the way).

Will this technological future become "delusional" as well if we don't care enough, as essayist Eric Sadin points out in his recent book "La silicolonisation du monde" (in French only at this time)?

Possibly... It is without doubt up to each of us to act, in the everyday life we share with our fellow human beings!

Via #algopop

-----

An algorithm watching a movie trailer by Støj

Everything but the detected objects is removed from the trailer of The Wolf of Wall Street. The software is powered by YOLO object detection, which has been used for similar experiments.
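To make the idea concrete, here is a minimal Python sketch of the masking step only: given a frame and a list of bounding boxes, it blanks every pixel that falls outside the boxes. It is not Støj's code and it does not run YOLO. The boxes are hard-coded stand-ins for a detector's output, and the names `keep_only_detections`, `Frame` and `Box` are hypothetical.

```python
from typing import List, Tuple

# Hypothetical types: a frame is a grid of grayscale values, and a box is
# (x_min, y_min, x_max, y_max) with the max coordinates exclusive.
Frame = List[List[int]]
Box = Tuple[int, int, int, int]


def keep_only_detections(frame: Frame, boxes: List[Box], background: int = 0) -> Frame:
    """Return a copy of the frame where pixels outside every box are blanked."""
    height = len(frame)
    width = len(frame[0]) if height else 0
    # Start from an empty (background) frame and copy back the detected regions.
    out = [[background] * width for _ in range(height)]
    for x0, y0, x1, y1 in boxes:
        for y in range(max(0, y0), min(height, y1)):
            for x in range(max(0, x0), min(width, x1)):
                out[y][x] = frame[y][x]
    return out


# A 6x4 frame filled with the value 9, and one assumed detection box.
frame = [[9] * 6 for _ in range(4)]
boxes = [(1, 1, 3, 3)]
masked = keep_only_detections(frame, boxes)
for row in masked:
    print(row)
```

In a real pipeline the boxes would come from an object detector run on each video frame. Here they are fixed so that the masking logic can be read on its own.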

Thursday, June 21. 2012

Via MIT Technology Review

-----

By Duncan Graham-Rowe

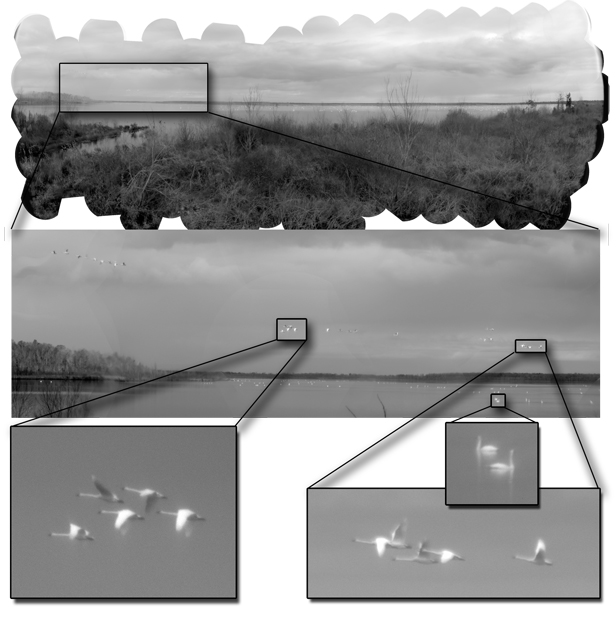

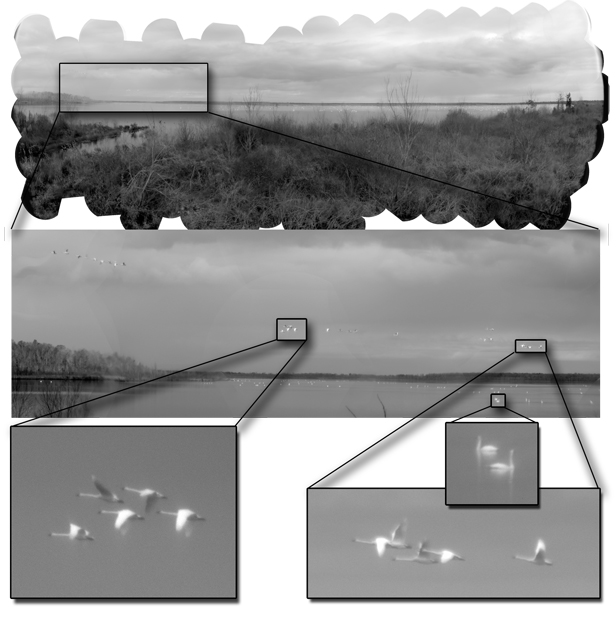

Beady eye: The Aware-2 gigapixel camera with some of its many micro-cameras.

Duke University

Imagine trying to spot an individual pixel in an image displayed across 1,000 high-definition TV screens. That's the kind of resolution a new kind of "compact" gigapixel camera is capable of producing.

Developed by David Brady and colleagues at Duke University in Durham, North Carolina, the new camera is not the first to generate images with more than a billion pixels (or gigapixel resolution). But it is the first with the potential to be scaled down to portable dimensions. Gigapixel cameras could not only transform digital photography, says Brady, but they could revolutionize image surveillance and video broadcasting.

Until now, gigapixel images have been generated either by creating very large film negatives and then scanning them at extremely high resolutions or by taking lots of separate digital images and then stitching them together into a mosaic on a computer. While both approaches can produce stunningly detailed images, the use of film is slow, and setting up hundreds of separate digital cameras to capture an image simultaneously is normally less than practical.

It is not possible to simply scale up a normal digital camera by increasing the number of light sensors to a billion, because this would require a lens so large that imperfections on its surface would cause distortion.

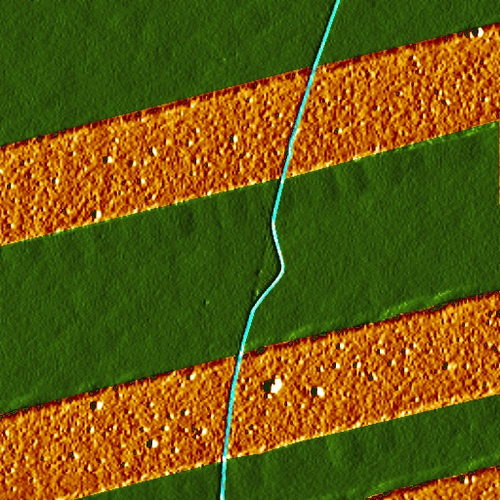

Zoom in: A gigapixel image of Pungo Lake.

Duke University

Brady's solution, a camera called AWARE, has 98 micro-cameras similar to those found in smart phones, each with 10-megapixel resolution. By positioning these high quality micro-cameras behind the lens, it becomes possible to process different portions of the image separately and to correct for known distortions. "We realized we could turn this into a parallel-processing problem," Brady says.
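As a rough check on the figures quoted in the article, the short Python sketch below multiplies out the sensor count and compares the total with the "1,000 high-definition TV screens" image used in its opening. The two HD resolutions (1280×720 and 1920×1080) are assumptions, since the article does not say which one it has in mind, and any overlap between the micro-cameras' fields of view is ignored.

```python
# Figures taken from the article.
MICRO_CAMERAS = 98
PIXELS_PER_CAMERA = 10_000_000  # "10-megapixel resolution"

# Assumed HD screen resolutions (not stated in the article).
HD_720P = 1280 * 720
HD_1080P = 1920 * 1080

total_pixels = MICRO_CAMERAS * PIXELS_PER_CAMERA
screens_720p = total_pixels / HD_720P
screens_1080p = total_pixels / HD_1080P

print(f"Total pixels: {total_pixels:,}")
print(f"Equivalent 720p screens: {screens_720p:.0f}")
print(f"Equivalent 1080p screens: {screens_1080p:.0f}")
```

Under these assumptions the total is just under a billion pixels, which corresponds to roughly a thousand 720p screens or a little under five hundred 1080p screens.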

The corrections are made possible by eight graphical processing units working in parallel. Breaking the problem up this way allows more complex techniques to be used to correct for optical aberrations, says Illah Nourbakhsh, a lead researcher on a similar project, called Gigapan, at Carnegie Mellon University.
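The decomposition described here, one independent correction per micro-camera image, can be sketched in a few lines of Python. This only illustrates the "parallel-processing problem" structure and is not the AWARE pipeline. The correction itself (a per-tile gain) is a hypothetical placeholder, and a thread pool with eight workers stands in for the eight graphics processors.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import List

Tile = List[List[float]]  # one micro-camera image as rows of pixel values


def correct_tile(tile: Tile, gain: float) -> Tile:
    """Hypothetical per-tile correction: scale every pixel by a known gain."""
    return [[pixel * gain for pixel in row] for row in tile]


def correct_all(tiles: List[Tile], gains: List[float], workers: int = 8) -> List[Tile]:
    """Correct every tile independently; results keep the input order."""
    with ThreadPoolExecutor(max_workers=workers) as pool:
        return list(pool.map(correct_tile, tiles, gains))


# 98 tiny dummy tiles, each with its own assumed calibration gain.
tiles = [[[1.0, 2.0], [3.0, 4.0]] for _ in range(98)]
gains = [1.0 + 0.01 * i for i in range(98)]
corrected = correct_all(tiles, gains)
print(len(corrected), corrected[0], corrected[97])
```

Because no tile depends on any other, the work can be spread over as many workers as are available. Python threads do not speed up this kind of arithmetic, so the sketch shows the shape of the problem rather than a performance gain.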

Eventually, as computer processing power improves, the hardware needed for such a camera should shrink. Portable gigapixel resolution could be useful in a number of ways. For example, additional pixels already help with image stabilization. "Also, if you increase the resolution, you increase the chances of automated recognition and artificial intelligence systems being able to accurately recognize things in the world," Nourbakhsh says.

The project is described in this week's issue of the journal Nature. In one gigapixel image of Pungo Lake in the Pocosin Lakes National Wildlife Refuge, Brady's group shows that individual swans in the extreme distance can be resolved. The picture was taken using a prototype camera capable of capturing and processing an entire image in just 18 seconds.

As graphical processors improve, so too will the speed of the camera, says Brady. And although the prototype currently stands 75 centimeters tall (about the size of a television studio camera), the device's size is dictated in large part by the equipment needed to cool the circuit boards.

"In the near term, we think this concept of a micro-camera imaging system is the future of cameras," says Brady. By the end of next year, his group hopes to be able to produce and sell 100 units a year, each costing around $100,000. This is comparable to the cost of a broadcast TV camera, he says.

Gigapixel cameras could eventually allow events to be covered in new ways. "Rather than showing a camera angle that the producer lets you see, the viewer will be able to see anything in the scene that they want," Brady says.

Wednesday, April 27. 2011

Via The Funambulist

-----

by Léopold Lambert

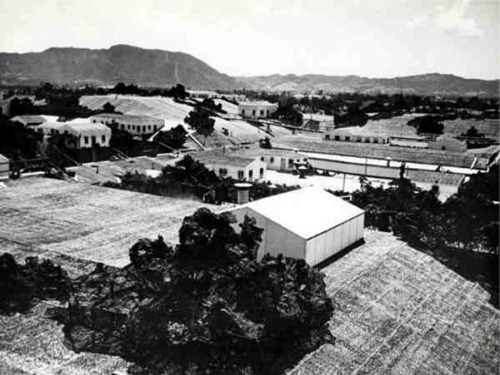

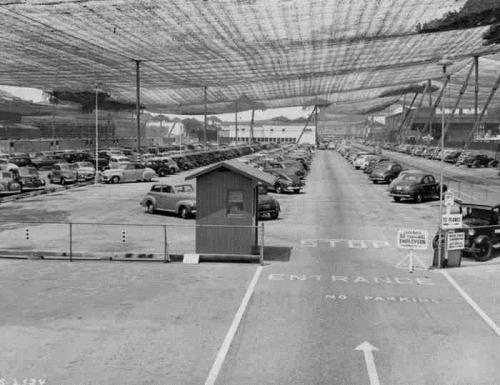

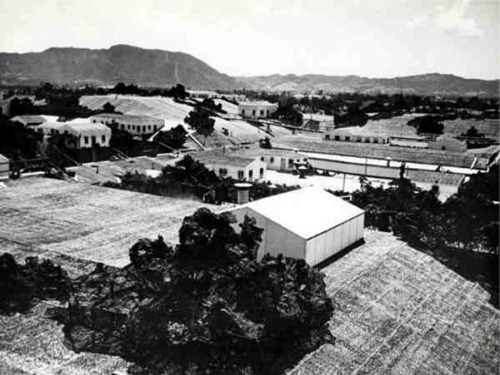

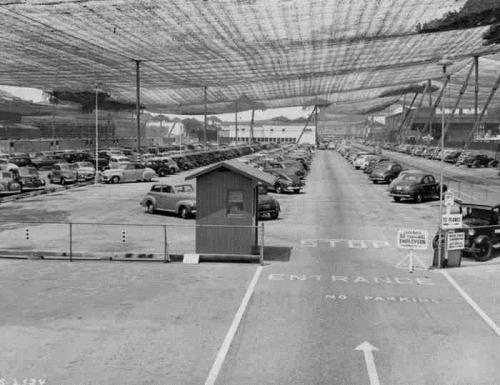

In 1942, after the United States entered the Second World War and amid fears of a Japanese threat to the Pacific coast, an entire aircraft plant and airport (the Lockheed Burbank) was camouflaged to hide it from potential Japanese airplanes. It is interesting to observe that, in order to do so, the US Army had to ask for the help of Hollywood studios (WWII is probably the beginning of a long history of exchanges between Hollywood and the US Army) to make this industrial landscape appear as a piece of suburbia. The vast aircraft plant therefore had to function under a porous canopy from which emerged, here and there, chimneys disguised as trees or fountains.

Thanks Martial. (see more on amusingplanet)

Friday, March 25. 2011

Via Creative Applications

-----

FABRICATE is an International Peer Reviewed Conference with supporting publication and exhibition to be held at The Bartlett School of Architecture in London from 15-16 April 2011. Discussing the progressive integration of digital design with manufacturing processes, FABRICATE will bring together pioneers in design and making within architecture, construction, engineering, manufacturing, materials technology and computation.

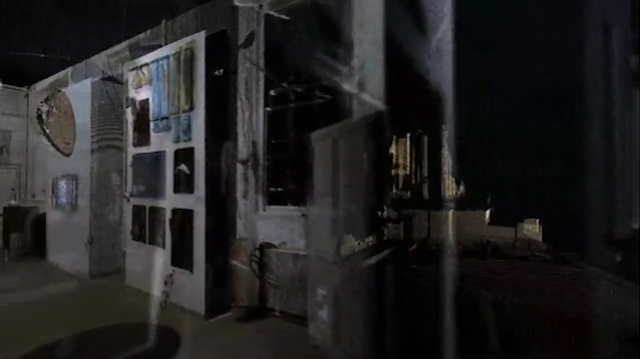

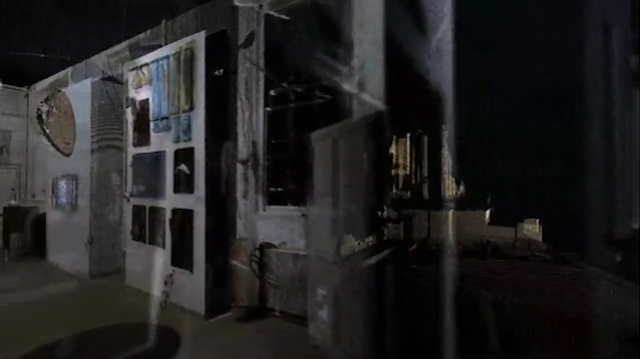

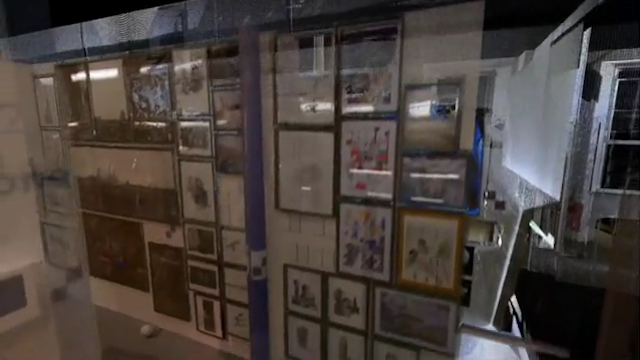

Part of the exhibition is the work of ScanLAB, a research group run by Matthew Shaw and William Trossell at the Bartlett School of Architecture that explores the potential role of 3D scanning in Architecture, Design and Making. In 2010, 48 hours of scanning produced 64 scans of the Slade school’s entire exhibition space. These have been compiled to form a complete 3D replica of the temporary show which has been distilled into a navigable animation and a series of ‘standard’ architectural drawings.

The work becomes a confused collage of hours of delicately created lines and forms set within a feature-perfect representation of the exhibition space. Sometimes a model or image stands out as identifiable; more often a sketch merges into a model and an exhibition stand, creating a blurred hybrid of designs and authors. These drawings represent the closest record to an as-built drawing set for the entire exhibition and an ‘as was’ representation of the Bartlett’s year.

The 3D model was produced using a Faro Photon 120 laser scanner ($40k). Software that enables navigation is Pointools, generic point cloud model software that allows for some of the largest point cloud models – multi-billion point datasets.

For more information on FABRICATE, see http://www.fabricate2011.org

Exhibition Private View

6pm – 14th April 2011

Bartlett School of Architecture Gallery

Wates House, 22 Gordon Street

London WC1H 0QB

For tickets, see fabricate2011.org/registration/

(Thanks Ruairi)

See also Fragments of time and space recorded with Kinect+SLR on NYC Subway … and CITY OF HOLES on bldgblog

Personal comment:

Usually I'm not a big fan of realistic 3D architecture, but I find this "in-between reality" of an incomplete or imperfect scan quite interesting (camera movements excepted...). It is as if the architecture were half appearing, or half disappearing, in an "in-between time zone".

Via MIT Technology Review

-----

With a few snapshots, you can build a detailed virtual replica.

By Tom Simonite

Getting all the angles: Microsoft researcher Johannes Kopf demonstrates a cell phone app that can capture objects in 3-D.

Credit: Microsoft

Capturing an object in three dimensions needn't require the budget of Avatar. A new cell phone app developed by Microsoft researchers can be sufficient. The software uses overlapping snapshots to build a photo-realistic 3-D model that can be spun around and viewed from any angle.

"We want everybody with a cell phone or regular digital camera to be able to capture 3-D objects," says Eric Stollnitz, one of the Microsoft researchers who worked on the project.

To capture a car in 3-D, for example, a person needs to take a handful of photos from different viewpoints around it. The photos can be instantly sent to a cloud server for processing. The app then downloads a photo-realistic model of the object that can be smoothly navigated by sliding a finger over the screen. A detailed 360-degree view of a car-sized object needs around 40 photos; a smaller object like a birthday cake would need 25 or fewer.

If captured with a conventional camera instead of a cell phone, the photos have to be uploaded onto a computer for processing in order to view the results. The researchers have also developed a Web browser plug-in that can be used to view the 3-D models, enabling them to be shared online. "You could be selling an item online, taking a picture of a friend for fun, or recording something for insurance purposes," says Stollnitz. "These 3-D scans take up less bandwidth than a video because they are based on only a few images, and are also interactive."

To make a model from the initial snapshots, the software first compares the photos to work out where in 3-D space they were taken from. The same technology was used in a previous Microsoft research project, PhotoSynth, that gave a sense of a 3-D scene by jumping between different views (see video). However, PhotoSynth doesn't directly capture the 3-D information inside photos.

"We also have to calculate the actual depth of objects from the stereo effect," says Stollnitz, "comparing how they appear in different photos." His software uses what it learns through that process to break each image apart and spread what it captures through virtual 3-D space (see video, below). The pieces from different photos are stitched together on the fly as a person navigates around the virtual space to generate his current viewpoint, creating the same view that would be seen if he were walking around the object in physical space.
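The depth-from-stereo idea Stollnitz mentions can be illustrated with the textbook relation for two parallel pinhole cameras: depth = focal length × baseline / disparity, where the disparity is how far a point shifts between the two photos. The Python sketch below is a minimal illustration of that relation, not the Microsoft researchers' method. The function name and the numeric values are hypothetical.

```python
def depth_from_disparity(focal_px: float, baseline_m: float, disparity_px: float) -> float:
    """Depth in meters for two parallel pinhole cameras.

    focal_px:     focal length expressed in pixels (assumed known)
    baseline_m:   distance between the two camera positions, in meters
    disparity_px: horizontal shift of the same point between the two photos
    """
    if disparity_px <= 0:
        raise ValueError("disparity must be positive for a point in front of the cameras")
    return focal_px * baseline_m / disparity_px


# Assumed values: 1000 px focal length, photos taken half a meter apart.
near = depth_from_disparity(1000.0, 0.5, 100.0)  # point that shifts a lot
far = depth_from_disparity(1000.0, 0.5, 10.0)    # point that shifts a little
print(near, far)
```

A point that shifts a lot between the two photos is close to the camera, and one that barely moves is far away. That is the "stereo effect" the quote refers to.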

Look at the video HERE.

"This is an interesting piece of software," says Jason Hurst, a product manager with 3DMedia, which makes software that combines pairs of photos to capture a single 3-D view of a scene. However, using still photos does have its limitations, he points out. "Their method, like ours, is effectively time-lapse, so it can't deal with objects that are moving," he says.

3DMedia's technology is targeted at displays like 3-D TVs or Nintendo's new glasses-free 3-D handheld gaming device. But the 3-D information built up by the Microsoft software could be modified to display on such devices, too, says Hurst, because the models it builds contain enough information to create the different viewpoints for a person's eyes.

Hurst says that as more 3-D-capable hardware appears, people will need more tools that let them make 3-D content. "The push of 3-D to consumers has come from TV and computer device makers, but the content is lagging," says Hurst. "Enabling people to make their own is a good complement."

Copyright Technology Review 2011.

Wednesday, February 16. 2011

Via Eye blog

-----

by Eye contributor

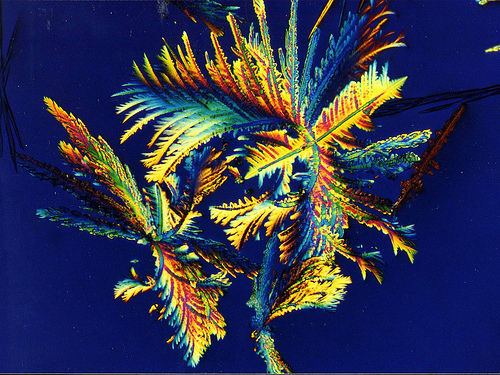

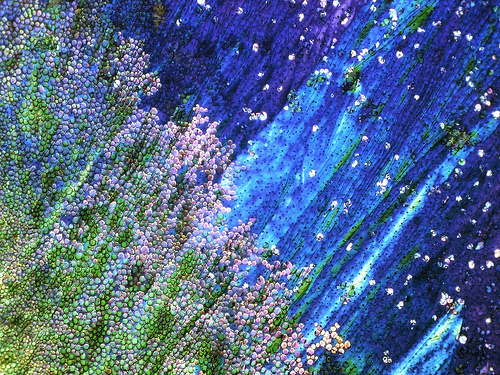

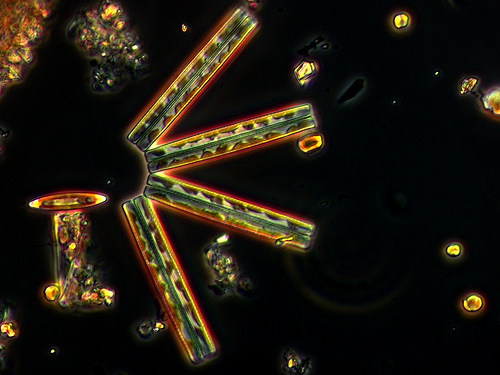

An astonishing collection of images of great beauty from the natural sciences went on show at Museum Boijmans Van Beuningen in Rotterdam last Saturday. Prof. Dr Hans Galjaard, curator of the exhibition, sought out examples from the following fields: physics, chemistry, geology, microbiology, marine biology, botany, fungal diversity, cell biology of higher organisms, human reproduction and astronomy.

He asked practitioners in more than 30 institutions if they had experienced what he calls a ‘Stendhal moment’, an instance of overwhelming beauty, during their research.

There are no physical artworks; scientific films and images are projected on the walls and ceilings.

Top: Marble, Verde D’Arno, polished surface 7×9 cm. Photograph: Dirk Wiersma, Utrecht.

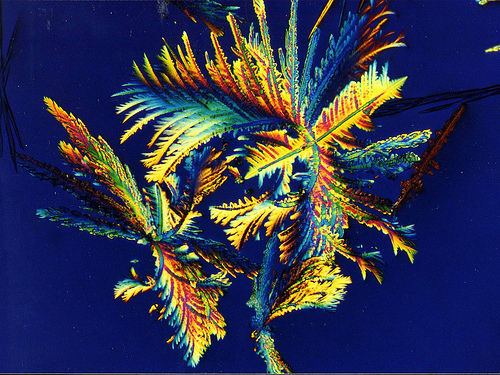

Above: Crystalline mixture including dextrin and potassium bicarbonate. Photograph: Loes Modderman.

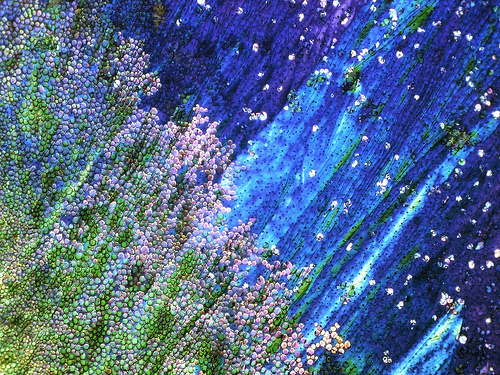

Below: Crystalline mixture of paracetamol and dextrin. Photograph: Loes Modderman.

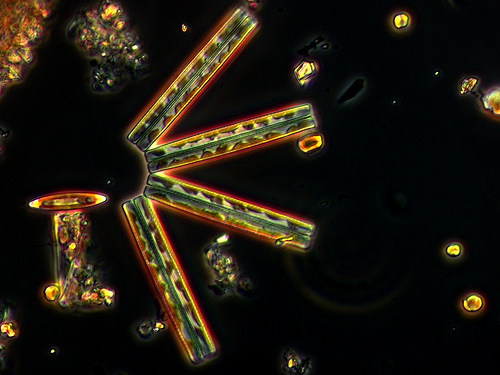

Above: Diatoms. Photograph: Wim van Egmond / Micropolitan Museum.

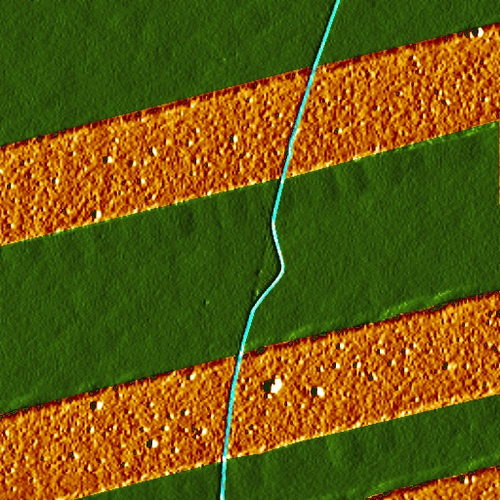

Below: A carbon nanotube (green) over two electrodes. Photograph: Cees Dekker, Nanotechnology Institute, Delft University of Technology.

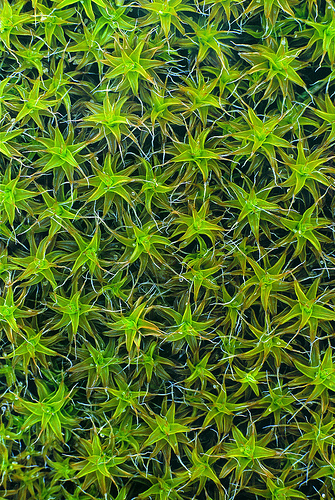

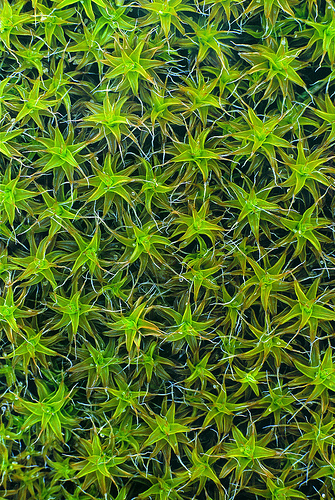

Above: Star moss. Photograph: Ruth van Crevel from Plantenparade (2001).

Below: Pollen grain. Photograph: Jan Muller, National Herbarium of the Netherlands.

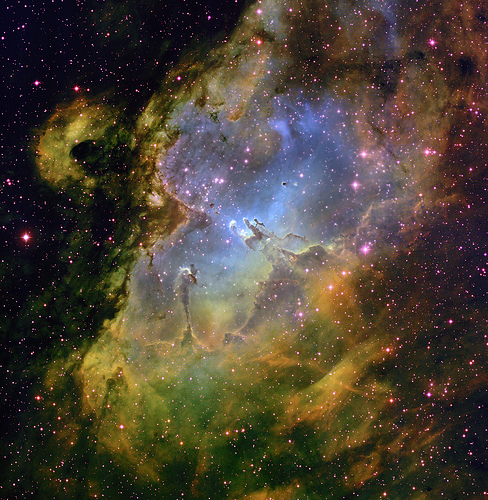

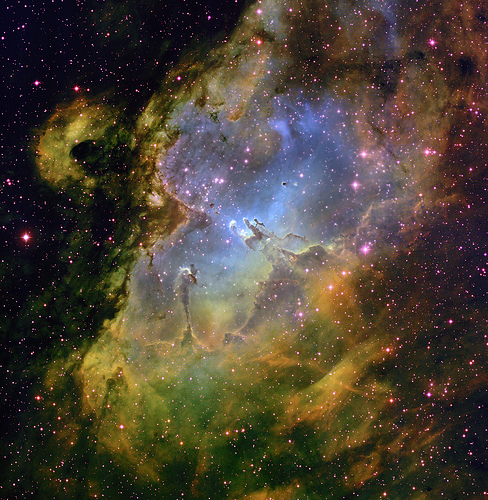

Below: Inside the Eagle Nebula. Photograph: T. A. Rector & B. A. Wolpa, National Optical Astronomy Observatory/Association of Universities for Research in Astronomy / National Science Foundation.

12 February > 5 June 2011

Beauty in Science

Museum Boijmans Van Beuningen

Museumpark 18

3015 CX Rotterdam

Netherlands

www.boijmans.nl

Friday, November 05. 2010

Via Art Press

-----

I just read the following lines from David Hockney (73 this year, and whose paintings have always been influenced by technologies: photography, photocopy, fax), in Art Press n° 372:

"(...). You can understand what's happening today much better if you see history the way I do. For 500 years the Church controlled society by reigning over images. With the advent of the mass media, it gradually lost its power, and media magnates circulated whatever images they want, and Hollywood has extended its empire around the world. Now images are undergoing another upheaval, marking the decline of newspaper and television. People have moved onto this [gesturing at his iPad]. The monopoly on the distribution of images has been shattered. Now I can circulate whatever I want for free. This new era also signals the changing nature of photography."

"In my book Secret Knowledge, I demonstrated that photography existed long before chemical development on paper. Art historians may dispute it but I don't mind: this is the result of observation by a painter, an insider's view. Why did Caravaggio invent Hollywood lighting? Because he used a whole system of lenses, concave mirror and a camera obscura to project faces and real objects onto the canvas. Vermeer, Van Eyck, Canaletto, Chardin and Ingres used these methods. The invention of the camera in 1837 was just a way to fix the projection on paper. That lasted 160 years and now it's over. Now it's the era of digital photography, manipulating images, and the come back of manual dexterity. The idea that photography is the most striking illustration of reality is outdated. In this country, possessing or looking at certain kind of photos will land you in jail. I ask you: how can anyone tell whether or not these photos have anything to do with reality? Has this point been discussed? No. Has it been talked about in the art world? No. People think the world is like photography, but the camera gives us an optical projection of the world. It sees the world in a spectacular way, whereas we perceive it psychologically.

No matter how good a photo may be, it doesn't haunt you the way a painting can. A good painting embraces ambiguities that can never be untangled; that's why it's so fascinating. (...) Painting will always be superior to photography in one respect: time, that juxtaposition of moments which is what makes a great painting so deep, rich and ambiguous. It has been said that the surface is all that matters. But that is to cancel what can be called the magic of art. The magic is the indeterminate part. I think the only way to renew art is to go back to nature. Nature is unlimited; it's foolish to say that we've seen it all."

Personal comment:

Can we still speak about "nature"? I mean a place where there's no wifi, no telecom networks and no communication: probably in the deep oceans, in foreign and/or inaccessible landscapes, or high in the mountains (but even the Himalayas got wifi recently, and Swiss mountains are all covered by Swisscom networks...). And do we have to speak about "manual dexterity" or "software dexterity"?

Nonetheless, this is a quite interesting quote from Mr. Hockney!

Wednesday, April 28. 2010

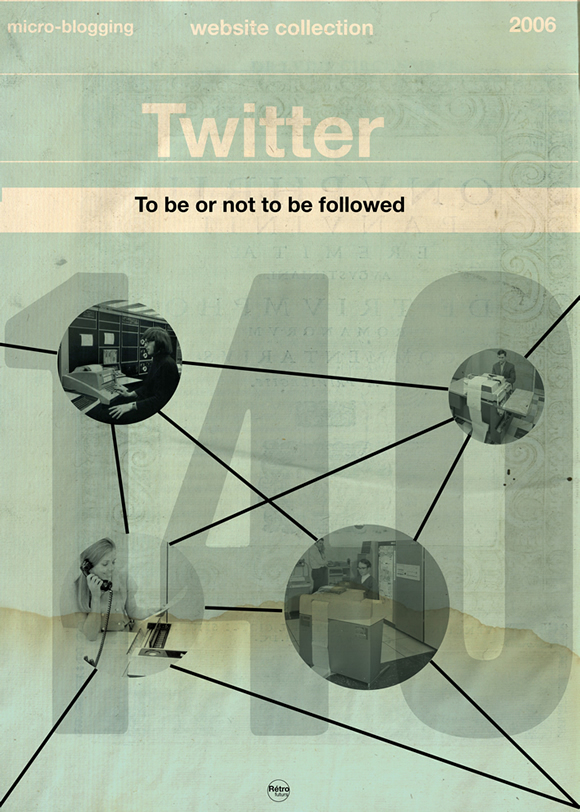

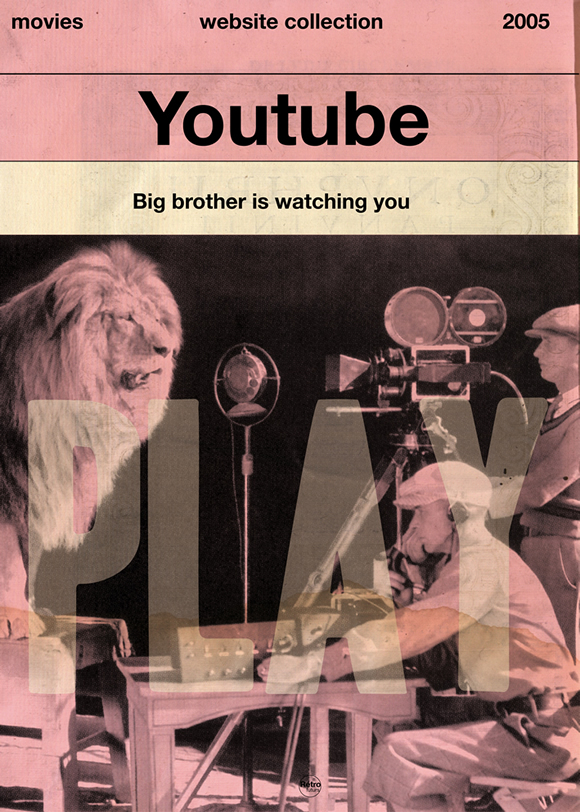

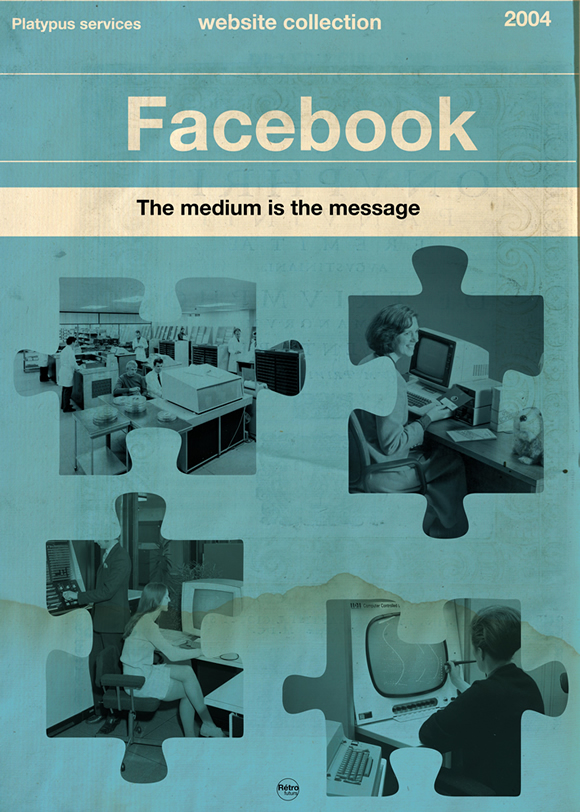

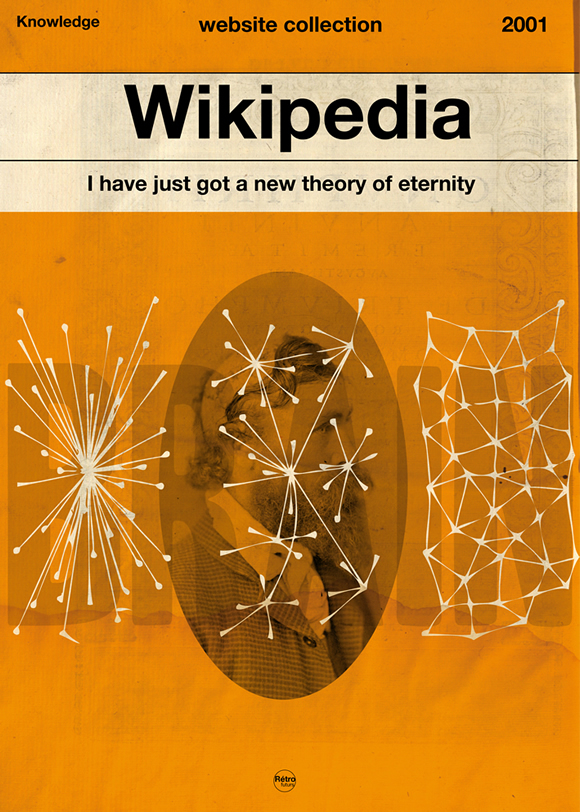

Via Retrofuturs

Flickr

MySpace

Posters available in 50×70 cm, A3 and A4:

A4: 10 euros each, 60 euros for the pack

A3: 20 euros each, 100 euros for the pack

50×70 cm: 55 euros each, 400 euros for the pack

All signed

Laser print

Monday, August 31. 2009

The Shard / London / Renzo Piano

Coruscant / George Lucas

Renzo Piano’s latest project, the Shard, has recently moved to the construction phase. The 1,016 ft high skyscraper will be the tallest building in Western Europe and will provide amazing views of London. The mixed-use tower, complete with offices, apartments, a hotel and spa, retail areas, restaurants and a 15-storey public viewing gallery, will sit adjacent to London Bridge station as part of a new development called London Bridge Quarter. Replacing the 1970s Southwark Tower on Bridge Street, the Shard is a welcome addition to the London skyline, and its central location near major transportation nodes will play a key role in allowing London to expand.


Known for his elegant, light and detail-oriented buildings, Piano has designed the Shard as several glass facets that incline inwards but do not meet at the top. Inspired by the towering church spires and the masts of ships that once anchored on the Thames, the Shard’s form was generated by the irregular site plan and is open to the sky to allow the building to breathe naturally.

Planned as a “vertical city” to address the city’s growing population and need to maximize space, the Shard’s program varies to provide a functional central structure for London. The ground level will include a public piazza with restaurants and cafes, in addition to areas for art installations. The 50,000 sqm of office spaces include naturally ventilated winter gardens while the 195 hotel rooms and exclusive apartments located on the upper floors showcase beautiful views. While the Shard offers luxurious spaces sure to be coveted by companies and residents, the building also caters to the public with viewing platforms on floors 68-72. Accessed directly from an entrance on the ground level, these viewing galleries are expected to attract over half a million visitors each year.

The mixed program is attractive to many and will allow the Shard to help London’s future development. The Shard is due for completion in 2012.

As seen on Inhabitat.

-----

Via ArchDaily

Personal comment:

I'm republishing this project by Renzo Piano (published on ArchDaily) not so much because the project itself interests me, but because there is a shift in the production of images for architecture toward science fiction (same tools = same images?).

Frankly, doesn't the first image immediately remind you of another image, already seen at the movies (which I therefore took the liberty of adding to the post)? For me it was immediate: the planet and city of Coruscant in Star Wars.

Come on... A few more buildings and some flying cars, and we'll be there!

Is there an urban "becoming-Star Wars" of London? After the retro "becoming-Hong Kong" of the hyper-postmodern Los Angeles of Blade Runner?
