Monday, June 13. 2016

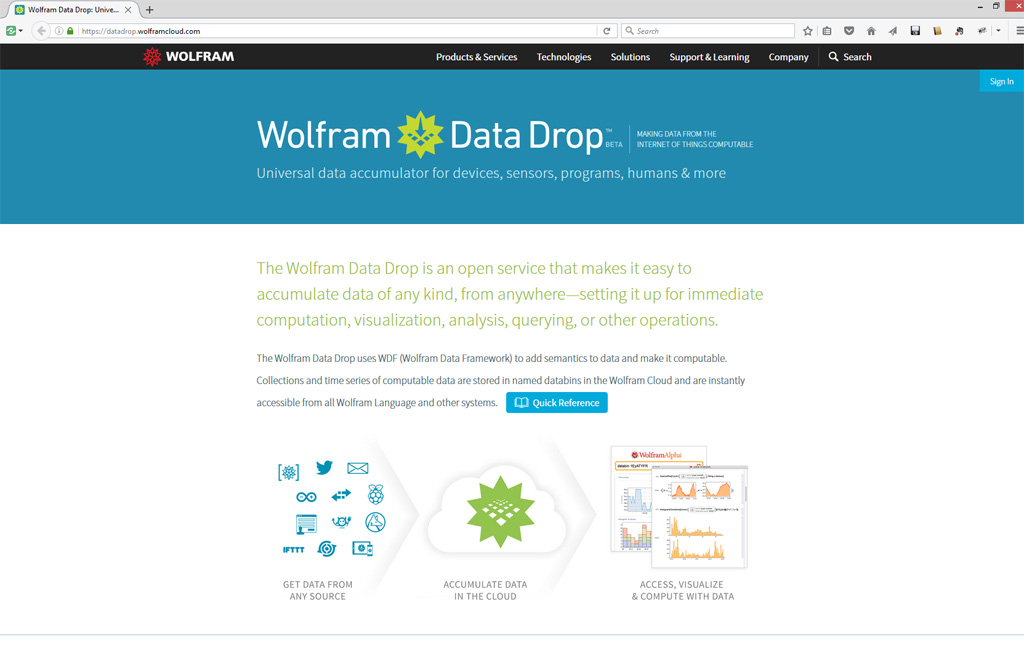

Note: after a few weeks of posting about the Universal Income, here comes the "Universal data accumulator for devices, sensors, programs, humans & more" by Wolfram (best known for the Wolfram Alpha computational engine and the earlier Mathematica libraries, on which most of their other services seem to be built).

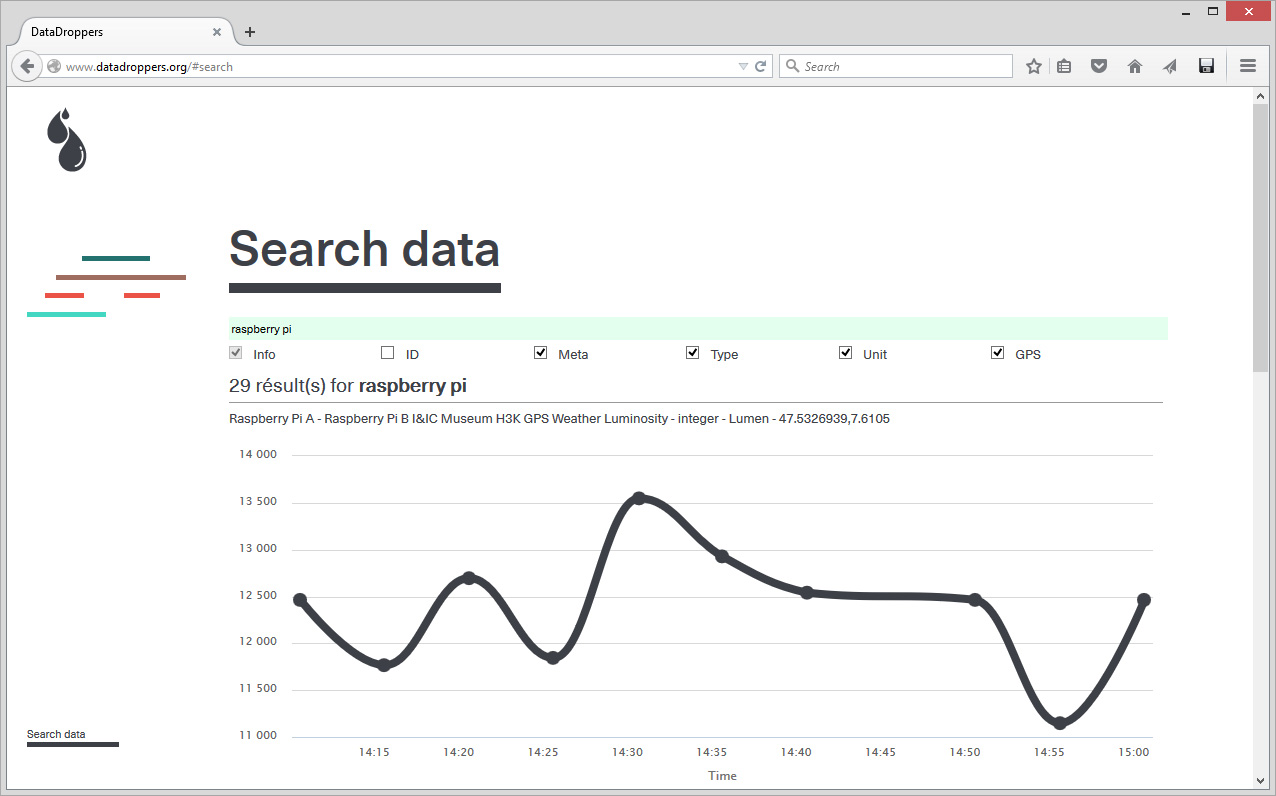

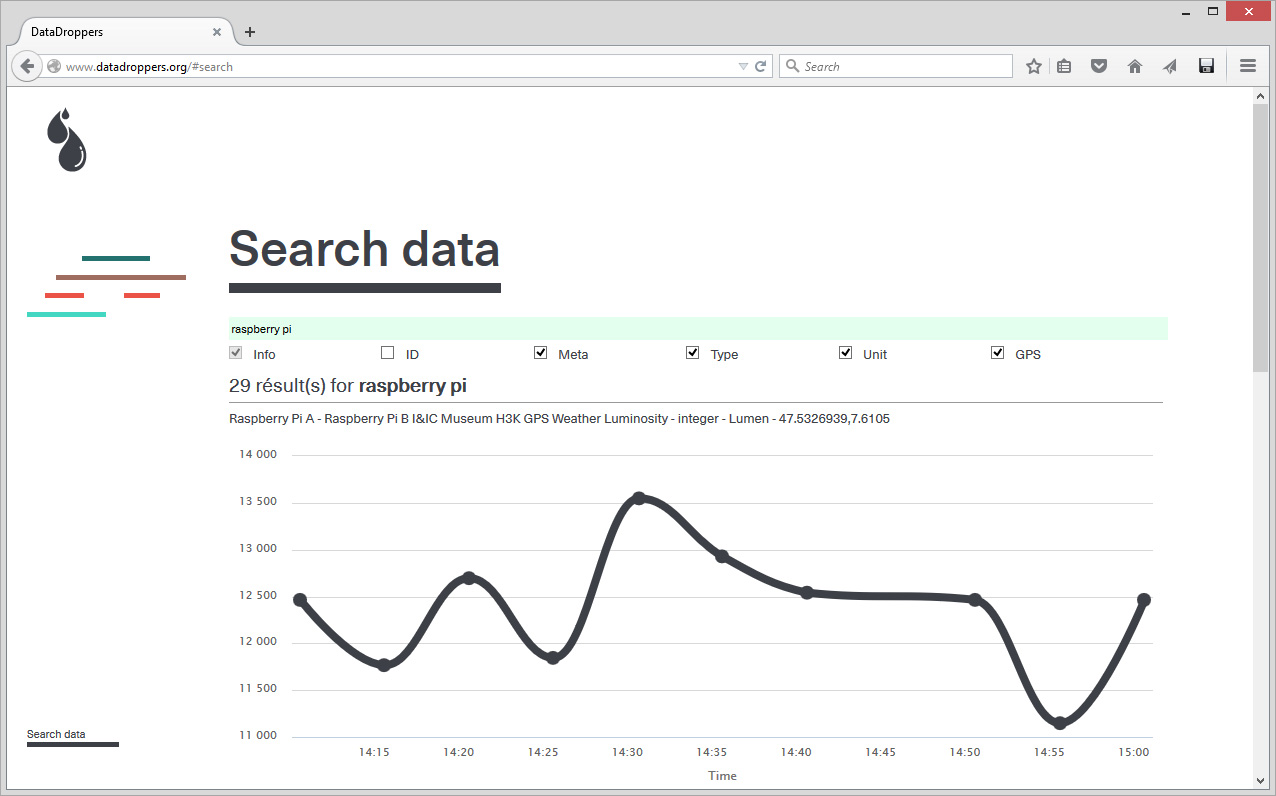

Funnily, we picked a very similar name for a very similar data service that we set up for ourselves and our friends last year, during an exhibition at H3K: Datadroppers (!), with a different set of references in mind (Drop City? --from which we borrowed the colors-- "Turn on, tune in, drop out"?). Our service is logically much more grassroots and less developed, but therefore quite light to use as well.

We developed this project around data dropping/picking with another architectural project in mind that I'll speak about in the coming days: Public Platform of Future-Past. The two were clearly and closely linked.

"Universal" is back in the loop as a keyword therefore... (I would rather adopt a different word for myself and the work we are doing though: "Diversal" --which is a word I've been using for 2 years now and naively thought I had "invented", but no...)

Via Wolfram

-----

"The Wolfram Data Drop is an open service that makes it easy to accumulate data of any kind, from anywhere—setting it up for immediate computation, visualization, analysis, querying, or other operations." - which looks more oriented towards data analysis than use in third party designs and projects.

Via Datadroppers

-----

"Datadroppers is a public and open data commune, it is a tool dedicated to data collection and sharing that tries to remain as simple, minimal and easy to use as possible." Direct and light data tool for designers, belonging to designers (fabric | ch) that use it for their own projects...

Wednesday, October 21. 2015

Note: suddenly speaking about web design, wouldn't it be time to start doing some interaction design on the web again? Aren't we in need of some "net art" approach, some weirder propositions than the too slick "responsive design" of a predictable "user-centered" or even "experience" design dogma? These kinds of complex web/interaction experiences have almost all vanished (remember Jodi?), to the point that there is now a vast experimental void for designers to tap into again!

Well, after the site that can only be browsed by one person at a time (with a poor visual design indeed), here comes the one that self-destructs. Could be a start... Btw, thinking about files, sites or contents, etc. that would self-destruct would probably help save lots of energy in data storage, hard drives and datacenters of all sorts, where these data sit like zombies.

Via GOOD

-----

By Isis Madrid

Former head of product at Flickr and Bitly, Matt Rothenberg recently caused an internet hubbub with his Unindexed project. The communal website continuously searched for itself on Google for 22 days, at which point, upon finding itself, it spontaneously combusted.

In addition to chasing its own tail on Google, Unindexed provided a platform for visitors to leave comments and encourage one another to spread the word about the website. According to Rothenberg, knowledge of the website was primarily passed on in the physical world via word of mouth.

“Part of the goal with the project was to create a sense of unease with the participants—if they liked it, they could and should share it with others, so that the conversation on the site could grow,” Rothenberg told Motherboard. “But by doing so they were potentially contributing to its demise via indexing, as the more the URL was out there, the faster Google would find it.”

When the website finally found itself on Google, the platform disappeared and this message replaced it:

“HTTP/1.1 410 Gone This web site is no longer here. It was automatically and permanently deleted after being indexed by Google. Prior to its deletion on Tue Feb 24 2015 21:01:14 GMT+0000 (UTC) it was active for 22 days and viewed 346 times. 31 of those visitors had added to the conversation.”

If you are interested in creating a similar self-destructing site, feel free to start with Rothenberg’s open source code.
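The behaviour described above (search for yourself, then answer "410 Gone" forever once found) can be outlined in a few lines. This is only a minimal sketch and not Rothenberg's actual code: the class name, the `search_fn` callback (standing in for a real query to a search engine) and the example URL are all assumptions made for illustration.

```python
from datetime import datetime, timezone

GONE_STATUS = 410  # HTTP status code "Gone"


class SelfDestructingSite:
    """Toy model of a site that deletes itself once a search engine finds it."""

    def __init__(self, url, search_fn):
        self.url = url
        # Hypothetical callback: takes a query string, returns a list of result URLs.
        self.search_fn = search_fn
        self.deleted_at = None
        self.views = 0
        self.comments = []

    def check_index(self, now=None):
        """Search for our own URL; wipe the content the first time it shows up."""
        if self.deleted_at is None and self.url in self.search_fn(self.url):
            self.deleted_at = now or datetime.now(timezone.utc)
            self.comments.clear()  # permanent deletion of the conversation
        return self.deleted_at is not None

    def handle_request(self):
        """Return (status, body) for a visitor."""
        if self.deleted_at is not None:
            return GONE_STATUS, "This web site is no longer here."
        self.views += 1
        return 200, "\n".join(self.comments)
```

In a real deployment `check_index` would be run on a timer and `search_fn` would wrap an actual search query; both are left abstract here.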

Monday, April 27. 2015

Note: we often complain on this blog about the centralization in progress (through the actions of corporations) within the "networked world", which is therefore less and less "horizontal" or distributed (and then more pyramidal, proprietary, etc.)

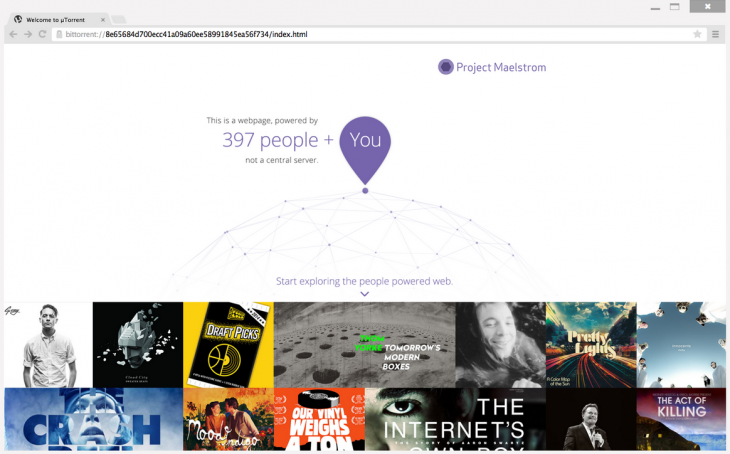

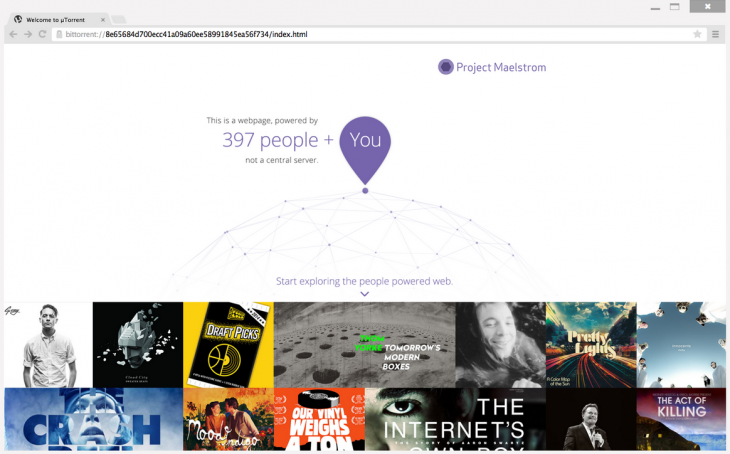

Here comes an interesting initiative by BitTorrent, following their previous distributed file storage/"cloud" system (Sync): a new browser, Maelstrom. This is interesting indeed, because instead of the "web image" --indexed web content-- being hosted on proprietary servers (i.e. the ones of Google), along with your browsing history and everything else, it seems that it will distribute this "web image" across as many "personal" devices as possible, therefore keeping it fully decentralized. Well... let's wait and see, as the way it will really work has not been explained very well so far (we can speculate that the copy of the content will indeed be distributed, but we don't know much about their business model --what about the metadata aggregated from all the users' web searches and usages? This could still be centralized... Yet note that they state it will not be).

Undoubtedly, the BitTorrent architecture is an interesting one when it comes to networked content and decentralization. Yet it is now also a big company... with several venture capital partners (including the ones of Facebook, Spotify, Dropbox, Jawbone, etc.). "Techno-capitalism" (as Eric Sadin names it) is unfortunately one of the biggest forces of centralization and commodification. So this is a bit puzzling, but let's not be pessimistic and trust their words for now, until we don't?

Via TheNextWeb via Computed·Blg

-----

Back in December, we reported on the alpha for BitTorrent’s Maelstrom, a browser that uses BitTorrent’s P2P technology in order to place some control of the Web back in users’ hands by eliminating the need for centralized servers.

Maelstrom is now in beta, bringing it one step closer to official release. BitTorrent says more than 10,000 developers and 3,500 publishers signed up for the alpha, and it’s using their insights to launch a more stable public beta.

Along with the beta comes the first set of developer tools for the browser, helping publishers and programmers to build their websites around Maelstrom’s P2P technology. And they need to – Maelstrom can’t decentralize the Internet if there isn’t any native content for the platform.

It’s only available on Windows at the moment but if you’re interested and on Microsoft’s OS, you can download the beta from BitTorrent now.

Friday, January 23. 2015

Note: Following my recent posts about the research project "Inhabiting & Interfacing the Cloud(s)" that I'm leading for ECAL, Nicolas Nova and I will be present at the next Lift Conference in Geneva (Feb. 4-6 2015) for a talk combined with a workshop and a Skype session with EPFL (a workshop related to the I&IC research project will be on the finish line at EPFL –Prof. Dieter Dietz’s ALICE Laboratory– on the day we’ll present in Geneva). If you plan to take part in Lift 15, please come say "hello" and exchange about the project.

Via the Lift Conference & iiclouds.org

-----

Inhabiting and Interfacing the Cloud(s)

Fri, Feb. 06 2015 – 10:30 to 12:30

Room 7+8 (Level 2)

Architect (EPFL), founding member of fabric | ch and Professor at ECAL

Principal at Near Future Laboratory and Professor at HEAD Geneva

Workshop description : Since the end of the 20th century, we have been seeing the rapid emergence of “Cloud Computing”, a new constructed entity that combines extensively information technologies, massive storage of individual or collective data, distributed computational power, distributed access interfaces, security and functionalism.

In a joint design research that connects the works of interaction designers from ECAL & HEAD with the spatial and territorial approaches of architects from EPFL, we’re interested in exploring the creation of alternatives to the current expression of “Cloud Computing”, particularly in its forms intended for private individuals and end users (“Personal Cloud”). It is to offer a critical appraisal of this “iconic” infrastructure of our modern age and its user interfaces, because to date their implementation has followed a logic chiefly of technical development, governed by the commercial interests of large corporations, and continues to be seen partly as a purely functional, centralized setup. However, the Personal Cloud holds a potential that is largely untapped in terms of design, novel uses and territorial strategies.

The workshop will be an opportunity to discuss these alternatives and work on potential scenarios for the near future. More specifically, we will address the following topics:

- How to combine the material part with the immaterial, mediatized part? Can we imagine the geographical fragmentation of these setups?

- Might new interfaces with access to ubiquitous data be envisioned that take nomadic lifestyles into account and let us offer alternatives to approaches based on a “universal” design? Might these interfaces also partake of some kind of repossession of the data by the end users?

- What setups and new combinations of functions need devising for a partly nomadic lifestyle? Can the Cloud/Data Center itself be mobile?

- Might symbioses also be developed at the energy and climate levels (e.g. using the need to cool the machines, which themselves produce heat, in order to develop living strategies there)? If so, with what users (humans, animals, plants)?

The joint design research Inhabiting & Interfacing the Cloud(s) is supported by HES-SO, ECAL & HEAD.

Interactivity : The workshop will start with a general introduction about the project, and move to a discussion of its implications, opportunities and limits. Then a series of activities will enable break-out groups to sketch potential solutions.

Wednesday, December 24. 2014

Note: Google Earth or literally and progressively Google's Earth? It could also be considered as the start of the privatization of the lower stratosphere, where up to now, no artifacts were permanently present.

Via MIT Technology Review

-----

Google X research lab boss Astro Teller says experimental wireless balloons will test delivering Internet access throughout the Southern Hemisphere by next year.

By Tom Simonite

Astro Teller & a Project Loon prototype sails skyward.

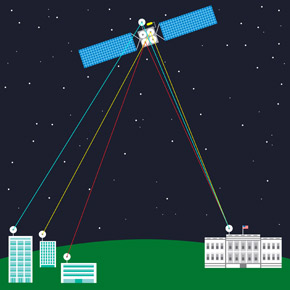

Within a year, Google is aiming to have a continuous ring of high-altitude balloons in the Southern Hemisphere capable of providing wireless Internet service to cell phones on the ground.

That’s according to Astro Teller, head of the Google X lab, the company established with the purpose of working on “moon shot” research projects. He spoke at MIT Technology Review’s EmTech conference in Cambridge today.

Teller said that the balloon project, known as Project Loon, was on track to meet the goal of demonstrating a practical way to get wireless Internet access to billions of people who don’t have it today, mostly in poor parts of the globe.

For that to work, Google would need a large fleet of balloons constantly circling the globe so that people on the ground could always get a signal. Teller said Google should soon have enough balloons aloft to prove that the idea is workable. “In the next year or so we should have a semi-permanent ring of balloons somewhere in the Southern Hemisphere,” he said.

Google first revealed the existence of Project Loon in June 2013 and has tested Loon Balloons, as they are known, in the U.S., New Zealand, and Brazil. The balloons fly at 60,000 feet and can stay aloft for as long as 100 days, their electronics powered by solar panels. Google’s balloons have now traveled more than two million kilometers, said Teller.

The balloons provide wireless Internet using the same LTE protocol used by cellular devices. Google has said that the balloons can serve data at rates of 22 megabits per second to fixed antennas, and five megabits per second to mobile handsets.

Google’s trials in New Zealand and Brazil are being conducted in partnership with local cellular providers. Google isn’t currently in the Internet service provider business—despite dabbling in wired services in the U.S. (see “Google Fiber’s Ripple Effect”)—but Teller said Project Loon would generate profits if it worked out. “We haven’t taken a dime of revenue, but if we can figure out a way to take the Internet to five billion people, that’s very valuable,” he said.

Monday, June 30. 2014

Note: I've already collected articles about this project, which, interestingly, would add a permanent human presence in a layer of the atmosphere (the stratosphere) where humans were not or very rarely present up to now. We also have to underline the fact that this will be an additional move toward the "brandification/privatization" of the upper levels of our atmosphere --stratosphere, thermosphere-- and outer space.

It is interesting indeed, with clever words like "bringing the internet to millions of people". Yet others have a more critical view of this strategic move by corporate interests: read Google Eyes in the Sky (by Will Oremus on Slate).

Via Next Nature

-----

Balloon-Powered Internet For Everyone

Both Google and Facebook have challenging intentions to bring the Internet to the next billion people, and while Zuckerberg’s dream involves drones with lasers, Google is planning to create a hot air balloon network.

With a system of balloons traveling on the edge of space, Project Loon will attempt to connect to the Internet the two-thirds of the world’s population that doesn’t have access to the Net. The balloons will float in the stratosphere, twice as high as airplanes and the weather. Users can connect to the network using a specific Internet antenna attached to their building.

“Project Loon uses software algorithms to determine where its balloons need to go, then moves each one into a layer of wind blowing in the right direction. By moving with the wind, the balloons can be arranged to form one large communications network,” as explained on the Project Loon website.

Currently, Google is still in the testing phase to learn more about wind patterns, and improve the balloon design. A step toward universal Internet connection?
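The steering idea in that quote (pick the wind layer that blows in the direction you want to go) can be illustrated with a very small sketch. It is not Google's algorithm: the function names, the altitudes and the wind bearings below are all hypothetical, and a real system would rely on forecast data rather than a fixed table.

```python
def angular_difference(a, b):
    """Smallest absolute difference between two compass bearings, in degrees."""
    d = abs(a - b) % 360
    return min(d, 360 - d)


def pick_wind_layer(layers, desired_bearing):
    """Return the altitude whose wind bearing is closest to the desired one.

    `layers` maps an altitude in km to the bearing (degrees) the wind
    blows toward at that altitude. All values are illustrative.
    """
    return min(
        layers,
        key=lambda altitude: angular_difference(layers[altitude], desired_bearing),
    )


# Hypothetical wind table: altitude (km) -> wind bearing (degrees).
example_layers = {18: 90, 19: 200, 20: 350}
best_altitude = pick_wind_layer(example_layers, desired_bearing=10)
```

With this made-up table, a balloon that wants to drift roughly north-north-east (bearing 10) would move to the 20 km layer, whose wind bearing of 350 is only 20 degrees off.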

Find more at Project Loon

Wednesday, May 28. 2014

Excerpt:

"In some ways, there’s a bug in the open source ecosystem. Projects start when developers need to fix a particular problem, and when they open source their solution, it’s instantly available to everyone. If the problem they address is common, the software can become wildly popular in a flash — whether there is someone in place to maintain the project or not. So some projects never get the full attention from developers they deserve. “I think that is because people see and touch Linux, and they see and touch their browsers, but users never see and touch a cryptographic library,” says Steve Marquess, one of the OpenSSL foundation’s partners."

Via Wired

-----

How Heartbleed Broke the Internet — And Why It Can Happen Again

Illustration: Ross Patton/WIRED

Stephen Henson is responsible for the tiny piece of software code that rocked the internet earlier this week (note: early last month).

The key moment arrived at about 11 o’clock on New Year’s Eve, 2011. With 2012 just minutes away, Henson received the code from Robin Seggelmann, a respected academic who’s an expert in internet protocols. Henson reviewed the code — an update for a critical internet security protocol called OpenSSL — and by the time his fellow Britons were ringing in the New Year, he had added it to a software repository used by sites across the web.

Two years would pass until the rest of the world discovered this, but this tiny piece of code contained a bug that would cause massive headaches for internet companies worldwide, give conspiracy theorists a field day, and, well, undermine our trust in the internet. The bug is called Heartbleed, and it’s bad. People have used it to steal passwords and usernames from Yahoo. It could let a criminal slip into your online bank account. And in theory, it could even help the NSA or China with their surveillance efforts.

It’s no surprise that a small bug would cause such huge problems. What’s amazing, however, is that the code that contained this bug was written by a team of four coders that has only one person contributing to it full-time. And yet Henson’s situation isn’t an unusual one. It points to a much larger problem with the design of the internet. Some of its most important pieces are controlled by just a handful of people, many of whom aren’t paid well — or aren’t paid at all. And that needs to change. Heartbleed has shown — so very clearly — that we must add more oversight to the internet’s underlying infrastructure. We need a dedicated and well-funded engineering task force overseeing not just online encryption but many other parts of the net.

The sad truth is that open source software — which underpins vast swathes of the net — has a serious sustainability problem. While well-known projects such as Linux, Mozilla, and the Apache web server enjoy hundreds of millions of dollars of funding, there are many other important projects that just don’t have the necessary money — or people — behind them. Mozilla, maker of the Firefox browser, reported revenues of more than $300 million in 2012. But the OpenSSL Software Foundation, which raises money for the project’s software development, has never raised more than $1 million in a year; its developers have never all been in the same room. And it’s just one example.

In some ways, there’s a bug in the open source ecosystem. Projects start when developers need to fix a particular problem, and when they open source their solution, it’s instantly available to everyone. If the problem they address is common, the software can become wildly popular in a flash — whether there is someone in place to maintain the project or not. So some projects never get the full attention from developers they deserve. “I think that is because people see and touch Linux, and they see and touch their browsers, but users never see and touch a cryptographic library,” says Steve Marquess, one of the OpenSSL foundation’s partners.

Another Popular, Unfunded Project

Take another piece of software you’ve probably never heard of called Dnsmasq. It was kicked off in the late 1990s by a British systems administrator named Simon Kelley. He was looking for a way for his Netscape browser to tell him whenever his dial-up modem had become disconnected from the internet. Scroll forward 15 years and 30,000 lines of code, and now Dnsmasq is a critical piece of network software found in hundreds of millions of Android mobile phones and consumer routers.

Kelley quit his day job only last year when he got a nine-month contract to do work for Comcast, one of several gigantic internet service providers that ships his code in its consumer routers. He doesn’t know where his paycheck will come from in 2015, and he says he has sympathy for the OpenSSL team, developing critical and widely used software with only minimal resources. “There is some responsibility to be had in writing software that is running as root or being exposed to raw network traffic in hundreds of millions of systems,” he says. Fifteen years ago, if there was a bug in his code, he’d have been the only person affected. Today, it would be felt by hundreds of millions. “With each release, I get more nervous,” he says.

Money doesn’t necessarily buy good code, but it pays for software audits and face-to-face meetings, and it can free up open-source coders from their day jobs. All of this would be welcome at the OpenSSL project, which has never had a security audit, Marquess says. Most of the Foundation’s money comes from companies asking for support or specific development work. Last year, only $2,000 worth of donations came in with no strings attached. “Because we have to produce specific deliverables that doesn’t leave us the latitude to do code audits, security reviews, refactoring: the unsexy activities that lead to a quality code base,” he says.

The problem is also preventing some critical technologies from being added to the internet. Jim Gettys says that a flaw in the way many routers are interacting with core internet protocols is causing a lot of them to choke on traffic. Gettys and a developer named Dave Taht know how to fix the issue — known as Bufferbloat — and they’ve started work on the solution. But they can’t get funding. “This is a project that has fallen through the cracks,” he says, “and a lot of the software that we depend on falls through the cracks one way or another.”

Earlier this year, the OpenBSD operating system — used by security conscious folks on the internet — nearly shut down, after being hit by a $20,000 power bill. Another important project — a Linux distribution for routers called Openwrt is also “badly underfunded,” Gettys says.

Gettys should know. He helped standardize the protocols that underpin the web and build core components of the Unix operating system, which now serves as the basis for everything from the iPhone to the servers that drive the net. He says there’s no easy answer to the problem. “I think there are ways to put money into the ecosystem,” he says, “but getting people to understand the need has been difficult.”

Eric Raymond, a coder and founder of the Open Source Initiative, agrees. “The internet needs a dedicated civil-engineering brigade to be actively hunting for vulnerabilities like Heartbleed and Bufferbloat, so they can be nailed before they become serious problems,” he said via email. After this week, it’s hard to argue with him.

Thursday, April 17. 2014

Via Computed·Blg via PCWorld

-----

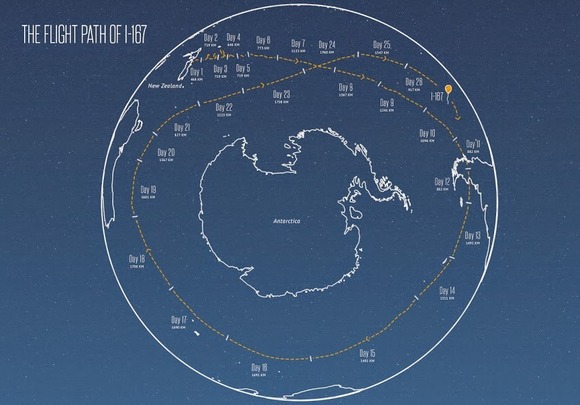

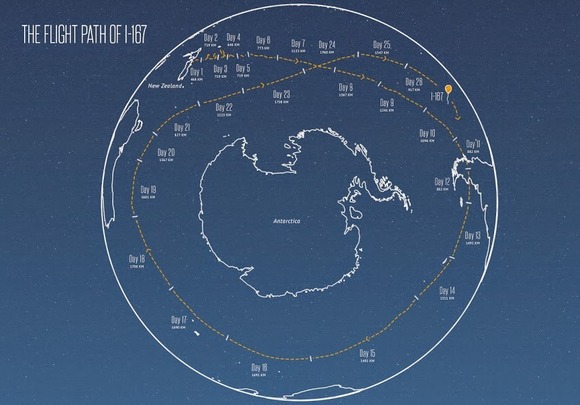

When Jules Verne wrote Around the World in Eighty Days, this probably isn’t what he had in mind: Google’s Project Loon announced last week one of its balloons had circumnavigated the Earth in 22 days.

Granted, we’re not talking a grand tour of the world here: The balloon flew in a loop over the open ocean surrounding Antarctica, starting at New Zealand. According to the Project Loon team, it was the latest accomplishment for the balloon fleet, which just achieved 500,000 kilometers of flight.

While it may seem like fun and games, Project Loon’s larger goal is to use high-altitude balloons to “connect people in rural and remote areas, help fill coverage gaps, and bring people back online after disasters.”

Currently, the project is test-flying balloons to learn more about wind patterns, and to test its balloon designs. In the past nine months, the project team has used data it’s accumulated during test flights to “refine our prediction models and are now able to forecast balloon trajectories twice as far in advance.”

It also modified the balloon’s air pump (which pumps air in and out of the balloon) to operate more efficiently, which in turn helped the balloon stay on course in this latest test run.

Project Loon’s next step toward universal Internet connection is to create “a ring of uninterrupted connectivity around the 40th southern parallel,” which it expects to pull off sometime this year.

Wednesday, March 05. 2014

Following my previous reblogs about The Real Privacy Problem & Snowden's Leaks.

Via The New Yorker

-----

Posted by Joshua Kopstein

In the nineteen-seventies, the Internet was a small, decentralized collective of computers. The personal-computer revolution that followed built upon that foundation, stoking optimism encapsulated by John Perry Barlow’s 1996 manifesto “A Declaration of the Independence of Cyberspace.” Barlow described a chaotic digital utopia, where “netizens” self-govern and the institutions of old hold no sway. “On behalf of the future, I ask you of the past to leave us alone,” he writes. “You are not welcome among us. You have no sovereignty where we gather.”

This is not the Internet we know today. Nearly two decades later, a staggering percentage of communications flow through a small set of corporations—and thus, under the profound influence of those companies and other institutions. Google, for instance, now comprises twenty-five per cent of all North American Internet traffic; an outage last August caused worldwide traffic to plummet by around forty per cent.

Engineers anticipated this convergence. As early as 1967, one of the key architects of the system for exchanging small packets of data that gave birth to the Internet, Paul Baran, predicted the rise of a centralized “computer utility” that would offer computing much the same way that power companies provide electricity. Today, that model is largely embodied by the information empires of Amazon, Google, and other cloud-computing companies. Like Baran anticipated, they offer us convenience at the expense of privacy.

Internet users now regularly submit to terms-of-service agreements that give companies license to share their personal data with other institutions, from advertisers to governments. In the U.S., the Electronic Communications Privacy Act, a law that predates the Web, allows law enforcement to obtain without a warrant private data that citizens entrust to third parties—including location data passively gathered from cell phones and the contents of e-mails that have either been opened or left unattended for a hundred and eighty days. As Edward Snowden’s leaks have shown, these vast troves of information allow intelligence agencies to focus on just a few key targets in order to monitor large portions of the world’s population.

One of those leaks, reported by the Washington Post in late October (2013), revealed that the National Security Agency secretly wiretapped the connections between data centers owned by Google and Yahoo, allowing the agency to collect users’ data as it flowed across the companies’ networks. Google engineers bristled at the news, and responded by encrypting those connections to prevent future intrusions; Yahoo has said it plans to do so by next year. More recently, Microsoft announced it would do the same, as well as open “transparency centers” that will allow some of its software’s source code to be inspected for hidden back doors. (However, that privilege appears to only extend to “government customers.”) On Monday, eight major tech firms, many of them competitors, united to demand an overhaul of government transparency and surveillance laws.

Still, an air of distrust surrounds the U.S. cloud industry. The N.S.A. collects data through formal arrangements with tech companies; ingests Web traffic as it enters and leaves the U.S.; and deliberately weakens cryptographic standards. A recently revealed document detailing the agency’s strategy specifically notes its mission to “influence the global commercial encryption market through commercial relationships” with companies developing and deploying security products.

One solution, espoused by some programmers, is to make the Internet more like it used to be—less centralized and more distributed. Jacob Cook, a twenty-three-year-old student, is the brains behind ArkOS, a lightweight version of the free Linux operating system. It runs on the credit-card-sized Raspberry Pi, a thirty-five dollar microcomputer adored by teachers and tinkerers. It’s designed so that average users can create personal clouds to store data that they can access anywhere, without relying on a distant data center owned by Dropbox or Amazon. It’s sort of like buying and maintaining your own car to get around, rather than relying on privately owned taxis. Cook’s mission is to “make hosting a server as easy as using a desktop P.C. or a smartphone,” he said.

Like other privacy advocates, Cook’s goal isn’t to end surveillance, but to make it harder to do en masse. “When you couple a secure, self-hosted platform with properly implemented cryptography, you can make N.S.A.-style spying and network intrusion extremely difficult and expensive,” he told me in an e-mail.

Persuading consumers to ditch the convenience of the cloud has never been an easy sell, however. In 2010, a team of young programmers announced Diaspora, a privacy-centric social network, to challenge Facebook’s centralized dominance. A year later, Eben Moglen, a law professor and champion of the Free Software movement, proposed a similar solution called the Freedom Box. The device he envisioned was to be a small computer that plugs into your home network, hosting files, enabling secure communication, and connecting to other boxes when needed. It was considered a call to arms—you alone would control your data.

But, while both projects met their fund-raising goals and drummed up a good deal of hype, neither came to fruition. Diaspora’s team fell into disarray after a disappointing beta launch, personal drama, and the appearance of new competitors such as Google+; apart from some privacy software released last year, Moglen’s Freedom Box has yet to materialize at all.

“There is a bigger problem with why so many of these efforts have failed” to achieve mass adoption, said Brennan Novak, a user-interface designer who works on privacy tools. The challenge, Novak said, is to make decentralized alternatives that are as secure, convenient, and seductive as a Google account. “It’s a tricky thing to pin down,” he told me in an encrypted online chat. “But I believe the problem exists somewhere between the barrier to entry (user-interface design, technical difficulty to set up, and over-all user experience) versus the perceived value of the tool, as seen by Joe Public and Joe Amateur Techie.”

One of Novak’s projects, Mailpile, is a crowd-funded e-mail application with built-in security tools that are normally too onerous for average people to set up and use—namely, Phil Zimmermann’s revolutionary but never widely adopted Pretty Good Privacy. “It’s a hard thing to explain…. A lot of peoples’ eyes glaze over,” he said. Instead, Mailpile is being designed in a way that gives users a sense of their level of privacy, without knowing about encryption keys or other complicated technology. Just as important, the app will allow users to self-host their e-mail accounts on a machine they control, so it can run on platforms like ArkOS.

“There already exist deep and geeky communities in cryptology or self-hosting or free software, but the message is rarely aimed at non-technical people,” said Irina Bolychevsky, an organizer for Redecentralize.org, an advocacy group that provides support for projects that aim to make the Web less centralized.

Several of those projects have been inspired by Bitcoin, the math-based e-money created by the mysterious Satoshi Nakamoto. While the peer-to-peer technology that Bitcoin employs isn’t novel, many engineers consider its implementation an enormous technical achievement. The network’s “nodes”—users running the Bitcoin software on their computers—collectively check the integrity of other nodes to ensure that no one spends the same coins twice. All transactions are published on a shared public ledger, called the “block chain,” and verified by “miners,” users whose powerful computers solve difficult math problems in exchange for freshly minted bitcoins. The system’s elegance has led some to wonder: if money can be decentralized and, to some extent, anonymized, can’t the same model be applied to other things, like e-mail?
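To make the "no one spends the same coins twice" idea more concrete, here is a minimal sketch of a shared ledger whose entries are linked by hashes and in which each coin can be spent only once. It is a toy under strong assumptions and not Bitcoin's actual protocol: there is no network, no mining puzzle and no digital signature, and the names (`ToyLedger`, `mint`, `spend`, `verify`) are invented for this example.

```python
import hashlib
import json


class ToyLedger:
    """Hash-linked list of entries in which each coin is spendable once."""

    def __init__(self):
        self.chain = []    # entries, each storing the hash of the previous one
        self.unspent = {}  # coin id -> current owner

    def _append(self, payload):
        prev_hash = self.chain[-1]["hash"] if self.chain else "0" * 64
        record = {"prev": prev_hash, **payload}
        body = json.dumps(record, sort_keys=True)
        record["hash"] = hashlib.sha256(body.encode()).hexdigest()
        self.chain.append(record)
        return record["hash"]

    def mint(self, owner):
        """Create a new coin for `owner` (stands in for a mining reward)."""
        coin = self._append({"type": "mint", "to": owner})
        self.unspent[coin] = owner
        return coin

    def spend(self, coin, sender, recipient):
        """Transfer a coin; return the new coin id, or None if rejected."""
        if self.unspent.get(coin) != sender:
            return None  # unknown coin, wrong owner, or already spent
        del self.unspent[coin]
        new_coin = self._append({"type": "spend", "coin": coin, "to": recipient})
        self.unspent[new_coin] = recipient
        return new_coin

    def verify(self):
        """Recompute every hash and check the link to the previous entry."""
        prev = "0" * 64
        for entry in self.chain:
            record = {k: v for k, v in entry.items() if k != "hash"}
            body = json.dumps(record, sort_keys=True)
            digest = hashlib.sha256(body.encode()).hexdigest()
            if entry["prev"] != prev or digest != entry["hash"]:
                return False
            prev = entry["hash"]
        return True
```

In this sketch a second attempt to spend the same coin returns `None`, and changing any past entry makes `verify()` fail because the stored hashes no longer match. In the real network, as the article notes, that checking is done collectively by many nodes rather than by one object in memory.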

Bitmessage is an e-mail replacement proposed last year that has been called the “the Bitcoin of online communication.” Instead of talking to a central mail server, Bitmessage distributes messages across a network of peers running the Bitmessage software. Unlike both Bitcoin and e-mail, Bitmessage “addresses” are cryptographically derived sequences that help encrypt a message’s contents automatically. That means that many parties help store and deliver the message, but only the intended recipient can read it. Another option obscures the sender’s identity; an alternate address sends the message on her behalf, similar to the anonymous “re-mailers” that arose from the cypherpunk movement of the nineteen-nineties.

Another ambitious project, Namecoin, is a P2P system almost identical to Bitcoin. But instead of currency, it functions as a decentralized replacement for the Internet’s Domain Name System. The D.N.S. is the essential “phone book” that translates a Web site’s typed address (www.newyorker.com) to the corresponding computer’s numerical I.P. address (192.168.1.1). The directory is decentralized by design, but it still has central points of authority: domain registrars, which buy and lease Web addresses to site owners, and the U.S.-based Internet Corporation for Assigned Names and Numbers, or I.C.A.N.N., which controls the distribution of domains.

The infrastructure does allow for large-scale takedowns, like in 2010, when the Department of Justice tried to seize ten domains it believed to be hosting child pornography, but accidentally took down eighty-four thousand innocent Web sites in the process. Instead of centralized registrars, Namecoin uses cryptographic tokens similar to bitcoins to authenticate ownership of “.bit” domains. In theory, these domain names can’t be hijacked by criminals or blocked by governments; no one except the owner can surrender them.
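A rough way to picture a registry in which "no one except the owner can surrender" a name is sketched below in Python. This is a toy, not Namecoin's implementation: `ToyNameRegistry` is hypothetical, the example name and address are placeholders, and a hashed secret stands in for the digital signatures that a real system would use (revealing a secret on a public ledger would not be safe).

```python
import hashlib
from typing import Dict, Optional, Tuple


def _fingerprint(secret: str) -> str:
    """Hash of the owner's secret; a stand-in for verifying a signature."""
    return hashlib.sha256(secret.encode()).hexdigest()


class ToyNameRegistry:
    """Maps names to addresses; only the registered owner may change a record."""

    def __init__(self) -> None:
        # name -> (owner fingerprint, IP address)
        self._records: Dict[str, Tuple[str, str]] = {}

    def register(self, name: str, owner_secret: str, ip: str) -> bool:
        if name in self._records:  # first come, first served
            return False
        self._records[name] = (_fingerprint(owner_secret), ip)
        return True

    def update(self, name: str, owner_secret: str, new_ip: str) -> bool:
        record = self._records.get(name)
        if record is None or record[0] != _fingerprint(owner_secret):
            return False  # unknown name, or caller is not the owner
        self._records[name] = (record[0], new_ip)
        return True

    def resolve(self, name: str) -> Optional[str]:
        record = self._records.get(name)
        return record[1] if record else None


registry = ToyNameRegistry()
registered = registry.register("example.bit", "owner-secret", "203.0.113.5")
hijacked = registry.update("example.bit", "attacker-guess", "198.51.100.9")
resolved = registry.resolve("example.bit")
```

The update attempted with the wrong secret is refused, so the name keeps resolving to the address its owner set.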

Solutions like these follow a path different from Mailpile and ArkOS. Their peer-to-peer architecture holds the potential for greatly improved privacy and security on the Internet. But existing apart from commonly used protocols and standards can also preclude any possibility of widespread adoption. Still, Novak said, the transition to an Internet that relies more extensively on decentralized, P2P technology is “an absolutely essential development,” since it would make many attacks by malicious actors—criminals and intelligence agencies alike—impractical.

Though Snowden has raised the profile of privacy technology, it will be up to engineers and their allies to make that technology viable for the masses. “Decentralization must become a viable alternative,” said Cook, the ArkOS developer, “not just to give options to users that can self-host, but also to put pressure on the political and corporate institutions.”

“Discussions about innovation, resilience, open protocols, data ownership and the numerous surrounding issues,” said Redecentralize’s Bolychevsky, “need to become mainstream if we want the Internet to stay free, democratic, and engaging.”

Illustration by Maximilian Bode.

Friday, January 17. 2014

Via MIT Technology Review

-----

The company behind the Bittorrent protocol is working on software that can replicate most features of file-syncing services without handing your data to cloud servers.

By Tom Simonite on January 17, 2014

Data dump: New software from Bittorrent can synchronize files between computers and mobile devices without ever storing them in a data center like this one.

The debate over how much we should trust cloud companies with our data (see “NSA Spying Is Making Us Less Safe”) was reawakened last year after revelations that the National Security Agency routinely harvests data from Internet companies including Google, Microsoft, Yahoo, and Facebook.

Bittorrent, the company behind the sometimes controversial file-sharing protocol of the same name, is hoping that this debate will drive adoption of its new file-syncing technology this year. Called Bittorrent Sync, it synchronizes folders and files on different computers and mobile devices in a way that’s similar to what services like Dropbox offer, but without ever copying data to a central cloud server.

Cloud-based file-syncing services like Dropbox and Microsoft’s SkyDrive route all data via their own servers and keep a copy of it there. The Bittorrent software instead has devices contact one another directly over the Internet to update files as they are added or changed.

That difference in design means that people using Bittorrent Sync don’t have to worry about whether the cloud company hosting their data is properly securing it against rogue employees or other threats.

Forgoing the cloud also means that data shared using Bittorrent Sync could be harvested by the NSA or another agency only by going directly to the person or company controlling the synced devices. Synced data does travel over the public Internet, where it might be intercepted by a surveillance agency such as the NSA, which is known to collect data directly from the Internet backbone, but it travels in a strongly encrypted form. One drawback of Bittorrent Sync’s design is that two devices must both be online at the same time for them to synchronize, since there’s no intermediary server to act as an always-on source.
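The direct device-to-device design, and its drawback, can be sketched in a few lines of Python. This is an illustrative toy and not Bittorrent Sync's actual protocol: `Device`, `sync`, and the version counter are hypothetical, everything happens in memory, and the encryption applied in transit is left out.

```python
from dataclasses import dataclass, field
from typing import Dict, Tuple


@dataclass
class Device:
    name: str
    online: bool = True
    # path -> (version number, file contents)
    files: Dict[str, Tuple[int, bytes]] = field(default_factory=dict)

    def write(self, path: str, data: bytes, version: int) -> None:
        self.files[path] = (version, data)


def sync(a: Device, b: Device) -> bool:
    """Copy the newer version of each file directly between two devices.

    Returns False without doing anything if either device is offline,
    since there is no intermediary server holding a copy.
    """
    if not (a.online and b.online):
        return False
    for path in set(a.files) | set(b.files):
        va, vb = a.files.get(path), b.files.get(path)
        if va is None or (vb is not None and vb[0] > va[0]):
            a.files[path] = vb  # b has the only or the newer copy
        elif vb is None or va[0] > vb[0]:
            b.files[path] = va  # a has the only or the newer copy
    return True


laptop = Device("laptop")
phone = Device("phone", online=False)
laptop.write("notes.txt", b"first draft", version=1)
offline_attempt = sync(laptop, phone)  # phone is offline, so nothing is copied
phone.online = True
online_attempt = sync(laptop, phone)   # both online, so the file reaches the phone
```

The first call copies nothing because the phone is offline, which mirrors the drawback described above; once both devices are reachable, the file moves between them without ever resting on a third machine.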

Bittorrent Sync is available now as a free download for PCs and mobile devices, but in a beta version that lacks the polish and ease of use of many consumer applications. Bittorrent CEO Eric Klinker says the next version, due this spring, will feature major upgrades to the interface that will make the software more user friendly and in line with its established cloud-based competitors.

Klinker says Bittorrent Sync shows how popular applications of the Internet can be designed in a way that gives people control of their own data, despite prevailing trends. “Pick any app on the Web today, it could be Twitter, e-mail, search, and it has been developed in a very centralized way—those businesses are built around centralizing information on their servers,” he says. “I’m trying to put more power in the hands of the end user and less in the hands of these companies and other centralizing authorities.”

Anonymous data sent back to Bittorrent by its software indicates that more than two million people are already using it each month. Some of those people have found uses that go beyond just managing files. For example, the company says one author in Beijing uses Bittorrent Sync to distribute blog posts on topics considered sensitive by Chinese authorities. And one U.S. programmer built a secure, decentralized messaging system on top of the software.

Klinker says that companies are also starting to use Bittorrent Sync to keep data inside their own systems or to avoid the costs of cloud-based solutions. He plans to eventually make Bittorrent Sync pay for itself by finding a way to sell extra services to corporate users of the software.

Given its emphasis on transparency and data ownership, Bittorrent has been criticized by some for not releasing the source code for its application. Some in the tech- and privacy-savvy crowd attracted by Bittorrent Sync’s decentralized design say this step is necessary if people are to be sure that no privacy-compromising bugs or backdoors are hiding in the software.

Klinker says he understands those concerns and may yet decide to release the source code for the software. “It’s a fair point, and we understand that transparency is good, but it opens up vulnerabilities, too,” he says. For now the company prefers to keep the code private and perform security audits behind closed doors, says Klinker.

Jacob Williams, a digital forensic scientist with CSR Group, says that stance is defensible, although he generally considers open-source programs to be more secure than those that aren’t. “Open source is a double-edged sword,” says Williams, because finding subtly placed vulnerabilities is very challenging, and because open-source projects can be split off into different versions, which dilutes the number of people looking at any one version.

Williams’s own research has shown how Dropbox and similar services could be used to slip malicious software through corporate firewalls because they are configured to use the same route as Web traffic, which usually gets a free pass (see “Dropbox Can Sync Malware”). Bittorrent Sync is configured slightly differently, he says, and so likely doesn’t automatically open a channel to the Internet. However, “Bittorrent Sync will likely require changes to the firewall in any moderately secure network,” he notes.
