Tuesday, November 27. 2012

Via The Architectural League NY

-----

For all the profound changes the Internet has brought about, the network itself–the data centers, exchange points, and wires that make it possible–remains for most users as disembodied and non-material as the data that flows within and across it. In his recent book, Tubes: A Journey to the Center of the Internet, journalist Andrew Blum goes in search of the Internet’s infrastructure to find the physical pieces that make up our digital world. Tubes is a book about real places on the map: their sounds and smells, their storied pasts, their physical details, and the people who work there. In the following conversation with Gregory Wessner, the League’s Special Projects Director, Blum talks about what he saw on his trip to the Internet, what architects need to know about it, and what we can expect to see in the future.

GREGORY WESSNER: So tell me about Tubes.

ANDREW BLUM: Tubes–A Journey to the Center of the Internet is my attempt to visit the physical infrastructure of the Internet. It comes out of about ten years of writing, mostly about architecture and buildings. What I realized around 2008/2009 was that I was supposedly writing about buildings, but I was spending all my time sitting in front of a screen. And at the end of the day, I would get up and look at the smaller screen that I started carrying in my pocket in 2007. There seemed to be this huge disconnect between the physical world that I was supposed to be writing about and the day-to-day life that I was living. Even stranger still was that the virtual world seemed to have no physical embodiment. There was no way to bridge the gap between the world I experienced and the world on the other side of the screen. Until one day my Internet broke and a repairman came to fix it and he followed the wire from behind the couch, down to the basement, and outside to the back of the building.

GW: And you followed him?

AB: And I followed him. And then he saw a squirrel running on the wire and said, “I think a squirrel is chewing on your Internet.” And I thought if a squirrel can chew on this piece of the Internet then there must be other physical pieces of the Internet. So I started to wonder what would happen if you yanked the wire from the wall to see where it would lead.

GW: And so what did you find? What are the physical components of the Internet?

AB: I would say there are three different categories. First, if the Internet is a network of networks, the places where networks meet are the most important and these are called Internet exchange points. The surprising thing is that there are about a dozen buildings in the world that are an order of magnitude more important than the next tier. So while the Internet is theoretically everywhere, it is predominantly concentrated in these dozen buildings to the point that for international communications, just as you would fly through New York or Washington on your way to Europe, your Internet traffic is always going to pass through the same key hubs, which include New York, London, Amsterdam, Frankfurt, Los Angeles, San Francisco, and others. So that’s one category.

The second category is data centers. The generic building type of an Internet exchange point is the data center. It’s a place for equipment and things like that. But I use data centers to mean the place where data is stored, where it is warehoused, and those buildings concentrate around two poles. They stay close to where people are and/or the exchange points. Or at the most interesting scale, they’re in places that are optimized for efficiency, which at the moment means predominantly Oregon, Washington, North Carolina, Sweden, and places like that. These include the big business or big super data centers like Facebook and Google and Microsoft. And then the third category is basically the lines between, the fiber optic cables that connect buildings, the fiber optic cables that connect cities, and the undersea cables that connect continents. Those are the three kinds of categories of physicality of the Internet.

GW: And to what degree did you see any design thinking applied to any of this?

AB: A surprising amount, or at least more than you might think. For example, in the first category of exchange points, one of the most prominent in the U.S., and in some ways a company that’s dominant internationally, is a company called Equinix. From the outside, the typical data center–not all of them, but many of them and certainly the biggest one in Ashburn, Virginia, which has the single greatest concentration of networks in the U.S.–looks like the ultimate bland concrete box.

(Left) An Equinix Data Center in Ashburn, VA

(Right) Data Center Server Room, photo via Shutterstock.

It looks like the back of a Walmart. There’s no sign by the door. They tell you to look for the door with the ashtray next to it to know which one the entrance is. But inside, the buildings are deliberately designed to look the way you would hope the Internet would look. They’re basically hotels and the customers are the network engineers that care for the equipment. So the interiors are meant to appeal–very explicitly meant to appeal–to the kind of sci-fi sensibility of network engineers. So that means a kind of big, red silo when you walk in. It means blue spotlights and very dramatic lighting with a sort of black ceiling like a theater. When you ask why is it dark, why are there blue lights, they pretend it’s for security, but in fact the founder is very explicit about the fact that he knew that this had to appeal to network engineers. They call the aesthetic cyberific.

GW: So a lot of these spaces are actually designed to appeal to human sensibilities and are not purely driven by technical considerations?

AB: Well, there’s certainly a technical element to the way they’re designed and Equinix prides itself on having the best technical design. They have a patent in cable management because one element of the building’s performance is the management of the cables. It’s all about cages, it’s all about the router in a cage or a bank of routers in a cage, and then cables that go up into the ceiling are strung in layers of racks. Four layers of racks, each with a different type of cable: power, fiber, copper, and inner duct, which is like super fiber. The whole building is designed to accommodate these connections between cables and that’s an explicitly designed piece. But in terms of what the building feels like, it’s meant to appeal to network engineers.

GW: Along this whole journey to the Internet, you met a lot of network engineers and technologists and related computer people. Did you meet any designers who had a hand in shaping any of this? Did you search them out?

AB: The one designer I spoke with was the guy who designed Facebook’s data center in Oregon, a guy named Neil Sheehan of Sheehan Partners in Chicago. The building is a beautiful building. It has the feel of a Donald Judd sculpture to it. It comes right up out of the landscape and it’s got these beautifully clean concrete walls and a sort of light well on top with a sophisticated entry court. What’s interesting about it is that Facebook has been very explicit that this building is a showpiece. So if you believe that architecture expresses ideals, and for the most part the Internet has not had an idealistic architectural expression, here Facebook is saying with this building that they want to show the way in which they care about their practices and their infrastructure and their customers.

Facebook’s Prineville Data Center, Oregon | Sheehan Partners, Ltd. photos: Jonnu Singleton

GW: Notwithstanding this showpiece of Facebook’s, what architectural ideals has the Internet been conveying up until now?

AB: At their best, the buildings of the Internet exhibit this sort of incredibly robust functionalism that comes out of telecom, where the buildings are very thoughtfully laid out, are very strong. The way I described them at one point is they’re like very fancy plumbing supply warehouses. There’s clearly thought put into them. They’re not just any building. Yet, they’re very deliberately anonymous and discreet.

GW: Deliberately anonymous?

AB: Because the general trend is that these are unmarked buildings. When you walk by them–and I’m thinking of one in Amsterdam that is essentially the office for one of the biggest global backbones–it’s a building that looks like it could be a mechanic shop. It’s a relatively small, relatively industrial-looking building. But if you look closely, it clearly is an expensive building. It’s clearly robustly built and built for its specific purpose. It has a very defined and incredibly functional expression. But then there is a whole class of buildings that don’t have that. They feel sloppy and fast and like basic suburban commercial/industrial boxes. It’s the same thing with the cable landing stations, the places where the trans-oceanic cables make landfall; the language they speak is of robustness and of stability. They’re clearly there to last. But they’re also so quiet, they try not to look like anything. They are like assertively anonymous buildings.

GW: With this new Facebook data center in Oregon, do you think that the buildings of the Internet will become more representational and less anonymous?

AB: Yes, I do. A good example is a data center in London called Telehouse West. It’s a campus with three buildings, one each from 1991, 2001, and 2011. The first one is next to a relatively significant Grimshaw building, the Financial Times printworks. The first Telehouse West building is very British High-Tech, but it was built not for the Internet but as a back office for banks. The middle one from 2001 is nothing; it is a quiet and horrible building. But the 2011 one has this very sophisticated, pixelated facade. It’s a big windowless box, but the pixelated façade is very much saying, I am a building of technology, I am a giant machine.

Telehouse West | photo: James Bridle

If the whole notion of the cloud is to replace the hard drive in the computer on your desk with a hard drive in a computer far away, as we get more sophisticated about thinking about the consequences of that replacement, you start to think more about what that far-away hard drive computer is. As soon as you start to think more about what it is, then you need pictures. And as soon as it becomes a sort of corporate emblem in some way or another then that architectural expression follows. That’s absolutely what Facebook has done.

GW: What does an architect need to think about when designing these new representational buildings of the Internet?

AB: I hadn’t thought of that. I know the trend in the past has been to express the ethereality of the Internet. It’s been about fluid curves and strange shapes and somehow expressing that it’s virtual. And the future trend I think would be the opposite. It would be about expressing stability. It’s hard to say how deliberate this was but the Facebook building reads like a Greek temple. I mean, it’s got this long low building that sits in the landscape, on the top of this butte. It reads as stable, the concrete walls, the way it’s landscaped. There’s no reference to the notion that this is somehow ethereal. It feels just the opposite. But that makes sense. These buildings are the next evolution. Sort of like a bank used to be, a bank was meant to express stability. Up until now, the trust has been abstract, but I think that the trust will soon be literal and we’ll soon want to see where this stuff is and as a result, we’ll express that sense of stability.

GW: How would you say the Internet is different as an infrastructure than electricity or telephones?

AB: Well, the Internet as a whole has no designer. I mean, physically it’s the ultimate emergent system. That isn’t to say there haven’t been forces or people that helped define certain places. Exchange points, for example, are usually where they are for two reasons. There’s some fact of geography, like 60 Hudson Street in Manhattan is the elbow of Lower Manhattan, which has always been a communications hub. Then there’s almost always a charismatic salesman who convinced the first two networks to come and then everybody else to come on top. That’s recent, that’s in the last 15 years. But there’s always some kind of geographic fact that makes a spot important and then there’s always somebody who made it happen.

GW: So if the Internet is located where it is in part because it’s exploiting some existing infrastructure or economic system or communications history, would it look differently if you were designing it tabula rasa? Is there a theoretical ideal of the physical form of the Internet?

AB: Yeah, there is and it was the phone system. The phone system is a master planned system. But the Internet is the opposite. There is no defined structure to the Internet. It’s entirely the millions of decisions of each autonomous network. And each network is truly autonomous. That’s the fundamental idea. It’s almost a philosophical idea; it cannot be anything but emergent because it’s a network of networks. It’s always about this agreement between two networks that are in part competitors and in part cooperators. So the transition has been from a top-down design system like the telephone, particularly the nationalized systems, to the emergent system of the Internet. And they overlap certainly, but not exactly.

GW: How could cities be designed differently to better accommodate the Internet?

AB: There’s always the difficulty of getting fiber to buildings, but that’s a relatively small issue. The bigger issue for smaller cities is having good local hubs. Big cities don’t have that problem. In some ways their local hubs are too big. They’re too expensive. In New York the main hub buildings cost a fortune…they’re too expensive for people to get into and it pushes other people out. It decreases opportunities in some ways because of that.

But in a second- or third-tier city, cities do better when somebody has managed to create a building that operates as carrier neutral. It’s not owned by a Verizon or a Sprint or a Time Warner and it offers the right environment for networks to connect to each other. So you need that building for that connection to happen. Most cities have developed that as a matter of course, but not all. One example I looked at a little bit is South Bend, Indiana, which is lucky because it’s a railroad city. There happened to be a guy there who basically turned the railroad station into an interconnection facility. He runs it independently. And so South Bend is doing this big municipal fiber network because of the connection to the rest of the Internet that this building offers. On the flip side, one of New York’s major interconnection facilities, 111 Eighth Avenue, which used to be privately owned and had this sort of ecosystem of different networks, is now wholly owned by Google. It is an incredible reversal of the argument that these buildings should be neutral.

GW: To backtrack to an earlier point you made about the Internet concentrating in certain cities, whether it’s at these twelve major exchange points or around the larger data centers, did you then look at how those buildings are in turn affecting the neighborhoods and cities in which they are concentrated?

AB: The best example of that at the moment is Oregon. Oregon’s traditional industry collapsed for the most part and the data centers are in many ways replacing that traditional industry. The data centers are encouraged because they fill a financial vacuum with the collapse of the timber and aluminum industries. They then also benefit from the power supply and the hydroelectric supply that timber and aluminum benefitted from, that infrastructure. And as enough of them settled there, then the air conditioner repair guys, the security system guys, the electricians, all the service people who grow the human capital to serve those centers set up shop there. And then more people come because those services are available. The even more dramatic example is Amsterdam. As a matter of policy in the mid-90s, Amsterdam said it should be a port for the Internet as it’s always been a port for everything else. And so it then said that anyone who digs a trench to lay fiber has to announce their intention. Anyone else who wants to put their fiber in that trench is allowed and they split the cost. As a result there was this incredible abundance of fiber, which allowed Amsterdam as a city to be a key interconnection point for the Internet on a global scale. And that also made Internet access in the Netherlands cheaper and faster than anywhere else, both because there’s so much fiber in the ground and because there are so many international links that wholesale bandwidth is cheaper. So it’s just as with their airport, you can go anywhere from Amsterdam, even though Amsterdam is a city of about 1.2 million people.

GW: Did you look at how the Internet is physically manifesting itself in new cities? Like New Songdo in South Korea or Masdar in Abu Dhabi? Is there a difference in the way the Internet evolves in a new city versus being retroactively installed in an existing city?

AB: I didn’t. But in terms of the fiber in the ground on the city scale, of course there will be a difference. It’s going to be incredibly more efficient when that piece of it is master planned. But then the connection from that city to the rest of the Internet–it either requires somebody making the expense of building a stronger direct connection, which I’m sure is what Songdo did, or it will suffer from being an extra hop away and always having an extra charge of getting back to one of those hubs.

GW: What do you think is the most relevant thing for architects that you learned throughout writing this book?

AB: There’s something very relevant, which is that the hard divide between the physical and virtual worlds that we’ve been operating under and assuming for the last fifteen years can’t possibly exist. To do that is to ignore the physical manifestation of a key part of our experience. And if the connection between that physical manifestation and our experience, the thing we have on our screen, is vague for the moment, as we depend more and more on the stuff far away the connection will become more visible and more legible. An architecture magazine tweeted something like, architecture writer Andrew Blum looks at the cloud, seems a bit far-fetched for architecture. So I tweeted back that architecture is always striving for relevance. You can’t ignore the question of what is the fate of place when all of us sit in front of our screens.

Andrew Blum is a journalist writing about architecture, design, technology, urbanism, art, and travel. He is a contributing editor at Metropolis and Urban Omnibus, and his articles and essays have appeared in Wired, The New York Times, Popular Science and Architectural Record, among many other publications.

This interview took place on June 14, 2012.

Friday, September 14. 2012

Via MIT Technology Review

-----

The Internet is both a utility and a medium. Only one of these things is exciting.

You know what's cool? Getting attention for your startup because this guy played you in a movie.

So Sean "You know what's cool? A billion dollars" Parker just declared the Internet boring! Then Brian Lam said non monsieur, you're the one who is boring.

Attention conservation notice: If you're not asleep or clicking over to Buzzfeed already, the boring one is you.

Parker just launched the last thing anyone should expend resources on given the limited time we have left on this dying planet: a Chatroulette clone called Airtime. Like its predecessor, it's about to fill up with people's genitals, because apparently its genitalia-detection algorithm doesn't work.

About the only thing of value to come out of this bonfire of investor cash is the inevitable pile-on that will follow Parker's comment. So, is the Internet boring? I say yes.

I have the luxury of writing for geeks whose job it is to get excited about complicated systems like the Internet, but let's face it, the more mainstream it becomes, the more the best part of the web, "geek culture," will be divorced from the web itself. In ways large and small, it's already happening. TMZ is the traffic monster, not Slashdot.

As a generator of profoundly new ideas, the web is dead. Reading Techcrunch these days is an exercise in postmodernism. (A startup that gamifies the job search gets $21 million in funding. Really?) All the innovation is in mobile, which is why Facebook is boring but Instagram is cool and therefore worth almost as much as a company that puts robots on top of thousands of pounds of high explosives and successfully flies them to the International Space Station.

As a medium, the web just kind of replaced all the other stuff that came before. If you were excited about magazines in the 90's, well, they still exist! Yeah, they're kind of different, and people who write for them have to put up with trolls in the comments, but otherwise, it's not as different as all the navel-gazing media writers would have you believe.

On the other hand, as a utility, there's hardly been a more exciting time to be on the web. It's the universal glue that binds everything else together, and mastery over its increasingly arcane ways is the ticket to participating in whatever remains of the middle class after we're done socializing all the costs of our Internet-speed financial system. But like I said, this utility function of the Internet is increasingly irrelevant to the ever-larger swath of humanity that relies on it. It's like asking people to get excited about civic infrastructure. (Which is awesome! This book changed my life.)

So, Internet = boring? Yes, absolutely. Now that the novelty has worn off, all we've really got is each other, saying the same ridiculous and mundane things we've always said. It remains the case that hell is other people.

Friday, March 23. 2012

Via Pasta&Vinegar

-----

- reaDIYmate: Wi-Fi paper companions

"reaDIYmates are fun Wi-fi paper companions that move and play sounds depending on what's happening in your digital life. Assemble them in 10 minutes with no tools or glue, then choose what you want them to do through a simple web interface. Link them to your digital life (Gmail, Facebook, Twitter, Foursquare, RSS feeds, SoundCloud, If This Then That, and more to come) or control them remotely in real time from your iPhone."

Monday, January 30. 2012

Via Mammoth

-----

by rholmes

["Interior components of the cooling system" at a Facebook data center in Palo Alto; image via Alexis Madrigal's report for Domus on Facebook's Open Compute Project, which "describes in detail how to construct an energy-efficient data centre".]

“Secret Servers”, an article by James Bridle originally published in issue 099 of Icon magazine, looks at the relationship between architecture and the physical infrastructure of the internet. I found Bridle’s last few paragraphs particularly provocative:

“What is at stake is the way in which architects help to define and shape the image of the network to the general public. Datacenters are the outward embodiment of a huge range of public and private services, from banking to electronic voting, government bureaucracy to social networks. As such, they stand as a new form of civic architecture, at odds with their historical desire for anonymity.

Facebook’s largest facility is its new datacenter in Prineville, Oregon, tapping into the same cheap electricity which powers Google’s project in The Dalles. The social network of more than 600 million users is instantiated as a 307,000 square foot site currently employing over 1,000 construction workers, which will dwindle to just 35 jobs when operational. But in addition to the $110,000 a year Facebook has promised to local civic funds, and a franchise fee for power sold by the city, comes a new definition for datacenters and their workers, articulated by site manager Ken Patchett: “We’re the blue collar guys of the tech industry, and we’re really proud of that. This is a factory. It’s just a different kind of factory than you might be used to. It’s not a sawmill or a plywood mill, but it’s a factory nonetheless.”

This sentiment is echoed in McDonald’s description of “a new age industrial architecture”, of cities re-industrialised rather than trying to become “cultural cities”, a modern Milan emphasising the value of engineering and the craft and “making” inherent in information technology and digital real estate.

The role of the architect in the new digital real estate is to work at different levels, in Macdonald’s words “from planning and building design right down to cultural integration with other activities.” The cloud, the network, the “new heavy industry”, is reshaping the physical landscape, from the reconfiguration of Lower Manhattan to provide low-latency access to the New York Stock Exchange, to the tangles of transatlantic fiber cables coming ashore at Widemouth Bay, an old smuggler’s haunt on the Cornish coast. A formerly stealth sector is coming out into the open, revealing a tension between historical discretion and corporate projection, and bringing with it the opportunity to define a new architectural vocabulary for the digitised world.”

Though Bridle does not make this link explicit in the article, the idea of a potential “new architectural vocabulary” is clearly related to the “New Aesthetic” that Bridle began talking about this past May. (I’ve always liked Matt Berg’s description of it as a “sensor vernacular”, and Robin Sloan’s “digital backwash aesthetic”. I’m not sure either of those capture exactly what Bridle’s been talking about — more like pieces of it — but they all dance around the same set of things, or at least similar sets.) Here’s Bridle’s original description, pinched together:

For so long we’ve stared up at space in wonder, but with cheap satellite imagery and cameras on kites and RC helicopters, we’re looking at the ground with new eyes, to see structures and infrastructures.

The map fragments, visible at different resolutions, accepting of differing hierarchies of objects.

Views of the landscape are superimposed on one another. Time itself dilates.

Representations of people and of technology begin to break down, to come apart not at the seams, but at the pixels.

The rough, pixelated, low-resolution edges of the screen are becoming in the world.

And when that — a new aesthetic vocabulary — gets linked to a “re-industrialization”, pulling together aesthetics, culture, economics, and politics, you’ve got a pretty significant project. I’d like to talk about this at more length later, but for now I will just quote from Dan Hill’s fantastic 14 Cities project. (Independent of the concerns in this post, the whole project is worth a read.) This is the fourth of the fourteen fictional future cities Hill describes, “Re-industrial City”:

“The advances in various light manufacturing technologies throughout the early part of the 21st century — rapid prototyping, 3D printing and various local clean energy sources — enabled a return of industry to the city. Noise, pollution and other externalities were so low as to be insignificant, and allied to the nascent interest in digitally-enabled craft at the turn of the century, by the early 2020s suburbs had become light industrial zones once again.

Waterloo, Alexandria and the Inner West of Sydney through to Pyrmont once again became a thriving manufacturing centre, albeit on a domestic scale, as people were able to ‘micro-manufacture’ products from their backyard, or send designs to mass-manufacture hubs supported by logistics networks of electric delivery vans and trains. Melbourne had led the way through its nurturing of production in the creative industries and its existing built fabric.

In an ironic twist, former warehouses and factories are being partially converted from apartments back into warehouses and factories. Yet the domestic scale of the technologies means they can coexist with living spaces, actually suggesting a return to the craftsman’s studio model of the Middle Ages. The ‘faber’ movement — faber, to make — spread through most Australian cities, with the ‘re-industrial city’ as the result, a genuinely mixed-use productive place — with an identity.”

[For more on the New Aesthetic, read Rob Walker's recent interview with James Bridle at Design Observer. It's also well worth checking out the essay in Domus by Alexis Madrigal that the image at top is taken from.]

Friday, November 04. 2011

Via Mammoth

-----

["Bundled, Buried, and Behind Closed Doors", a documentary short by Ben Mendelsohn and Alex Cholas-Wood, looks at one of our favorite things -- the physical infrastructure of the internet -- and, in particular, the telco hotel at 60 Hudson Street. It's particularly fascinating to see how 60 Hudson Street exhibits the "tendency of communications infrastructure to retrofit pre-existing networks to suit the needs of new technologies": the building became a modern internet hub primarily because it was already a hub in earlier communications networks, permeated by pneumatic tubes, telegraph cables, and telephone lines, and thus easily suited to the running of fiber-optic cables. (This is important because it demonstrates the relative fixity of infrastructural geographies -- like the pattern of the cities they are embedded in, the positions of infrastructures tend to endure even as the infrastructures themselves decay and are replaced.)]

Tuesday, October 04. 2011

-----

by Zak Stone

Before there was MySpace, there was GeoCities, the vast metropolis of glitchy amateur websites, pulsating with gif animations, that were the hub of digital culture for countless late-'90s teens. If you haven't found yourself in some cobweb-coated corner of the internet in a while and landed on one of their sites, that's because Yahoo shut down U.S. GeoCities two years ago, just 10 years after acquiring it for $3.57 billion at the height of the dot-com boom.

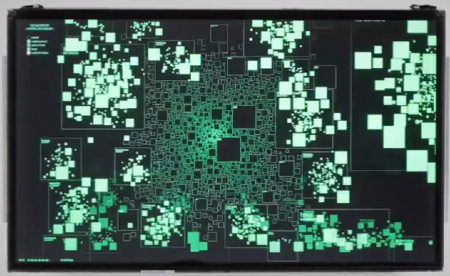

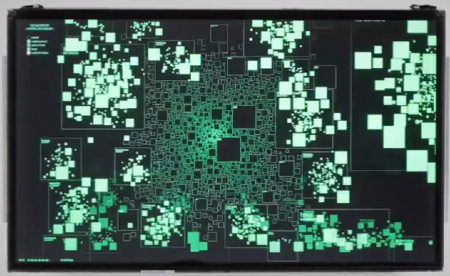

Pained by the potential loss of the record of 35 million participants' personal expression, the Internet Archive Team launched a project to save the GeoCities data for posterity, releasing a 641-GB torrent file worth of GeoCities data on the one-year anniversary of its closing last October. Now this year, Dutch information designer Richard Vijgen has plotted that data along a scrollable world map of all those ancient GeoCities. He's calling it The Deleted City, "a digital archaeology of the world wide web as it exploded into the 21st century." It lives as an interactive touchscreen data visualization.

The project gives a visual representation to the change in thinking and living through the internet that we've undergone in the past decade and a half. Before the internet was understood as a (social) network, GeoCities conceived of it as a city, where "homesteaders" could build on a digital parcel, grouped in "neighborhoods" based on topic. (Celebrity-oriented sites were grouped together in "Hollywood," for example.) The Deleted City replicates this logic by organizing the old websites along an urban grid. Thematic "neighborhoods" that had more content associated appear bigger. As you wander the city, you can zoom in to get more detail, and eventually locate individual HTML sites.

While GeoCities lives on in the popular imagination as the punchline of design and tech jokes (there's even a website to paint over slick 21st-century design with a GeoCities patina), The Deleted City draws attention to the way that it helped many people pioneer the web, and captures a slice of web history in a dynamic and elegant way.

Via Fast Company's Co.Design

Tuesday, July 19. 2011

Via Numerama

-----

By Guillaume Champeau - published Monday, July 18, 2011 at 10:39 - posted in Telecoms

The top telecom executives convened by Brussels to work out how to finance very-high-speed broadband in the European Union in the short term have, unsurprisingly, produced a series of proposals that bury net neutrality in favor of access to several internets, more or less rich depending on the subscription paid. The proposals push through a door already opened by the European Commission.

Remember. It was last March. Without much fanfare, the European Commission gathered nearly forty top telecom executives in Brussels: Steve Jobs (Apple), Xavier Niel (Free), Stéphane Richard (Orange), Jean-Philippe Courtois (Microsoft), Jean-Bernard Lévy (Vivendi), Stephen Elop (Nokia), and others.

The purpose of the meeting was to ask industry leaders how, in their view, to "best secure the very high levels of private-sector investment needed to deploy next-generation broadband networks and sustain the growth of the internet". The Commission wants to make achievable the target set by Europe's Digital Agenda, which calls for all Europeans to have Internet access at a minimum of 30 Mbps by 2020, and at least half of them at 100 Mbps.

To draft the proposals, a steering group had been appointed, made up of Jean-Bernard Lévy of Vivendi, Ben Verwaayen of Alcatel-Lucent, and René Obermann of Deutsche Telekom. "Proof that, from the very composition of the group, there is a desire to strike a balance between financing networks and financing content, which is never a very good sign for net neutrality", we predicted at the time.

The outcome is even worse than we feared back then, and it confirms the trend expressed by the European Commission last month, when it said it wanted to favor the free market over the defense of net neutrality.

11 proposals to bury net neutrality

At a second meeting on July 13, the three of them did indeed deliver a series of 11 proposals that are untenable for supporters of net neutrality. Seizing on the European target as an opportunity to claim that very-high-speed broadband cannot be rolled out in the short term on the same basis as before, the group concludes that Europe "must encourage differentiation in traffic management to promote innovation and new services, and to meet demand for different levels of quality". The idea, then, is to charge more to those who want unthrottled access to certain services that require more bandwidth, or "lower latency, which is crucial in video games", the head of Vivendi explained to La Tribune.

The group also clearly envisages letting service providers buy privileged access to Internet subscribers, so that their service is faster than that of competitors who do not pay the tithe. "Harnessing the potential of two-sided markets will bring more innovation, more efficiency, and faster deployment of next-generation networks, to the benefit of consumers and the creative industries", the working group believes it can claim.

It also justifies the absence of representatives of citizen and consumer organizations among the forty or so executives consulted by Brussels: "The long-term interests of consumers coincide with the promotion of innovation and investment". Consumers need only put up with the death of net neutrality; in the end it is in their interest, the telecom bosses assure us.

In La Tribune, Jean-Bernard Lévy says that the July 13 meeting went "tremendously better than we expected", and that it revealed "a remarkable and unexpected degree of consensus among these players from the entire value chain: operators, manufacturers, aggregators, channel publishers, etc". Forgetting, in passing, that Internet users are the foremost players in that value chain. Not only because they pay for their Internet access, but also because they are today, by a very wide margin, the leading producers of the content that circulates on it.

Personal comment:

This can't be good... (Thanks Nicolas for the link)

Thursday, July 07. 2011

-----

Is there such a thing as DIY internet? An amazing open-source project in Afghanistan proves you don’t need millions to get connected.

While visiting family last week, the topic of conversation turned to the internet, net neutrality, and both corporate and government attempts to police the online world. A family member remarked that if they wanted to, the U.S. government could simply turn off the internet and the entire world would be screwed.

Having read this inspiring article by Douglas Rushkoff on Shareable.net, I surprised the room by disagreeing. I said that we didn’t need the corporate built internet, and that if we had to, the people could build their own. Of course, not being that technically minded, I couldn’t offer a concrete idea of how this could be achieved. Until now.

A recent Fast Company article shines a spotlight on the Afghan city of Jalalabad, which has a high-speed Internet network whose main components are built out of trash found locally. Aid workers, mostly from the United States, are using the provincial city in Afghanistan’s far east as a pilot site for a project called FabFi.

FabFi is an open-source, FabLab-grown system using common building materials and off-the-shelf electronics to transmit wireless ethernet signals across distances of up to several miles. With FabFi, communities can build their own wireless networks to gain high-speed internet connectivity, thus enabling them to access online educational, medical, and other resources.

Residents who desire an internet connection can build a FabFi node out of approximately $60 worth of everyday items such as boards, wires, plastic tubs, and cans that will serve a whole community at once.

Jalalabad’s longest link is currently 2.41 miles, between the FabLab and the water tower at the public hospital in Jalalabad, transmitting with a real throughput of 11.5Mbps (compared to 22Mbps ideal-case for a standards-compliant off-the-shelf 802.11g router transmitting at a distance of only a few feet). The system works consistently through heavy rain, smog and a couple of good-sized trees.

With millions of people still living without access to high-speed internet, including much of rural America, an open-source concept like FabFi could have profound ramifications on education and political progress.

Because FabFi is fundamentally a technological and sociological research endeavor, it is constantly growing and changing. Over the coming months expect to see infrastructure changes that increase stability and decrease cost, and added features such as meshing and bandwidth aggregation to support a growing user base.

Beyond network improvements, there are plans to leverage the provided connectivity to build online communities and locally hosted resources for users, alongside MIT OpenCourseWare, making the system much more valuable than the sum of its uplink bandwidth. Follow the developments on the FabFi Blog.

Friday, May 27. 2011

Via OWNI.eu

-----

by Yann Leroux

We, the digiboriginals, perceive a state of hostility towards cyberspace that reached a climax at the eG8. The neutrality on which our values are grounded has been strongly challenged under a false pretext. We have reached the point where the Internet must be protected from governments.

We have shaped a province far removed from culture into a vibrant city, whose wealth benefits all. We built cyberspace. We have built it bit after bit, manifesto after manifesto, lolcat after lolcat. There, we have our cathedrals and our bazaars. We have invented worlds, networks of social and informational systems that are second to none.

Cyberspace is not a new space to conquer. It does not exist to be colonized or civilized.

Cyberspace is a space of civilization, and has been since its founding. This is an undeniable fact, because it is built and inhabited by men and women.

The Internet does not discriminate between Joe and Mark. It is not an egalitarian space. It breaks through barriers, yet these barriers were socially constructed. They are not in the network’s architecture.

We oppose cyberspace changing into a space of surveillance.

We oppose states abandoning the protections they owe their citizens.

We oppose states violating the right to privacy in cyberspace.

We oppose modifying cyberspace’s architecture.

We oppose outdated copyright models becoming the norm for everything we share.

At a time when the end of humanity becomes a tangible hypothesis, more than ever we need a common space where we can gather and solve the issues at hand. Cyberspace makes Tahrir squares and Puerta del Sol possible.

We cannot afford to lose our future – it is time to defend it.

Thursday, March 31. 2011

Via MIT Technology Review

-----

By Kate Greene

The Open Networking Foundation wants to let programmers take control of computer networks.

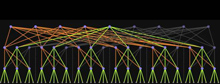

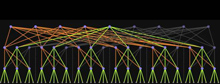

Off switch: This visualization shows network traffic when traffic loads are low and switches (the large dots) can be turned off to save power.

Credit: OpenFlow Project

Most data networks could be faster, more energy efficient, and more secure. But network hardware—switches, routers, and other devices—is essentially locked down, meaning network operators can't change the way they function. Software called OpenFlow, developed at Stanford University and the University of California, Berkeley, has opened some network hardware, allowing researchers to reprogram devices to perform new tricks.

Now 23 companies, including Google, Facebook, Cisco, and Verizon, have formed the Open Networking Foundation (ONF) with the intention of making open and programmable networks mainstream. The foundation aims to put OpenFlow and similar software into more hardware, establish standards that let different devices communicate, and let programmers write software for networks as they would for computers or smart phones.

"I think this is a true opportunity to take the Internet to a new level where applications are connected directly to the network," says Paul McNab, vice president of data center switching and services at Cisco.

Computer networks may not be as tangible as phones or computers, but they're crucial: cable television, Wi-Fi, mobile phones, Internet hosting, Web search, corporate e-mail, and banking all rely on the smooth operation of such networks. Applications that run on the type of programmable networks that the ONF envisions could stream HD video more smoothly, provide more reliable cellular service, reduce energy consumption in data centers, or even remotely clean computers of viruses.

The problem with today's networks, explains Nick McKeown, a professor of electrical engineering and computer sciences at Stanford who helped develop OpenFlow, is that data flows through them inefficiently. As data travels through a standard network, its path is determined by the switches it passes through, says McKeown. "It's a little bit like a navigation system [in a car] trying to figure out what the map looks like at the same time it's trying to find you directions," McKeown explains.

With a programmable network, he says, software can collect information about the network as a whole, so data travels more efficiently. A more complete view of a network, explains Scott Shenker, professor of electrical engineering and computer science at the University of California, Berkeley, is a product of two things: the first is OpenFlow firmware (software embedded in hardware) that taps into the switches and routers to read the state of the hardware and to direct traffic; the second is a network operating system that creates a network map and chooses the most efficient route.

OpenFlow and a network operating system "provide a consistent view of the network and do that at once for many applications," says McKeown. "It becomes trivial to find new paths."
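
As a rough illustration of why a whole-network map makes finding new paths easy, here is a minimal Python sketch. It is not OpenFlow code and uses no OpenFlow API: the function `shortest_path`, the switch names `s1` to `s4`, and the link costs are all hypothetical, and Dijkstra's algorithm is simply one common way to pick a cheapest route, not something the article names.

```python
import heapq


def shortest_path(links, src, dst):
    """Return the cheapest path from src to dst, or None if unreachable.

    `links` stands in for a controller's global view of the network:
    a dict mapping (switch_a, switch_b) to a link cost. All names here
    are hypothetical.
    """
    graph = {}
    for (a, b), cost in links.items():
        graph.setdefault(a, []).append((b, cost))
        graph.setdefault(b, []).append((a, cost))  # links are bidirectional

    best = {src: 0}      # cheapest known cost to each switch
    prev = {}            # predecessor on the cheapest known path
    queue = [(0, src)]
    while queue:
        dist, node = heapq.heappop(queue)
        if node == dst:
            break
        if dist > best.get(node, float("inf")):
            continue     # stale queue entry
        for nxt, cost in graph.get(node, []):
            cand = dist + cost
            if cand < best.get(nxt, float("inf")):
                best[nxt] = cand
                prev[nxt] = node
                heapq.heappush(queue, (cand, nxt))

    if dst not in best:
        return None
    path = [dst]
    while path[-1] != src:
        path.append(prev[path[-1]])
    return path[::-1]


# Hypothetical four-switch network with two routes from s1 to s4.
links = {("s1", "s2"): 1, ("s2", "s4"): 1, ("s1", "s3"): 2, ("s3", "s4"): 2}
primary = shortest_path(links, "s1", "s4")

# Simulate the s2-s4 link failing: drop it from the map and recompute.
remaining = {k: v for k, v in links.items() if k != ("s2", "s4")}
backup = shortest_path(remaining, "s1", "s4")
```

Because the map lives in one place, rerouting around a failed link is just a recomputation over the updated map rather than a negotiation among switches.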

Some OpenFlow research projects require just a couple hundred lines of code to completely change the data traffic patterns in a network—with dramatic results. In one project, McKeown says, researchers reduced a data center's energy consumption by 60 percent simply by rerouting network traffic within the center and turning off switches when they weren't in use.
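
The energy-saving idea can be sketched in a few lines of Python, under loud assumptions: the helper `idle_switches`, the five switch names, and the two traffic patterns are invented for illustration, and the sketch makes no attempt to reproduce the 60 percent figure or the researchers' actual method. It only shows that consolidating flows onto shared paths leaves more switches carrying no traffic.

```python
def idle_switches(all_switches, active_paths):
    """Return the switches that carry no traffic and could be powered down.

    `active_paths` is a list of paths, each a list of switch names that
    one flow traverses. All names are hypothetical.
    """
    in_use = {switch for path in active_paths for switch in path}
    return sorted(set(all_switches) - in_use)


switches = ["s1", "s2", "s3", "s4", "s5"]

# Two flows spread across different middle switches.
spread_out = [["s1", "s2", "s5"], ["s1", "s3", "s5"]]

# The same two flows rerouted onto one shared path.
consolidated = [["s1", "s2", "s5"], ["s1", "s2", "s5"]]

idle_before = idle_switches(switches, spread_out)
idle_after = idle_switches(switches, consolidated)
```

In this toy case, rerouting raises the number of idle switches from one to two; a real controller would also have to check that the shared path has enough capacity for the combined traffic.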

This sort of research has caught the attention of big companies, and is one reason why the ONF was formed. Google is interested in speeding up the networks that connect its data centers. These data centers generally communicate through specified paths, but if a route fails, traffic needs to be rerouted, says Urs Hoelzle, senior vice president of operations at Google. Using standard routing instructions, this process can take 20 minutes. If Google had more control over how the data flowed, it could reroute within seconds, Hoelzle says.

Cisco, a company that builds the hardware that routes much of the data on the Internet, sees ONF as a way to help customers build better Internet services. Facebook, for example, relies on Cisco hardware to serve up status updates, messages, pictures, and video to hundreds of millions of people worldwide. "You can imagine the flood of data," says McNab.

Future ONF standards could let people program a network to get different kinds of performance when needed, says McNab. Building that sort of functionality into Cisco hardware could make it more appealing to Internet services that need to be fast.

The first goal of the ONF is to take over the specifications of OpenFlow, says McKeown. As a research project, OpenFlow has found success on more than a dozen campuses, but it needs to be modified so it can work well at various companies. The next step is to develop easy-to-use interfaces that let people program networks just as they would program a computer or smart phone. "This is a very big step for the ONF," he says, because it could increase the adoption of standards and speed up innovation for network applications. He says the process could take two years.

In the meantime, companies including Google, Cisco, and others will test open networking protocols on their internal networks—in essence, they'll be testing out a completely new kind of Internet.

Copyright Technology Review 2011.
