Wednesday, October 21. 2015

Create a Self-Destructing Website With This Open Source Code | #web #design

Note: suddenly speaking about web design, wouldn't it be time to start doing some interaction design on the web again? Aren't we in need of some "net art" approach, some weirder propositions than the too slick "responsive design" of a predictable "user-centered" or even "experience" design dogma? These kinds of complex web/interaction experiences have almost all vanished (remember Jodi?), to the point that there is now a vast experimental void for designers to tap into again!

Well, after the site that can only be browsed by one person at a time (with a poor visual design indeed), here comes the one that self-destructs. Could be a start... Btw, thinking about files, sites, contents, etc. that would self-destruct would probably help save lots of energy in data storage, hard drives and datacenters of all sorts, where these data sit like zombies.

Via GOOD

-----

By Isis Madrid

Former head of product at Flickr and Bitly, Matt Rothenberg recently caused an internet hubbub with his Unindexed project. The communal website continuously searched for itself on Google for 22 days, at which point, upon finding itself, it spontaneously combusted.

In addition to chasing its own tail on Google, Unindexed provided a platform for visitors to leave comments and encourage one another to spread the word about the website. According to Rothenberg, knowledge of the website was primarily passed on in the physical world via word of mouth.

“Part of the goal with the project was to create a sense of unease with the participants—if they liked it, they could and should share it with others, so that the conversation on the site could grow,” Rothenberg told Motherboard. “But by doing so they were potentially contributing to its demise via indexing, as the more the URL was out there, the faster Google would find it.”

When the website finally found itself on Google, the platform disappeared and this message replaced it:

“HTTP/1.1 410 Gone This web site is no longer here. It was automatically and permanently deleted after being indexed by Google. Prior to its deletion on Tue Feb 24 2015 21:01:14 GMT+0000 (UTC) it was active for 22 days and viewed 346 times. 31 of those visitors had added to the conversation.”
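
The mechanism described above (keep searching for your own URL, then answer every request with 410 Gone once you find it) can be sketched in a few lines. This is not Rothenberg's code, only a minimal illustration under assumptions: `SiteState`, `is_indexed`, `check_and_destroy` and `respond` are hypothetical names, and the way search results are fetched is deliberately left out.

```python
from dataclasses import dataclass
from datetime import datetime, timezone
from typing import List, Optional, Tuple


@dataclass
class SiteState:
    """Hypothetical in-memory state of the site (not taken from Unindexed)."""
    url: str
    created: datetime
    views: int = 0
    deleted_at: Optional[datetime] = None


def is_indexed(url: str, result_urls: List[str]) -> bool:
    """True if the site's own URL appears among search-result URLs.

    Fetching the results (search API, scraping, ...) is deliberately left out.
    """
    own = url.rstrip("/")
    return any(r.rstrip("/") == own for r in result_urls)


def check_and_destroy(state: SiteState, result_urls: List[str], now: datetime) -> None:
    """Mark the site as deleted the first time it finds itself in the results."""
    if state.deleted_at is None and is_indexed(state.url, result_urls):
        state.deleted_at = now  # a real site would also erase its content here


def respond(state: SiteState) -> Tuple[int, str]:
    """Return (HTTP status, body): 200 while alive, 410 Gone afterwards."""
    if state.deleted_at is not None:
        days = (state.deleted_at - state.created).days
        return 410, (
            "This web site is no longer here. It was active for "
            f"{days} days and viewed {state.views} times."
        )
    state.views += 1
    return 200, "Welcome. Share this URL carefully."


if __name__ == "__main__":
    site = SiteState("https://example.org/", datetime(2015, 2, 2, tzinfo=timezone.utc))
    print(respond(site))
    check_and_destroy(site, ["https://example.org"], datetime(2015, 2, 24, tzinfo=timezone.utc))
    print(respond(site))
```

The choice of status code matters here: 410 tells crawlers the page is gone for good, whereas a 404 leaves open that it might come back.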

If you are interested in creating a similar self-destructing site, feel free to start with Rothenberg’s open source code.

Friday, October 02. 2015

I&IC at Renewable Futures Conference in Riga | #thinking #speculation #futures

Via iiclouds.org

-----

The design research Inhabiting and Interfacing the Cloud(s) will be presented next week during the peer-reviewed Renewable Futures Conference in Riga (Latvia), the first edition of a series that promises to scout for radical approaches.

Christophe Guignard will introduce the participants to the stakes and the progress of our ongoing experimental work. There will be high-profile and inspiring speakers such as Lev Manovich, John Thackara, Andreas Broeckmann, etc.

Christophe Guignard will post a short “follow-up” about the conference on this blog once he is back from Riga.

Friday, August 14. 2015

Commune Revisited | #commune #ideals #magazine

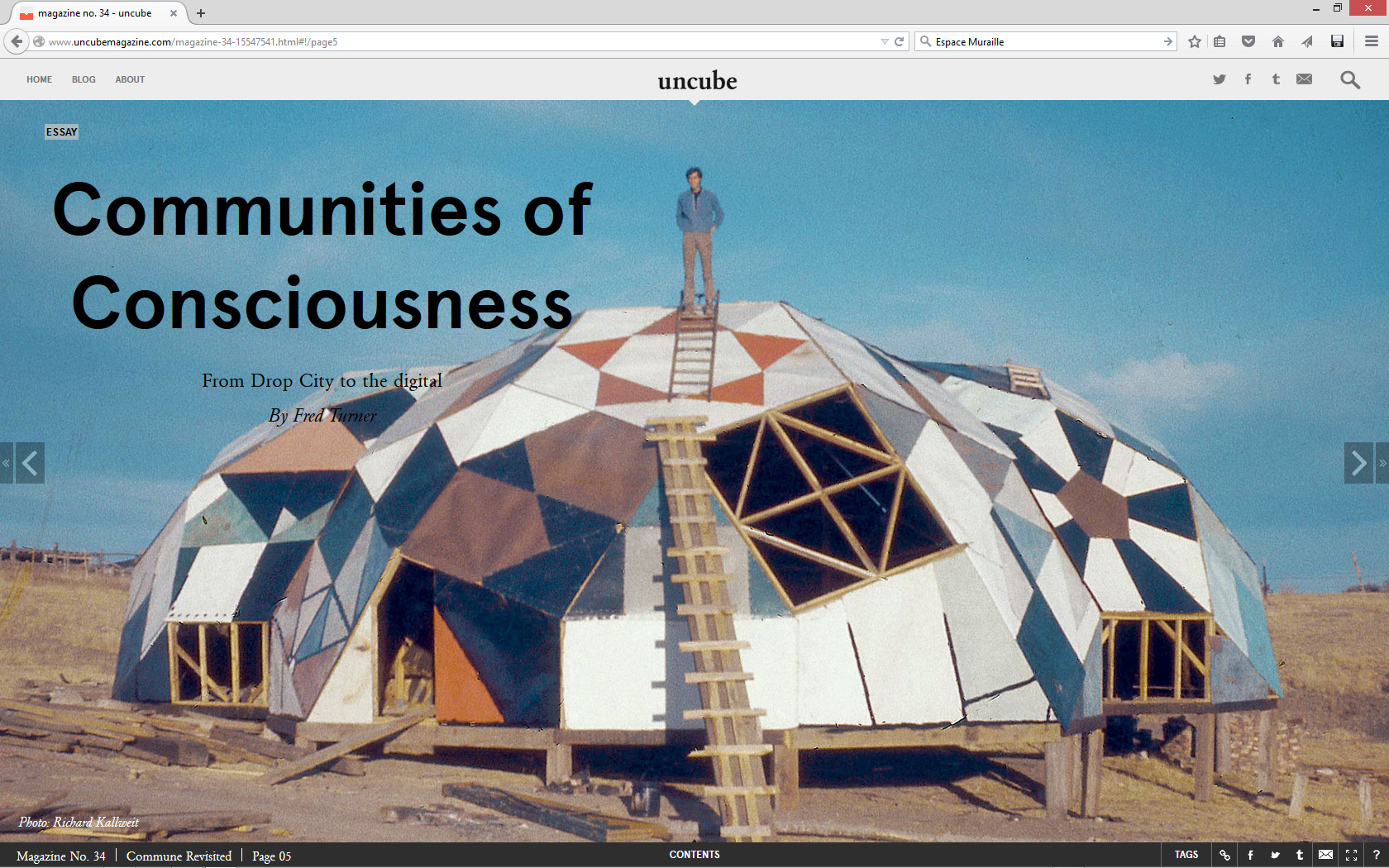

Note: I have been interested in the idea of the commune for some time now. I've been digging into old stories, like the ones of the well-named Haight-Ashbury Diggers, or the Droppers, in connection to systems theory, cybernetics and information theory, and then of course to THE Personal Computer as "small scale technology", as well as to "the biggest commune of all: the internet" (F. Turner).

The idealistic social flatness of the communes, anarchic yet with inevitable emerging order, their "counter" approach to western social organization, but also the fact that in the end the 1960s initiatives seemed to have "failed" for different reasons, interest me for further works. These "diggings" are also somehow connected to an ongoing project and tool we recently published online, a "data commune": Datadroppers (even though it is just a shared tool).

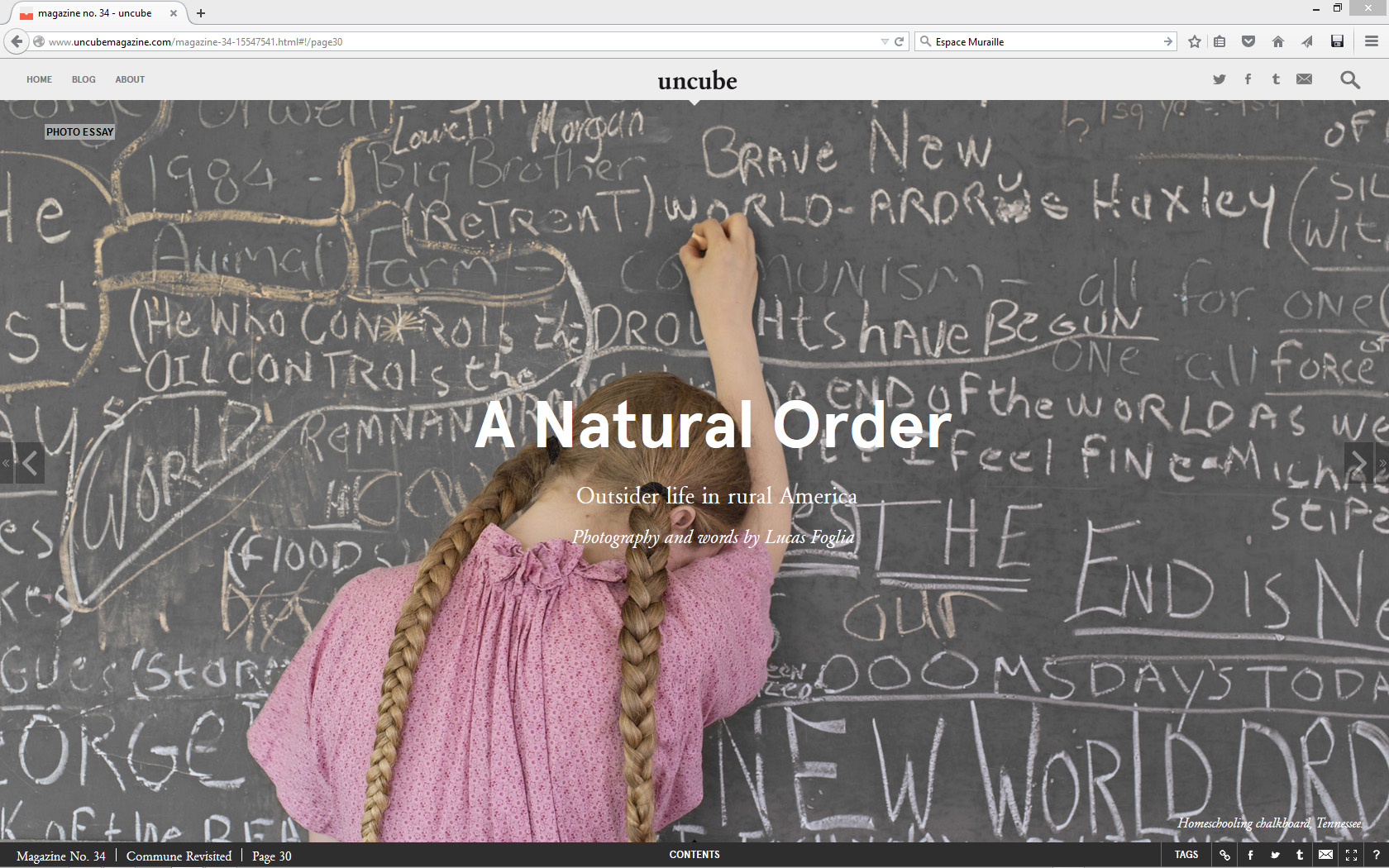

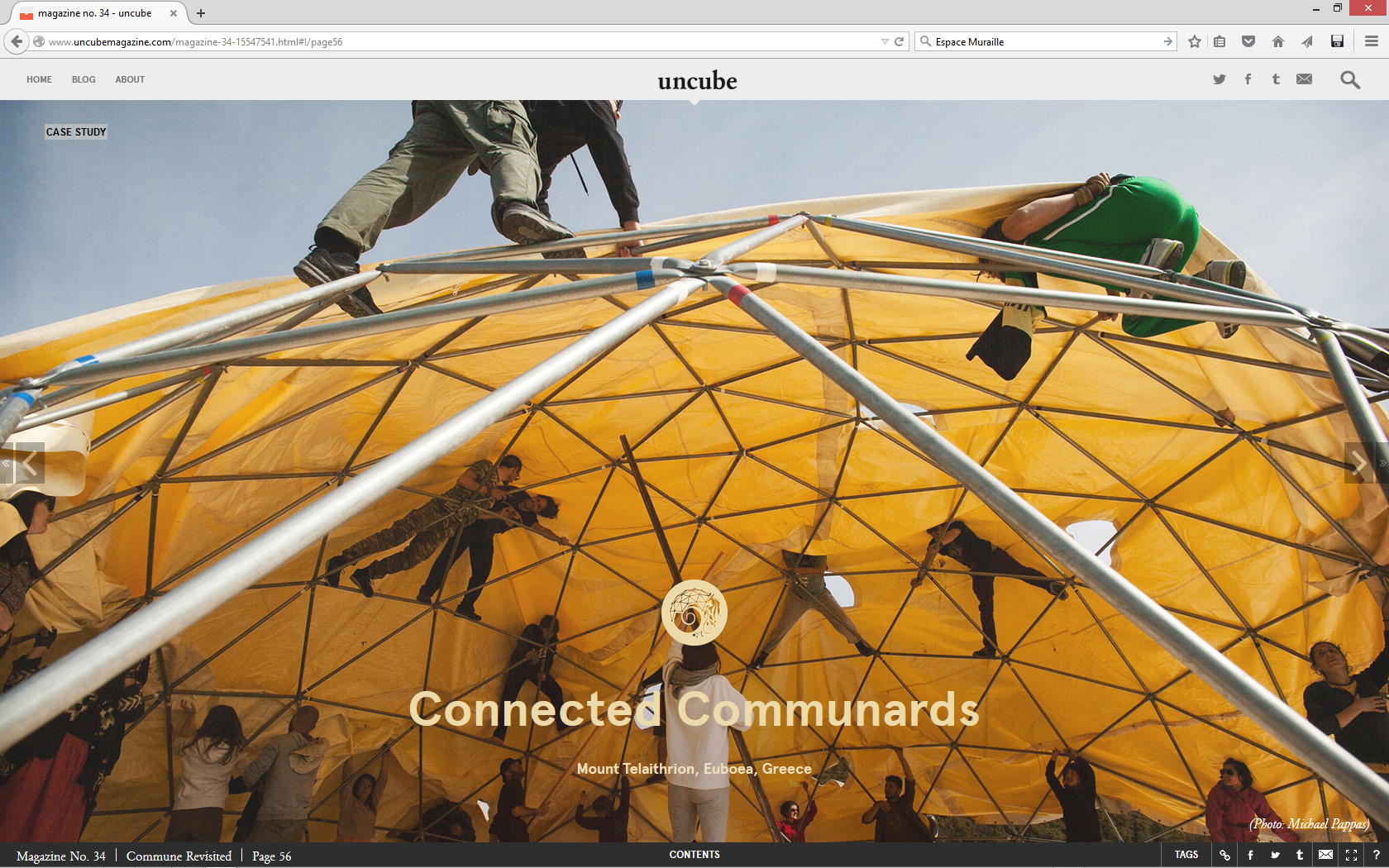

Following this interest, I came across the latest online publication by uncube (Issue #34), Commune Revisited, which takes a historical approach to old experiments (like the one of Drop City) and also looks at more recent ones, up to the "gated community"... The idea of the editors being to investigate the diversity of the concept. It brings an interesting contemporary twist and understanding to the general idea... In a time when we are totally fed up with neoliberalism.

Via Uncube

-----

"One year after our Urban Commons issue, we're returning to the idea of the communal, this time investigating just how diversely the concept of "commune" can be interpreted - and not always with entirely benevolent intentions or successful results.

Whether trying to escape a broken economy or an oppressive system via new forms of existence or looking to break the system itself via anarchic methodologies, forming a commune traditionally involves segregation or stepping "outside" society.

But no matter how off-grid and back-to-nature the contemporary communities that we investigate here are, it turns out they are far more connected than we think.

Turn on, tune out, drop in.

The editors"

Sunday, February 01. 2015

Deterritorialized House - Inhabiting the data center, 2014 sketches... | #data #decenter #housing

By fabric | ch

-----

Alongside the different projects we are undertaking at fabric | ch, we continue to work on self-initiated research and experiments (slowly, way too slowly... Time is of course missing). Deterritorialized House is one of them, introduced below.

Some of these experimental works concern the mutating "home" program (considered as "inhabited housing"), that is obviously an historical one for architecture but that is also rapidly changing "(...) under pressure of multiple forces --financial, environmental, technological, geopolitical. What we used to call home may not even exist anymore, having transmuted into a financial commodity measured in sqm (square meters)", following Joseph Grima's statement in sqm. the quantified home, "Home is the answer, but what is the question?"

In a different line of work, we are looking to build physical materializations in the form of small pavilions for projects such as Satellite Daylight, 46°28'N, while other research is about functions: based on live data feeds, how would you inhabit a transformed --almost geo-engineered-- atmospheric/environmental condition? Like the one of Deterritorialized Living (night doesn't exist in this fictional climate that consists of only one day, no years, no months, no seasons), the physiological environment of I-Weather, or the one of Perpetual Tropical Sunshine, etc.?

We are therefore very interested in exploring further the ways you would inhabit such singular and "creolized" environments composed of combined dimensions, like some of the ones we've designed for installations, while considering these environments as proto-architecture (architectured/mediated atmospheres) and as conditions to inhabit, looking for their own logic.

We are looking forward to publishing the results of these different projects during the year. Some as early sketches, some as results, or both. I publish below early sketches of such an experiment, Deterritorialized House, linked to the "home/house" line of research. It is about symbiotically inhabiting the data center... Whether we like it or not, we surely de facto inhabit it, as it is a globally spread program and infrastructure that surrounds us, but we are thinking here of physically inhabiting it, possibly making it a "home", sharing it with the machines...

What happens when you combine a fully deterritorialized program (super or hyper-modern, "non lieu", ...) with the one of the home? What might it say or comment about contemporary living? Could the symbiotic relation take advantage of the heat the machines are generating --directly connected to the amount of processing power used--, the quality of the air, the fact that the center must be up and running, possibly lit 24/7, etc.?

As we'll run a workshop next week in the context of another research project (Inhabiting and Interfacing the Cloud(s), an academic program between ECAL, HEAD, EPFL-ECAL Lab and EPFL in this case) linked to this idea of questioning the data center --its paradoxically centralized program, its location, its size, its functionalism, etc.--, it might be useful to publish these drawings, even though they are at an early stage (they date back to early 2014; the project has gone back and forth since then and we are still working on it).

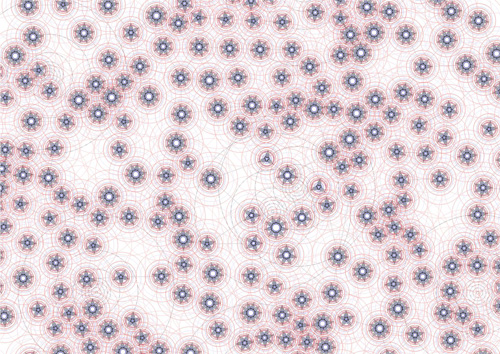

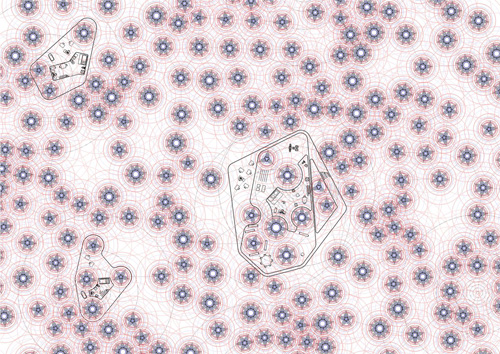

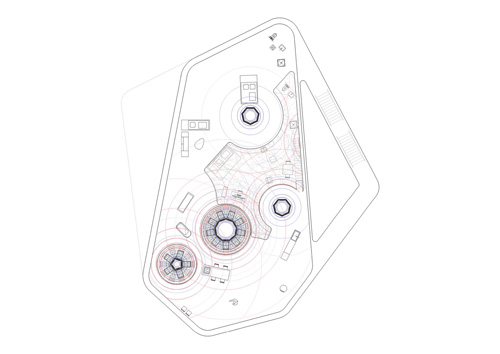

1) The data center level (level -1 or level +1) serves as a speculative territory and environment to inhabit (each circle in this drawing is a fresh air pipe surrounded by a certain number of computer cabinets --between 3 and 9).

A potential and idealistic new "infinite monument" (global)? It still needs to be decided if it should be underground, cut off from natural light, or if it should be fragmented into many pieces and located at altitude (likely, according to our other scenarios that are looking for decentralization and collaboration), etc. Both?

Fresh air comes from the outside through the pipes surrounded by the servers and their cabinets (the incoming air could be an underground cooled one, or the one that can be found at altitude, in the Swiss Alps --triggering scenarios like cities in the mountains? mountain data farming? Likely too, as we are looking to bring data centers back into small or big urban environments). The computing and data storage units are organized like a "landscape", trying to trigger different atmospheric qualities (some areas are hotter than others with the amount of hot air coming out of the data servers' cabinets, some areas are charged in positive ions, air connectivity is obviously everywhere, etc.)

Artificial lighting follows a similar organization as the servers' cabinets need to be well lit. Therefore a light pattern emerges as well in the data center level. Running 24/7, with the need to be always lit, the data center uses a very specific programmed lighting system: Deterritorialized Daylight linked to global online data flows.

2) Linked to the special atmospheric conditions found in this "geo-data engineered atmosphere" (the one of the data center itself, level -1 or +1), freely organized functions can be located according to their best matching location. There are no thick walls as the "cabinet islands" act as semi-open partitions.

A program starts to appear that combines the needs of a data center and those of a small housing program which is immersed into this "climate" (dense connectivity, always artificially lit, 24°C permanent heat). "Houses" start to appear as "plugs" into a larger data center.

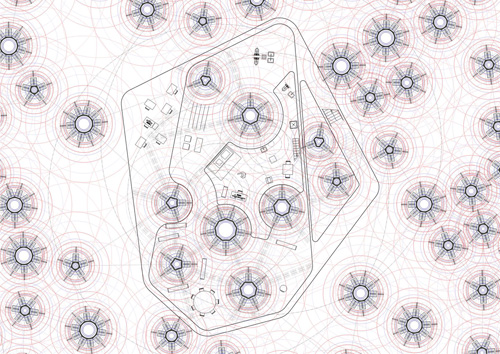

3) A detailed view (data center, level -1 or +1) of the "housing plug" that combines programs. At this level, an office-administration unit for a small data center starts to emerge, combined with a kind of "small office - home office" that is immersed into this perpetually lit data space. This specific small housing space (a studio, or a "small office - home office") becomes a "deterritorialized" room within a larger housing program that we'll find on the upper level(s), likely ground floor or level +2 of the overall compound.

4) Using the patterns emerging from different spatial components (heat, light, air quality --dried, charged in positive ions--, wifi connectivity), a map is traced and "moiré" patterns of spatial configurations ("moiré spaces") start to happen. These define spatial qualities. Functions are "structurelessly" placed accordingly, on a "best matching location" basis (needs in heat, humidity, light, connectivity), which connects this approach to the one of Philippe Rahm, initiated in a former research project, Form & Function Follow Climate (2006), or also to the one of Walter Henn, Bürolandschaft (1963), if not the one of Junya Ishigami's Kanagawa Institute.
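
To make the "best matching location" idea a bit more concrete, here is a minimal sketch and not the method used in the project: each cell of a hypothetical grid carries normalised values for heat, light and connectivity (0 to 1), each function declares the conditions it needs, and a simple greedy pass gives every function the free cell whose conditions are closest. All names and numbers below are invented for illustration.

```python
from math import dist
from typing import Dict, Tuple

Cell = Tuple[int, int]
Climate = Tuple[float, float, float]  # (heat, light, connectivity), each 0..1

# Hypothetical sampled conditions on a 2 x 2 grid (invented values).
conditions: Dict[Cell, Climate] = {
    (0, 0): (0.5, 1.0, 1.0),
    (0, 1): (0.9, 0.8, 1.0),
    (1, 0): (0.2, 0.3, 0.6),
    (1, 1): (0.6, 0.5, 0.9),
}

# Hypothetical needs of three domestic functions (invented values).
needs: Dict[str, Climate] = {
    "working": (0.5, 1.0, 1.0),
    "sleeping": (0.2, 0.2, 0.5),
    "bathing": (0.9, 0.8, 0.8),
}


def place(needs: Dict[str, Climate], conditions: Dict[Cell, Climate]) -> Dict[str, Cell]:
    """Greedily assign each function to the closest-matching free cell.

    The result depends on the order of `needs`; an optimal assignment
    would require a proper matching algorithm instead.
    """
    free = dict(conditions)
    placement: Dict[str, Cell] = {}
    for name, need in needs.items():
        best = min(free, key=lambda cell: dist(free[cell], need))
        placement[name] = best
        del free[best]  # one function per cell in this sketch
    return placement


if __name__ == "__main__":
    print(place(needs, conditions))
```

There are no walls or fixed plan in this sketch: only the measured fields decide where a function lands, which is the sense of "structureless" placement suggested above.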

Note also that this is a line of work that we are following in another experimental project at fabric | ch, about which we also hope to publish during the year, Algorithmic Atomized Functioning --a glimpse of which can be seen in Desierto Issue #3, 28° Celsius.

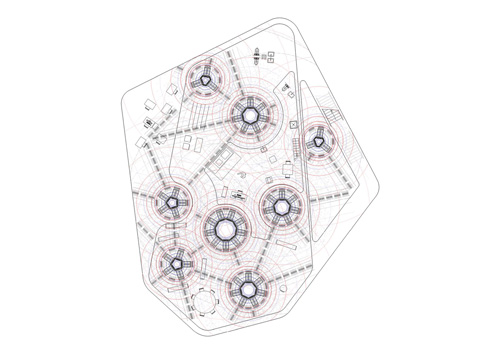

5) On ground level or on level +2 sit the rest of the larger house program and a few parts of the data center that emerge. There are no other heating or artificial lighting devices besides the ones provided by the data center program itself. The energy spent by the data center must serve the house and somehow be saved by it. Fresh and hot zones, artificial light, connectivity, etc. are provided by the data center emergences in the house, as well as from the open "small office - home office" located one floor below. Again, a map is traced and moiré patterns of specific locations and spatial configurations emerge. Functions are also placed accordingly (hot, cold, lit, connected zones).

A "creolized" housing object starts, or tries, to appear, somewhere in between a symbiotic fragmented data center and a house, possibly sustaining or triggering new inhabiting patterns...

--------------------------------

Project (ongoing): fabric | ch

Team: Patrick Keller, Christophe Guignard, Christian Babski, Sinan Mansuroglu

Tuesday, December 23. 2014

Can Sucking CO2 Out of the Atmosphere Really Work? | #atmosphere

-----

A Columbia scientist and his startup think they have a plan to save the world. Now they have to convince the rest of us.

By Eli Kintisch

CTO and co-founder Peter Eisenberger in front of Global Thermostat’s air-capturing machine.

Physicist Peter Eisenberger had expected colleagues to react to his idea with skepticism. He was claiming, after all, to have invented a machine that could clean the atmosphere of its excess carbon dioxide, making the gas into fuel or storing it underground. And the Columbia University scientist was aware that naming his two-year-old startup Global Thermostat hadn’t exactly been an exercise in humility.

But the reception in the spring of 2009 had been even more dismissive than he had expected. First, he spoke to a special committee convened by the American Physical Society to review possible ways of reducing carbon dioxide in the atmosphere through so-called air capture, which means, essentially, scrubbing it from the sky. They listened politely to his presentation but barely asked any questions. A few weeks later he spoke at the U.S. Department of Energy’s National Energy Technology Laboratory in West Virginia to a similarly skeptical audience. Eisenberger explained that his lab’s research involves chemicals called amines that are already used to capture concentrated carbon dioxide emitted from fossil-fuel power plants. This same amine-based technology, he said, also showed potential for the far more difficult and ambitious task of capturing the gas from the open air, where carbon dioxide is found at concentrations of 400 parts per million. That’s up to 300 times more diffuse than in power plant smokestacks. But Eisenberger argued that he had a simple design for achieving the feat in a cost-effective way, in part because of the way he would recycle the amines. “That didn’t even register,” he recalls. “I felt a lot of people were pissing on me.”

The next day, however, a manager from the lab called him excitedly. The DOE scientists had realized that amine samples sitting around the lab had been bonding with carbon dioxide at room temperature—a fact they hadn’t much appreciated until then. It meant that Eisenberger’s approach to air capture was at least “feasible,” says one of the DOE lab’s chemists, Mac Gray.

Five years later, Eisenberger’s company has raised $24 million in investments, built a working demonstration plant, and struck deals to supply at least one customer with carbon dioxide harvested from the sky. But the next challenge is proving that the technology could have a transformative impact on the world, befitting his company’s name.

The need for a carbon-sucking machine is easy to see. Most technologies for mitigating carbon dioxide work only where the gas is emitted in large concentrations, as in power plants. But air-capture machines, installed anywhere on earth, could deal with the 52 percent of carbon-dioxide emissions that are caused by distributed, smaller sources like cars, farms, and homes. Secondly, air capture, if it ever becomes practical, could gradually reduce the concentration of carbon dioxide in the atmosphere. As emissions have accelerated—they’re now rising at 2 percent per year, twice as rapidly as they did in the last three decades of the 20th century—scientists have begun to recognize the urgency of achieving so-called “negative emissions.”

The obvious need for the technology has enticed several other efforts to come up with various approaches that might be practical. For example, Carbon Engineering, based in Calgary, captures carbon using a liquid solution of sodium hydroxide, a well-established industrial technique. A firm cofounded by an early pioneer of the idea, Eisenberger’s Columbia colleague Klaus Lackner, worked on the problem for several years before giving up in 2012.

A report released in April by the Intergovernmental Panel on Climate Change says that meeting the internationally agreed-upon goal of limiting global warming to 2 °C will likely require the global deployment of “carbon dioxide removal” strategies like air capture. (See “The Cost of Limiting Climate Change Could Double without Carbon Capture Technology.”) “Negative emissions are definitely needed to restore the atmosphere given that we’re going to far exceed any safe limit for CO2, if there is one,” says Daniel Schrag, director of the Harvard University Center for the Environment. “The question in my mind is, can it be done in an economical way?”

Most experts are skeptical. (See “What Carbon Capture Can’t Do.”) A 2011 report by the American Physical Society identified key physical and economic challenges. The fact that carbon dioxide will bind with amines, forming a molecule called a carbamate, is well known chemistry. But carbon dioxide still represents only one in 2,500 molecules in the air. That means an effective air-capture machine would need to push vast amounts of air past amines to get enough carbon dioxide to stick to them and then regenerate the amines to capture more. That would require a lot of energy and thus be very expensive, the 2011 report said. That’s why it concluded that air capture “is not currently an economically viable approach to mitigating climate change.”
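
The concentration figures quoted in the article can be checked with a little arithmetic. The sketch below only uses numbers given in the text (400 parts per million in open air, flue gas "up to 300 times" more concentrated); the function names are made up for illustration, and the flue-gas share it prints is derived from those two figures, not measured.

```python
def one_in_n(ppm: float) -> float:
    """Convert a concentration in parts per million to 'one molecule in N'."""
    return 1_000_000 / ppm


def fraction(ppm: float, factor: float = 1.0) -> float:
    """Share of molecules (0..1) at `ppm`, optionally scaled by `factor`."""
    return ppm * factor / 1_000_000


if __name__ == "__main__":
    ambient_ppm = 400  # figure quoted in the article
    flue_factor = 300  # "up to 300 times" more concentrated, per the article

    print(f"open air: 1 molecule in {one_in_n(ambient_ppm):.0f}")
    print(f"flue gas: about {fraction(ambient_ppm, flue_factor):.0%} CO2")
```

Under these figures, 400 ppm works out to one molecule in 2,500, and a stream 300 times more concentrated would be about 12 percent carbon dioxide, which gives a sense of how much more air has to pass over the amines.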

The people at Global Thermostat understand these daunting economics but remain defiantly optimistic. The way to make air capture profitable, says Global Thermostat cofounder Graciela Chichilnisky, a Columbia University economist and mathematician, is to take advantage of the demand for the gas by various industries. There already exists a well-established, billion-dollar market for carbon dioxide, which is used to rejuvenate oil wells, make carbonated beverages, and stimulate plant growth in commercial greenhouses. Historically, the gas sells for around $100 per ton. But Eisenberger says his company’s prototype machine could extract a concentrated ton of the gas for far less than that. The idea is to first sell carbon dioxide to niche markets, such as oil-well recovery, to eventually create bigger ones, like using catalysts to make fuels in processes that are driven by solar energy. “Once capturing carbon from the air is profitable, people acting in their own self-interest will make it happen,” says Chichilnisky.

Warming up

Eisenberger and Chichilnisky were colleagues at Columbia in 2008 when they realized that they had complementary interests: his in energy, and hers in environmental economics, including work to help shape the 1997 Kyoto Protocol, the first global treaty on cutting emissions. Nations had pledged big cuts, says Chichilnisky, but economic and political realities had provided “no way to implement it.” The pair decided to create a business to tackle the carbon challenge.

They focused on air capture, which was first developed by Nazi scientists who used liquid sorbents to remove accumulations of CO2 in submarines. In the winter of 2008 Eisenberger sequestered himself in a quiet house with big glass windows overlooking the ocean in Mendocino County, California. There he studied existing literature on capturing carbon and made a key decision. Scientists developing techniques to capture CO2 have thus far sought to work at high concentrations of the gas. But Eisenberger and Chichilnisky focused on another term in those equations: temperature.

Engineers have previously deployed amines to scrub CO2 from flue gases, whose temperatures are around 70 °C when they exit power plants. Subsequently removing the CO2 from the amines (“regenerating” the amines) generally requires reactions at 120 °C. By contrast, Eisenberger calculated that his system would operate at roughly 85 °C, requiring less total energy. It would use relatively cheap steam for two purposes. The steam would heat the surface, driving the CO2 off the amines to be collected, while also blowing CO2 away from the surface.

The upshot? With less heat-management infrastructure than what is required with amines in the smokestacks of power plants, the design of a scrubber could be simpler and therefore cheaper. Using data from their prototype, Eisenberger’s team figures the approach could cost between $15 and $50 per ton of carbon dioxide captured from air, depending on how long the amine surfaces last.

If Global Thermostat can achieve anywhere near the prices it’s touting, a number of niche markets beckon. The startup has partnered with a Carson City, Nevada-based company called Algae Systems to make biofuels using carbon dioxide and algae. Meanwhile the demand is rising for carbon dioxide to inject into depleted oil wells, a technique known as enhanced oil recovery. One study estimates that the application could require as much as 3 billion tons of carbon dioxide annually by 2021, a nearly tenfold increase over the 2011 market.

That still represents a drop in the bucket in terms of the amounts needed to reduce or even stabilize the concentration of CO2 in the atmosphere. But Eisenberger says there are really no alternatives to air capture. Simply capturing carbon emissions from coal-fired power plants, he says, only extends society’s dependence on carbon-intensive coal.

Suck it up

It’s a warm December afternoon in Silicon Valley as Eisenberger and I make our way across SRI International’s concrete research center. It’s in these low-slung buildings where engineers first developed ARPAnet, Apple’s Siri software, and countless other technological advances. About a quarter mile from the entrance, a 40-foot-high tower of fans, steel, and silver tubes comes into view. This is the Global Thermostat demonstration plant. It’s imposing and clean. Eisenberger gazes at the quiet scene around the tower, which includes a tall tree. “It’s doing exactly what the tree is doing,” says Eisenberger. But then he corrects himself. “Well, actually, it’s doing it a lot better.”

After Eisenberger earned a PhD in physics in 1967 at Harvard, stints at Bell Labs, Princeton, and Stanford followed. At Exxon in the 1980s he led work on solar energy, then served as director of Lamont-Doherty, the geosciences lab at Columbia. There he has taught a long-standing seminar called “The Earth/Human system.” It was in that seminar, in 2007, with Lackner as a guest lecturer, that Eisenberger first heard about air capture. After a year or so of preparation, he and Chichilnisky reached out to billionaire Edgar Bronfman Jr. “Sometimes when you hear something that must be too good to be true, it’s because it is,” was Bronfman’s reaction, according to his son, who was present at the meeting. But the scion implored his father: “If they’re right, this is one of the biggest opportunities out there.” The family invested $18 million.

That largesse has allowed the company to build its demonstration despite basically no federal support for air capture research. (Global Thermostat chose SRI as its site due to the facility’s prior experience with carbon-capture technology.) The rectangular tower uses fans to draw air in over alternating 10-foot-wide surfaces known as contactors. Each is comprised of 640 ceramic cubes embedded with the amine sorbent. The tower raises one contactor as another is lowered. That allows the cubes of one to collect CO2 from ambient air while the other is stripped of the gas by the application of the steam, at 85 °C. For now that gas is simply vented, but depending on the customer it could be injected into the ground, shipped by pipe, or transferred to a chemical plant for industrial use.

A key challenge facing the company is the ruggedness of the amine sorbent surfaces. They tend to decay rapidly when oxidized, and frequently replacing the sorbents could make the process much less cost-effective than Eisenberger projects.

False hope

None of the world’s thousands of coal plants have been outfitted for full-scale capture of their carbon pollution. And if it isn’t economical for use in power plants, with their concentrated source of carbon dioxide, the prospects of capturing it out of the air seem dim to many experts. “There’s really little chance that you could capture CO2 from ambient air more cheaply than from a coal plant, where the flue gas is 300 times more concentrated,” says Robert Socolow, director of the Princeton Environment Institute and co-director of the university’s carbon mitigation initiative.

Adding to the skepticism over the feasibility of air capture is that there are other, cheaper ways to create the so-called negative emissions. A more practical way to do it, Schrag says, would involve deriving fuels from biomass—which removes CO2 from the atmosphere as it grows. As that feedstock is fermented in a reactor to create ethanol, it produces a stream of pure carbon dioxide that can be captured and stored underground. It’s a proven technique and has been tested at a handful of sites worldwide.

Even if air capture were to someday prove profitable, whether it should be scaled up is another question. Say a solar power plant is built outside an existing coal plant. Should the energy the new solar plant produces be used to suck carbon out of the atmosphere, or to allow the coal plant to be shut down by replacing its energy output? The latter makes much more sense, says Socolow. He and others have another concern about air capture: that claims about its feasibility could breed complacency. “I don’t want us to give people the false hope that air capture can solve the carbon emissions problem without a strong focus on [reducing the use of] fossil fuels,” he says.

Eisenberger and Chichilnisky are adamant about the importance of sucking CO2 out of the atmosphere rather than focusing entirely on capturing it from coal plants. In 2010, the pair developed a version of their technology that mixes air with flue gas from a coal or gas-fired power plant. That approach provides a source of steam while capturing both atmospheric carbon and new emissions. It also could lower costs by providing a higher concentration of CO2 for the machine to capture. “It’s a very impressive system, a triumph,” says Socolow, who thinks scientific advances made in air capture will eventually be used primarily on coal and gas power plants.

Such an application could play a critical role in cleaning up greenhouse gas emissions. But Eisenberger has revealed even loftier goals. A patent granted to him and Chichilnisky in 2008 described air capture technology as, among other things, “a global thermostat for controlling average temperature of a planet’s atmosphere.”

Eli Kintisch is a correspondent for Science magazine.

Friday, April 25. 2014

Forget Flying Cars – Smart Cities Just Need Smart Citizens | #smart?

Via ArchDaily via The European

-----

This article by Carlo Ratti originally appeared in The European titled “The Sense-able City”. Ratti outlines the driving forces behind the Smart Cities movement and explains why we may be best off focusing on retrofitting existing cities with new technologies rather than building new ones.

What was empty space just a few years ago is now becoming New Songdo in Korea, Masdar in the United Arab Emirates or PlanIT in Portugal — new “smart cities”, built from scratch, are sprouting across the planet and traditional actors like governments, urban planners and real estate developers, are, for the first time, working alongside large IT firms — the likes of IBM, Cisco, and Microsoft.

The resulting cities are based on the idea of becoming “living labs” for new technologies at the urban scale, blurring the boundary between bits and atoms, habitation and telemetry. If 20th century French architect Le Corbusier advanced the concept of the house as a “machine for living in”, these cities could be imagined as inhabitable microchips, or “computers in open air”.

Wearable Computers and Smart Trash

The very idea of a smart city runs parallel to “ambient intelligence” — the dissemination of ubiquitous electronic systems in our living environments, allowing them to sense and respond to people. That fluid sensing and actuation is the logical conclusion of the liberation of computing: from mainframe solidity to desktop fixity, from laptop mobility to handheld ubiquity, to a final ephemerality as computing disappears into the environment and into humans themselves with development of wearable computers.

It is impossible to forget the striking side-by-side images of the past two Papal Inaugurations: the first, for Benedict XVI in 2005, shows the raised hands of a cheering crowd, while the second, for Francis in 2013, shows a glimmering constellation of smartphone screens held aloft to take pictures. Smart cities are enabled by the atomization of technology, ushering in an age when the physical world is indistinguishable from its digital overlay.

The key mechanism behind ambient intelligence, then, is “sensing” — the ability to measure what happens around us and to respond dynamically. New means of sensing are suffusing every aspect of urban space, revealing its visible and invisible dimensions: we are learning more about our cities so that they can learn about us. As people talk, text, and browse, data collected from telecommunication networks is capturing urban flows in real time and crystallizing them as Google’s traffic congestion maps.

Like a tracer running through the veins of the city, networks of air quality sensors attached to bikes can help measure an individual’s exposure to pollution and draw a dynamic map of the urban air on a human scale, as in the case of the Copenhagen Wheel developed by new startup Superpedestrian. Even trash could become smarter: the deployment of geolocating tags attached to ordinary garbage could paint a surprising picture of the waste management system, as trash is shipped throughout the country in a maze-like disposal process — as we saw in Seattle with our own Trash Track project.

Afraid of Our Own Bed

Today, people themselves (equipped with smartphones, naturally) can be instruments of sensing. Over the past few years, a new universe of urban apps has appeared — allowing people to broadcast their location, information and needs — and facilitating new interactions with the city. Hail a taxi (“Uber”), book a table for dinner (“OpenTable”), or have physical encounters based on proximity and profiles (“Grindr” and “Blendr”): real-time information is sent out from our pockets, into the city, and right back to our fingertips.

In some cases, the very process of sensing becomes a deliberate civic action: citizens themselves are taking an increasingly active role in participatory data sharing. Users of Waze automatically upload detailed road and traffic information so that their community can benefit from it. 311-type apps allow people to report non-emergencies in their immediate neighborhood, from potholes to fallen tree branches, and subsequently organize a fix. OpenStreetMap does the same, enabling citizens to collaboratively draw maps of places that have never been systematically charted before, especially in developing countries not yet graced by a visit from Google.

These examples show the positive implications of ambient urban intelligence but the data that emerges from fine-grained sensing is inherently neutral. It is a tool that can be used in many different applications, and to widely varying ends. As artist-turned-XeroxPARC-pioneer Rich Gold once asked in an incisive (and humorous) essay: “How smart does your bed have to be, before you are afraid to go to sleep at night?” What might make our nights sleepless, in this case, is the sheer amount of data being generated by sensing. According to a famous quantification by Google’s Eric Schmidt, every 48 hours we produce as much data as all of humanity until 2003 (an estimation that is already three years old). Who has access to this data? How do we avoid the dystopian ending of Italo Calvino’s 1960s short story “The Memory of the World,” where humanity’s act of infinite recording unravels as intrigue, drama, and murder?

And finally, does this new pervasive data dimension require an entirely new city? Probably not. Of course, ambient intelligence might have architectural ramifications, like responsive building facades or occupant-targeted climates. But in each of the city-sensing examples above, technology does not necessarily call for new urban space — many IT-infused “smart city initiatives” feel less like a necessity and more like a justification of real estate operations on a massive scale – with a net result of bland spatial products.

Forget About Flying Cars

Ambient intelligence can indeed pervade new cities, but perhaps most importantly, it can also animate the rich, chaotic erstwhile urban spaces — like a new operating system for existing hardware. This was already noted by Bill Mitchell at the beginning of our digital era: “The gorgeous old city of Venice […] can integrate modern telecommunications infrastructure far more gracefully than it could ever have adapted to the demands of the industrial revolution.” Could ambient intelligence bring new life to the winding streets of Italian hill towns, the sweeping vistas of Santorini, or the empty husks of Detroit?

We might need to forget about the flying cars that zip through standard future cities discourse. Urban form has shown an impressive persistence over millennia: most elements of the modern city were already present in Greek and Roman times. Humans have always needed, and will continue to need, the same physical structures for their daily lives: horizontal planes and vertical walls (no offense, Frank O. Gehry). But the very lives that unfold inside those walls are now the subject of one of the most striking transformations in human history. Ambient intelligence and sensing networks will not change the container but the contained; not smart cities but smart citizens.

This article by Carlo Ratti originally appeared in The European Magazine

Thursday, April 17. 2014

Air pollution now the world’s biggest environmental health risk with 7 million deaths per year | #air #health

Following last month's catastrophic measurements in Paris. Not cheerful information, yet good to know. Air quality will undoubtedly become a very big (geo)political issue in the coming years, and certainly an engineering one too.

Via Treehugger

-----

The World Health Organization (WHO) released a report last year showing that air pollution killed more people than AIDS and malaria combined. It was based on 2010 figures, which were the latest available at the time. There's now a new study which looked at 2012 data, and it seems like things are even worse than we first believed.

“The risks from air pollution are now far greater than previously thought or understood, particularly for heart disease and strokes,” says Dr Maria Neira, Director of WHO’s Department for Public Health, Environmental and Social Determinants of Health. “Few risks have a greater impact on global health today than air pollution; the evidence signals the need for concerted action to clean up the air we all breathe.”

The WHO found that outdoor air pollution was linked to an estimated 3.7 million deaths in 2012 from urban and rural sources worldwide, and indoor air pollution, mostly caused by cooking (!) on inefficient coal and biomass stoves, was linked to 4.3 million deaths in 2012.

Because many people are exposed to both indoor and outdoor air pollution, there is overlap in these two numbers, but the WHO estimates that the total number of victims from air pollution in 2012 was around 7 million, which is tragic since it would take relatively little in many of those cases to save lives.
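The size of that overlap can be estimated with a back-of-the-envelope calculation. The sketch below is only illustrative: it treats the three rounded WHO figures quoted above as exact, and the variable names are hypothetical.

```python
# Rounded WHO estimates for 2012, in millions of deaths (figures quoted above).
outdoor = 3.7
indoor = 4.3
combined = 7.0  # "around 7 million": a rounded total, treated as exact here

# Inclusion-exclusion: combined = outdoor + indoor - overlap
overlap = outdoor + indoor - combined

print(f"Deaths linked to both sources: roughly {overlap:.1f} million")
```

Since the inputs are rounded, the result is an order-of-magnitude indication rather than a WHO figure.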

And it's not really a question of money, since the health costs and lost productivity caused by air pollution are higher in the long-term...

Here's how the health impacts break down for both indoor and outdoor air pollution:

Outdoor air pollution-caused deaths – breakdown by disease:

- 40% – ischaemic heart disease;

- 40% – stroke;

- 11% – chronic obstructive pulmonary disease (COPD);

- 6% – lung cancer; and

- 3% – acute lower respiratory infections in children.

Indoor air pollution-caused deaths – breakdown by disease:

- 34% - stroke;

- 26% - ischaemic heart disease;

- 22% - COPD;

- 12% - acute lower respiratory infections in children; and

- 6% - lung cancer
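To get a feel for the absolute numbers behind these percentages, the shares can be applied to the two totals quoted earlier. This is a rough sketch, assuming the rounded totals (3.7 and 4.3 million) and the listed shares are exact; the function and variable names are hypothetical.

```python
# Totals in millions of deaths (2012) and percentage shares, as listed above.
outdoor_total = 3.7
indoor_total = 4.3

outdoor_shares = {
    "ischaemic heart disease": 40,
    "stroke": 40,
    "COPD": 11,
    "lung cancer": 6,
    "acute lower respiratory infections in children": 3,
}
indoor_shares = {
    "stroke": 34,
    "ischaemic heart disease": 26,
    "COPD": 22,
    "acute lower respiratory infections in children": 12,
    "lung cancer": 6,
}


def deaths_by_disease(total_millions, shares_percent):
    """Convert percentage shares into approximate deaths, in millions."""
    return {
        disease: total_millions * percent / 100
        for disease, percent in shares_percent.items()
    }


outdoor_deaths = deaths_by_disease(outdoor_total, outdoor_shares)
indoor_deaths = deaths_by_disease(indoor_total, indoor_shares)

for label, table in (("Outdoor", outdoor_deaths), ("Indoor", indoor_deaths)):
    print(label)
    for disease, millions in table.items():
        print(f"  {disease}: about {millions:.2f} million")
```

Because many people are exposed to both kinds of pollution, the two tables should not simply be added together.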

There are lots of big obvious things we can do, such as replacing inefficient and polluting small stoves in poorer countries with better stoves or, even better, electric cooking. Many countries, like China, could also do a lot to cut pollution at their coal plants and over time phase out coal (which isn't just a problem for air pollution, but also for water and ground pollution and global warming). There are all these low-hanging fruits that would make a huge difference. To see how dramatic the improvement could be, just look at these photos showing how bad the situation was in the US not so long ago (China is just repeating what has gone on elsewhere...).

One thing we can do to help: plant more trees! Recent studies show that they are even better at filtering the air in urban areas than we previously thought.

© Michael Graham Richard

Related on TreeHugger.com:

- Think Air Quality Regulations Don't Matter? Look at Pittsburgh in the 1940s!

- This scary map shows the health impacts of coal power plants in China

- The smog in Los Angeles doesn't quite sting like it used to, but there's still work to be done

- Coal pollution in China lowers life expectancy by 5 years

- What kills more people than AIDS and malaria combined? Air pollution

- Trees are awesome: Study shows tree leaves can capture 50%+ of particulate matter pollution

Monday, October 14. 2013

World's Largest Solar Thermal Energy Plant Opens in California

Via Inhabitat via Computed·By

-----

The much-anticipated Ivanpah Solar Electric Generating System just kicked into action in California’s Mojave Desert. The 3,500-acre facility is the world’s largest solar thermal energy plant, and it has the backing of some major players: Google, NRG Energy, BrightSource Energy and Bechtel have all invested in the project, which is constructed on federally-leased public land. The first of Ivanpah’s three towers is now feeding energy into the grid, and once the site is fully operational it will produce 392 megawatts, enough to power 140,000 homes while reducing carbon emissions by 400,000 tons per year.

Ivanpah comprises 300,000 sun-tracking mirrors (heliostats), which surround three 459-foot towers. The sunlight concentrated by these mirrors heats water contained within the towers to create super-heated steam, which then drives turbines on the site to produce power.

The first successfully operating unit will sell power to California’s Pacific Gas and Electric, as will Unit 3 when it comes online in the coming months. Unit 2 is also set to come online shortly, and will provide power to Southern California Edison.

Construction on the facility began in 2010, and the plant achieved its first “flux” in March, a crucial test which proved its readiness to begin commercial operation. Tests this past Tuesday formed Ivanpah’s “first sync,” which began feeding power into the grid.

As John Upton at Grist points out, the project is not without its critics, noting that some “have questioned why a solar plant that uses water would be built in the desert — instead of one that uses photovoltaic panels,” while others have been upset by displacement of local wildlife—notably 100 endangered desert tortoises.

But the Ivanpah plant still constitutes a major milestone, both globally as the world’s largest solar thermal energy plant, and locally for the significant contribution it will make towards California’s renewable energy goal of achieving 3,000 MW of solar generating capacity through public utilities and private ownership.

Personal comment:

After the project in Spain, with early images and controversy dating back to 2012, here comes a new amazing infrastructure / solar power plant in California. Welcome!

This could be the base for a new "dome" over a city that would live in the shadow of its own power supplier... A different 2050 reboot?

I wonder what happens under the mirrors of the California plant btw. I remember these other images from a project in Australia that combined different approaches (solar and wind tower) + specific agriculture in the shaded area.

Monday, October 07. 2013

Building Cities that Think Like Planets

-----

This essay is adapted from Marina Alberti, Cities as Hybrid Ecosystems (forthcoming), and from Marina Alberti, “Anthropocene City,” forthcoming in The Anthropocene Project by the Deutsches Museum, Special Exhibit 2014-2015.

Cities face an important challenge: they must rethink themselves in the context of planetary change. What role do cities play in the evolution of Earth? From a planetary perspective, the emergence and rapid expansion of cities across the globe may represent another turning point in the life of our planet. Earth’s atmosphere, on which we all depend, emerged from the metabolic process of vast numbers of single-celled algae and bacteria living in the seas 2.3 billion years ago. These organisms transformed the environment into a place where human life could develop. Adam Frank, an astrophysicist at the University of Rochester, reminds us that the evolution of life has completely changed major characteristics of the planet (NPR 13.7: Cosmos & Culture, 2012). Can humans now change the course of Earth’s evolution? Can the way we build cities determine the probability of crossing thresholds that will trigger non-linear, abrupt change on a planetary scale (Rockström et al 2009)?

For most of its history, Earth has been relatively stable, and dominated primarily by negative feedbacks that have kept it from getting into extreme states (Lenton and Williams 2013). Rarely has the earth experienced planetary-scale tipping points or system shifts. But the recent increase in positive feedback (i.e., climate change), and the emergence of evolutionary innovations (i.e. novel metabolisms), could trigger transformations on the scale of the Great Oxidation (Lenton and Williams 2013). Will we drive Earth’s ecosystems to unintentional collapse? Or will we consciously steer the Earth towards a resilient new era?

In my forthcoming book, Cities as Hybrid Ecosystems, I propose a co-evolutionary paradigm for building a science of cities that “think like planets” (see the Note at the bottom)— a view that focuses both on unpredictable dynamics and experimental learning and innovation in urban ecosystems. In the book I elaborate on some concepts and principles of design and planning that can emerge from such a perspective: self-organization, heterogeneity, modularity, feedback, and transformation.

How can thinking on a planetary scale help us understand the place of humans in the evolution of Earth and guide us in building a human habitat of the “long now”?

Planetary Scales

Humans make decisions simultaneously at multiple time and spatial scales, depending on the perceived scale of a given problem and the scale of influence of their decision. Yet it is unlikely that this scale extends beyond one generation or includes the entire globe. The human experience of space and time has profound implications for our understanding of world phenomena and for making long- and short-term decisions. In his book What Time Is This Place?, Kevin Lynch (1972) eloquently told us that time is embedded in the physical world that we inhabit and build. Cities reflect our experience of time, and the way we experience time affects the way we view and change the environment. Thus our experience of time plays a crucial role in whether we succeed in managing environmental change. If we are to think like a planet, the challenge will be to deal with scales and events far removed from everyday human experience. Earth is 4.6 billion years old. That’s a big number to conceptualize and account for in our individual and collective decisions.

Thinking like a planet implies expanding the time and spatial scales of city design and planning, but not simply from local to global and from a few decades to a few centuries. Instead, we will have to include the scales of the geological and biological processes on which our planet operates. Thinking on a planetary scale implies expanding the idea of change. Lynch (1972) reminds us that “the arguments of planning all come down to the management of change.” But what is change?

Human experience of change is often confined to fluctuations within a relatively stable domain. However, planet Earth has displayed rare but abrupt changes and regime shifts in the past. Human experience of abrupt change is limited to marked changes in regional system dynamics, such as altered fire regimes and extinctions of species. Yet, since the Industrial Revolution, humans have been pushing the planet outside a stability domain. Will human activities trigger a global regime shift? We can’t answer that, as we don’t understand enough about how regime shifts propagate across scales, but emerging evidence does suggest that if we continue to disrupt ecosystems and climate we face an increasing risk of crossing those thresholds that keep the earth in a relatively stable domain. Until recently our individual behaviors and collective institutions have been shaped primarily by change that we can envision relatively easily on a human time scale. Our behaviors are not tuned to the slow and imperceptible but systematic changes that can drive dramatic shifts in Earth’s systems.

Planetary shifts can be rapid: the glaciation of the Younger Dryas (abrupt climatic change resulting in severe cold and drought) occurred roughly 11,500 years ago, apparently over only a few decades. Or they can unfold slowly: the Himalayas took over a million years to form. Shifts can emerge as the results of extreme events like volcanic eruptions, or relatively slow processes, like the movement of tectonic plates. Though we still don’t completely understand the subtle relationship between local and global stability in complex systems, several scientists hypothesize that the increasing complexity and interdependence of socio-economic networks can produce ‘tipping cascades’ and ‘domino dynamics’ in the Earth’s system, leading to unexpected regime shifts (Helbing 2013, Hughes et al 2013).

Planetary Challenges and Opportunities

A planetary perspective for envisioning and building cities that we would like to live in—cities that are livable, resilient, and exciting—provides many challenges and opportunities. To begin, it requires that we expand the spectrum of imaginary archetypes. Current archetypes reflect skewed and often extreme simplifications of how the universe works, ranging from biological determinism to techno-scientific optimism. At best they represent accurate but incomplete accounts of how the world works. How can we reconcile the messages contained in the catastrophic versus optimistic views of the future of Earth? And, how can we hold divergent explanations and arguments as plausibly true? Can we imagine a place where humans have co-evolved with natural systems? What does that world look like? How can we create that place in the face of limited knowledge and uncertainty, holding all these possible futures as plausible options?

Futures Archetypes. Credits: Upper left: 17th street canal, David Grunfeld Landov Media; Upper right: Qunli National Urban Wetland, Turenscape; Lower left: Hurricane Katrina – NOAA; Lower right: EDITT tower, Hamzah & Yeang

The concept of “planetary boundaries” offers a framework for humanity to operate safely on a planetary scale. Rockström et al (2009) developed the concept of planetary boundaries to inform us about the levels of anthropogenic change that can be sustained so we can avoid potential planetary regime shifts that would dramatically affect human wellbeing. The concept neither implies nor rules out planetary-scale tipping points associated with human drivers. Hughes et al (2013) address some of the misconceptions surrounding planetary-scale tipping points, which confuse a system’s rate of change with the presence or absence of a tipping point. To avoid the potential consequences of unpredictable planetary-scale regime shifts we will have to shift our attention towards the drivers and feedbacks rather than focus exclusively on the detectable system responses. Rockström et al (2009) identify nine areas that are most in need of set planetary boundaries: climate change; biodiversity loss; input of nitrogen and phosphorus in soils and waters; stratospheric ozone depletion; ocean acidification; global consumption of freshwater; changes in land use for agriculture; air pollution; and chemical pollution.

A different emphasis is proposed by those scientists who have advanced the concept of planetary opportunities: solution-oriented research to provide realistic, context-specific pathways to a sustainable future (DeFries et al. 2012). The idea is to shift our attention to how human ingenuity can expand the ability to enhance human wellbeing (i.e. food security, human health), while minimizing and reversing environmental impacts. The concept is grounded in human innovation and the human capacity to develop alternative technologies, implement “green” infrastructure, and reconfigure institutional frameworks. The potential opportunities to explore solution-oriented research and policy strategies are amplified in an urbanizing planet, where such solutions can be replicated and can transform the way we build and inhabit the Earth.

Imagining a Resilient Urban Planet

While these different images of the future are both plausible and informative, they speak about the present more than the future. They all represent an extension of the current trajectory as if the future would unfold along the path of our current way of asking questions, and our way of understanding and solving problems. Yes, these perspectives do account for uncertainty but it is defined by the confidence intervals around this trajectory. Both stories are grounded in the inevitable dichotomies of humans and nature, and technology vs. ecology. These views are at best an incomplete account of what is possible: they reflect a limited ability to imagine the future beyond such archetypes. Why can we imagine smart technologies and not smart behaviors, smart institutions, and smart societies? Why think only of technology and not of humans and their societies that co-evolve with Earth?

Understanding the co-evolution of human and natural systems is key to building a resilient society and transforming our habitat. One of the greatest questions in biology today is whether natural selection is the only process driving evolution and what the other potential forces might be. To understand how evolution constructs the mechanisms of life, molecular biologists would argue that we also need to understand the self-organization of genes governing the evolution of cellular processes and influencing evolutionary change (Johnson and Kwan Lam 2010).

To function, life on Earth depends on the close cooperation of multiple elements. Biologists are curious about the properties of complex networks that supply resources, process waste, and regulate the system’s functioning at various scales of biological organization. West et al. (2005) propose that natural selection solved this problem by evolving hierarchical fractal-like branching. Other characteristics of evolvable systems are flexibility (i.e. phenotypic plasticity), and novelty. This capacity for innovation is an essential precondition for any system to function. Gunderson and Holling (2002) have noted that if systems lack the capacity for innovation and novelty, they may become over-connected and dynamically locked, unable to adapt. To be resilient and evolve, they must create new structures and undergo dynamic change. Differentiation, modularity, and cross-scale interactions of organizational structures have been described as key characteristics of systems that are capable of simultaneously adapting and innovating (Allen and Holling 2010).

Understanding the coevolution of human-natural systems will require advances in the evolutionary and social theories that explain how complex societies and cooperation have evolved. What role does human ingenuity play? In Cities as Hybrid Ecosystems I propose that coupled human-natural systems are governed neither by natural selection alone nor by human ingenuity alone, but by hybrid processes and mechanisms. It is their hybrid nature that makes them unstable and at the same time able to innovate. This novelty of hybrid systems is key to reorganization and renewal. Urbanization modifies the spatial and temporal variability of resources, creates new disturbances, and generates novel competitive interactions among species. This is particularly important because the distribution of ecological functions within and across scales is key to the system being able to regenerate and renew itself (Peterson et al. 1998).

The city that thinks like a planet: What does it look like?

In this blog article I have ventured to pose this question, but I will not venture to provide an answer. In fact no single individual can do that. The answer resides in the collective imagination and evolving behaviors of people of diverse cultures who inhabit a diversity of places on the planet. Humanity has the capacity to think in the long term. Indeed, throughout history, people in societies faced with the prospect of deforestation, or other environmental changes, have successfully engaged in long-term thinking, as Jared Diamond (2005) reminds us: consider Tokugawa shoguns, Inca emperors, New Guinea highlanders, or 16th-century German landowners. Or, more recently, the Chinese. Many countries in Europe, and the United States, have dramatically reduced their air pollution and meanwhile increased their use of energy and combustion of fossil fuels. Humans have the intellectual and moral capacity to do even more when tuned into challenging problems and engaged in solving them.

A city that thinks like a planet is not built on already set design solutions or planning strategies. Nor can we assume that the best solution would work equally well across the world regardless of place and time. Instead, such a city will be built on principles that expand its drawing board and collaborative action to include planetary processes and scales, to position humanity in the evolution of Earth. Such a view acknowledges the history of the planet in every element or building block of the urban fabric, from the building to the sidewalk, from the back yard to the park, from the residential street to the highway. It is a view that is curious about understanding who we are and about taking advantage of the novel patterns, processes, and feedbacks that emerge from human and natural interactions. It is a city grounded in the here and the now and simultaneously in the different time and spatial scales of human and natural processes that govern the Earth. A city that thinks like a planet is simultaneously resilient and able to change.

How can such a perspective guide decisions in practice? Urban planners and decision makers, making strategic decisions and investments in public infrastructure, want to know whether certain generic properties or qualities of a city’s architecture and governance could predict its capacity to adapt and transform itself. Can such a shift in perspective provide a new lens, a new way to interpret the evolution of human settlements, and to support humans in successfully adapting to change? Evidence emerging from the study of complex systems points to their key properties that expand adaptation capacity while enabling them to change: self organization, heterogeneity, modularity, redundancy, and cross-scale interactions.

A co-evolutionary perspective shifts the focus of planning towards human-natural interactions, adaptive feedback mechanisms, and flexible institutional settings. Instead of predefining “solutions” that communities must implement, such a perspective focuses on understanding the ‘rules of the game’, to facilitate self-organization and carefully balance top-down and bottom-up management strategies (Helbing 2013). Planning will then rely on principles that expand the heterogeneity of forms and functions in the urban structures and infrastructures that support the city. These principles support modularity (selected as opposed to generalized connectivity) to create interdependent decentralized systems with some level of autonomy to evolve.

In cities across the world, people are setting great examples that will allow for testing such hypotheses. Human perception of time and experience of change is an emerging key in the shift to a new perspective for building cities. We must develop reverse experiments to explore what works, what shifts the time scale of individual and collective behaviors. Several Northern European cities have adopted successful strategies to cut greenhouse gases, and combined them with innovative approaches that will allow them to adapt to the inevitable consequences of climate change. One example is the Copenhagen 2025 Climate Plan. It lays out a path for the city to become the first carbon-neutral city by 2025 through efficient zero-carbon mobility and building. The city is building a subway project that will place 85 percent of its inhabitants within 650 yards of a Metro station. Nearly three-quarters of the emissions reductions will come as people transition to less carbon-intensive ways of producing heat and electricity through a diverse supply of clean energy: biomass, wind, geothermal, and solar. Copenhagen is also one of the first cities to adopt a climate adaptation plan to reduce its vulnerability to the extreme storm events and rising seas expected in the next 100 years.

In the Netherlands, alternative strategies are being explored to allow people to live with the inevitable floods. These strategies involve building on water to develop floating communities and engineering and implementing adaptive beach protections that take advantage of natural processes. The experimental Sand Motor project uses a combination of wind, waves, tides, and sand to replenish the eroded coasts. The Dutch Rijkswaterstaat and the South Holland provincial authority placed a large amount of sand in the sea as an artificial peninsula 1 km long and 2 km wide, allowing waves and currents to redistribute it and build sand dunes and beaches to protect the coast over time.

New York is setting an example for long-term planning by combining adaptation and transformation strategies into its plan to build a resilient city, and Mayor Michael Bloomberg has outlined a $19.5 billion plan to defend the city against rising seas. In many rapidly growing cities of the Global South, similar leadership is emerging. For example, Johannesburg adopted one of the first climate change adaptation plans, and so have Durban and Cape Town in South Africa and Quito, Ecuador, along with Ho Chi Minh City, Vietnam, where a partnership with the City of Rotterdam, Netherlands, has been established to develop a resilience strategy.

To think like a planet and explore what is possible we may need to reframe our questions. Instead of asking what is good for the planet, we must ask what is good for a planet inhabited by people. What is a good human habitat on Earth? And instead of seeking optimal solutions, we should identify principles that will inform the diverse communities across the world. The best choices may be temporary, since we do not fully understand the mechanisms of life, nor can we predict the consequences of human action. They may very well vary with place and depend on their own histories. But human action may constrain the choices available for life on earth.

Scenario Planning

Scenario planning offers a systematic and creative approach to thinking about the future by letting scientists and practitioners expand old mindsets of ecological sciences and decision making. It provides a tool we can use to deal with the limited predictability of changes on the planetary scale and to support decision-making under uncertainty. Scenarios help bring the future into present decisions (Schwartz 1996). They broaden perspectives, prompt new questions, and expose the possibilities for surprise.

Scenarios have several great features. We expect that they can shift people’s attention toward resilience, redefine decision frameworks, expand the boundaries of predictive models, highlight the risks and opportunities of alternative future conditions, monitor early warning signals, and identify robust strategies (Alberti et al 2013).

A fundamental objective of scenario planning is to explore the interactions among uncertain trajectories that would otherwise be overlooked. Scenarios highlight the risks and opportunities of plausible future conditions. The hypothesis is that if planners and decision makers look at multiple divergent scenarios, they will engage in a more creative process for imagining solutions that would be invisible otherwise. Scenarios are narratives of plausible futures; they are not predictions. But they are extremely powerful when combined with predictive modeling. They help expand boundary conditions and provide a systematic approach we can use to deal with intractable uncertainties and assess alternative strategic actions. Scenarios can help us modify model assumptions and assess the sensitivities of model outcomes. Building scenarios can help us highlight gaps in our knowledge and identify the data we need to assess future trajectories.

Scenarios can also shine spotlights on warning signals, allowing decision makers to anticipate unexpected regime shifts and to act in a timely and effective way. They can support decision making in uncertain conditions by providing us a systematic way to assess the robustness of alternative strategies under a set of plausible future conditions. Although we do not know the probable impacts of uncertain futures, scenarios will provide us the basis to assess critical sensitivities, and identify both potential thresholds and irreversible impacts so we can maximize the wellbeing of both humans and our environment.

A new ethic for a hybrid planet

More than half a century ago, Aldo Leopold (1949) introduced the concept of “thinking like a mountain”: he wanted to expand the spatial and temporal scale of land conservation by incorporating the dynamics of the mountain. Defining a Land Ethic was a first step in acknowledging that we are all part of a larger community that includes soils, waters, plants, and animals, and all the components and processes that govern the land, including the prey and predators. Now, along the same lines, Paul Hirsch and Bryan Norton (2012), in Ethical Adaptation to Climate Change: Human Virtues of the Future (MIT Press), articulate a new environmental ethics by suggesting that we “think like a planet.” Building on Hirsch and Norton’s idea, we need to expand the dimensional space of our mental models of urban design and planning to the planetary scale.

Marina Alberti

Seattle

Note: The metaphor of “thinking like a planet” builds on the idea of cognitive transformation proposed by Paul Hirsch and Bryan Norton (2012) in Ethical Adaptation to Climate Change: Human Virtues of the Future, MIT Press.


Wednesday, September 04. 2013

Subverting Planned Obsolescence With the Fixers Collective

Via Make

-----

Iconoclastic economist Herman Daly helped popularize the term “steady state economics.” It’s a concept many makers are already familiar with whether they know it or not. You can read all about it here, but at its essence steady state economics is a closed loop system that mimics nature in that it does not need new inputs or materials to keep running. It runs at a steady state and doesn’t grow lest it overshoot the carrying capacity of the natural resources on which it depends. Repair, repurposing, and recycling are what make the system work.

Of course, we live in the opposite system, one that requires new resources to build new things to replace last year’s model and all the stuff we throw away because it’s broken or out of style. One of the features of this model is “planned obsolescence.” It’s a great system for getting people to buy new products, but it’s not so great for the planet (see the Great Pacific Garbage Patch, landfill leachate, and climate change for examples).

But like I said, many makers already know the virtues of repurposing and fixing “broken” stuff. One of my favorite examples is the humble Fixers Collective. They describe themselves as an “ongoing social experiment encouraging improvisational fixing and mending and fighting planned obsolescence.” The New York-based group gets together to fix broken appliances and electronics and to give them a second life. The project began as an art project in 2008, but lived on when participants realized they liked the experience of getting together to fix stuff and teach others.

The Fixers Collective will be returning to Maker Faire New York this month. They invite attendees to bring their broken stuff and learn how to fix it. But Vincent Lai realizes many people don’t want to lug broken appliances to the fair, so the group may also have appliances on hand that people can take apart to see how they work and what’s inside.

Program director Vincent Lai says reusing or fixing objects is often better than recycling, citing figures that only 40 to 60 percent of recycled material avoids the landfill. Beyond that, he says it’s fun to watch the “eureka moment” when participants pull the chain on a formerly broken lamp they learned to fix themselves.

Even if you aren’t ready to embrace steady state economics, it’s empowering to know you can fix that old toaster or lamp sitting in your garage. The Fixers Collective can show you how. While the Fixers Collective is based in New York, there are other like-minded groups all over. Here’s a map.


fabric | rblg

This blog is the survey website of fabric | ch - studio for architecture, interaction and research.

We curate and reblog articles, research, writings, exhibitions and projects that we notice and find interesting during our everyday practice and readings.

Most articles concern the intertwined fields of architecture, territory, art, interaction design, thinking and science. From time to time, we also publish documentation about our own work and research, immersed among these related resources and inspirations.

This website is used by fabric | ch as an archive of references and resources. It is shared with all those interested in the same topics as we are, in the hope that they will also find valuable references and content in it.
