Friday, January 23. 2015

Note: Following my recent posts about the research project "Inhabiting & Interfacing the Cloud(s)" that I'm leading for ECAL, Nicolas Nova and I will be present at the next Lift Conference in Geneva (Feb. 4-6, 2015) for a talk combined with a workshop and a Skype session with EPFL (a workshop related to the I&IC research project will be wrapping up at EPFL, in Prof. Dieter Dietz’s ALICE Laboratory, on the day we present in Geneva). If you plan to take part in Lift 15, please come say "hello" and talk with us about the project.

Via the Lift Conference & iiclouds.org

-----

Inhabiting and Interfacing the Cloud(s)

Fri, Feb. 06 2015 – 10:30 to 12:30

Room 7+8 (Level 2)

Patrick Keller: Architect (EPFL), founding member of fabric | ch and Professor at ECAL

Nicolas Nova: Principal at Near Future Laboratory and Professor at HEAD Geneva

Workshop description: Since the end of the 20th century, we have been seeing the rapid emergence of “Cloud Computing”, a new constructed entity that extensively combines information technologies, massive storage of individual or collective data, distributed computational power, distributed access interfaces, security and functionalism.

In a joint design research project that connects the work of interaction designers from ECAL & HEAD with the spatial and territorial approaches of architects from EPFL, we’re interested in exploring the creation of alternatives to the current expression of “Cloud Computing”, particularly in its forms intended for private individuals and end users (“Personal Cloud”). The aim is to offer a critical appraisal of this “iconic” infrastructure of our modern age and its user interfaces, because to date their implementation has chiefly followed a logic of technical development, governed by the commercial interests of large corporations, and continues to be seen partly as a purely functional, centralized setup. However, the Personal Cloud holds a potential that is largely untapped in terms of design, novel uses and territorial strategies.

The workshop will be an opportunity to discuss these alternatives and work on potential scenarios for the near future. More specifically, we will address the following topics:

- How to combine the material part with the immaterial, mediatized part? Can we imagine the geographical fragmentation of these setups?

- Might new interfaces with access to ubiquitous data be envisioned that take nomadic lifestyles into account and let us offer alternatives to approaches based on a “universal” design? Might these interfaces also partake of some kind of repossession of the data by the end users?

- What setups and new combinations of functions need devising for a partly nomadic lifestyle? Can the Cloud/Data Center itself be mobile?

- Might symbioses also be developed at the energy and climate levels (e.g. using the need to cool the machines, which themselves produce heat, in order to develop living strategies there)? If so, with what users (humans, animals, plants)?

The joint design research Inhabiting & Interfacing the Cloud(s) is supported by HES-SO, ECAL & HEAD.

Interactivity: The workshop will start with a general introduction to the project, then move to a discussion of its implications, opportunities and limits. After that, a series of activities will enable break-out groups to sketch potential solutions.

Thursday, November 13. 2014

By fabric | ch

-----

I'm very happy to write that after several months of preparation, I'm leading a new design-research project (which follows Variable Environment, dating back to 2007!) for the University of Art & Design, Lausanne (ECAL), in partnership with Nicolas Nova (HEAD). The project will see the transversal collaboration of architects, interaction designers, ethnographers and scientists with the aim of re-investigating "cloud computing" and its infrastructures from a different point of view. The name of the project is Inhabiting and Interfacing the Cloud(s), and it is now online in the form of a blog that will document our progress. The project should last until 2016.

The main research team is composed of:

Patrick Keller, co-head (Prof. ECAL M&ID, fabric | ch) / Nicolas Nova, co-head (Prof. HEAD MD, Near Future Laboratory) / Christophe Guignard (Prof. ECAL M&ID, fabric | ch) / Lucien Langton (assistant ECAL M&ID) / Charles Chalas (assistant HEAD MD) / Dieter Dietz (Prof. EPFL - Alice) & Caroline Dionne (Post-doc EPFL - Alice) / Dr. Christian Babski (fabric | ch).

I&IC Workshops with students from the HEAD, ECAL (interaction design) and EPFL (architecture) will be conducted by:

James Auger (Prof. RCA, Auger-Loizeau) / Matthew Plummer-Fernandez (Visiting Tutor Goldsmiths College, Algopop) / Thomas Favre-Bulle (Lecturer EPFL).

Finally, a group of "advisors" will keep an eye on us and the research artifacts we may produce:

Babak Falsafi (Prof. EPFL - Ecocloud) / Prof. Zhang Ga (TASML, Tsinghua University) / Dan Hill (City of Sound, Future Cities Catapult) / Ludger Hovestadt (Prof. ETHZ - CAAD) / Geoff Manaugh (BLDGBLOG, Gizmodo).

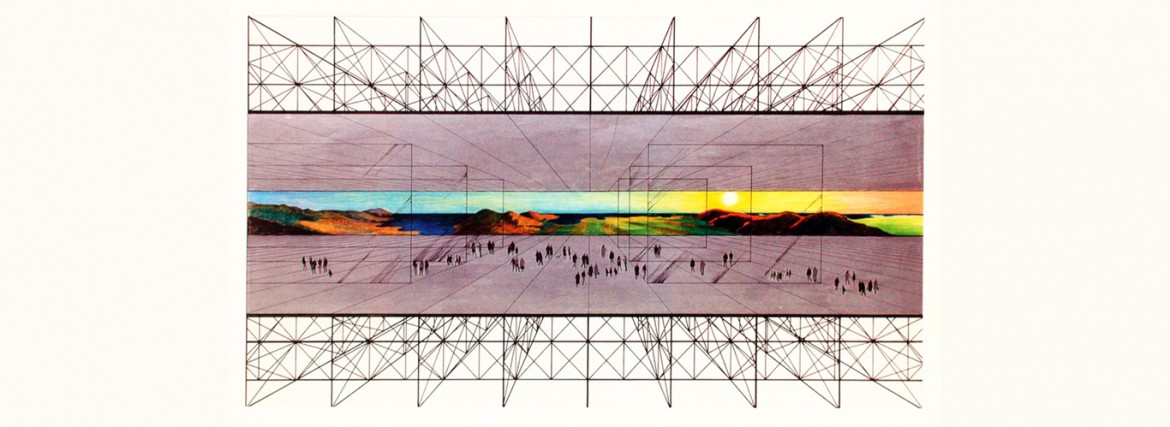

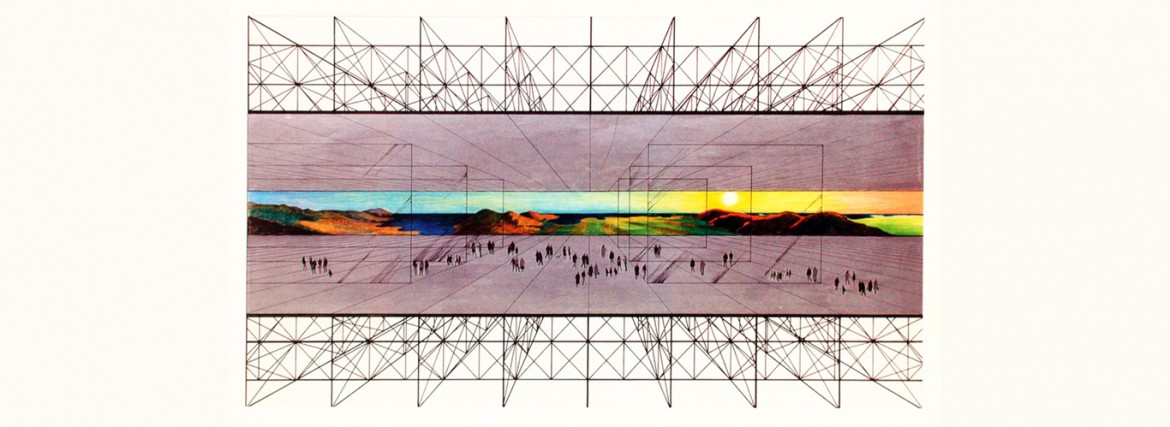

Andrea Branzi, 1969, Research for "No-Stop City".

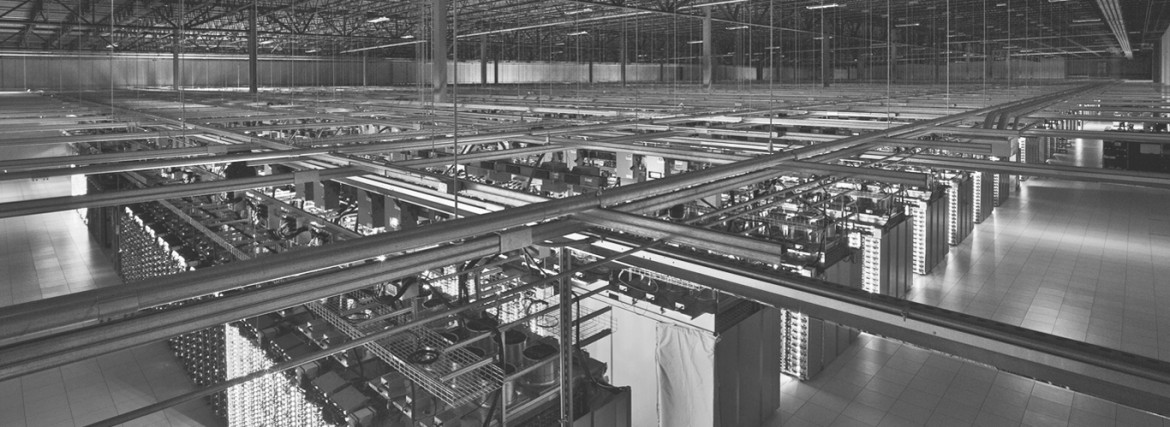

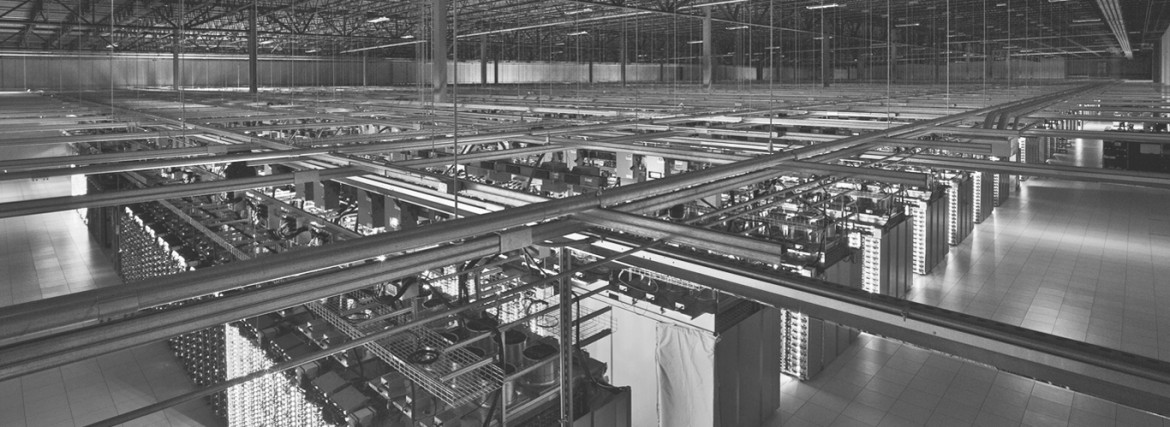

Google data center in Lenoir, North Carolina (USA), 2013.

As stated on the I&IC website:

The design research I&IC (Inhabiting and Interfacing the Clouds) explores the creation of counter-proposals to the current expression of “Cloud Computing”, particularly in its forms intended for private individuals and end users (“Personal Cloud”). It is led by Profs. Patrick Keller (ECAL) and Nicolas Nova (HEAD) and is documented online as a work in progress, 2014-2017.

I&IC is to offer an alternative point of view, a critical appraisal as well as to provide an “access to tools” about this iconic infrastructure of our modernity and its user interfaces, because to date their implementation has followed a logic chiefly of technical development, mainly governed by corporate interests, and continues therefore to be paradoxically envisioned as a purely functional, centralized setup.

However, the Personal Cloud holds a potential that is largely untapped in terms of design, novel uses and territorial strategies. Through its cross-disciplinary approach that links interaction design, the architectural and territorial dimensions as well as ethnographic studies, our project aims at producing alternative models resulting from a more contemporary approach, notably factoring in the idea of creolization (theorized by E. Glissant).

Monday, June 30. 2014

Note: I've already collected articles about this project, which, interestingly, would add a permanent human presence in a layer of the atmosphere (the stratosphere) where humans have so far been absent or only very rarely present. We should also underline the fact that this will be an additional move toward the "brandification/privatization" of the upper levels of our atmosphere (stratosphere, thermosphere) and of outer space.

It is interesting indeed, with clever words like "bringing the internet to millions of people". Yet others take a more critical view of this strategic move by corporate interests: read Google Eyes in the Sky (by Will Oremus on Slate).

Via Next Nature

-----

Balloon-Powered Internet For Everyone

Both Google and Facebook have challenging intentions to bring the Internet to the next billion people, and while Zuckerberg’s dream involves drones with lasers, Google is planning to create a hot air balloon network.

With a system of balloons traveling on the edge of space, Project Loon will attempt to connect to the Internet the two-thirds of the world’s population that doesn’t have access to the Net. The balloons will float in the stratosphere, twice as high as airplanes and the weather. Users can connect to the network using a specific Internet antenna attached to their building.

“Project Loon uses software algorithms to determine where its balloons need to go, then moves each one into a layer of wind blowing in the right direction. By moving with the wind, the balloons can be arranged to form one large communications network,” as explained on the Project Loon website.
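To make the quoted idea a little more concrete, here is a toy Python sketch of "moving a balloon into a layer of wind blowing in the right direction". It is not Google's algorithm: the layer data, the field names (`altitude_km`, `wind_bearing_deg`) and the selection rule (pick the layer whose wind bearing is closest to the desired heading) are all assumptions made for illustration.

```python
def angular_difference(a_deg, b_deg):
    """Smallest absolute difference between two compass bearings, in degrees."""
    diff = abs(a_deg - b_deg) % 360.0
    return min(diff, 360.0 - diff)


def pick_wind_layer(layers, desired_bearing_deg):
    """Return the layer whose wind blows closest to the desired bearing.

    Each layer is a dict with hypothetical keys:
      - "altitude_km": altitude of the wind layer
      - "wind_bearing_deg": direction the wind blows toward (0 = north)
    """
    return min(
        layers,
        key=lambda layer: angular_difference(
            layer["wind_bearing_deg"], desired_bearing_deg
        ),
    )


# Made-up stratospheric layers, purely illustrative.
layers = [
    {"altitude_km": 18.0, "wind_bearing_deg": 270.0},
    {"altitude_km": 20.0, "wind_bearing_deg": 90.0},
    {"altitude_km": 22.0, "wind_bearing_deg": 350.0},
]

# The balloon wants to drift roughly north-north-east (bearing 10 degrees).
best = pick_wind_layer(layers, desired_bearing_deg=10.0)
print(best["altitude_km"])
```

In this sketch the balloon would climb to the 22 km layer, since a wind bearing of 350° is only 20° off the desired heading. A real system would also have to weigh wind speed, forecasts and the positions of the other balloons.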

Currently, Google is still in the testing phase, learning more about wind patterns and improving the balloon design. A step toward universal Internet connection?

Find more at Project Loon

Wednesday, May 28. 2014

Excerpt:

"In some ways, there’s a bug in the open source ecosystem. Projects start when developers need to fix a particular problem, and when they open source their solution, it’s instantly available to everyone. If the problem they address is common, the software can become wildly popular in a flash — whether there is someone in place to maintain the project or not. So some projects never get the full attention from developers they deserve. “I think that is because people see and touch Linux, and they see and touch their browsers, but users never see and touch a cryptographic library,” says Steve Marquess, one of the OpenSSL foundation’s partners."

Via Wired

-----

How Heartbleed Broke the Internet — And Why It Can Happen Again

Illustration: Ross Patton/WIRED

Stephen Henson is responsible for the tiny piece of software code that rocked the internet earlier this week (note: early last month).

The key moment arrived at about 11 o’clock on New Year’s Eve, 2011. With 2012 just minutes away, Henson received the code from Robin Seggelmann, a respected academic who’s an expert in internet protocols. Henson reviewed the code — an update for a critical internet security protocol called OpenSSL — and by the time his fellow Britons were ringing in the New Year, he had added it to a software repository used by sites across the web.

Two years would pass until the rest of the world discovered this, but this tiny piece of code contained a bug that would cause massive headaches for internet companies worldwide, give conspiracy theorists a field day, and, well, undermine our trust in the internet. The bug is called Heartbleed, and it’s bad. People have used it to steal passwords and usernames from Yahoo. It could let a criminal slip into your online bank account. And in theory, it could even help the NSA or China with their surveillance efforts.

It’s no surprise that a small bug would cause such huge problems. What’s amazing, however, is that the code that contained this bug was written by a team of four coders that has only one person contributing to it full-time. And yet Henson’s situation isn’t an unusual one. It points to a much larger problem with the design of the internet. Some of its most important pieces are controlled by just a handful of people, many of whom aren’t paid well — or aren’t paid at all. And that needs to change. Heartbleed has shown — so very clearly — that we must add more oversight to the internet’s underlying infrastructure. We need a dedicated and well-funded engineering task force overseeing not just online encryption but many other parts of the net.

The sad truth is that open source software — which underpins vast swathes of the net — has a serious sustainability problem. While well-known projects such as Linux, Mozilla, and the Apache web server enjoy hundreds of millions of dollars of funding, there are many other important projects that just don’t have the necessary money — or people — behind them. Mozilla, maker of the Firefox browser, reported revenues of more than $300 million in 2012. But the OpenSSL Software Foundation, which raises money for the project’s software development, has never raised more than $1 million in a year; its developers have never all been in the same room. And it’s just one example.

In some ways, there’s a bug in the open source ecosystem. Projects start when developers need to fix a particular problem, and when they open source their solution, it’s instantly available to everyone. If the problem they address is common, the software can become wildly popular in a flash — whether there is someone in place to maintain the project or not. So some projects never get the full attention from developers they deserve. “I think that is because people see and touch Linux, and they see and touch their browsers, but users never see and touch a cryptographic library,” says Steve Marquess, one of the OpenSSL foundation’s partners.

Another Popular, Unfunded Project

Take another piece of software you’ve probably never heard of called Dnsmasq. It was kicked off in the late 1990s by a British systems administrator named Simon Kelley. He was looking for a way for his Netscape browser to tell him whenever his dial-up modem had become disconnected from the internet. Scroll forward 15 years and 30,000 lines of code, and now Dnsmasq is a critical piece of network software found in hundreds of millions of Android mobile phones and consumer routers.

Kelley quit his day job only last year when he got a nine-month contract to do work for Comcast, one of several gigantic internet service providers that ships his code in its consumer routers. He doesn’t know where his paycheck will come from in 2015, and he says he has sympathy for the OpenSSL team, developing critical and widely used software with only minimal resources. “There is some responsibility to be had in writing software that is running as root or being exposed to raw network traffic in hundreds of millions of systems,” he says. Fifteen years ago, if there was a bug in his code, he’d have been the only person affected. Today, it would be felt by hundreds of millions. “With each release, I get more nervous,” he says.

Money doesn’t necessarily buy good code, but it pays for software audits and face-to-face meetings, and it can free up open-source coders from their day jobs. All of this would be welcome at the OpenSSL project, which has never had a security audit, Marquess says. Most of the Foundation’s money comes from companies asking for support or specific development work. Last year, only $2,000 worth of donations came in with no strings attached. “Because we have to produce specific deliverables that doesn’t leave us the latitude to do code audits, security reviews, refactoring: the unsexy activities that lead to a quality code base,” he says.

The problem is also preventing some critical technologies from being added to the internet. Jim Gettys says that a flaw in the way many routers are interacting with core internet protocols is causing a lot of them to choke on traffic. Gettys and a developer named Dave Taht know how to fix the issue — known as Bufferbloat — and they’ve started work on the solution. But they can’t get funding. “This is a project that has fallen through the cracks,” he says, “and a lot of the software that we depend on falls through the cracks one way or another.”

Earlier this year, the OpenBSD operating system, used by security-conscious folks on the internet, nearly shut down after being hit by a $20,000 power bill. Another important project, a Linux distribution for routers called Openwrt, is also “badly underfunded,” Gettys says.

Gettys should know. He helped standardize the protocols that underpin the web and build core components of the Unix operating system, which now serves as the basis for everything from the iPhone to the servers that drive the net. He says there’s no easy answer to the problem. “I think there are ways to put money into the ecosystem,” he says, “but getting people to understand the need has been difficult.”

Eric Raymond, a coder and founder of the Open Source Initiative, agrees. “The internet needs a dedicated civil-engineering brigade to be actively hunting for vulnerabilities like Heartbleed and Bufferbloat, so they can be nailed before they become serious problems,” he said via email. After this week, it’s hard to argue with him.

Monday, May 19. 2014

Via The Verge (via Computed·Blg)

-----

The internet will have nearly 3 billion users, about 40 percent of the world's population, by the end of 2014, according to a new report from the United Nations' International Telecommunication Union. Two-thirds of those users will be in developing countries.

Those numbers refer to people who have used the internet in the last three months, not just those who have access to it.

Internet penetration is reaching saturation in developed countries, while it's growing rapidly in developing countries. Three out of four people in Europe will be using the internet by the end of the year, compared to two out of three in the Americas and one in three in Asia and the Pacific. In Africa, nearly one in five people will be online by the end of the year.

Mobile phone subscriptions will reach almost 7 billion. That growth rate is slowing, suggesting that the number will plateau soon. Mobile internet subscriptions are still growing rapidly, however, and are expected to reach 2.3 billion by the end of 2014.

These numbers make it easy to imagine a future in which every human on Earth is using the internet. The number of people online will still be dwarfed by the number of things, however. Cisco estimates the internet already has 10 billion connected devices and is expected to hit 50 billion by 2020.

Just as we (fabric | ch) are starting a new research project in collaboration with Nicolas Nova and other partners for ECAL and HEAD (currently entitled "Interfacing & Inhabiting the Cloud(s)"), and after visiting some facilities, this documentary by Timo Arnall comes at exactly the right moment!

Via Elasticspace (Timo Arnall)

-----

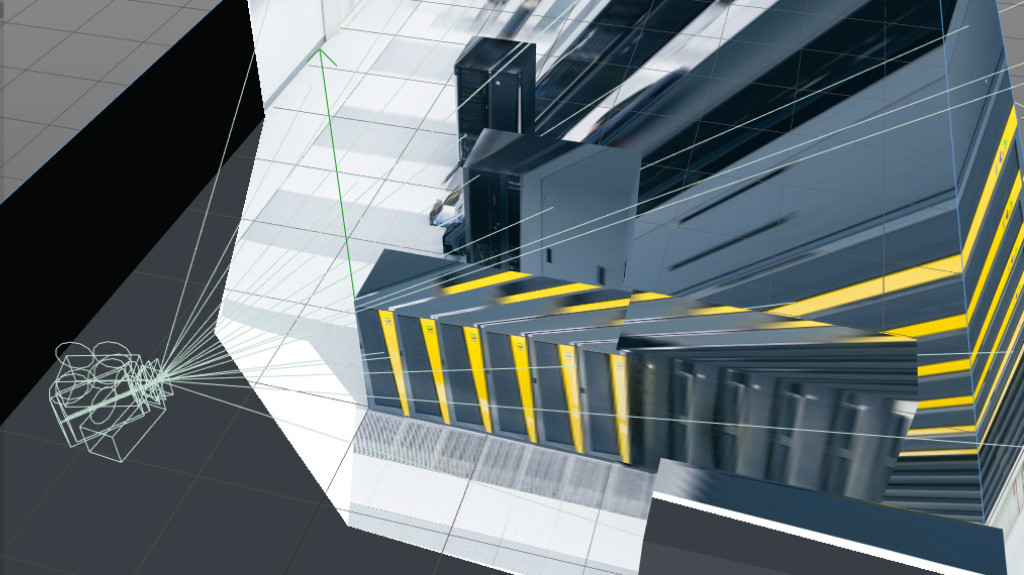

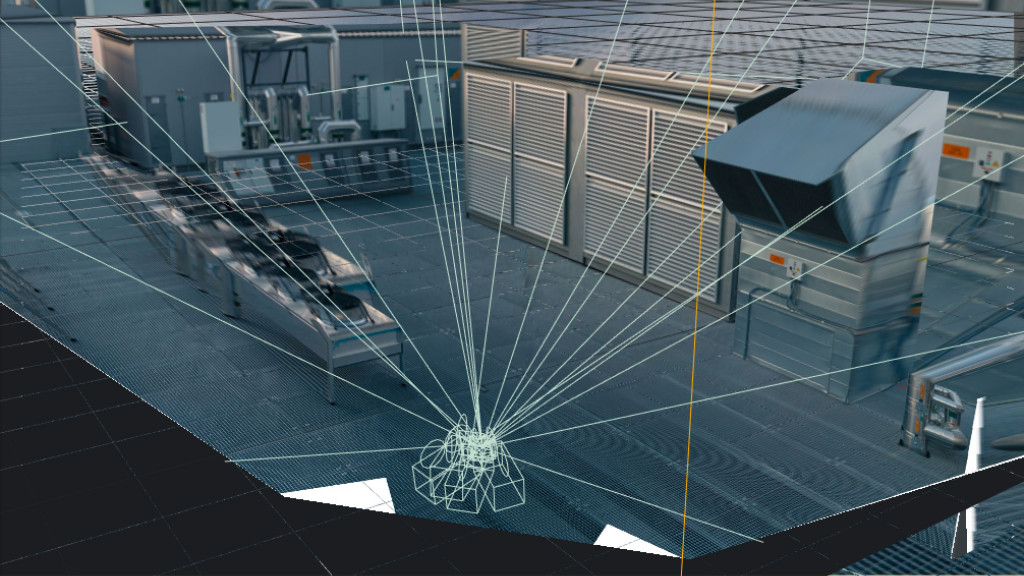

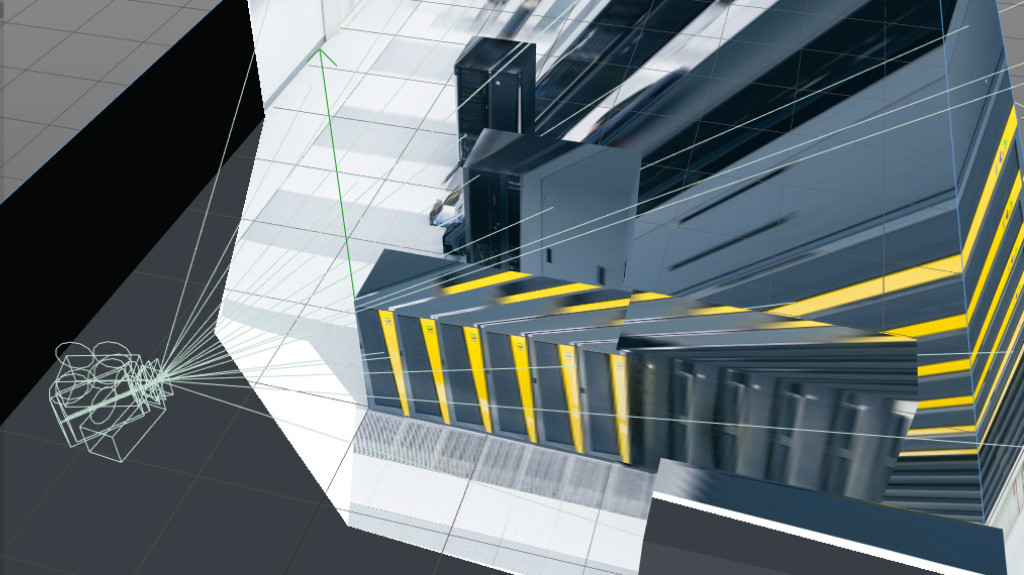

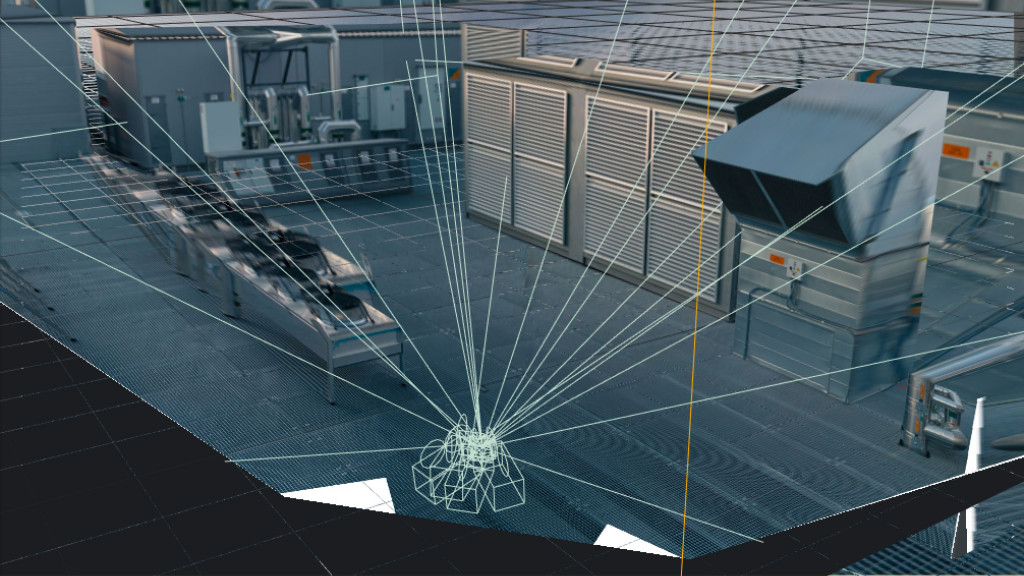

Internet machine is a multi-screen film about the invisible infrastructures of the internet. The film reveals the hidden materiality of our data by exploring some of the machines through which ‘the cloud’ is transmitted and transformed.

Installation: Digital projection, 3 x 16:10 screens, each 4.85m x 2.8m.

Medium: Digital photography, photogrammetry and 3D animation.

Internet machine (showing now at Big Bang Data or watch the trailer) documents one of the largest, most secure and ‘fault-tolerant’ data-centres in the world, run by Telefónica in Alcalá, Spain. The film explores these hidden architectures with a wide, slowly moving camera. The subtle changes in perspective encourage contemplative reflection on the spaces where internet data and connectivity are being managed.

In this film I wanted to look beyond the childish myth of ‘the cloud’, to investigate what the infrastructures of the internet actually look like. It felt important to be able to see and hear the energy that goes into powering these machines, and the associated systems for securing, cooling and maintaining them.

What we find, after being led through layers of identification and security far higher than any airport, are deafeningly noisy rooms cocooning racks of servers and routers. In these spaces you are buffeted by hot and cold air that blusters through everything.

Server rooms are kept cool through quiet, airy ‘plenary’ corridors that divide the overall space. There are fibre optic connections routed through multiple, redundant, paths across the building. In the labyrinthine corridors of the basement, these cables connect to the wider internet through holes in rough concrete walls.

Power is supplied not only through the mains, but backed up with warm caverns of lead batteries, managed by gently buzzing cabinets of relays and switches.

These are backed up in turn by rows of yellow generators, supplied by diesel storage tanks and contracts with fuel supply companies so that the data centre can run indefinitely until power returns.

The outside of the building is a facade of enormous stainless steel water tanks, containing tens of thousands of litres of cool water, sitting there in case of fire.

And up on the roof, to the sound of birdsong, is a football-pitch sized array of shiny aluminium ‘chillers’ that filter and cool the air going into the building.

In experiencing these machines at work, we start to understand that the internet is not a weightless, immaterial, invisible cloud, and instead to appreciate it as a very distinct physical, architectural and material system.

Production

This was a particularly exciting project, a chance for an ambitious and experimental location shoot in a complex environment. Telefónica were particularly accommodating and allowed unprecedented access to shoot across the entire building, not just in the ‘spectacular’ server rooms. Thirty two locations were shot inside the data centre over the course of two days, followed by five weeks of post-production.

I had to invent some new production methods to create a three-screen installation, based on some techniques I developed over ten years ago. The film was shot using both video and stills, using a panoramic head and a Canon 5D mkIII. The video was shot using the Magic Lantern RAW module on the 5D, while the RAW stills were processed in Lightroom and stitched together using Photoshop and Hugin.

The footage was then converted into 3D scenes using camera calibration techniques, so that entirely new camera movements could be created with a virtual three-camera rig. The final multi-screen installation is played out in 4K projected across three screens.

There are more photos available at Flickr.

-

Internet machine is part of BIG BANG DATA, open from 9 May 2014 until 26 October 2014 at CCCB (Barcelona) and from February-May 2015 at Fundación Telefónica (Madrid).

Internet Machine is produced by Timo Arnall, Centre de Cultura Contemporània de Barcelona – CCCB, and Fundación Telefónica. Thanks to José Luis de Vicente, Olga Subiros, Cira Pérez and María Paula Baylac.

Wednesday, February 26. 2014

Three years ago we published a post by Nicolas Nova about Salvador Allende's project Cybersyn, an attempt to build a cybernetic society (including feedback from the Chilean population) back in the early '70s.

Here is another article and picture piece about this amazing project on Frieze. You'll need to buy the magazine to see the pictures, though!

-----

Via Frieze

Photograph of Cybersyn, Salvador Allende's attempt to create a 'socialist internet, decades ahead of its time'

This is a tantalizing glimpse of a world that could have been our world. What we are looking at is the heart of the Cybersyn system, created for Salvador Allende’s socialist Chilean government by the British cybernetician Stafford Beer. Beer’s ambition was to ‘implant an electronic nervous system’ into Chile. With its network of telex machines and other communication devices, Cybersyn was to be – in the words of Andy Beckett, author of Pinochet in Piccadilly (2003) – a ‘socialist internet, decades ahead of its time’.

Capitalist propagandists claimed that this was a Big Brother-style surveillance system, but the aim was exactly the opposite: Beer and Allende wanted a network that would allow workers unprecedented levels of control over their own lives. Instead of commanding from on high, the government would be able to respond to up-to-the-minute information coming from factories. Yet Cybersyn was envisaged as much more than a system for relaying economic data: it was also hoped that it would eventually allow the population to instantaneously communicate its feelings about decisions the government had taken.

In 1973, General Pinochet’s CIA-backed military coup brutally overthrew Allende’s government. The stakes couldn’t have been higher. It wasn’t only that a new model of socialism was defeated in Chile; the defeat immediately cleared the ground for Chile to become the testing-ground for the neoliberal version of capitalism. The military takeover was swiftly followed by the widespread torture and terrorization of Allende’s supporters, alongside a massive programme of privatization and de-regulation. One world was destroyed before it could really be born; another world – the world in which there is no alternative to capitalism, our world, the world of capitalist realism – started to emerge.

There’s an aching poignancy in this image of Cybersyn now, when the pathological effects of communicative capitalism’s always-on cyberblitz are becoming increasingly apparent. Cloaked in a rhetoric of inclusion and participation, semio-capitalism keeps us in a state of permanent anxiety. But Cybersyn reminds us that this is not an inherent feature of communications technology. A whole other use of cybernetic systems is possible. Perhaps, rather than being some fragment of a lost world, Cybersyn is a glimpse of a future that can still happen.

Wednesday, November 27. 2013

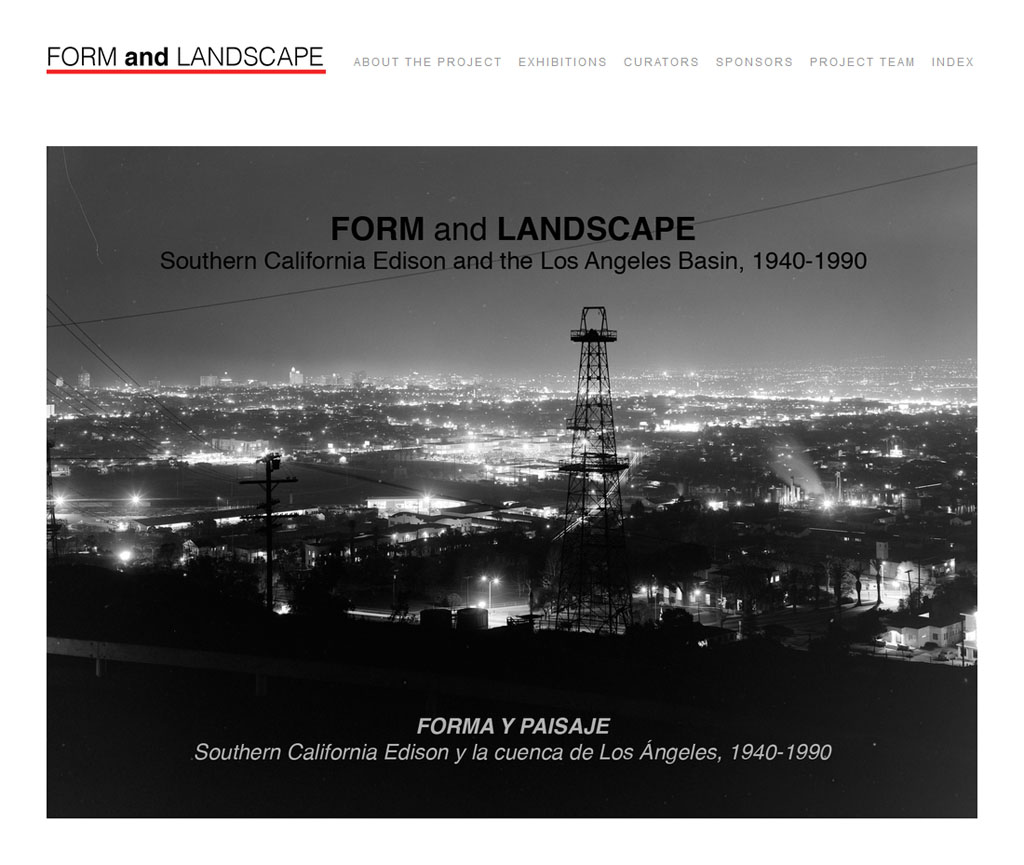

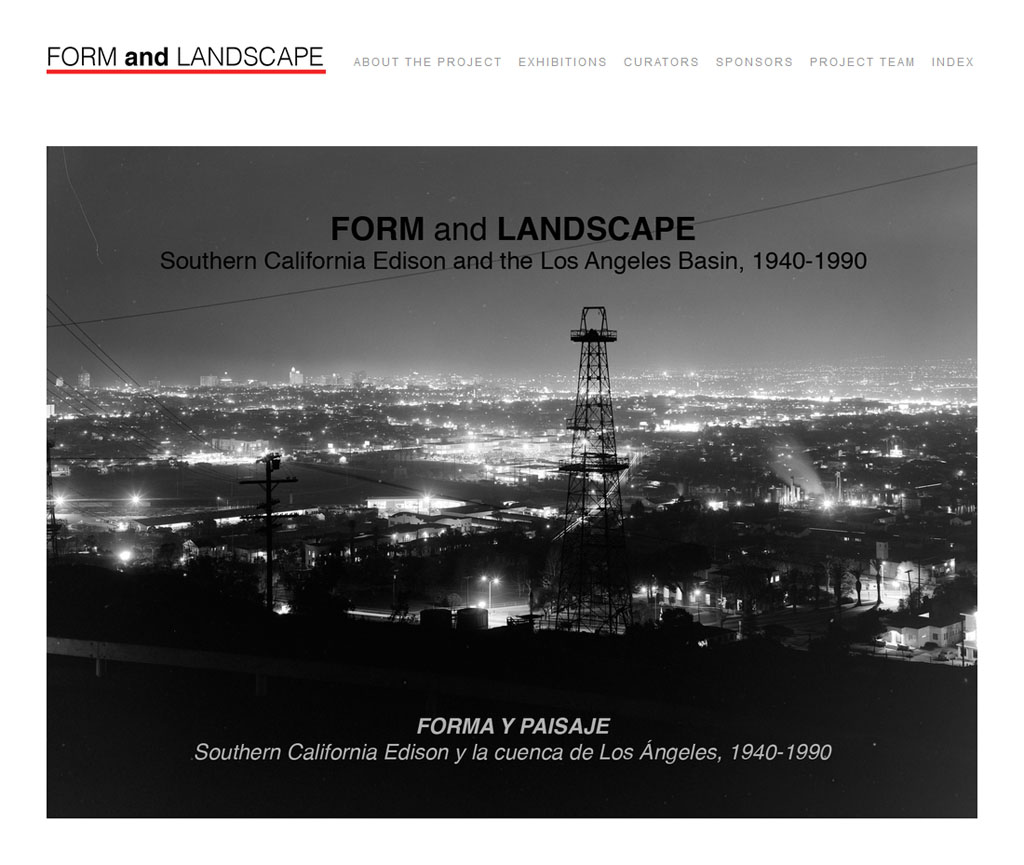

Note: an interesting and superb photo/documentary collection about the electrification of the Los Angeles Basin (1940s-1990s). Thanks to Yoo-Mi Steffen for the link!

-----

A project by William Deverell and Greg Hise.

"In the aftermath of Pacific Standard Time (PST), a uniquely successful collaborative project of exhibitions, public programs, and publications which together took intellectual and aesthetic stock of southern California’s artists, art scenes, and artistic production across nearly the entirety of the post-World War II era, the Getty launched an initiative with a tighter focus on architecture during the same era. “Pacific Standard Time Presents: Modern Architecture in L.A.” has as one of its ambitious goals a collective explication of “how the city was made Modern.” For PST, the Getty partnered with dozens of cultural and educational institutions to offer a diverse and eclectic array of exhibits and programs. The institutional and grant-making alchemy of Getty leadership mixed with centrifugal funding and freedom worked magnificently; in sheer volume and insight alike, the meld of scholarly consideration with public programming revolutionized our collective understanding of the regional art world across four or five decades of the twentieth century. Pacific Standard Time Presents (PSTP) benefits from and builds on that considerable momentum. And that is where this on-line photographic exhibition comes in."

More about it HERE.

Monday, October 14. 2013

Via Inhabitat via Computed·By

-----

The much-anticipated Ivanpah Solar Electric Generating System just kicked into action in California’s Mojave Desert. The 3,500 acre facility is the world’s largest solar thermal energy plant, and it has the backing of some major players; Google, NRG Energy, BrightSource Energy and Bechtel have all invested in the project, which is constructed on federally-leased public land. The first of Ivanpah’s three towers is now feeding energy into the grid, and once the site is fully operational it will produce 392 megawatts — enough to power 140,000 homes while reducing carbon emissions by 400,000 tons per year.

Ivanpah comprises 300,000 sun-tracking mirrors (heliostats), which surround three 459-foot towers. The sunlight concentrated by these mirrors heats up water contained within the towers to create super-heated steam, which then drives turbines on the site to produce power.
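As a quick sanity check on the figures quoted above, the short Python sketch below derives a few ratios from them. The input numbers are the ones given in the article; the derived values are simple averages (nameplate capacity per home, mirrors per tower, mirrors per acre) and are only meant as a rough reading aid, not as engineering data. In particular, the actual number of heliostats per tower may differ from the average, and nameplate capacity is not the same as average output.

```python
# Figures quoted in the article.
CAPACITY_MW = 392          # output once fully operational
HOMES_POWERED = 140_000    # homes the plant is said to power
CO2_TONS_PER_YEAR = 400_000
MIRRORS = 300_000          # sun-tracking heliostats
TOWERS = 3
SITE_ACRES = 3_500

# Derived averages (assumption: everything is spread evenly).
kw_per_home = CAPACITY_MW * 1000 / HOMES_POWERED
co2_tons_per_home = CO2_TONS_PER_YEAR / HOMES_POWERED
mirrors_per_tower = MIRRORS / TOWERS
mirrors_per_acre = MIRRORS / SITE_ACRES

print(f"Nameplate capacity per home: {kw_per_home:.1f} kW")
print(f"CO2 avoided per home: {co2_tons_per_home:.2f} tons/year")
print(f"Average mirrors per tower: {mirrors_per_tower:,.0f}")
print(f"Average mirrors per acre: {mirrors_per_acre:.1f}")
```

Read this way, the "140,000 homes" claim corresponds to about 2.8 kW of nameplate capacity per home, and each tower is fed by roughly 100,000 mirrors on average.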

The first successfully operating unit will sell power to California’s Pacific Gas and Electric, as will Unit 3 when it comes online in the coming months. Unit 2 is also set to come online shortly, and will provide power to Southern California Edison.

Construction of the facility began in 2010, and the plant achieved its first “flux” in March, a crucial test which proved its readiness to begin commercial operation. Tests this past Tuesday formed Ivanpah’s “first sync”, which began feeding power into the grid.

As John Upton at Grist points out, the project is not without its critics, noting that some “have questioned why a solar plant that uses water would be built in the desert — instead of one that uses photovoltaic panels,” while others have been upset by displacement of local wildlife—notably 100 endangered desert tortoises.

But the Ivanpah plant still constitutes a major milestone, both globally as the world’s largest solar thermal energy plant, and locally for the significant contribution it will make towards California’s renewable energy goal of achieving 3,000 MW of solar generating capacity through public utilities and private ownership.

Personal comment:

After the project in Spain, with its early images and controversy dating back to 2012, here comes a new amazing infrastructure / solar power plant in California. Welcome!

This could be the base for a new "dome" over a city that would live in the shadow of its own power supplier... A different 2050 reboot?

I wonder what happens under the mirrors of the California plant btw. I remember these other images from a project in Australia that combined different approaches (solar and wind tower) + specific agriculture in the shaded area.

Tuesday, September 10. 2013

By fabric | ch

-----

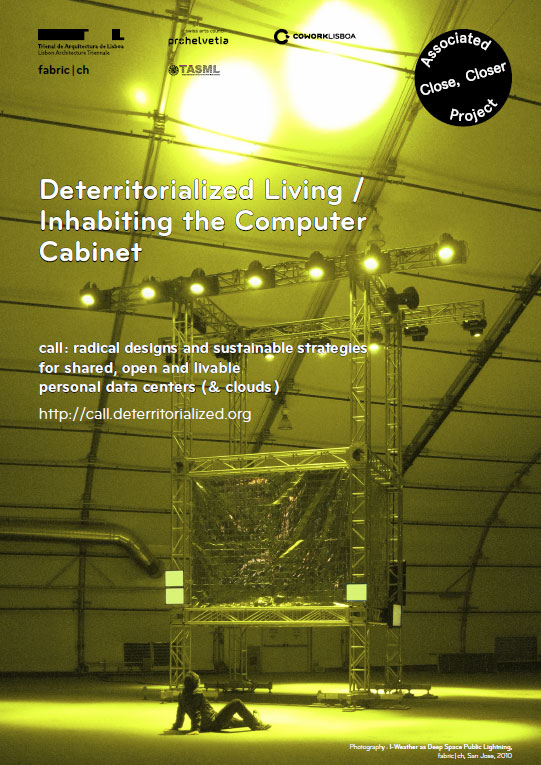

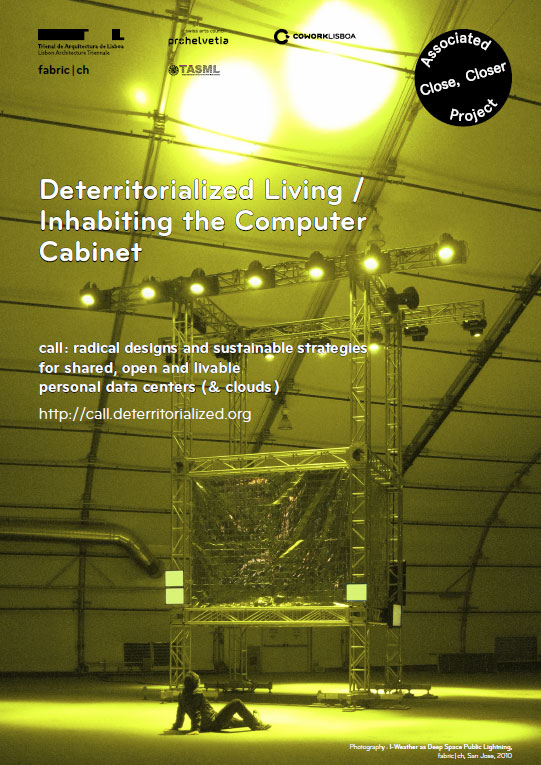

Following our residency in Beijing at Tsinghua University that ended last July, fabric | ch transformed itself into the organizers of a call (!) that will run between next September and October, in partnership with TASML (Beijing). The call is closely related to the work and workshop we've done in Beijing.

The results of this CALL (1st prize) will be presented during the next Lisbon Architecture Triennale, CLOSE, CLOSER (curator Beatrice Galilee), along with a list of EVENTS, as an Associated Program and during a talk together with fabric | ch.

An Award of Distinction is open to international submissions.

You can find below a copy of this open call, dedicated to individuals or interdisciplinary groups of students and faculty members of Tsinghua University in the fields of Architecture, Design, Art and Sciences (1st prize), as well as to the international community (students and professionals, Award of Distinction).

Call: http://call.deterritorialized.org/

Download the call in pdf: http://bit.ly/18LozDr

Download the poster: http://bit.ly/1amu7Gk

Events during Close, Closer: http://beijing.deterritorialized.org/

Please spread the message!

-----

Download the call in English (PDF)

Download the call in Chinese (PDF)

Call: radical designs and sustainable strategies for shared, open and livable personal data centers (& clouds)

In collaboration with fabric | ch, Tsinghua Art & Sciences Research Center Media Laboratory (TASML) is pleased to announce an open call to the Tsinghua community for individuals or interdisciplinary groups of students and faculty in the fields of Architecture, Design, Art and Sciences. Conceived by fabric | ch, the competition is inspired by Deterritorialized Living, a workshop, a project and series of online “tools / atmospherics” developed on the Tsinghua campus during a recent residency.

The purpose of the competition is to explore a radicalized experience of deterritorialization / detemporalization through intensive use of network, transportation and sometimes biochemical devices as well as to investigate alternative strategies in lieu of corporate approaches to data, data centers and cloud computing. The competition aims to develop speculative and innovative artifacts (code, interfaces, programs, objects, devices, spaces, etc.) for this contemporary situation.

Inhabiting the Computer Cabinet competition (Deterritorialized Living, the Beijing sessions) will be an Associated Project during Close, Closer, the much anticipated Lisbon Architecture Triennale that will take place in late summer and fall of 2013, curated by Beatrice Galilee, Liam Young, Mariana Pestana and Jose Esparza Chong Cuy.

Brief

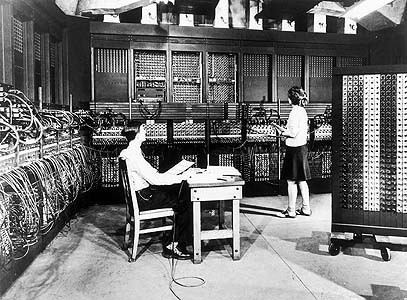

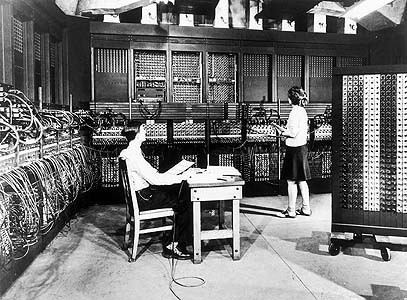

One of the first general-purpose computers, the ENIAC, back in 1946.

Context: Since the public emergence of the Internet and the web in the mid ’90s and the ubiquitous presence of wireless communication, peer to peer exchanges, and social networks of all kinds in recent years, we have witnessed a growing tendency towards horizontally mediated decentralization. These conditions have not only deeply influenced the ways in which people and societies interact (social interactions, exchanges, mobilities, artifacts, economies, etc.), but have also affected how clusters of computers and hardware collaborate or exchange information. To some extent, networks have generated some sort of “geo-engineered” milieu that triggers an experience of delocalization: ambient deterritorialization that is always around, always on.

Recently the “network” concept has started to widen its influence: the energy industry is planning to adopt the horizontal model with its “smart grid” plans, in which everybody should be able to produce their own clean energy and store it or share it with the rest of the community. We can witness something similar in alternative, locally produced food: the idea of distributed food that is produced close to the place where it will be eaten, through the approach of highly decentralized and small scale “gardening” or through certain forms of urban “farming”. Rapid prototyping also helps to spur a similar movement in the product design community.

Yet, on the data side, we are witnessing the exact opposite: we have moved from a fundamentally decentralized model towards a highly “mainframed” (centralized) structure of corporately owned data, services and data centers, although these seemingly “immaterial” information architectures appear to be deceptively decentralized, accessible everywhere, anytime.

Should we then consider personal-urban-“data-farming” instead of corporate data centralization too? Or should we rather try urban-“data-gardening” instead? Could we build a highly decentralized, almost atomized open system of small interconnected data centers? Could we possibly inhabit these data centers, taking advantage of the heat they generate, the high-bandwidth network access they provide, the data they collect? Should we also consider their necessary relocation while taking into account their highly mediated nature?

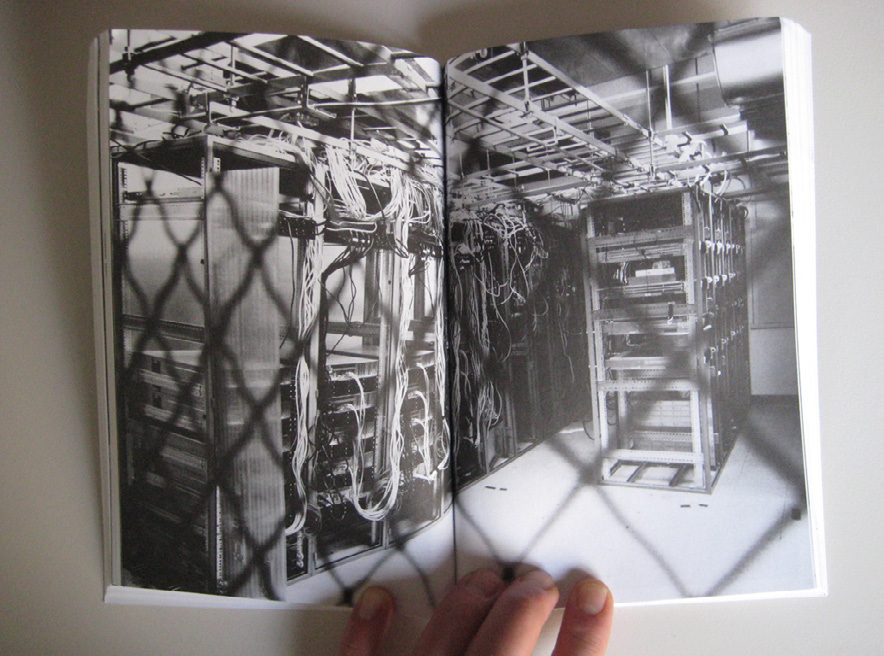

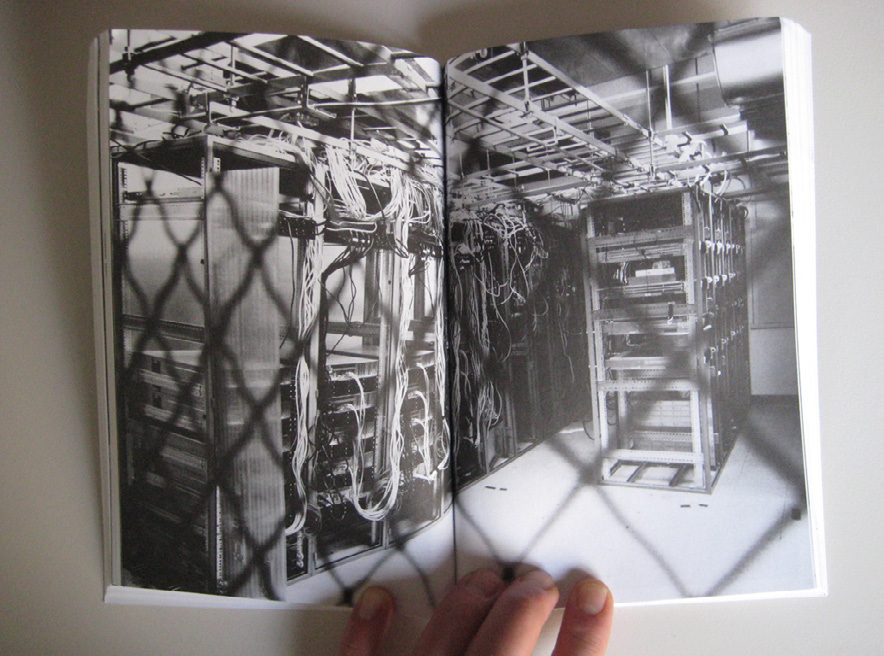

… and a picture of several server cabinets taken from Clog: Data Space (2012).

Or should we simply consider the data center figure (and its services) for what it is: the furtive icon of our modernity and of the radically modified relation we maintain to global territory? Should we therefore think about it in even more radical or speculative terms?

Based on the context above, we are calling for proposals under the title of Deterritorialized Living (Beijing sessions) / Inhabiting the Computer Cabinet.

Objective: An abstract space of 9 square meters is proposed for the competition, to be designed into a large computer cabinet that is inhabitable. Its exact shape, height and volume are to be defined by the candidates.

The cabinet can be situated in any natural or artificial place on Earth. It can also be located in an ideal environment (which should be defined in detail). Cooling (natural or artificial) is the only necessary condition: fresh air (and/or other cooling means) needs to enter the space and cool down the machines. The processing units then transform it into hot air charged with positive ions, which could in turn be used for other meaningful purposes or symbiotic uses before eventually being extracted.

The computer cabinet functions as a small data center. A certain number of servers, NAS (network-attached storage) units, virtual machines, etc. are therefore also installed within this space. The inhabitant(s) have to share the space with the machines in some way. The status and/or security of the data could also be addressed in creative ways.

The cabinet is part of a network and can be combined or aggregated with others to form a larger, possibly mobile, mediated and/or networked structure.

Goal: by taking advantage of the physical, informational, computational, chemical, biological, environmental or climatological features of the facility (inside and outside), the project focuses on creating a livable environment within the computer data cabinet (or personal data center). The outcome of the project could be to engage with the overall design or to develop a very specific device, object, software, interface and/or installation within this given framework.

Resources

http://www.deterritorialized.org is an artificial atmosphere conceived by fabric | ch that is delivered in the form of algorithmically constructed data feeds. It is composed of a set of web services and libraries that were developed in the context of a residency on the Tsinghua University campus in Beijing, between Spring and Summer of 2013 (at TASML). The open data feeds of this "geo-engineered" climate can be addressed and used by any custom-designed program or device (the website will be open from the 6th of September).

These open-source, “ambient deterritorialization” data feeds and environments in the form of Deterritorialized Air (N2, O2, CO2, Ar), Deterritorialized Daylight (Lm, IR, UV) and Deterritorialized Time can be freely used in the context of this competition.

Eligibility / Rules

The call is open to all students and young faculty members of Tsinghua University. The application should include the candidate’s name and school or department affiliation.

The Award of Distinction of US$ 1000 is open to international submissions.

Works submitted by individuals or teams are all welcome. However, we highly encourage interdisciplinary, transdepartmental team participation. All submissions should be written in English.

Submissions shall not be published or made public in other venues until a final decision by the jury is made public.

Submission Deadline

The submission deadline is October 14, 2013, 10pm Beijing time.

Any submission that is incomplete at the deadline will be excluded from judgment by the jury.

Schedule

- Competition launch: August 30, 2013

- Final submission: 10pm (Beijing time), October 14, 2013

- Announcement of winning entries and mentions: November 1, 2013

- Presentation by the winning team at the Lisbon Architecture Triennale Close, Closer, in Lisbon, Portugal: December 14, 2013

Submission Guidelines

Submit by email, in a single message with one pdf file attached, to call@deterritorialized.org. The maximum file size is 20 MB, and the subject of the message should be the title of your project.

File to be attached to your mail:

One pdf document in A3 horizontal layout (labelled: name-of-my-project.pdf), to be screened (the jury will look at your proposition on screen), containing the following, not exceeding 8 pages:

A - Information

- Title of the project.

- Name / surname, Tsinghua school or department affiliation (or profile for Award of Distinction), email address and date of birth of the representing member for the team (one person only).

- Names / surnames, Tsinghua school or department affiliation (or profile for Award of Distinction), email addresses and dates of birth of all other members of the team.

- Short descriptive biography of each individual (not exceeding 150 English words each).

- Links to additional visual materials, including video, website, etc., if needed.

B - Project

- Summary of the project in images and diagrams (most important elements, in screen quality).

- Project concept: describe the conceptual reasoning of the project.

- Project description: describe what it does practically.

- Technical description: describe the technical components of the project.

Note: Proposals should be written in English. The project title should be listed in the lower right corner of each page of all documents.

Evaluation guidelines

Transformative potential, speculation, risk taking, and the originality yet feasibility of the proposal will be key factors for the jury.

Quality of presentation and documentation (including technical, scientific description and visual presentation).

Note:

The project shouldn’t be a “fantasy”. Its feasibility should be proven in some ways (demo, mockups, proofs of concept, technical schemes), even though the technologies proposed might still be in their research phase.

Jury members

An international jury will select the winning proposal. Jury members will be disclosed along with the announcement of the winning candidates.

Awards / Prizes

Two prizes will be awarded by the jury, along with two honorary mentions.

1st prize: A trip for the winner or one representative of the winning team to Lisbon (including airfare and hotel) to present the results of their proposal during a talk, along with fabric | ch and TASML. Free admission to the Triennale Close, Closer.

2nd prize: 3000 RMB.

2 honorary mentions

Award of Distinction

Prize: 1000 US dollars

This award is open to international submissions from students and professionals.

About the organizers

fabric | ch is a Swiss-based art and architecture studio that combines experimentation, exhibition and production. It formulates new architectural proposals and produces singular livable spaces that mingle territories, algorithms, “geo-engineered” atmospheres and technologies. Through their works, the architects and scientists of fabric | ch have investigated the field of contemporary spaces, from network-related environments to the interfacing of dimensions and locations, as in their recent works about “spatial creolization”.

www.fabric.ch

TASML (Tsinghua University Art and Science Research Center Media Laboratory) is conceived as a research and production unit that aims to synergize the rich resources available among the University’s diverse research institutions and laboratories to create an incubator for crossbred, interdisciplinary experiments among artists, designers, scientists and technologists. TASML also functions as a center and a hub for worldwide exchange and collaboration both with academic and research institutions and with the global media art and design community. Through information sharing and knowledge transfer, TASML can also be seen as a catalyst of innovations for other disciplines in the arts and for the creative industry in general.

tasml.parsons.edu

COWORKLISBOA is a 750 m² shared office for startups, nano companies and independent or mobile professionals such as designers, architects, illustrators and translators, among others. Getting fat and lazy @ home? Come, it is at LX Factory and Central Station in Lisbon.

www.coworklisboa.pt

Close, Closer, the third Lisbon Architecture Triennale, will put forward an alternative reading of contemporary spatial practice from the 12th of September to the 15th of December in Lisbon, Portugal. For three months, chief curator Beatrice Galilee and curators Liam Young, Mariana Pestana and José Esparza Chong Cuy will examine the multiple possibilities of architectural output through critical and experimental exhibitions, events, performances and debates across the city.

www.close-closer.com
