Wednesday, September 21. 2016

Rainbow Installation Inside Bristol Biennial | #insideovertherainbow

Note: I would definitely like to do the same (or a bit differently with I-Weather --we almost did back in 2012 during 01SJ in San Francisco in fact, but we were missing a bit of light strength compared to the space--)!

Via Fubiz

-----

The artist Liz West continues inventing original and psychedelic installations, this time as part of the Bristol Biennial. Her project Our Colour is composed of filters that allow the lights to change and is a good way to study the reactions of the human brain when confronted with certain luminous atmospheres. After travelling through all the shades, each person usually ends up enjoying his or her favorite one.


Tuesday, July 05. 2016

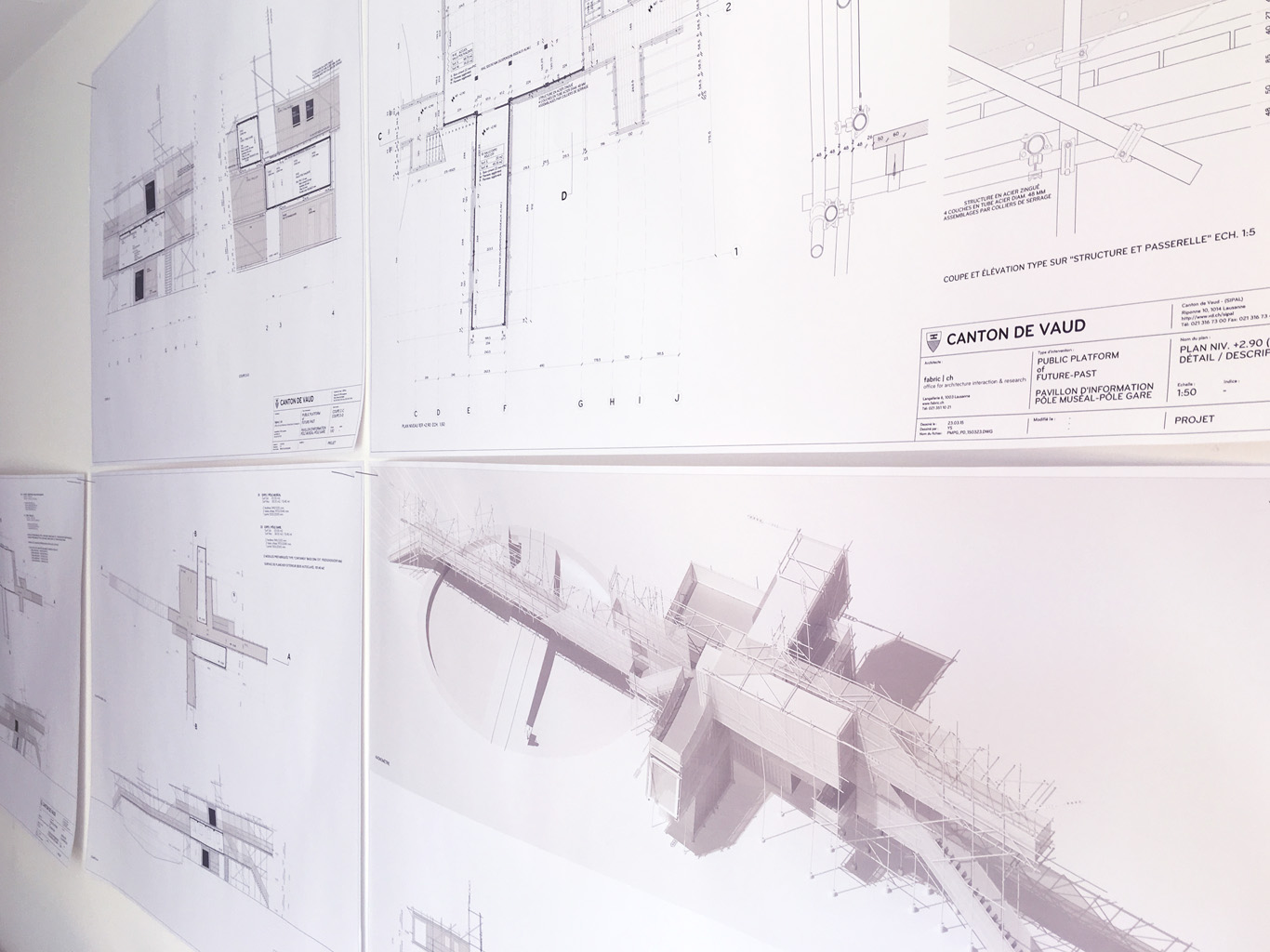

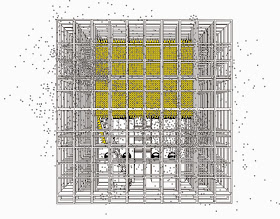

Public Platform of Future-Past, extended study phase. Bots, "Ar.I." & tools? | #data #monitoring #architecture

Note: in the continuity of my previous post/documentation concerning the project Platform of Future-Past (fabric | ch's recent winning competition proposal), I publish additional images (several) and explanations about the second phase of the Platform project, for which we were mandated by Canton de Vaud (SiPAL).

The first part of this article gives complementary explanations about the project, but I also take the opportunity to post related works and research we've done in parallel about particular implications of the platform proposal. This will hopefully bring a clearer understanding of the way we try to combine experimentations-exhibitions, the creation of "tools" and the design of larger proposals in our open process of work.

Notably, these related works concerned the approach to data, the breaking of the environment into computable elements and the inevitable questions raised by their uses as part of a public architecture project.

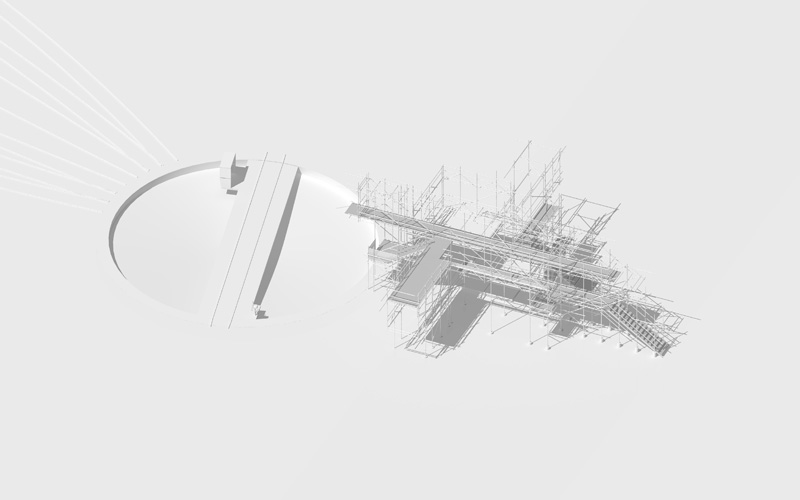

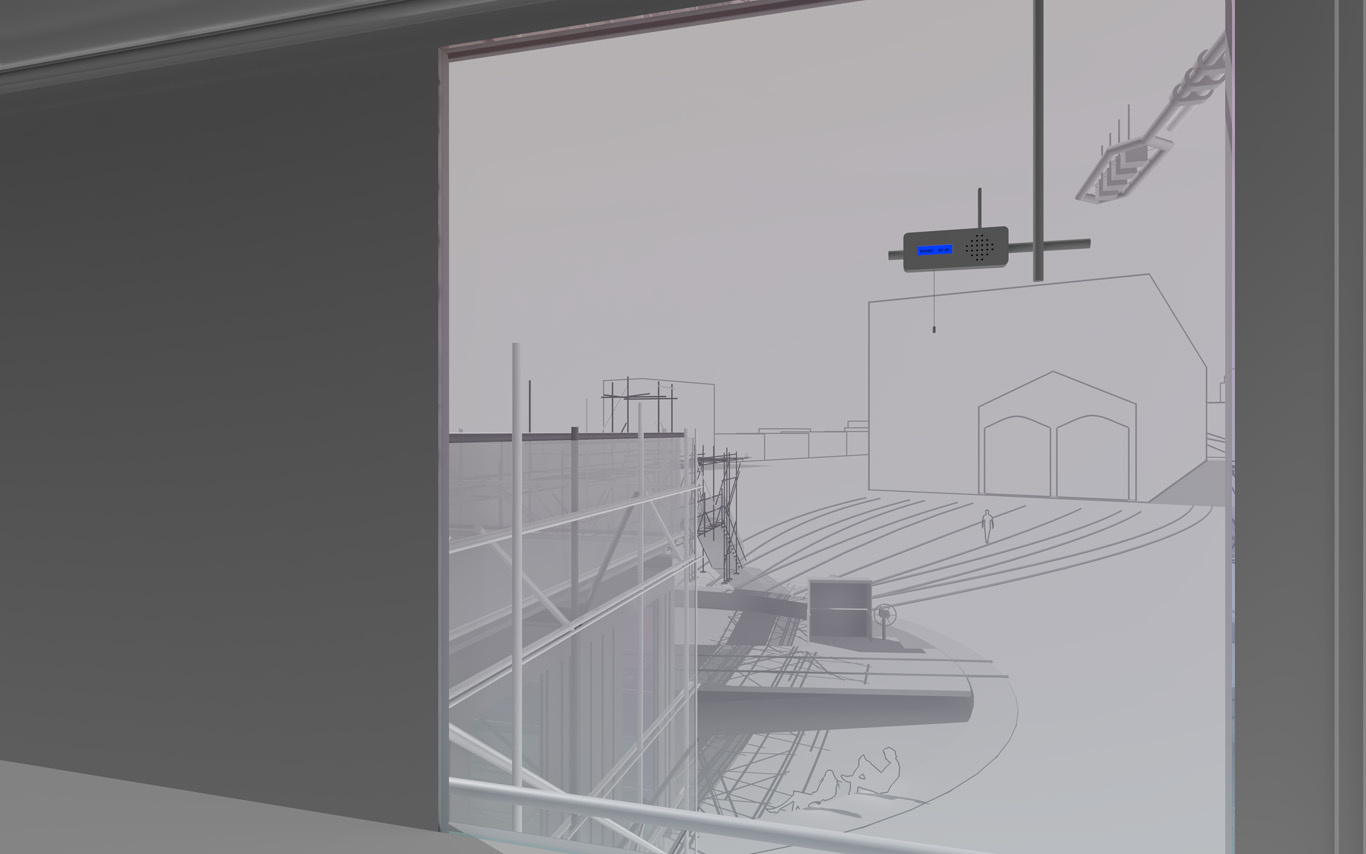

The information pavilion was potentially a slow, analog and digital "shape/experience shifter", as it was planned to be built in several succeeding steps over the years and possibly "reconfigure" to sense and look at its transforming surroundings.

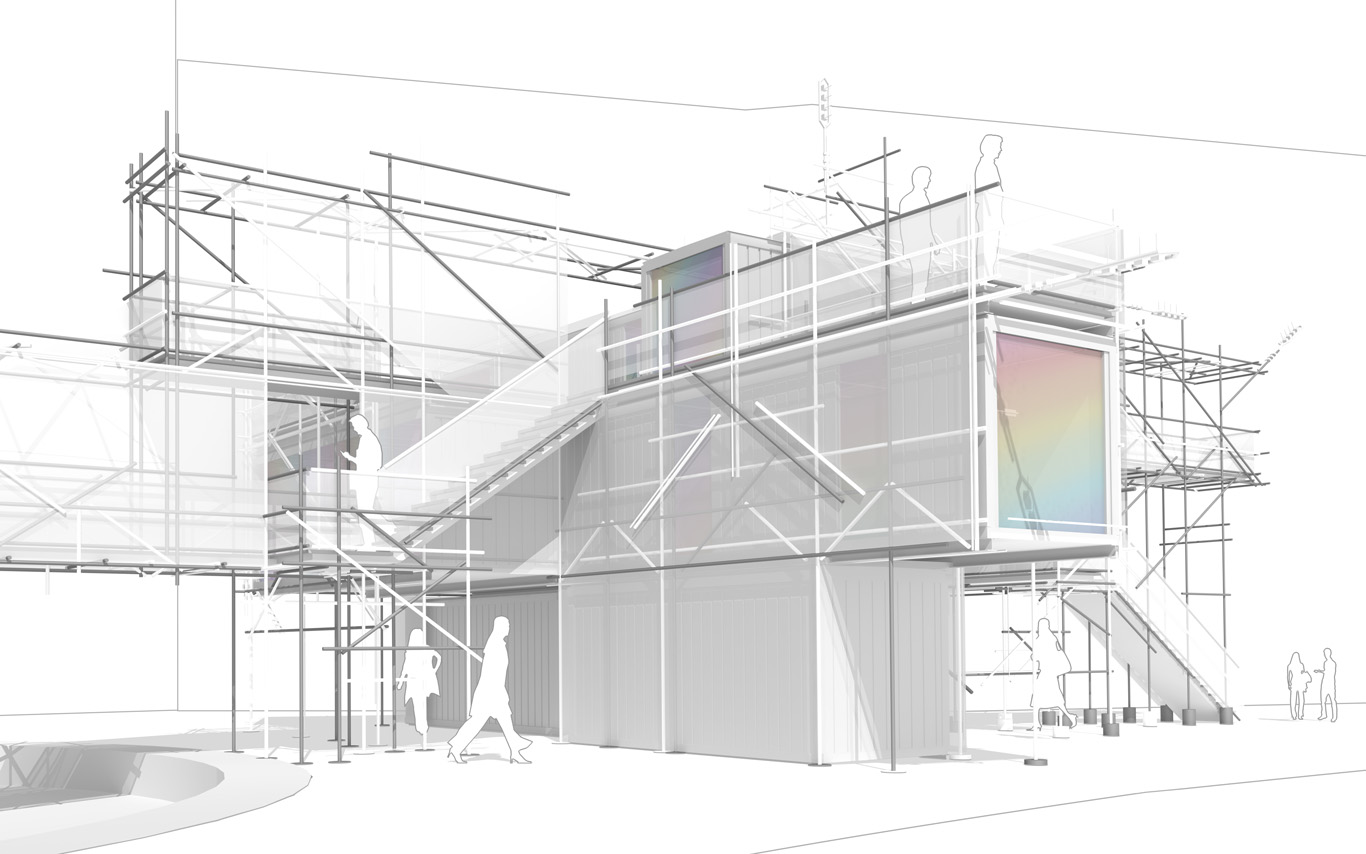

The pavilion therefore conserved an unfinished flavour as part of its DNA, inspired by that old kind of meshed construction (bamboo scaffolding), almost sketched. This principle of construction was used to help it "shift" if/when necessary.

In a general sense, the pavilion answered the conventional public program of an observation deck about a construction site. It also served the purpose of documenting the ongoing building process that often comes along. By doing so, we turned the "monitoring dimension" (production of data) of such a program into a base element of our proposal. That's where a former experimental installation helped us: Heterochrony.

As can be noticed, the word "Public" was added to the title of the project between the two phases, to become Public Platform of Future-Past (PPoFP) ... which we believe was important to add. This is because it was envisioned that the PPoFP would monitor and use environmental data concerning the direct surroundings of the information pavilion (but NO DATA about uses/users). We declared these data Public in this case, while the treatment of the monitored data would also become part of the project, "architectural" (more about it below).

For these monitored data to stay public, like the space of the pavilion itself, which would be part of the public domain and physically extend it, we had to ensure that these data wouldn't be used by a third-party private service. We needed to keep an eye on the algorithms that would treat the spatial data. Or better, write them according to our design goals (more about it below).

That's where architecture meets code and data (again) obviously...

By fabric | ch

-----

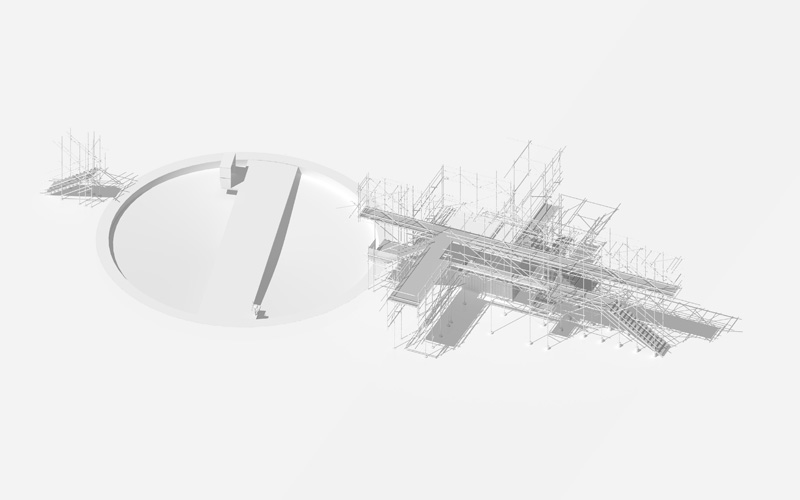

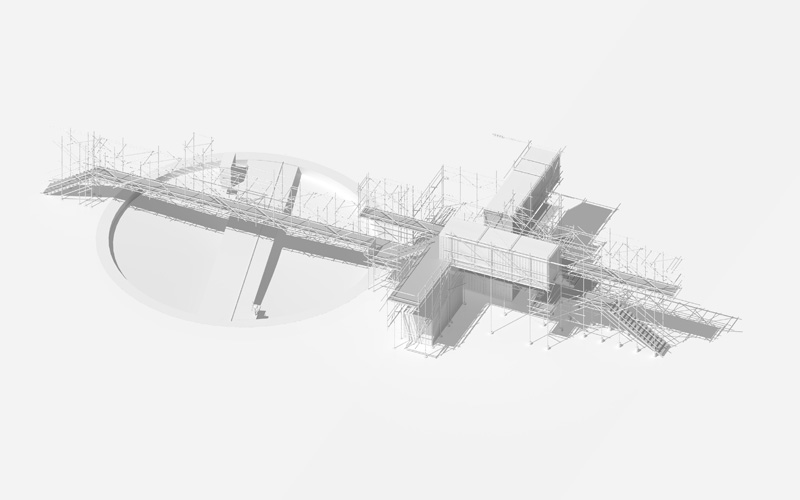

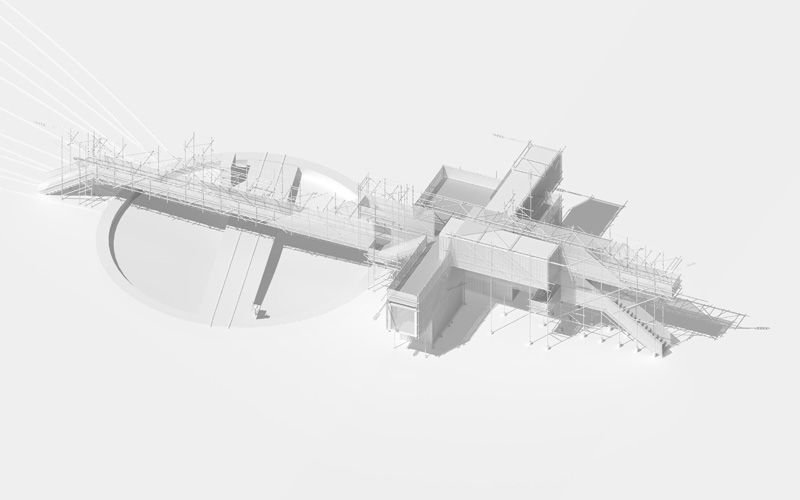

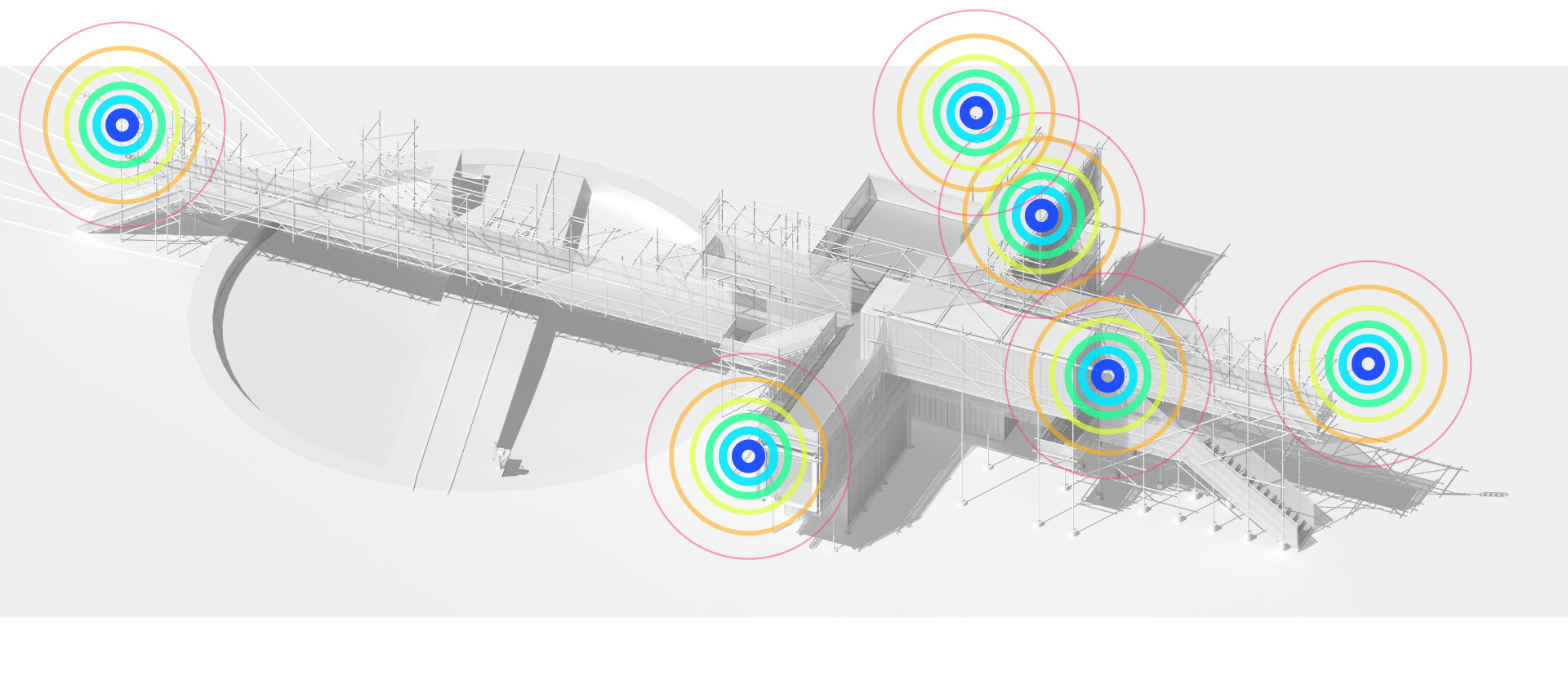

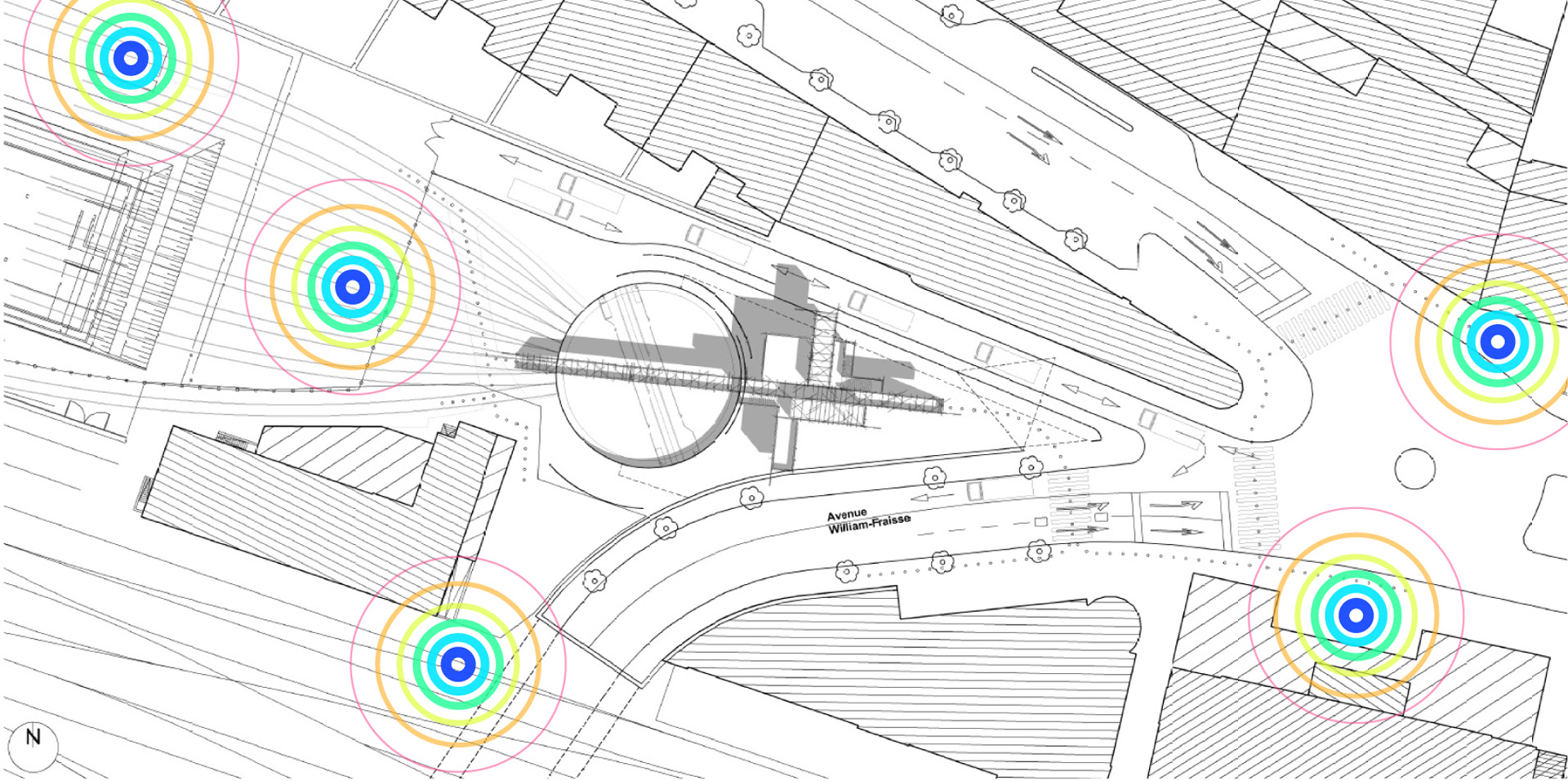

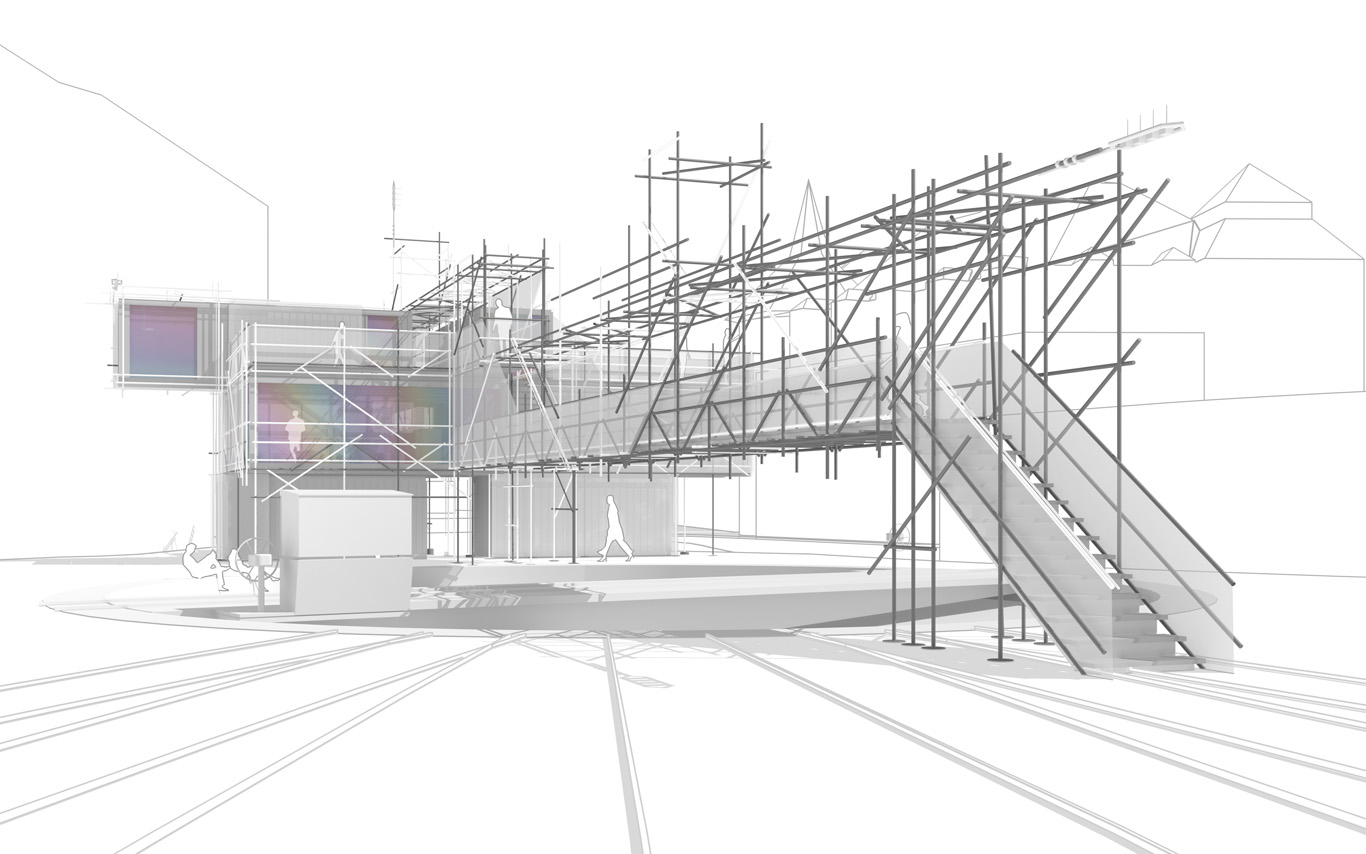

The Public Platform of Future-Past is a structure (an information and sightseeing pavilion), a Platform that overlooks an existing Public site while basically taking it as it is, in a similar way to an archeological platform over an excavation site.

The asphalt ground floor remains virtually untouched, with traces of former uses kept as they are, some quite old (a train platform linked to an early XXth century locomotive hall), some less (painted parking spaces). The surrounding environment will move and change considerably over the years while new constructions go on. The pavilion will monitor and document these changes. Hence the last part of its name: "Future-Past".

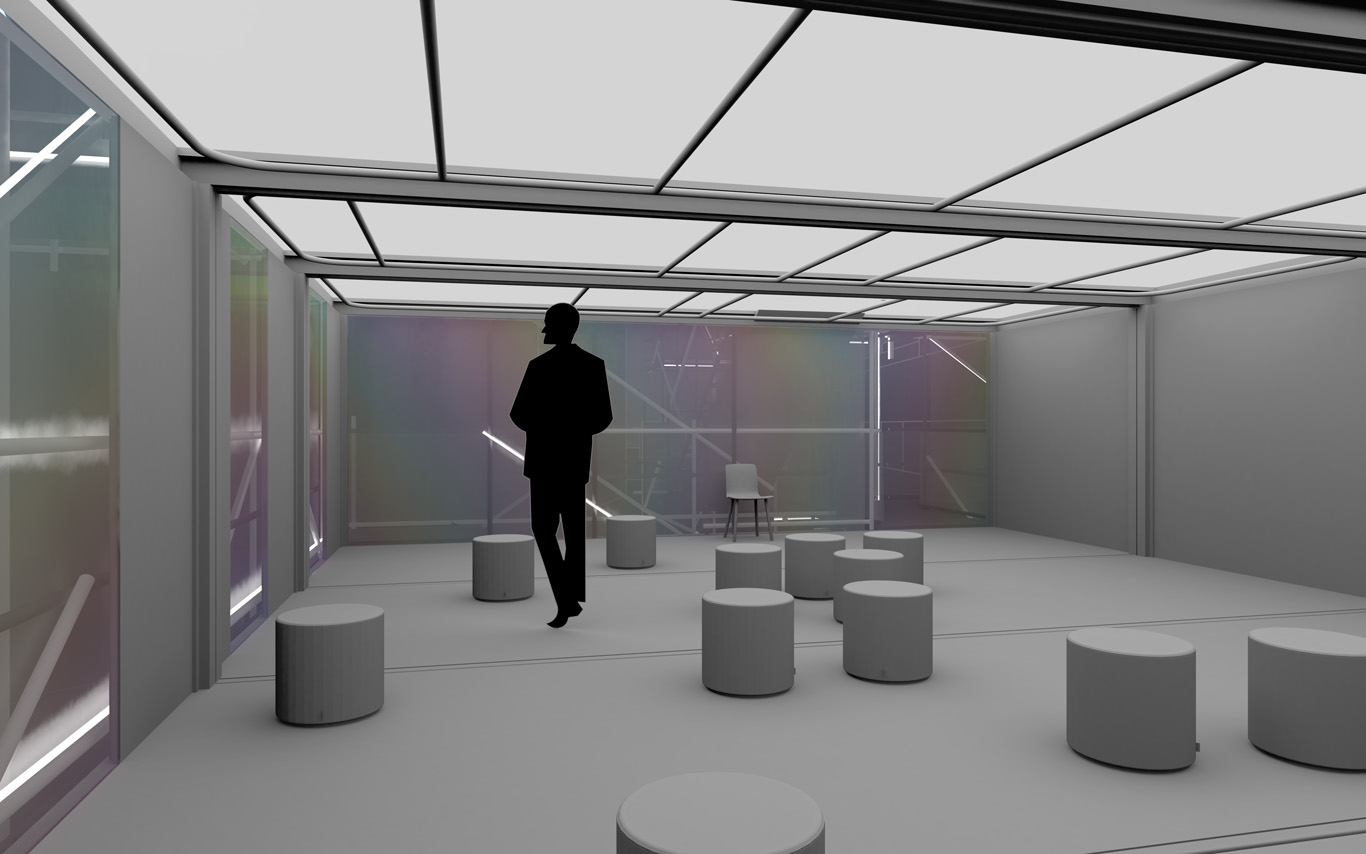

By nonetheless touching the site in a few points, the pavilion slightly reorganizes the area and triggers spaces for a small new outdoor cafe and a bike parking area. This enhanced ground floor program can work by itself, separated from the upper floors.

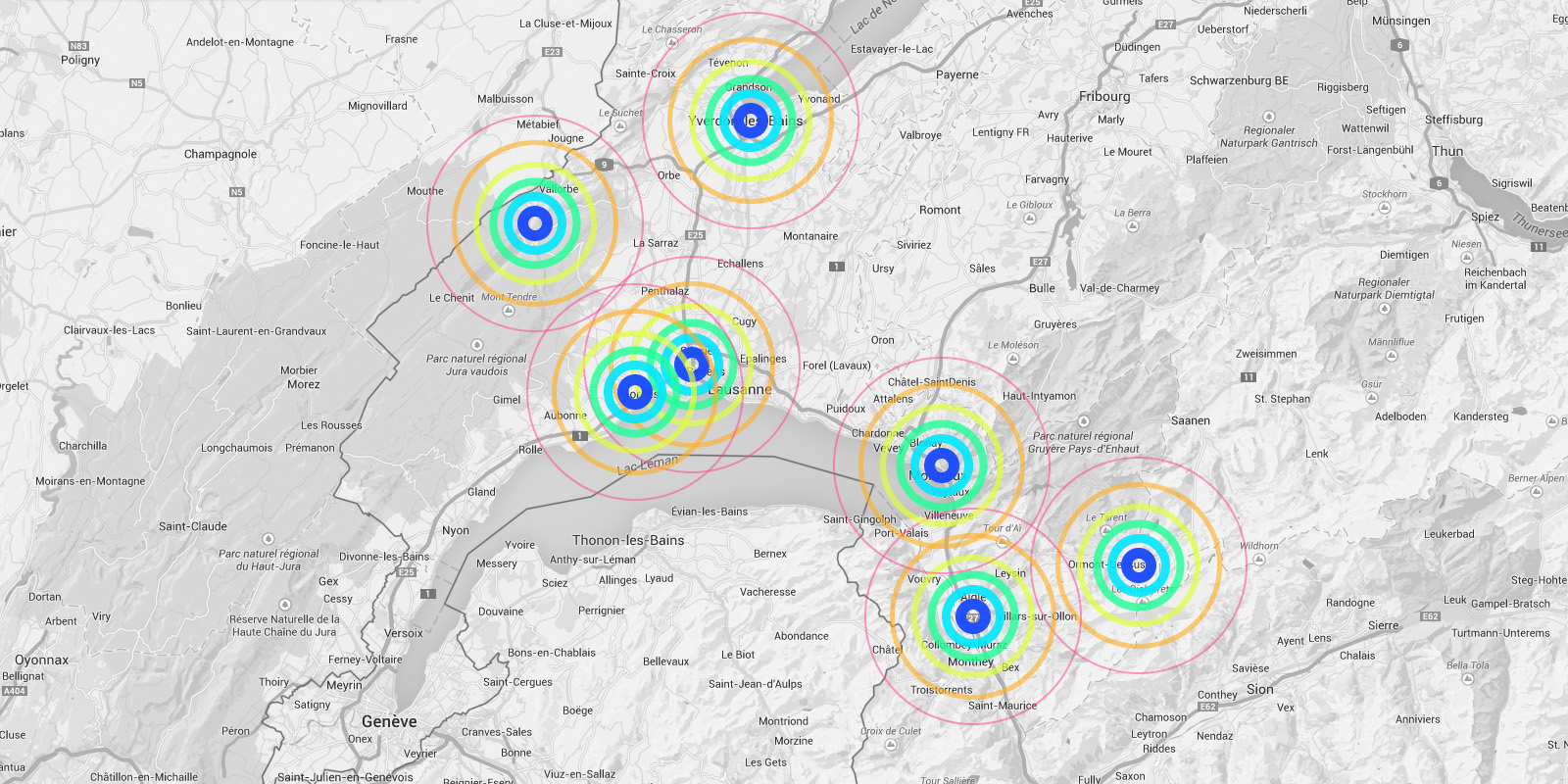

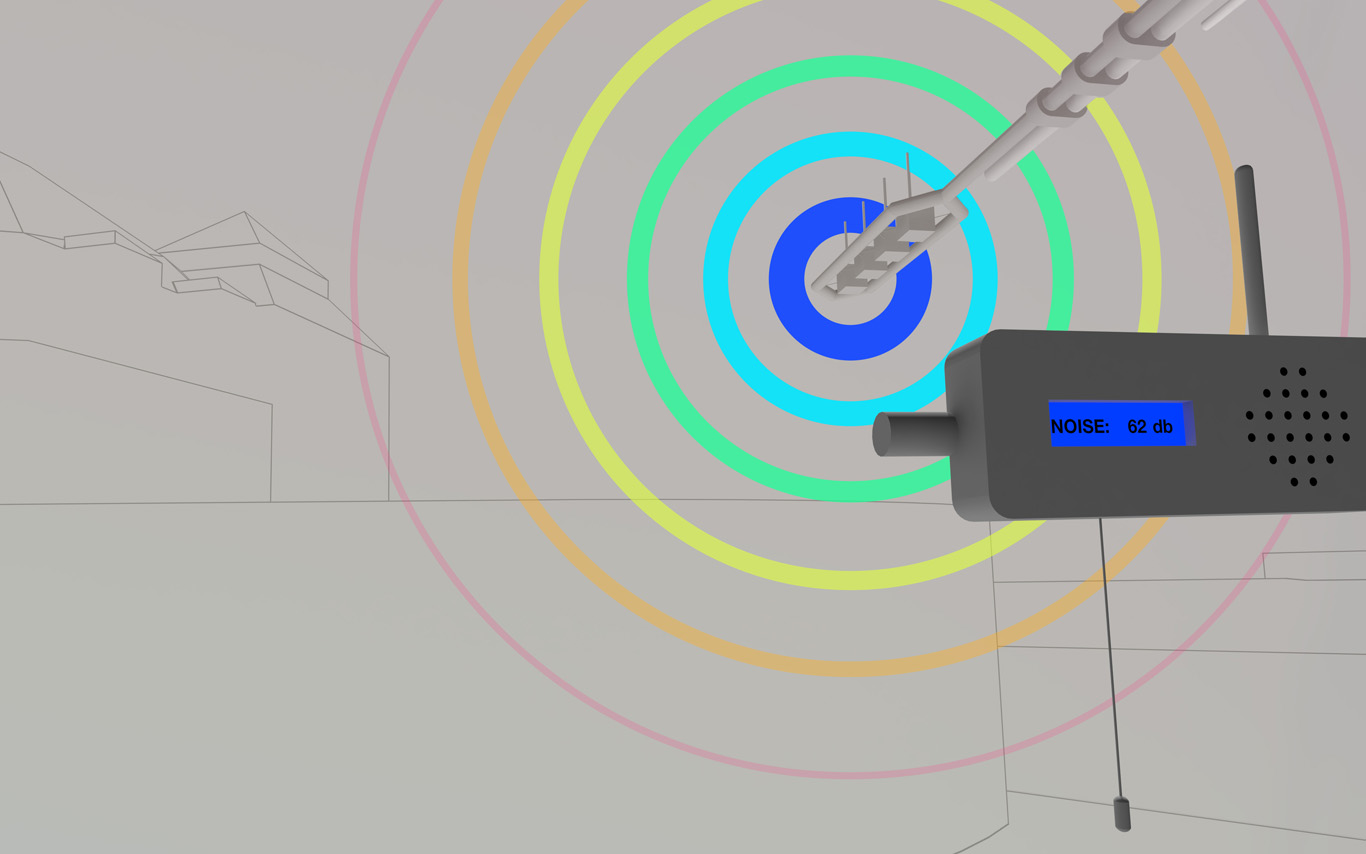

Several areas are linked to monitoring activities (input devices) and/or displays (in red, top -- these concern points of interest and views from the platform or elsewhere --). These areas consist of localized devices on the platform itself (5 locations) and satellite ones directly implanted in the three construction sites or even in distant cities of the larger political area --these are rather output devices-- concerned by the new constructions (three museums, two new large public squares, a new railway station and a new metro). Inspired by the prior similar installation in a public park during a festival -- Heterochrony (bottom image) --, these raw data can be of different natures: visual, audio, integers from sensors (%, °C, ppm, dB, lm, mb, etc.), ...

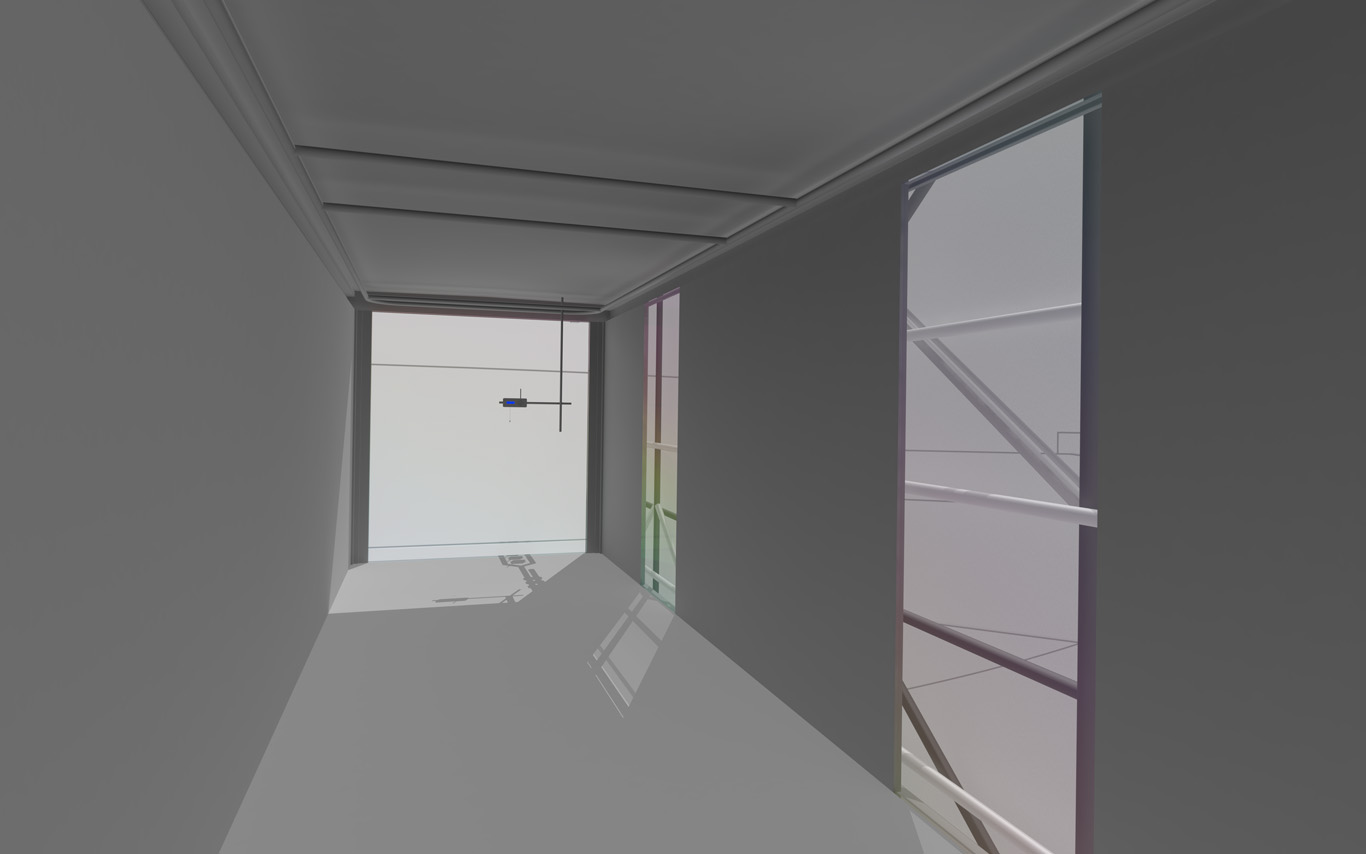

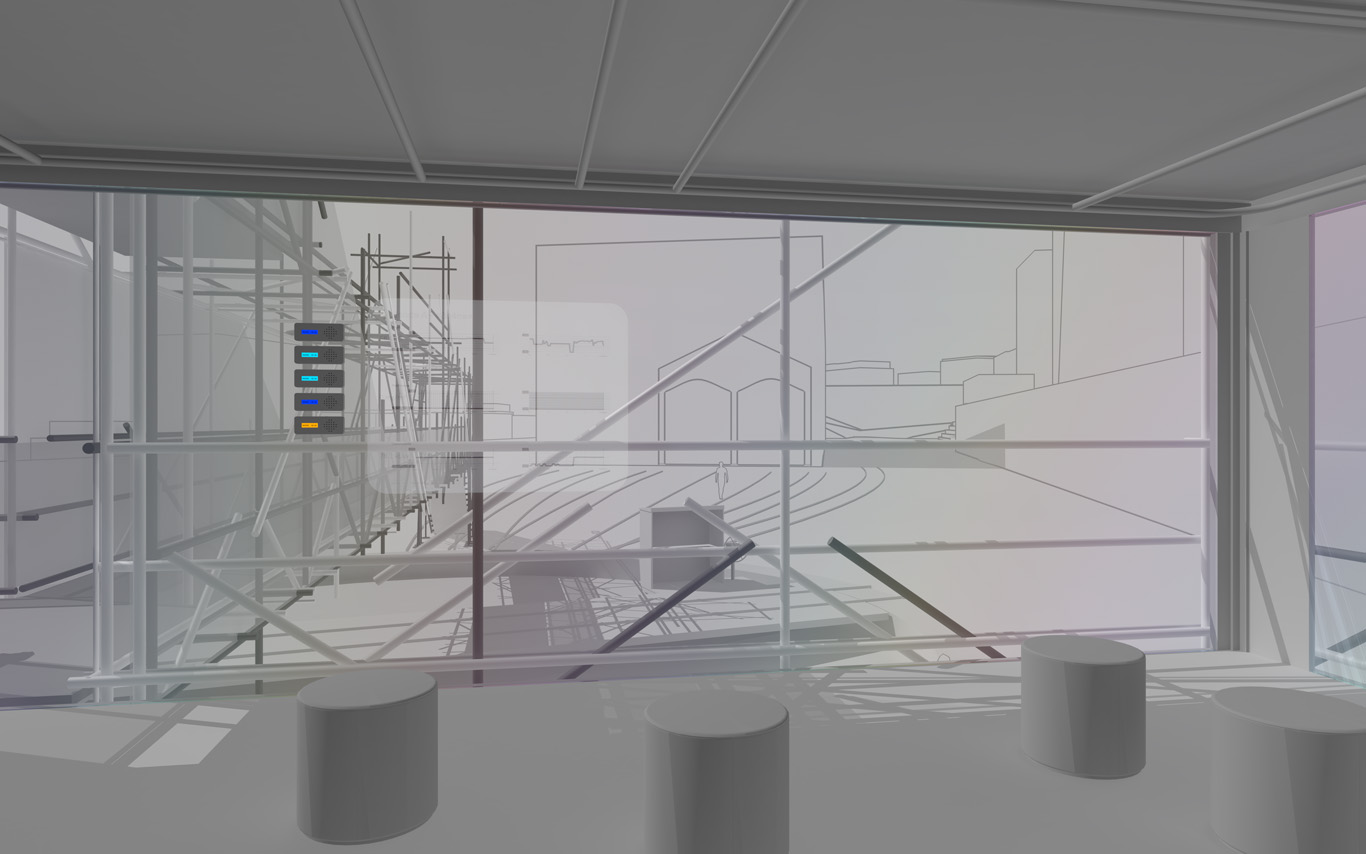

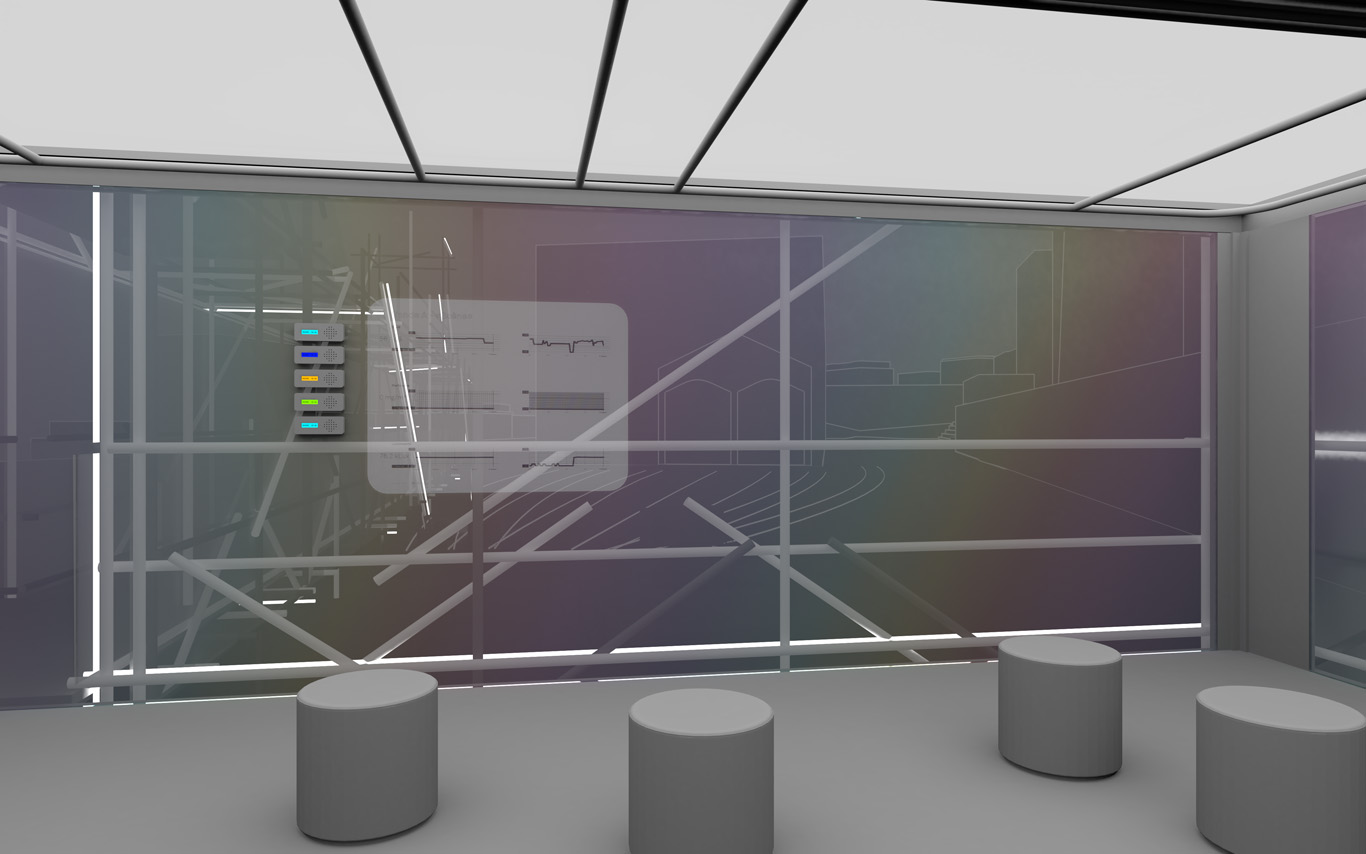

Input and output devices remain low-cost and simple in their expression: several input devices / sensors are placed outside of the pavilion in the structural elements and point toward areas of interest (construction sites or more specific parts of them). Directly in relation with these sensors and the sightseeing spots but on the inside are placed output devices with their recognizable blue screens. These are mainly voice interfaces: voice outputs driven by one bot according to architectural "scores" or algorithmic rules (middle image). Once the rules are designed, the "architectural system" runs on its own. That's why we've also named the system based on automated bots "Ar.I." It could stand for "Architectural Intelligence", as it is entirely part of the architectural project.

The coding of the "Ar.I." and use of data has the potential to easily become something more experimental, transformative and performative along the life of PPoFP.

Observers (users) and their natural "curiosity" play a central role: preliminary observations and monitoring are indeed the ones produced in an analog way by them (eyes and ears), in each of the 5 points of interest and through their wanderings. Extending this natural interest is a simple cord in front of each "output device" that they can pull on, which will then trigger a set of new measures by all the related sensors on the outside. This set of new data enters the database and becomes readable by the "Ar.I."
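The cord-pull mechanism described above can be sketched in a few lines. This is only an illustration, not the code of the project: the class names (`Reading`, `OutputDevice`), the `spell_data` helper, the point name and the sensor values are all hypothetical stand-ins, and the "database" is a plain in-memory list.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List, Tuple


@dataclass
class Reading:
    """One measurement stored in the shared database."""
    point: str
    sensor: str
    value: float
    unit: str


@dataclass
class OutputDevice:
    """Hypothetical model of one voice interface and its pull cord."""
    point: str
    # sensor name -> (function returning a value, unit); names are illustrative
    sensors: Dict[str, Tuple[Callable[[], float], str]] = field(default_factory=dict)

    def pull_cord(self, database: List[Reading]) -> List[Reading]:
        """Take one new measure from every related sensor and store it."""
        new_readings = [
            Reading(self.point, name, read(), unit)
            for name, (read, unit) in self.sensors.items()
        ]
        database.extend(new_readings)
        return new_readings


def spell_data(database: List[Reading], point: str) -> List[str]:
    """What a bot might 'spell': stored readings for one point, as short phrases."""
    return [f"{r.sensor}: {r.value} {r.unit}" for r in database if r.point == point]


database: List[Reading] = []
device = OutputDevice(
    point="north view",
    sensors={
        "temperature": (lambda: 18.5, "°C"),  # stand-in for a real sensor
        "sound level": (lambda: 62.0, "dB"),
    },
)
device.pull_cord(database)
```

Each pull appends one reading per related sensor, so the stored data grows with the visitors' curiosity, and a rule-based bot only needs read access to the same list.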

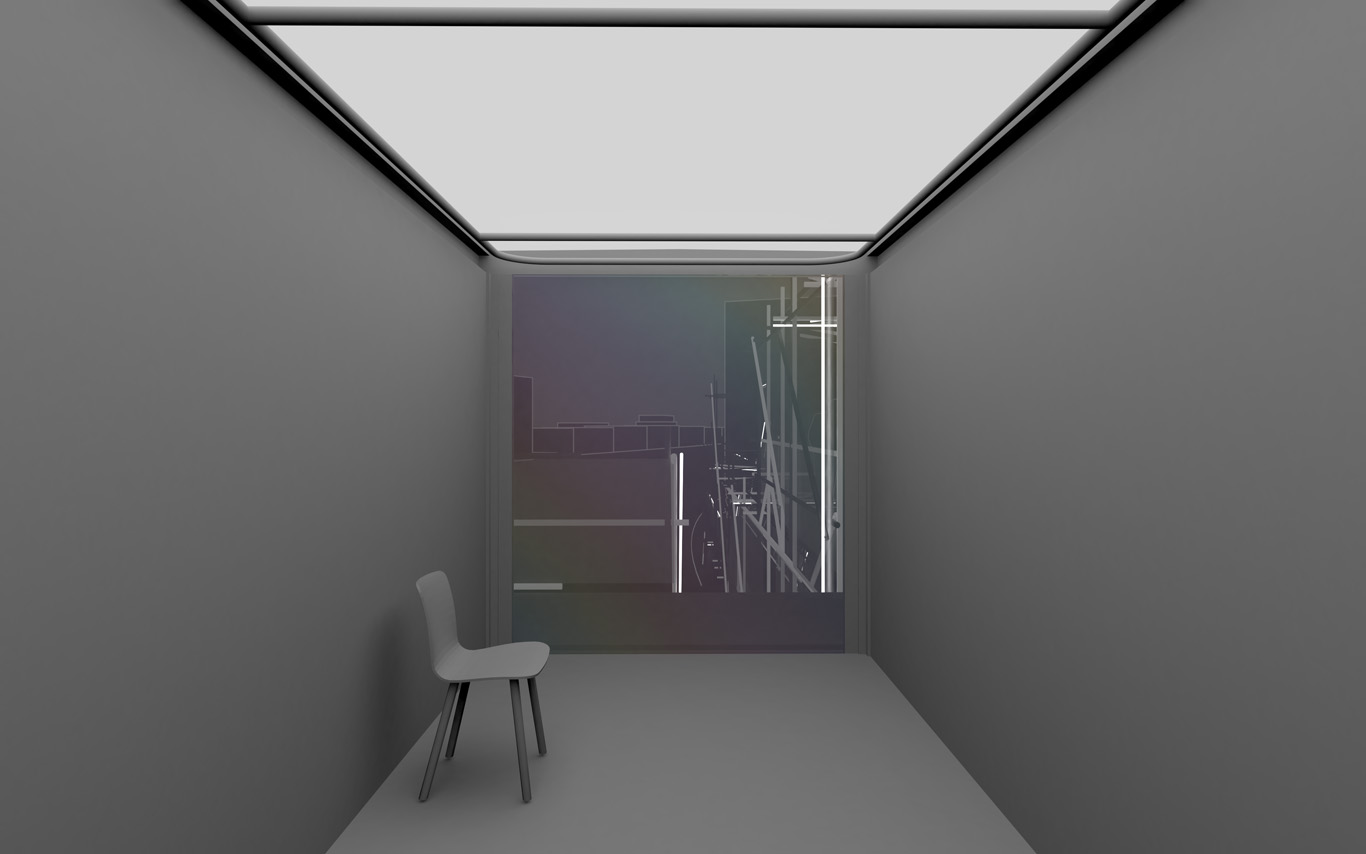

The whole part of the project regarding interaction and data treatments has been the subject of a dedicated short study (a document about this study can be accessed here --in French only--). Its main design implication is that the "Ar.I." takes part in the process of "filtering" which happens between the "outside" and the "inside", by taking part in the creation of a variable but specific "inside atmosphere" ("artificial artificial", as the outside has been artificial as well since the Anthropocene, hasn't it?). By doing so, the "Ar.I." bot fully takes its own part in the architecture's main program: triggering the perception of an inside, proposing patterns of occupation.

"Ar.I." computes spatial elements and mixes times. It can organize configurations for the pavilion (data, displays, recorded sounds, lightings, clocks). It can set it to a past, a present, but also a future estimated disposition. "Ar.I." is mainly a set of open rules and a vocal interface, with the exception of the common access and conference space, which is equipped with visual displays as well. "Ar.I." simply spells data at some times while at others, more intriguingly, it starts to give "spatial advice" about the environment data configuration.

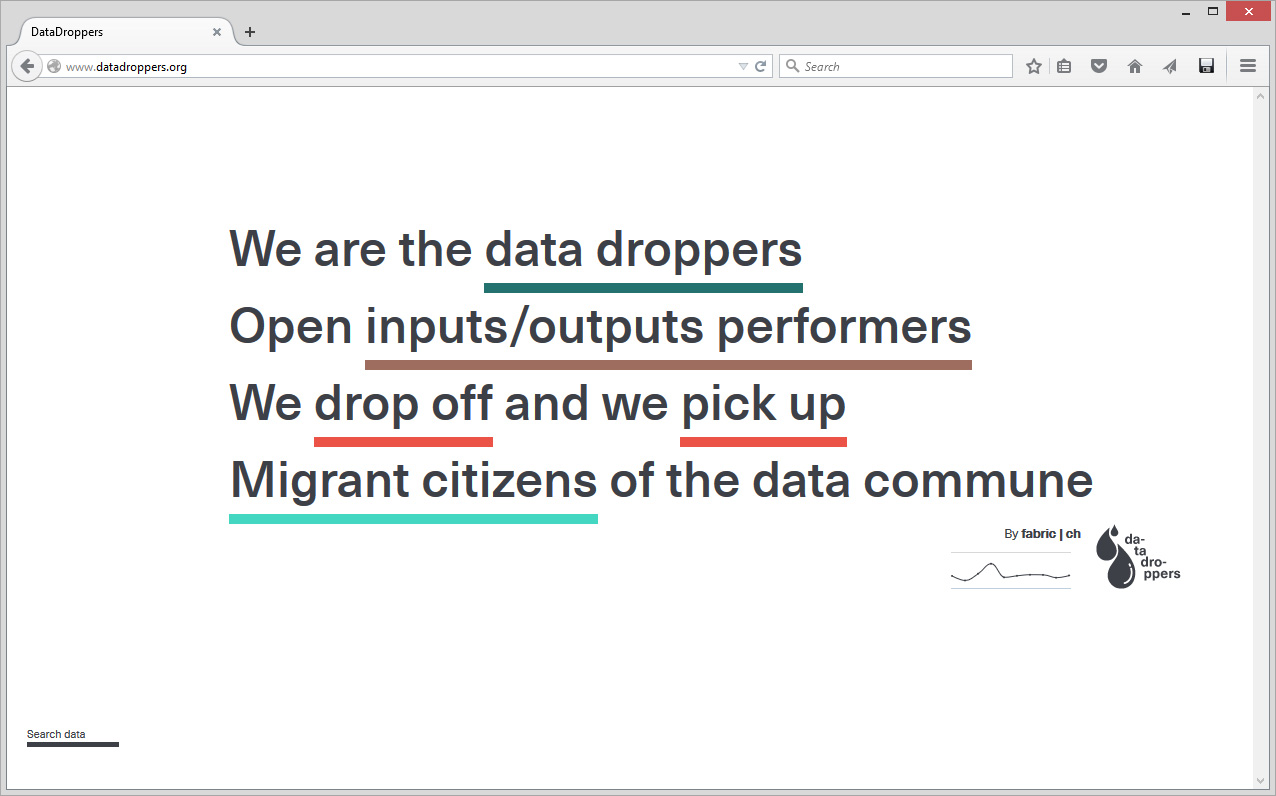

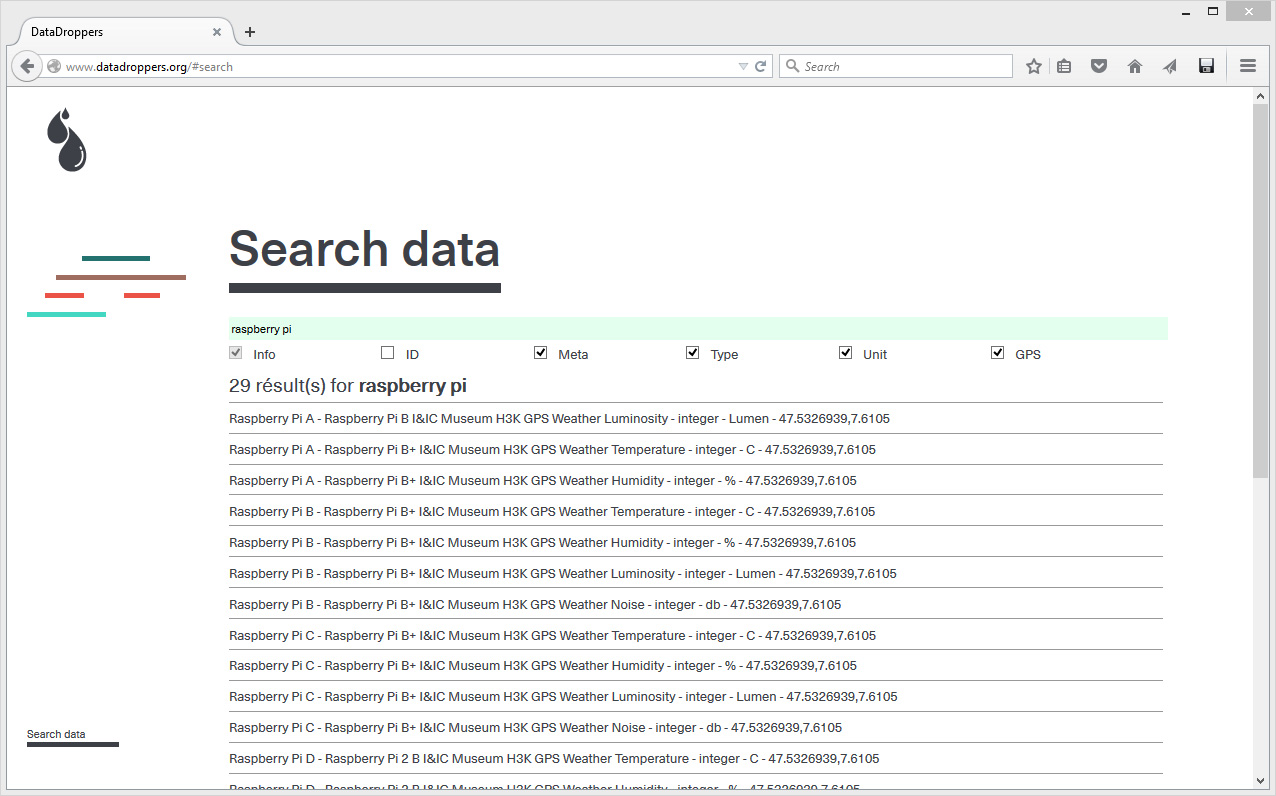

In parallel to Public Platform of Future-Past and in the frame of various research or experimental projects, scientists and designers at fabric | ch have been working to set up their own platform for declaring and retrieving data (more about this project, Datadroppers, here). It is a simple platform, but one that is adequate to our needs, on which we can develop as desired and where we know what is happening to the data. To further guarantee the nature of the project, a "data commune" was created out of it and we plan to release the code on Github.

In this context, we are also turning our own office into a test tube for various monitoring systems, so that we can assess the reliability and handling of different systems. It is also the occasion to further "hack" some basic domestic equipment and turn it into sensors, and to try new functions as well, with the help of our 3d printer in this case (middle image). Again, this experimental activity is turned into a side project, Studio Station (ongoing, with Pierre-Xavier Puissant), while keeping the general background goal of "concept-proofing" the different elements of the main project.

A common room (conference room) in the pavilion hosts and displays the various data. 5 small screen devices, 5 voice interfaces controlled for the 5 areas of interest and a semi-transparent data screen. Inspired again by what was experimented and realized back in 2012 during Heterochrony (top image).

----- ----- -----

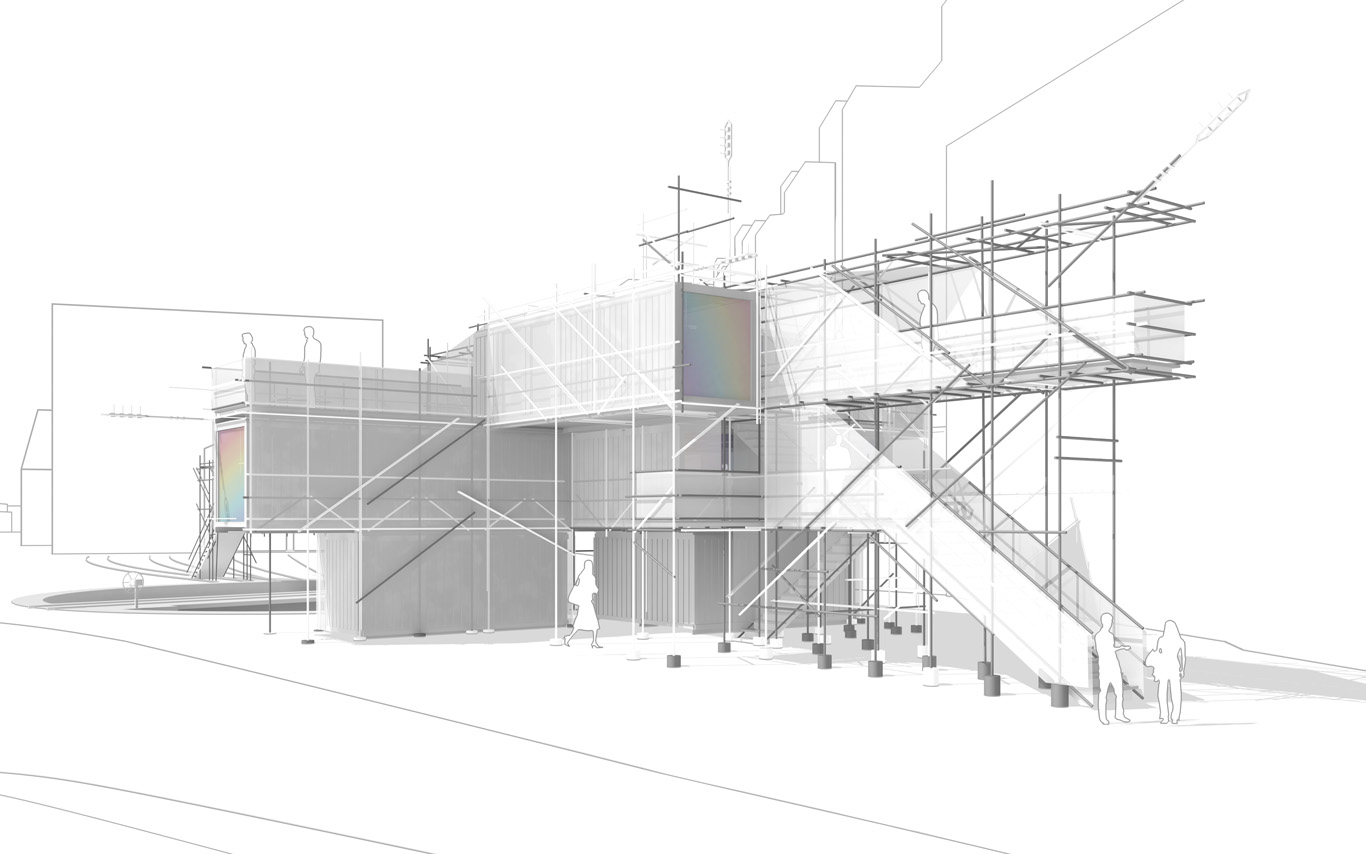

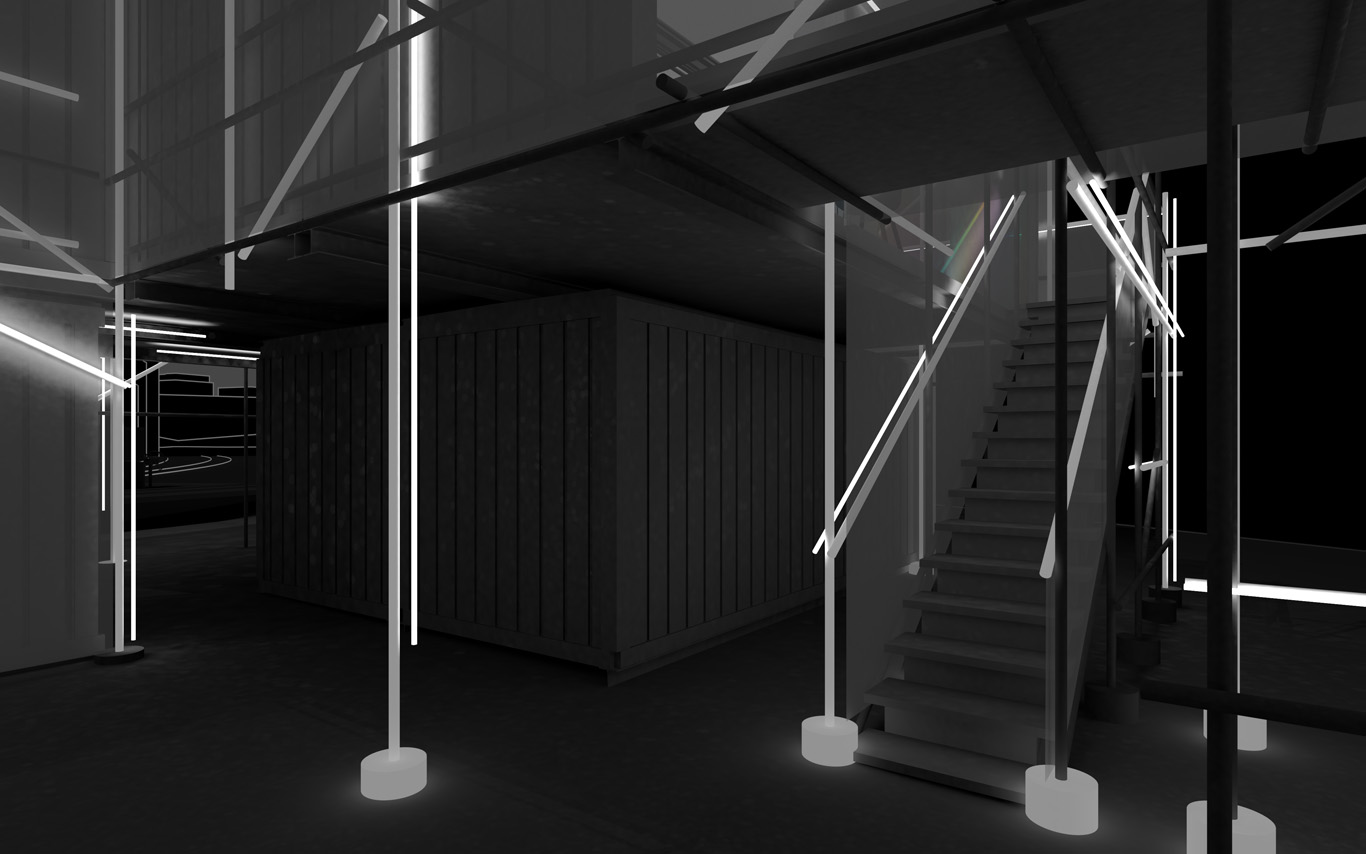

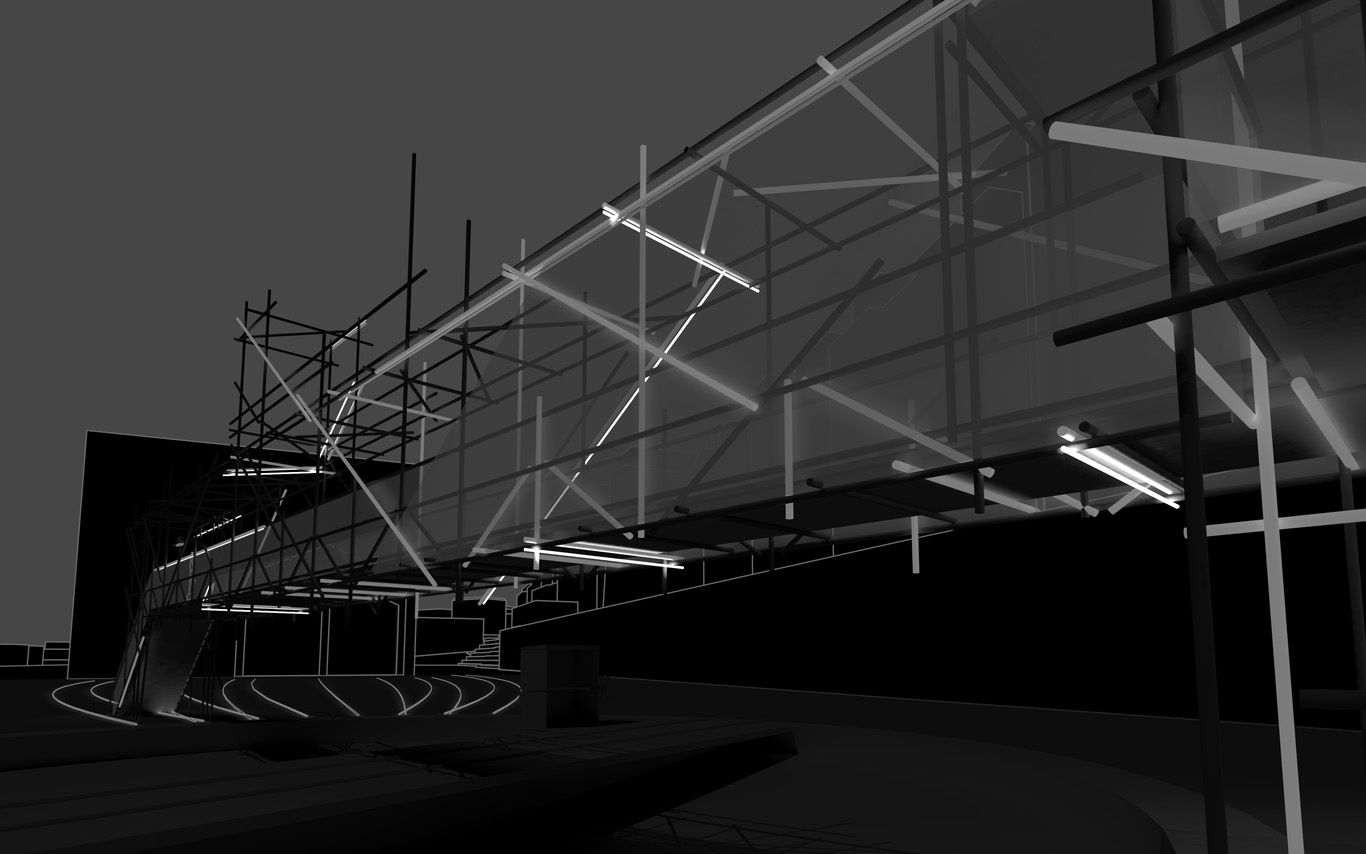

PPoFP, several images. Day, night configurations & a few comments

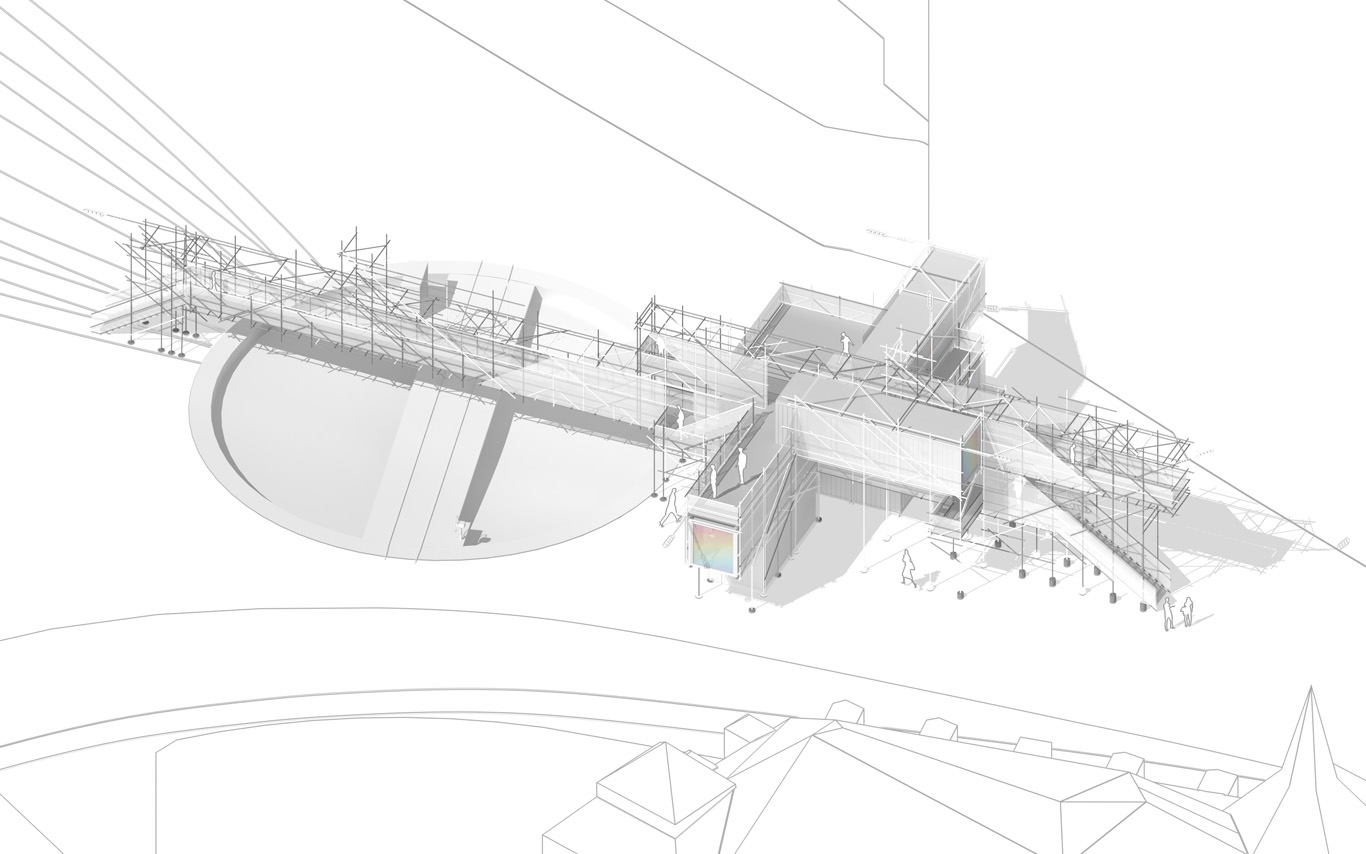

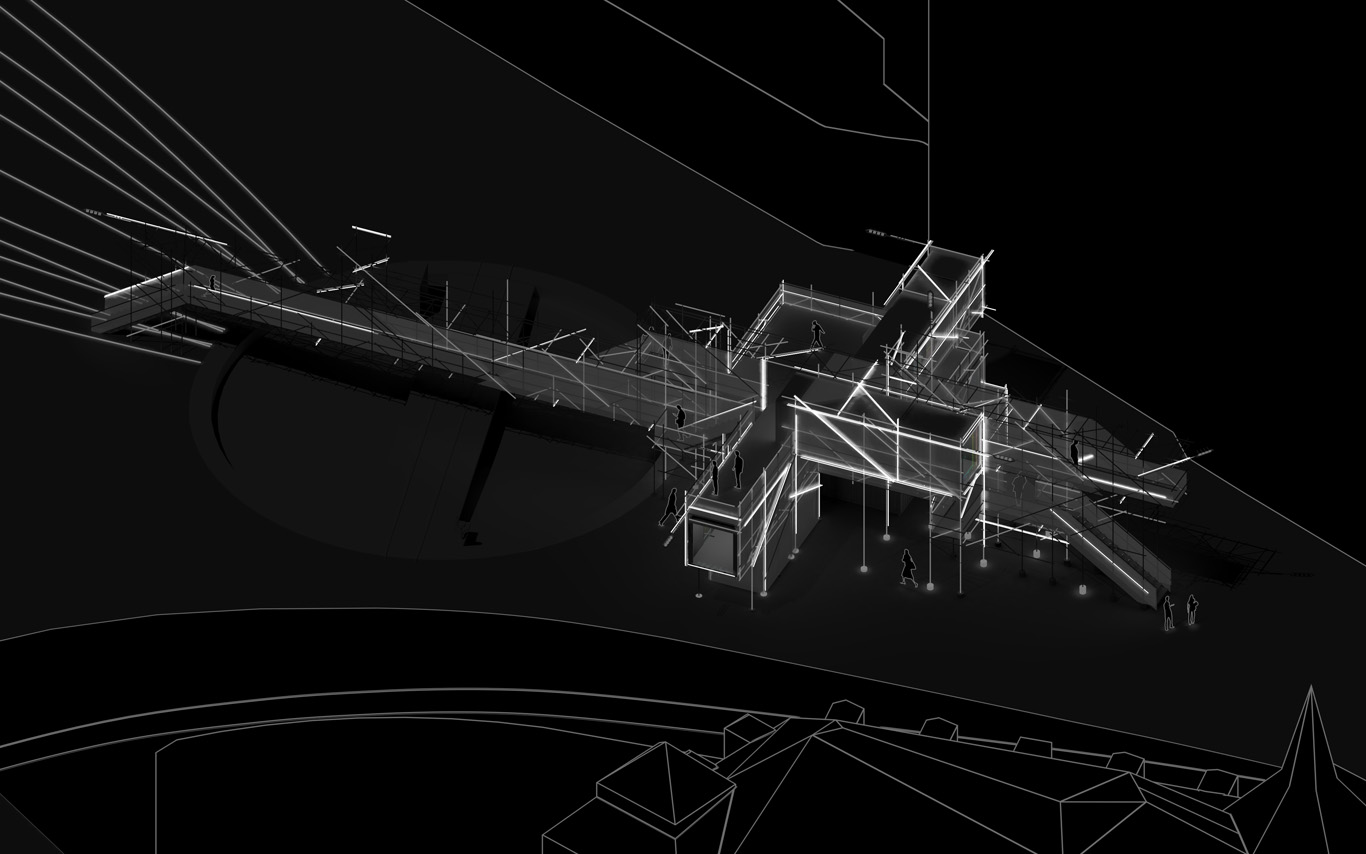

Public Platform of Future-Past, axonometric views day/night.

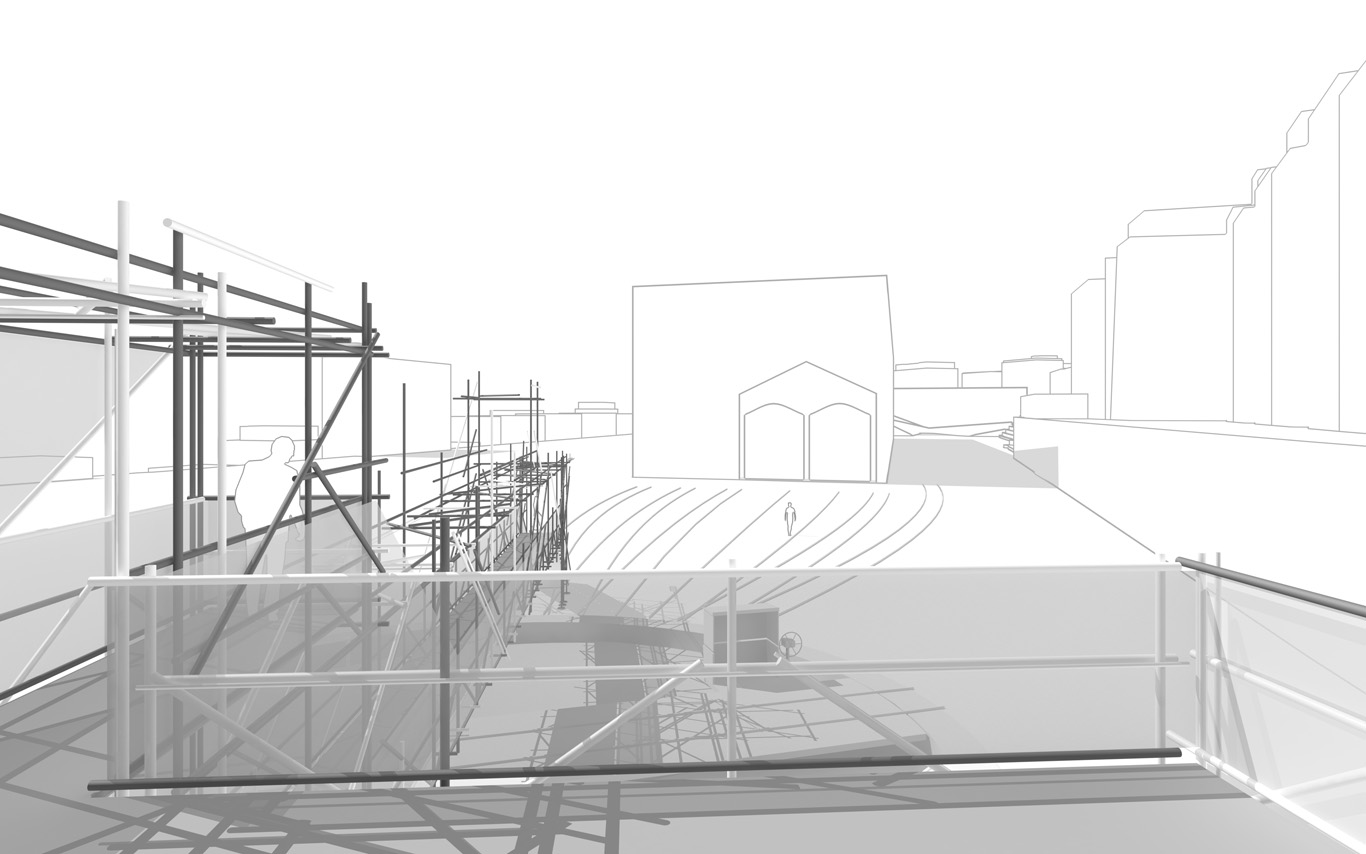

An elevated walkway that overlooks the almost archeological site (past-present-future). The circulations and views define and articulate the architecture and the five main "points of interest". These main points concentrate spatial events, infrastructures and monitoring technologies. Layer by layer, the surroundings are filtered by various means and become enclosed spaces.

Walks, views over transforming sites, ...

Data treatment, bots, voice and minimal visual outputs.

Night views, circulations, points of view.

Night views, ground.

Random yet controllable lights at night. Underlined areas of interest, points of "spatial densities".

Project: fabric | ch

Team: Patrick Keller, Christophe Guignard, Christian Babski, Sinan Mansuroglu, Yves Staub, Nicolas Besson.

Tuesday, June 14. 2016

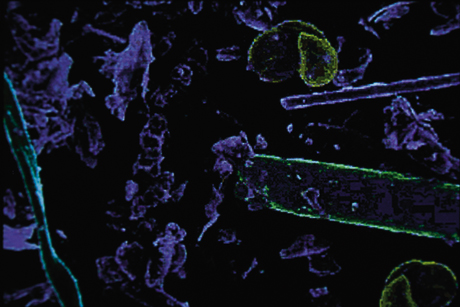

Breathable Food | #air #food #particles

Note: the architecture (of atmospheres) could become atomized into fine particles that aggregate in different manners along time, following different "rules" (these "rules" being the ones to be designed by the architect).

While we dig into sensors that monitor elements of the atmosphere (physical and non-physical elements), we're definitely looking for a kind of architecture that would "deal" with these elements/particles and recompose them.

Via Cabinet (Spring 2001)

-----

By David Gissen

In the history of architecture and design there have only been a few "effects"—electric light, forced air—that have had the capacity to cause massive environmental and behavioral shifts. Last year at Barcelona's annual design fair, the Catalonian designer Marti Guixe presented another—breathable food. "Pharma-food, a system of nourishment by breathing," is an appliance that was developed by Guixe to explore the transformation of food into pure information.

Dust Food Muesli. Photos: Inga Knölke.

Pharma-food joins the work of other, primarily European, designers who are exploring alternative regimens for such activities as washing or eating. One of Guixe's Catalonian contemporaries, Ana Mir, is exploring a technology that allows one to wash without water. Like Guixe's approach, this project would allow washing to occur anywhere. In their work, these designers not only free regimens from their fixed location in relation to certain products; they also free these activities from their traditional engagement with the body. Unlike designers such as Philippe Starck or Richard Sapper, who strive to revise traditional technologies, Guixe has discovered that the problem of eating does not involve the design of a new type of stove, sink, or refrigerator—the problem of eating requires finding a new mouth.

Pharma-BAR. Photo: Inga Knölke.

Guixe, who has been studying alternative forms of eating for several years, realized that the breathing of "food" already occurs via the inhalation of dust that hangs in the air at work and at home. Guixe hypothesized that this form of eating, from which one gains a minuscule amount of minerals and vitamins, could be transformed into a more potent meal, a "dust-muesli," that would supply a powerful dose of nutrients. The Pharma-Food appliance, which sprays this ærosolized nutrition, connects to a computer and requires Microsoft Excel to enter exact values for such things as riboflavin, vitamin C, and protein. Combinations of these nutrients are saved on the computer as documents with names such as "SPAMT," which has the nutrient "language" of tomatoes and bread, and "Costa Brova," a "seafood" dish that is heavy on the iodine and light on carbohydrates. Guixe imagines diners composing these "meals" and sending them as e-mail attachments to other owners of the Pharma-food emitter. "Like MP3," says Guixe.

While Guixe has explored the experience of eating this information, less explored and of equal significance is where this type of eating can now take place. Guixe imagines Pharma-food in a special "Pharma-bar," essentially a simple room with tables and chairs and several emitters. But why is this necessary when he has liberated food from kitchens and from forms of ingestion that require utensils and dishes? Pharma-food will allow eating to occur anywhere at any time; on subways, in cars, in our beds, while exercising, sleeping, or making love. Most interesting is what effect this device will have on the home, particularly the American home, which is dominated by the kitchen. While technologies are given free range at work and in other public spheres, the home is typically the place where devices such as Pharma-food are tamed and held in balance by a previous technology that the new device is meant to replace. Central heat did not eliminate the fireplace; it allowed this formerly grimy, soot-filled artifact to become an æsthetic symbol and heart of the American home. People began using fireplaces less, but when they did, they burned wood in them again instead of coal. Similarly, cooking the monthly meal may involve stoking a wood-fueled, cast-iron stove while simultaneously breathing a few appetizers with friends.

-

David Gissen is associate curator for architecture and design at the National Building Museum in Washington, D.C. He is currently developing an exhibition on human conveyance (elevators, escalators and moving sidewalks) and one on flying buildings.


Tuesday, April 28. 2015

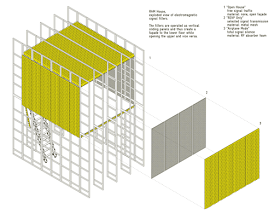

Signal-Blocking Architecture and the Faraday Home | #faraday

Via BLDGBLOG

-----

[Image: "RAM House" by Space Caviar].

An interesting new project by Space Caviar asks, "Does your home have an airplane mode?"

Exploring what it could mean to design future homes so that they offer an optional state of complete electromagnetic privacy, they have put together a "domestic prototype" in which the signal-blocking capabilities of new architectural materials are heavily emphasized, becoming a structural component of the house itself.

[Image: "RAM House" by Space Caviar].

In other words, why just rely on aftermarket home alterations such as WiFi-blocking paint, when you can actually factor the transmission of signals through architectural space into the design of your home in the first place?

[Image: "RAM House" by Space Caviar].

Space Caviar call this "a new definition of privacy in the age of sentient appliances and signal-based communication," in the process turning the home into "a space of selective electromagnetic autonomy."

As the space of the home becomes saturated by “smart” devices capable of monitoring their surroundings, the role of the domestic envelope as a shield from an external gaze becomes less relevant: it is the home itself that is observing us. The RAM House responds to this near-future scenario by proposing a space of selective electromagnetic autonomy. Within the space’s core, Wi-Fi, cellphone and other radio signals are filtered by various movable shields of radar-absorbent material (RAM) and faraday meshing, preventing signals from entering and—more importantly—escaping. Just as a curtain can be drawn to visually expose the domestic interior of a traditional home, panels can be slid open to allow radio waves to enter and exit, when so desired.

The result is the so-called "RAM House," named for those "movable shields of radar-absorbent material," and it will be on display at the Atelier Clerici in Milan from April 14-19.

Wednesday, December 24. 2014

EmTech: Google’s Internet “Loon” Balloons Will Ring the Globe within a Year | #atmosphere

Note: Google Earth or literally and progressively Google's Earth? It could also be considered as the start of the privatization of the lower stratosphere, where up to now, no artifacts were permanently present.

-----

Google X research lab boss Astro Teller says experimental wireless balloons will test delivering Internet access throughout the Southern Hemisphere by next year.

By Tom Simonite

Astro Teller & a Project Loon prototype sails skyward.

Within a year, Google is aiming to have a continuous ring of high-altitude balloons in the Southern Hemisphere capable of providing wireless Internet service to cell phones on the ground.

That’s according to Astro Teller, head of the Google X lab, which the company established with the purpose of working on “moon shot” research projects. He spoke at MIT Technology Review’s EmTech conference in Cambridge today.

Teller said that the balloon project, known as Project Loon, was on track to meet the goal of demonstrating a practical way to get wireless Internet access to billions of people who don’t have it today, mostly in poor parts of the globe.

For that to work, Google would need a large fleet of balloons constantly circling the globe so that people on the ground could always get a signal. Teller said Google should soon have enough balloons aloft to prove that the idea is workable. “In the next year or so we should have a semi-permanent ring of balloons somewhere in the Southern Hemisphere,” he said.

Google first revealed the existence of Project Loon in June 2013 and has tested Loon Balloons, as they are known, in the U.S., New Zealand, and Brazil. The balloons fly at 60,000 feet and can stay aloft for as long as 100 days, their electronics powered by solar panels. Google’s balloons have now traveled more than two million kilometers, said Teller.

The balloons provide wireless Internet using the same LTE protocol used by cellular devices. Google has said that the balloons can serve data at rates of 22 megabits per second to fixed antennas, and five megabits per second to mobile handsets.

Google’s trials in New Zealand and Brazil are being conducted in partnership with local cellular providers. Google isn’t currently in the Internet service provider business—despite dabbling in wired services in the U.S. (see “Google Fiber’s Ripple Effect”)—but Teller said Project Loon would generate profits if it worked out. “We haven’t taken a dime of revenue, but if we can figure out a way to take the Internet to five billion people, that’s very valuable,” he said.


Tuesday, December 23. 2014

Can Sucking CO2 Out of the Atmosphere Really Work? | #atmosphere

-----

A Columbia scientist and his startup think they have a plan to save the world. Now they have to convince the rest of us.

By Eli Kintisch

CTO and co-founder Peter Eisenberger in front of Global Thermostat’s air-capturing machine.

Physicist Peter Eisenberger had expected colleagues to react to his idea with skepticism. He was claiming, after all, to have invented a machine that could clean the atmosphere of its excess carbon dioxide, making the gas into fuel or storing it underground. And the Columbia University scientist was aware that naming his two-year-old startup Global Thermostat hadn’t exactly been an exercise in humility.

But the reception in the spring of 2009 had been even more dismissive than he had expected. First, he spoke to a special committee convened by the American Physical Society to review possible ways of reducing carbon dioxide in the atmosphere through so-called air capture, which means, essentially, scrubbing it from the sky. They listened politely to his presentation but barely asked any questions. A few weeks later he spoke at the U.S. Department of Energy’s National Energy Technology Laboratory in West Virginia to a similarly skeptical audience. Eisenberger explained that his lab’s research involves chemicals called amines that are already used to capture concentrated carbon dioxide emitted from fossil-fuel power plants. This same amine-based technology, he said, also showed potential for the far more difficult and ambitious task of capturing the gas from the open air, where carbon dioxide is found at concentrations of 400 parts per million. That’s up to 300 times more diffuse than in power plant smokestacks. But Eisenberger argued that he had a simple design for achieving the feat in a cost-effective way, in part because of the way he would recycle the amines. “That didn’t even register,” he recalls. “I felt a lot of people were pissing on me.”

The next day, however, a manager from the lab called him excitedly. The DOE scientists had realized that amine samples sitting around the lab had been bonding with carbon dioxide at room temperature—a fact they hadn’t much appreciated until then. It meant that Eisenberger’s approach to air capture was at least “feasible,” says one of the DOE lab’s chemists, Mac Gray.

Five years later, Eisenberger’s company has raised $24 million in investments, built a working demonstration plant, and struck deals to supply at least one customer with carbon dioxide harvested from the sky. But the next challenge is proving that the technology could have a transformative impact on the world, befitting his company’s name.

The need for a carbon-sucking machine is easy to see. Most technologies for mitigating carbon dioxide work only where the gas is emitted in large concentrations, as in power plants. But air-capture machines, installed anywhere on earth, could deal with the 52 percent of carbon-dioxide emissions that are caused by distributed, smaller sources like cars, farms, and homes. Secondly, air capture, if it ever becomes practical, could gradually reduce the concentration of carbon dioxide in the atmosphere. As emissions have accelerated—they’re now rising at 2 percent per year, twice as rapidly as they did in the last three decades of the 20th century—scientists have begun to recognize the urgency of achieving so-called “negative emissions.”

The obvious need for the technology has enticed several other efforts to come up with various approaches that might be practical. For example, Carbon Engineering, based in Calgary, captures carbon using a liquid solution of sodium hydroxide, a well-established industrial technique. A firm cofounded by an early pioneer of the idea, Eisenberger’s Columbia colleague Klaus Lackner, worked on the problem for several years before giving up in 2012.


A report released in April by the Intergovernmental Panel on Climate Change says that staying within the internationally agreed-upon goal of 2 °C of global warming will likely require the global deployment of “carbon dioxide removal” strategies like air capture. (See “The Cost of Limiting Climate Change Could Double without Carbon Capture Technology.”) “Negative emissions are definitely needed to restore the atmosphere given that we’re going to far exceed any safe limit for CO2, if there is one,” says Daniel Schrag, director of the Harvard University Center for the Environment. “The question in my mind is, can it be done in an economical way?”

Most experts are skeptical. (See “What Carbon Capture Can’t Do.”) A 2011 report by the American Physical Society identified key physical and economic challenges. The fact that carbon dioxide will bind with amines, forming a molecule called a carbamate, is well known chemistry. But carbon dioxide still represents only one in 2,500 molecules in the air. That means an effective air-capture machine would need to push vast amounts of air past amines to get enough carbon dioxide to stick to them and then regenerate the amines to capture more. That would require a lot of energy and thus be very expensive, the 2011 report said. That’s why it concluded that air capture “is not currently an economically viable approach to mitigating climate change.”
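As a quick check on the figures quoted in the article, the sketch below shows that "400 parts per million" and "one in 2,500 molecules" are the same statement, and what the "up to 300 times" ratio would imply for flue gas. The variable names are illustrative, and the flue-gas figure is only the upper bound implied by the article's own numbers, not a measured value.

```python
PARTS_PER_MILLION = 1_000_000

ambient_ppm = 400        # ambient CO2 concentration quoted in the article
flue_to_air_ratio = 300  # "up to 300 times" more concentrated in flue gas

# 400 ppm means one CO2 molecule per (1,000,000 / 400) molecules of air.
molecules_per_co2 = PARTS_PER_MILLION // ambient_ppm

# Upper bound on flue-gas concentration implied by the 300x figure.
flue_ppm = ambient_ppm * flue_to_air_ratio
flue_percent = 100 * flue_ppm / PARTS_PER_MILLION

print(molecules_per_co2)  # 2500
print(flue_percent)       # 12.0
```

In other words, the same amine surface sees roughly one CO2 molecule in eight in a smokestack but one in 2,500 in open air, which is why so much more air has to be moved past the sorbent.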

The people at Global Thermostat understand these daunting economics but remain defiantly optimistic. The way to make air capture profitable, says Global Thermostat cofounder Graciela Chichilnisky, a Columbia University economist and mathematician, is to take advantage of the demand for the gas by various industries. There already exists a well-established, billion-dollar market for carbon dioxide, which is used to rejuvenate oil wells, make carbonated beverages, and stimulate plant growth in commercial greenhouses. Historically, the gas sells for around $100 per ton. But Eisenberger says his company’s prototype machine could extract a concentrated ton of the gas for far less than that. The idea is to first sell carbon dioxide to niche markets, such as oil-well recovery, to eventually create bigger ones, like using catalysts to make fuels in processes that are driven by solar energy. “Once capturing carbon from the air is profitable, people acting in their own self-interest will make it happen,” says Chichilnisky.

Warming up

Eisenberger and Chichilnisky were colleagues at Columbia in 2008 when they realized that they had complementary interests: his in energy, and hers in environmental economics, including work to help shape the 1997 Kyoto Protocol, the first global treaty on cutting emissions. Nations had pledged big cuts, says Chichilnisky, but economic and political realities had provided “no way to implement it.” The pair decided to create a business to tackle the carbon challenge.

They focused on air capture, which was first developed by Nazi scientists who used liquid sorbents to remove accumulations of CO2 in submarines. In the winter of 2008 Eisenberger sequestered himself in a quiet house with big glass windows overlooking the ocean in Mendocino County, California. There he studied existing literature on capturing carbon and made a key decision. Scientists developing techniques to capture CO2 have thus far sought to work at high concentrations of the gas. But Eisenberger and Chichilnisky focused on another term in those equations: temperature.

Engineers have previously deployed amines to scrub CO2 from flue gases, whose temperatures are around 70 °C when they exit power plants. Subsequently removing the CO2 from the amines—“regenerating” the amines—generally requires reactions at 120 °C. By contrast, Eisenberger calculated that his system would operate at roughly 85 °C, requiring less total energy. It would use relatively cheap steam for two purposes. The steam would heat the surface, driving the CO2 off the amines to be collected, while also blowing CO2 away from the surface.

"Even if air capture were to someday prove profitable, whether it should be scaled up is another question."

The upshot? With less heat-management infrastructure than what is required with amines in the smokestacks of power plants, the design of a scrubber could be simpler and therefore cheaper. Using data from their prototype, Eisenberger’s team figures the approach could cost between $15 and $50 per ton of carbon dioxide captured from air, depending on how long the amine surfaces last.

If Global Thermostat can achieve anywhere near the prices it’s touting, a number of niche markets beckon. The startup has partnered with a Carson City, Nevada-based company called Algae Systems to make biofuels using carbon dioxide and algae. Meanwhile the demand is rising for carbon dioxide to inject into depleted oil wells, a technique known as enhanced oil recovery. One study estimates that the application could require as much as 3 billion tons of carbon dioxide annually by 2021, a nearly tenfold increase over the 2011 market.

That still represents a drop in the bucket in terms of the amounts needed to reduce or even stabilize the concentration of CO2 in the atmosphere. But Eisenberger says there are really no alternatives to air capture. Simply capturing carbon emissions from coal-fired power plants, he says, only extends society’s dependence on carbon-intensive coal.

Suck it up

It’s a warm December afternoon in Silicon Valley as Eisenberger and I make our way across SRI International’s concrete research center. It’s in these low-slung buildings where engineers first developed ARPAnet, Apple’s Siri software, and countless other technological advances. About a quarter mile from the entrance, a 40-foot-high tower of fans, steel, and silver tubes comes into view. This is the Global Thermostat demonstration plant. It’s imposing and clean. Eisenberger gazes at the quiet scene around the tower, which includes a tall tree. “It’s doing exactly what the tree is doing,” says Eisenberger. But then he corrects himself. “Well, actually, it’s doing it a lot better.”

After Eisenberger earned a PhD in physics in 1967 at Harvard, stints at Bell Labs, Princeton, and Stanford followed. At Exxon in the 1980s he led work on solar energy, then served as director of Lamont-Doherty, the geosciences lab at Columbia. There he has taught a long-standing seminar called “The Earth/Human system.” It was in that seminar, in 2007, with Lackner as a guest lecturer, that Eisenberger first heard about air capture. After a year or so of preparation, he and Chichilnisky reached out to billionaire Edgar Bronfman Jr. “Sometimes when you hear something that must be too good to be true, it’s because it is,” was Bronfman’s reaction, according to his son, who was present at the meeting. But the scion implored his father: “If they’re right, this is one of the biggest opportunities out there.” The family invested $18 million.

That largesse has allowed the company to build its demonstration despite basically no federal support for air capture research. (Global Thermostat chose SRI as its site due to the facility’s prior experience with carbon-capture technology.) The rectangular tower uses fans to draw air in over alternating 10-foot-wide surfaces known as contactors. Each is comprised of 640 ceramic cubes embedded with the amine sorbent. The tower raises one contactor as another is lowered. That allows the cubes of one to collect CO2 from ambient air while the other is stripped of the gas by the application of the steam, at 85 °C. For now that gas is simply vented, but depending on the customer it could be injected into the ground, shipped by pipe, or transferred to a chemical plant for industrial use.

A key challenge facing the company is the ruggedness of the amine sorbent surfaces. They tend to decay rapidly when oxidized, and frequently replacing the sorbents could make the process much less cost-effective than Eisenberger projects.

False hope

None of the world’s thousands of coal plants have been outfitted for full-scale capture of their carbon pollution. And if it isn’t economical for use in power plants, with their concentrated source of carbon dioxide, the prospects of capturing it out of the air seem dim to many experts. “There’s really little chance that you could capture CO2 from ambient air more cheaply than from a coal plant, where the flue gas is 300 times more concentrated,” says Robert Socolow, director of the Princeton Environment Institute and co-director of the university’s carbon mitigation initiative.

Adding to the skepticism over the feasibility of air capture is that there are other, cheaper ways to create the so-called negative emissions. A more practical way to do it, Schrag says, would involve deriving fuels from biomass—which removes CO2 from the atmosphere as it grows. As that feedstock is fermented in a reactor to create ethanol, it produces a stream of pure carbon dioxide that can be captured and stored underground. It’s a proven technique and has been tested at a handful of sites worldwide.

Even if air capture were to someday prove profitable, whether it should be scaled up is another question. Say a solar power plant is built outside an existing coal plant. Should the energy the new solar plant produces be used to suck carbon out of the atmosphere, or to allow the coal plant to be shut down by replacing its energy output? The latter makes much more sense, says Socolow. He and others have another concern about air capture: that claims about its feasibility could breed complacency. “I don’t want us to give people the false hope that air capture can solve the carbon emissions problem without a strong focus on [reducing the use of] fossil fuels,” he says.

Eisenberger and Chichilnisky are adamant about the importance of sucking CO2 out of the atmosphere rather than focusing entirely on capturing it from coal plants. In 2010, the pair developed a version of their technology that mixes air with flue gas from a coal or gas-fired power plant. That approach provides a source of steam while capturing both atmospheric carbon and new emissions. It also could lower costs by providing a higher concentration of CO2 for the machine to capture. “It’s a very impressive system, a triumph,” says Socolow, who thinks scientific advances made in air capture will eventually be used primarily on coal and gas power plants.

Such an application could play a critical role in cleaning up greenhouse gas emissions. But Eisenberger has revealed even loftier goals. A patent granted to him and Chichilnisky in 2008 described air capture technology as, among other things, “a global thermostat for controlling average temperature of a planet’s atmosphere.”

Eli Kintisch is a correspondent for Science magazine.


Wednesday, October 08. 2014

Desierto Issue #3 - 28° Celsius is out! Deterritorialized Living included | #atmosphere

Note: a few of our recent works and exhibitions are included in this promising young publication related to architectural thinking, Desierto, edited by Paper - Architectural Histamine in Madrid. At the editorial team's invitation, I had the occasion to write a paper about Deterritorialized Living and one of its physical installations, shown last year in Pau (France) during Pau Acces(s). We also took the occasion of the publication to give a glimpse of a related research project called Algorithmic Atomized Functioning.

By fabric | ch

-----

From the editorial team:

"The temperature of the invisible and the desacralization of the air. 28° Celsius is the temperature at which protection becomes superfluous. It is also the temperature at which swimming pools are acclimatised.

Within the limits of this hygrothermal comfort zone, we do not require the intervention of our body's thermoregulatory mechanisms nor that of any external artificial thermal controls in order to feel pleasantly comfortable while carrying out a sedentary activity without clothing.

28° Celsius is thus the temperature at which clothing can disappear, just as architecture could."


Authors are Gabriel Ruiz-Larrea, Sean Lally, Philippe Rahm, Nerea Calvillo, myself, Helen Mallinson, Antonio Cobo, José Vella Castillo and Pauly Garcia-Masedo.


Editorial by Gabriel Ruiz-Larrea (editor in chief). Editorial team composed of Natalia David, Nuria Úrculo, María Buey, Daniel Lacasta Fitzsimmons.


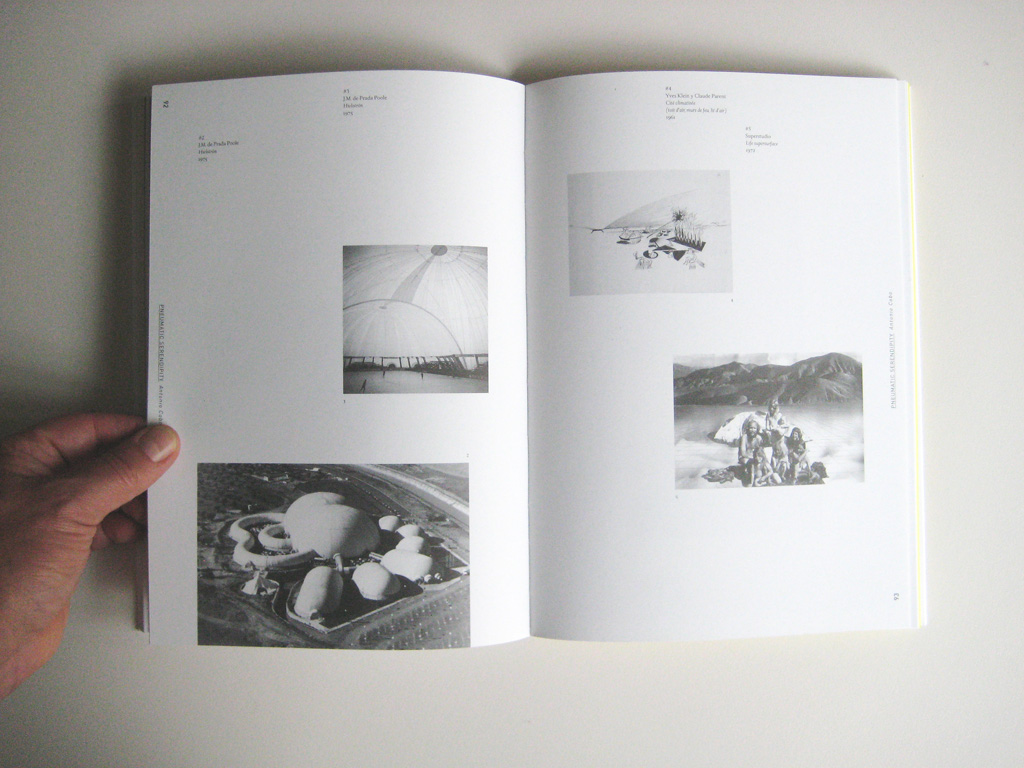

Inhabiting Deterritorialization, by Patrick Keller, with images of Deterritorialized Living website, Deterritorialized Daylight installation (Pau, France) and Algorithmic Atomized Functioning.

Desierto #3 and past issues can be ordered online on Paper bookstore.

Friday, May 02. 2014

Sounds atmospheres | #sound #architecture

Thursday, April 17. 2014

Air pollution now the world’s biggest environmental health risk with 7 million deaths per year | #air #health

Following last month's catastrophic air-quality measurements in Paris. Not cheerful news, yet good to know. Air quality will undoubtedly become a very big (geo)political issue in the coming years, and certainly an engineering one too.

Via Treehugger

-----


The World Health Organization (WHO) released a report last year showing that air pollution killed more people than AIDS and malaria combined. It was based on 2010 figures, which were the latest available at the time. There's now a new study which looked at 2012 data, and it seems like things are even worse than we first believed.

“The risks from air pollution are now far greater than previously thought or understood, particularly for heart disease and strokes,” says Dr Maria Neira, Director of WHO’s Department for Public Health, Environmental and Social Determinants of Health. “Few risks have a greater impact on global health today than air pollution; the evidence signals the need for concerted action to clean up the air we all breathe.”

The WHO found that outdoor air pollution was linked to an estimated 3.7 million deaths in 2012 from urban and rural sources worldwide, and indoor air pollution, mostly caused by cooking (!) on inefficient coal and biomass stoves, was linked to 4.3 million deaths in 2012.

Because many people are exposed to both indoor and outdoor air pollution, there is overlap in these two numbers, but the WHO estimates that the total number of victims from air pollution in 2012 was around 7 million, which is tragic since it would take relatively little in many of those cases to save lives.
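As a rough back-of-the-envelope check (not part of the WHO report), the size of that overlap can be estimated from the three figures quoted above. The sketch below assumes the combined total is simply the union of the two groups, so plain inclusion-exclusion applies; the function and constant names are made up for illustration.

```python
# Figures quoted in the article, in thousands of deaths (2012).
OUTDOOR_DEATHS_K = 3_700  # linked to outdoor air pollution
INDOOR_DEATHS_K = 4_300   # linked to indoor air pollution
TOTAL_DEATHS_K = 7_000    # WHO combined estimate ("around 7 million")


def implied_overlap(outdoor: int, indoor: int, total: int) -> int:
    """Inclusion-exclusion: |A and B| = |A| + |B| - |A or B|.

    Assumption: `total` is the union of the two groups. The WHO may have
    derived its combined figure differently, so treat the result as a rough
    indication only.
    """
    return outdoor + indoor - total


if __name__ == "__main__":
    overlap_k = implied_overlap(OUTDOOR_DEATHS_K, INDOOR_DEATHS_K, TOTAL_DEATHS_K)
    print(f"Implied overlap: about {overlap_k / 1000:.1f} million deaths")
```

Under that assumption, roughly 1 million deaths would be counted in both the indoor and the outdoor figures.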

And it's not really a question of money, since the health costs and lost productivity caused by air pollution are higher in the long-term...

Here's how the health impacts break down for both indoor and outdoor air pollution:

Outdoor air pollution-caused deaths – breakdown by disease:

- 40% – ischaemic heart disease;

- 40% – stroke;

- 11% – chronic obstructive pulmonary disease (COPD);

- 6% – lung cancer; and

- 3% – acute lower respiratory infections in children.

Indoor air pollution-caused deaths – breakdown by disease:

- 34% – stroke;

- 26% – ischaemic heart disease;

- 22% – COPD;

- 12% – acute lower respiratory infections in children; and

- 6% – lung cancer.
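To put these percentages in perspective, they can be converted into approximate absolute numbers using the totals quoted earlier (3.7 million deaths linked to outdoor pollution, 4.3 million to indoor pollution). This is only an illustrative sketch: the names are hypothetical, and the results are no more precise than the rounded percentages they come from.

```python
# Totals and shares as quoted in the article; counts are in thousands of deaths.
OUTDOOR_TOTAL_K = 3_700
INDOOR_TOTAL_K = 4_300

OUTDOOR_SHARES = {  # percent of deaths linked to outdoor air pollution
    "ischaemic heart disease": 40,
    "stroke": 40,
    "COPD": 11,
    "lung cancer": 6,
    "acute lower respiratory infections in children": 3,
}

INDOOR_SHARES = {  # percent of deaths linked to indoor air pollution
    "stroke": 34,
    "ischaemic heart disease": 26,
    "COPD": 22,
    "acute lower respiratory infections in children": 12,
    "lung cancer": 6,
}


def deaths_by_disease(total_k: int, shares: dict[str, int]) -> dict[str, int]:
    """Convert percentage shares into counts (thousands of deaths)."""
    if sum(shares.values()) != 100:
        raise ValueError("shares must add up to 100%")
    # Integer arithmetic; the division is exact for the figures used here.
    return {disease: total_k * pct // 100 for disease, pct in shares.items()}


if __name__ == "__main__":
    for label, total, shares in (
        ("Outdoor", OUTDOOR_TOTAL_K, OUTDOOR_SHARES),
        ("Indoor", INDOOR_TOTAL_K, INDOOR_SHARES),
    ):
        print(f"{label} air pollution:")
        for disease, count_k in deaths_by_disease(total, shares).items():
            print(f"  {disease}: ~{count_k:,} thousand deaths")
```

Read this way, stroke and ischaemic heart disease each account for well over a million deaths in both the outdoor and the indoor figures.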

There are lots of big, obvious things we can do, such as replacing inefficient, polluting small stoves in poorer countries with better stoves or, even better, electric cooking. Many countries, like China, could also do a lot to cut pollution at their coal plants and over time phase out coal (which isn't just a problem for air pollution, but also for water and ground pollution and global warming). There is a lot of low-hanging fruit that would make a huge difference. To see how dramatic the improvement could be, just look at these photos showing how bad the situation was in the US not so long ago (China is just repeating what has gone on elsewhere...).

One thing we can do to help: plant more trees! Recent studies show that they are even better at filtering the air in urban areas than we previously thought.

© Michael Graham Richard

Related on TreeHugger.com:

- Think Air Quality Regulations Don't Matter? Look at Pittsburgh in the 1940s!

- This scary map shows the health impacts of coal power plants in China

- The smog in Los Angeles doesn't quite sting like it used to, but there's still work to be done

- Coal pollution in China lowers life expectancy by 5 years

- What kills more people than AIDS and malaria combined? Air pollution

- Trees are awesome: Study shows tree leaves can capture 50%+ of particulate matter pollution

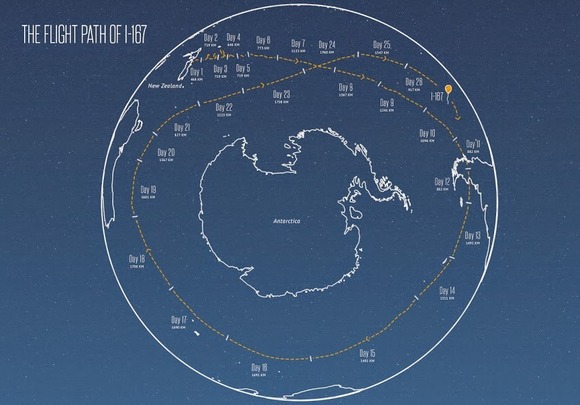

Google's Project Loon balloon goes around the world in 22 days | #unmanned #Internet

Via Computed·Blg via PCWorld

-----

When Jules Verne wrote Around the World in Eighty Days, this probably isn’t what he had in mind: Google’s Project Loon announced last week one of its balloons had circumnavigated the Earth in 22 days.

Granted, we’re not talking a grand tour of the world here: The balloon flew in a loop over the open ocean surrounding Antarctica, starting at New Zealand. According to the Project Loon team, it was the latest accomplishment for the balloon fleet, which just achieved 500,000 kilometers of flight.

While it may seem like fun and games, Project Loon’s larger goal is to use high-altitude balloons to “connect people in rural and remote areas, help fill coverage gaps, and bring people back online after disasters.”

Currently, the project is test-flying balloons to learn more about wind patterns, and to test its balloon designs. In the past nine months, the project team has used data it’s accumulated during test flights to “refine our prediction models and are now able to forecast balloon trajectories twice as far in advance.”

It also modified the balloon’s air pump (which pumps air in and out of the balloon) to operate more efficiently, which in turn helped the balloon stay on course in this latest test run.

Project Loon’s next step toward universal Internet connection is to create “a ring of uninterrupted connectivity around the 40th southern parallel,” which it expects to pull off sometime this year.

fabric | rblg

This blog is the survey website of fabric | ch - studio for architecture, interaction and research.

We curate and reblog articles, research, writings, exhibitions and projects that we notice and find interesting during our everyday practice and readings.

Most articles concern the intertwined fields of architecture, territory, art, interaction design, thinking and science. From time to time, we also publish documentation about our own work and research, immersed among these related resources and inspirations.

This website is used by fabric | ch as an archive of references and resources. It is shared with all those interested in the same topics as we are, in the hope that they will also find valuable references and content in it.
