Thursday, March 31. 2011

Via BLDGBLOG

-----

by noreply@blogger.com (Geoff Manaugh)

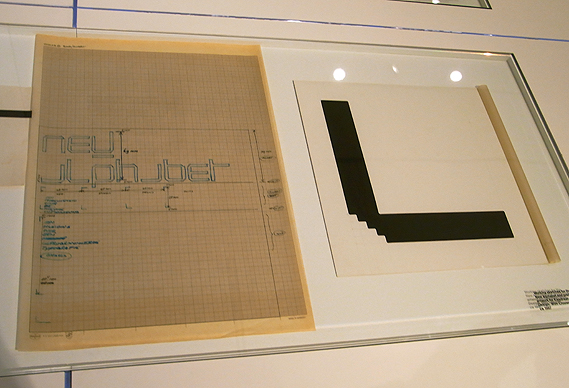

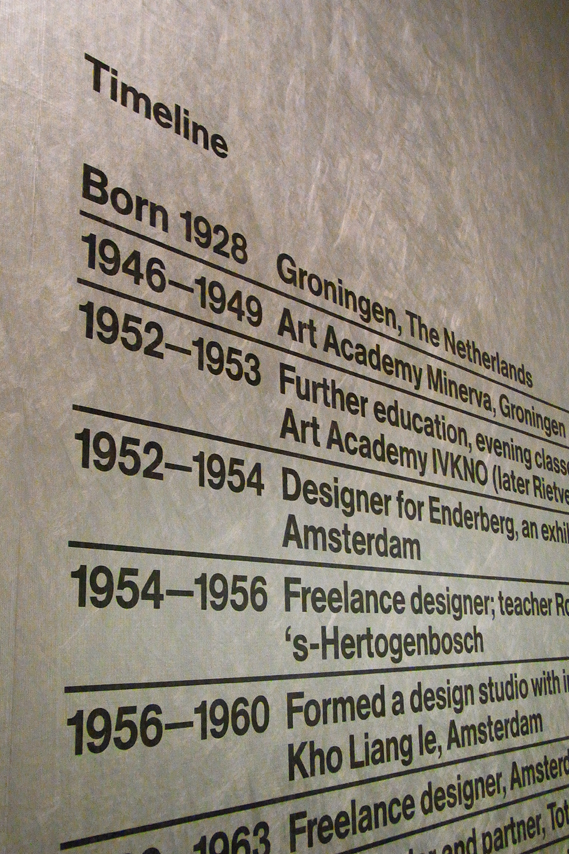

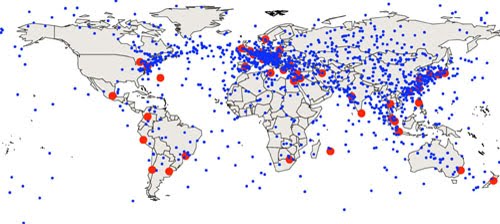

A recent paper published in the Physical Review has some astonishing suggestions for the geographic future of financial markets. Its authors, Alexander Wissner-Gross and Cameron Freer, discuss the spatial implications of speed-of-light trading. Trades now occur so rapidly, they explain, and in such fantastic quantity, that the speed of light itself presents limits to the efficiency of global computerized trading networks.

These limits are described as "light propagation delays."
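To get a feel for the scale of these delays, here is a minimal back-of-the-envelope sketch. It is not taken from the paper: it assumes a perfectly spherical Earth, a signal traveling at the vacuum speed of light along the great-circle path, and approximate city coordinates chosen purely for illustration. Real fiber routes are longer and slower than this.

```python
import math

SPEED_OF_LIGHT_KM_S = 299_792.458  # speed of light in vacuum
EARTH_RADIUS_KM = 6371.0           # mean radius; spherical Earth is an assumption


def great_circle_km(lat1, lon1, lat2, lon2):
    """Great-circle distance between two points (degrees) via the haversine formula."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dphi = phi2 - phi1
    dlam = math.radians(lon2 - lon1)
    a = math.sin(dphi / 2) ** 2 + math.cos(phi1) * math.cos(phi2) * math.sin(dlam / 2) ** 2
    return 2 * EARTH_RADIUS_KM * math.asin(math.sqrt(a))


def light_delay_ms(distance_km):
    """One-way travel time of light over the given distance, in milliseconds."""
    return distance_km / SPEED_OF_LIGHT_KM_S * 1000.0


# Approximate coordinates, for illustration only.
NEW_YORK = (40.71, -74.01)
LONDON = (51.51, -0.13)

distance = great_circle_km(*NEW_YORK, *LONDON)
print(f"distance: {distance:.0f} km, one-way light delay: {light_delay_ms(distance):.1f} ms")
```

Even under these idealized assumptions, the one-way delay between the two cities comes out in the tens of milliseconds, which is a long time for automated trading systems.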

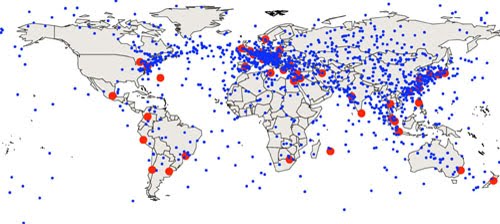

[Image: Global map of "optimal intermediate locations between trading centers," based on the earth's geometry and the speed of light, by Alexander Wissner-Gross and Cameron Freer].

It is thus in traders' direct financial interest, they suggest, to install themselves at specific points on the Earth's surface—a kind of light-speed financial acupuncture—to take advantage both of the planet's geometry and of the networks along which trades are ordered and filled. They conclude that "the construction of relativistic statistical arbitrage trading nodes across the Earth’s surface" is thus economically justified, if not required.

Amazingly, their analysis—seen in the map, above—suggests that many of these financially strategic points are actually out in the middle of nowhere: hundreds of miles offshore in the Indian Ocean, for instance, on the shores of Antarctica, and scattered throughout the South Pacific (though, of course, most of Europe, Japan, and the U.S. Bos-Wash corridor also make the cut).

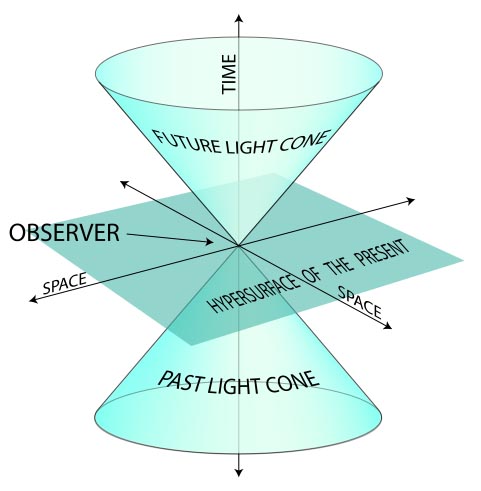

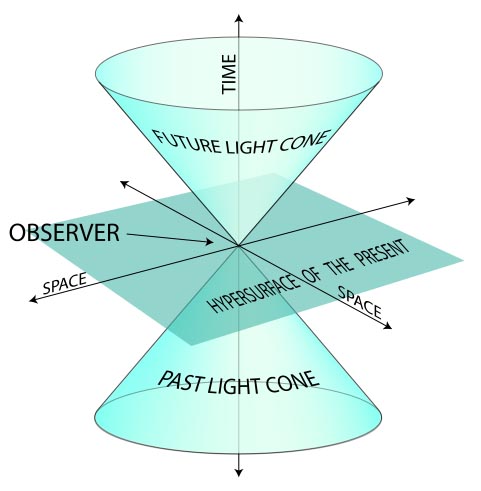

These nodes exist in what the authors refer to as "the past light cones" of distant trading centers—thus the paper's multiple references to relativity. Astonishingly, this seems to conflate financial trading networks with the laws of physics, implying the eventual emergence of what we might call quantum financial products. Quantum derivatives! (This also seems to push us ever closer to the artificially intelligent financial instruments described in Charles Stross's novel Accelerando.) Erwin Schrödinger meets the Dow.

It's financial science fiction: when the dollar value of a given product depends on its position in a planet's light-cone.

[Image: Diagrammatic explanation of a "light cone," courtesy of Wikipedia].

These points scattered along the earth's surface are described as "optimal intermediate locations between trading centers," each site "maximiz[ing] profit potential in a locally auditable manner."
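As a rough illustration of what an "intermediate location" between two trading centers can look like geometrically, the sketch below computes the naive midpoint of the great-circle path between two points. This is only an assumption-laden stand-in: the paper's map comes from the authors' own model, which this does not reproduce, and the function names here are hypothetical.

```python
import math


def to_unit_vector(lat_deg, lon_deg):
    """Convert latitude/longitude in degrees to a 3D unit vector on a sphere."""
    lat, lon = math.radians(lat_deg), math.radians(lon_deg)
    return (math.cos(lat) * math.cos(lon), math.cos(lat) * math.sin(lon), math.sin(lat))


def geodesic_midpoint(a, b):
    """Midpoint of the great-circle arc between two (lat, lon) points, in degrees."""
    ax, ay, az = to_unit_vector(*a)
    bx, by, bz = to_unit_vector(*b)
    # The normalized sum of the two unit vectors points at the arc's midpoint.
    mx, my, mz = ax + bx, ay + by, az + bz
    norm = math.sqrt(mx * mx + my * my + mz * mz)
    if norm < 1e-12:
        raise ValueError("antipodal points have no unique midpoint")
    lat = math.degrees(math.asin(mz / norm))
    lon = math.degrees(math.atan2(my, mx))
    return lat, lon


# Approximate coordinates, for illustration only.
NEW_YORK = (40.71, -74.01)
LONDON = (51.51, -0.13)
print(geodesic_midpoint(NEW_YORK, LONDON))
```

For this pair the midpoint falls in the open North Atlantic, which hints at why so many of the mapped points end up far from any city.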

Wissner-Gross and Freer then suggest that trading centers themselves could be moved to these nodal points: "we show that if such intermediate coordination nodes are themselves promoted to trading centers that can utilize local information, a novel econophysical effect arises wherein the propagation of security pricing information through a chain of such nodes is effectively slowed or stopped." An econophysical effect.

In the end, then, they more or less explicitly argue for the economic viability of building artificial islands and inhabitable seasteads—i.e. the "construction of relativistic statistical arbitrage trading nodes"—out in the middle of the ocean somewhere as a way to profit from speed-of-light trades. Imagine, for a moment, the New York Stock Exchange moving out into the mid-Atlantic, somewhere near the Azores, onto a series of New Babylon-like platforms, run not by human traders but by Watson-esque artificially intelligent supercomputers housed in waterproof tombs, all calculating money at the speed of light.

[Image: An otherwise unrelated image from NOAA featuring a geodetic satellite triangulation network].

"In summary," the authors write, "we have demonstrated that light propagation delays present new opportunities for statistical arbitrage at the planetary scale, and have calculated a representative map of locations from which to coordinate such relativistic statistical arbitrage among the world’s major securities exchanges. We furthermore have shown that for chains of trading centers along geodesics, the propagation of tradable information is effectively slowed or stopped by such arbitrage."

"Historically, technologies for transportation and communication have resulted in the consolidation of financial markets. For example, in the nineteenth century, more than 200 stock exchanges were formed in the United States, but most were eliminated as the telegraph spread. The growth of electronic markets has led to further consolidation in recent years. Although there are advantages to centralization for many types of transactions, we have described a type of arbitrage that is just beginning to become relevant, and for which the trend is, surprisingly, in the direction of decentralization. In fact, our calculations suggest that this type of arbitrage may already be technologically feasible for the most distant pairs of exchanges, and may soon be feasible at the fastest relevant time scales for closer pairs.

"Our results are both scientifically relevant because they identify an econophysical mechanism by which the propagation of tradable information can be slowed or stopped, and technologically significant, because they motivate the construction of relativistic statistical arbitrage trading nodes across the Earth’s surface."

For more, read the original paper: PDF.

(Thanks to Nicola Twilley for the tip!)

Via MIT Technology Review

-----

By Kate Greene

The Open Networking Foundation wants to let programmers take control of computer networks.

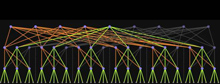

Off switch: This visualization shows network traffic when traffic loads are low and switches (the large dots) can be turned off to save power.

Credit: Open Flow Project

Most data networks could be faster, more energy efficient, and more secure. But network hardware—switches, routers, and other devices—is essentially locked down, meaning network operators can't change the way they function. Software called OpenFlow, developed at Stanford University and the University of California, Berkeley, has opened some network hardware, allowing researchers to reprogram devices to perform new tricks.

Now 23 companies, including Google, Facebook, Cisco, and Verizon, have formed the Open Networking Foundation (ONF) with the intention of making open and programmable networks mainstream. The foundation aims to put OpenFlow and similar software into more hardware, establish standards that let different devices communicate, and let programmers write software for networks as they would for computers or smart phones.

"I think this is a true opportunity to take the Internet to a new level where applications are connected directly to the network," says Paul McNab, vice president of data center switching and services at Cisco.

Computer networks may not be as tangible as phones or computers, but they're crucial: cable television, Wi-Fi, mobile phones, Internet hosting, Web search, corporate e-mail, and banking all rely on the smooth operation of such networks. Applications that run on the type of programmable networks that the ONF envisions could stream HD video more smoothly, provide more reliable cellular service, reduce energy consumption in data centers, or even remotely clean computers of viruses.

The problem with today's networks, explains Nick McKeown, a professor of electrical engineering and computer sciences at Stanford who helped develop OpenFlow, is that data flows through them inefficiently. As data travels through a standard network, its path is determined by the switches it passes through, says McKeown. "It's a little bit like a navigation system [in a car] trying to figure out what the map looks like at the same time it's trying to find you directions," McKeown explains.

With a programmable network, he says, software can collect information about the network as a whole, so data travels more efficiently. A more complete view of a network, explains Scott Shenker, professor of electrical engineering and computer science at the University of California, Berkeley, is a product of two things: the first is OpenFlow firmware (software embedded in hardware) that taps into the switches and routers to read the state of the hardware and to direct traffic; the second is a network operating system that creates a network map and chooses the most efficient route.
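To make the idea of "a network map plus route selection" concrete, here is a minimal sketch using Dijkstra's shortest-path algorithm over a small, made-up topology. It does not use OpenFlow or any real network operating system API: the switch names, link costs, and helper functions are all hypothetical, and a real controller would weigh far more than a single cost per link.

```python
import heapq


def shortest_path(graph, source, target):
    """Dijkstra's algorithm; graph maps node -> {neighbor: link cost}."""
    dist = {source: 0.0}
    prev = {}
    heap = [(0.0, source)]
    visited = set()
    while heap:
        d, node = heapq.heappop(heap)
        if node in visited:
            continue
        visited.add(node)
        if node == target:
            break
        for neighbor, cost in graph.get(node, {}).items():
            candidate = d + cost
            if candidate < dist.get(neighbor, float("inf")):
                dist[neighbor] = candidate
                prev[neighbor] = node
                heapq.heappush(heap, (candidate, neighbor))
    if target not in dist:
        return None, float("inf")
    # Walk the predecessor chain back from target to source.
    path = [target]
    while path[-1] != source:
        path.append(prev[path[-1]])
    path.reverse()
    return path, dist[target]


def without_link(graph, a, b):
    """Return a copy of the map with the a-b link removed in both directions."""
    return {n: {m: c for m, c in nbrs.items() if {n, m} != {a, b}}
            for n, nbrs in graph.items()}


# Hypothetical four-switch network; costs are arbitrary illustrative units.
NETWORK = {
    "s1": {"s2": 1, "s3": 4},
    "s2": {"s1": 1, "s3": 1, "s4": 5},
    "s3": {"s1": 4, "s2": 1, "s4": 1},
    "s4": {"s2": 5, "s3": 1},
}

print(shortest_path(NETWORK, "s1", "s4"))
# Simulate a failed link and recompute the route from the same global map.
print(shortest_path(without_link(NETWORK, "s2", "s3"), "s1", "s4"))
```

The point of the sketch is the design choice: because the route is computed from one global map rather than hop by hop, recomputing after a change is a single function call.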

OpenFlow and a network operating system "provide a consistent view of the network and do that at once for many applications," says McKeown. "It becomes trivial to find new paths."

Some OpenFlow research projects require just a couple hundred lines of code to completely change the data traffic patterns in a network—with dramatic results. In one project, McKeown says, researchers reduced a data center's energy consumption by 60 percent simply by rerouting network traffic within the center and turning off switches when they weren't in use.

This sort of research has caught the attention of big companies, and is one reason why the ONF was formed. Google is interested in speeding up the networks that connect its data centers. These data centers generally communicate through specified paths, but if a route fails, traffic needs to be rerouted, says Urs Hoelzle, senior vice president of operations at Google. Using standard routing instructions, this process can take 20 minutes. If Google had more control over how the data flowed, it could reroute within seconds, Hoelzle says.

Cisco, a company that builds the hardware that routes much of the data on the Internet, sees ONF as a way to help customers build better Internet services. Facebook, for example, relies on Cisco hardware to serve up status updates, messages, pictures, and video to hundreds of millions of people worldwide. "You can imagine the flood of data," says McNab.

Future ONF standards could let people program a network to get different kinds of performance when needed, says McNab. Building that sort of functionality into Cisco hardware could make it more appealing to Internet services that need to be fast.

The first goal of the ONF is to take over the specifications of OpenFlow, says McKeown. As a research project, OpenFlow has found success on more than a dozen campuses, but it needs to be modified so it can work well at various companies. The next step is to develop easy-to-use interfaces that let people program networks just as they would program a computer or smart phone. "This is a very big step for the ONF," he says, because it could increase the adoption of standards and speed up innovation for network applications. He says the process could take two years.

In the meantime, companies including Google, Cisco, and others will test open networking protocols on their internal networks—in essence, they'll be testing out a completely new kind of Internet.

Copyright Technology Review 2011.

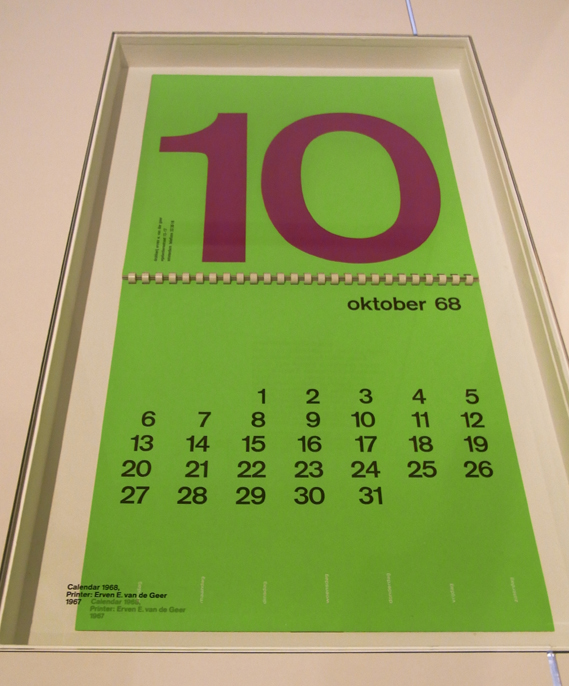

Wednesday, March 30. 2011

Via Creative Review

-----

By Patrick Burgoyne

www.creativereview.co.uk/cr-blog/2011/march/wim-crouwel-at-londons-design-museum

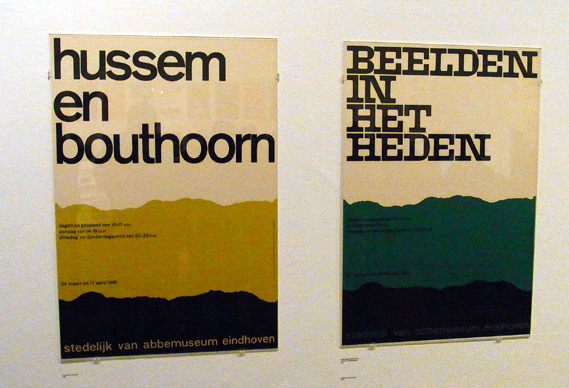

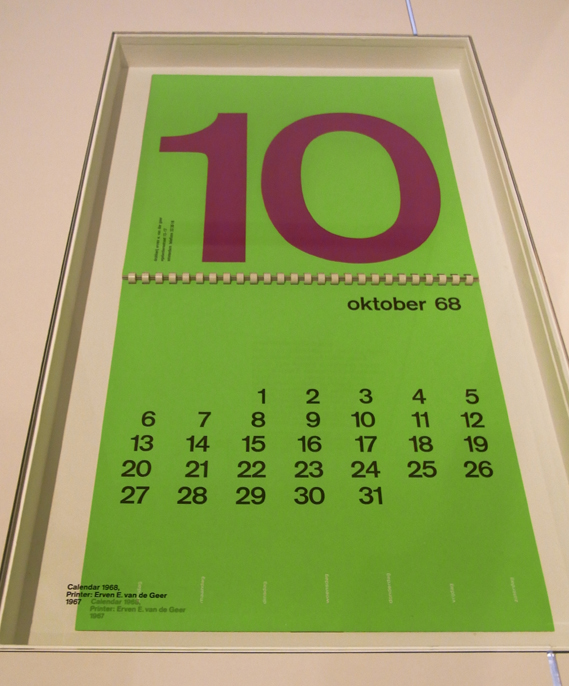

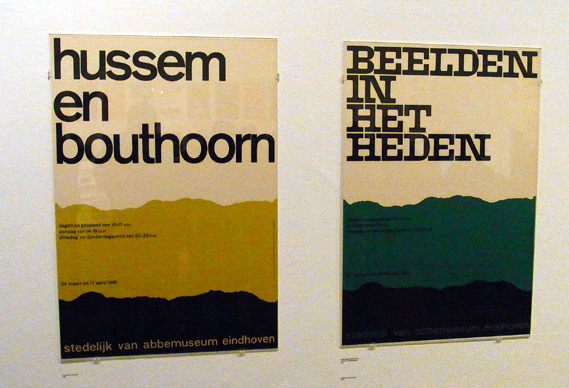

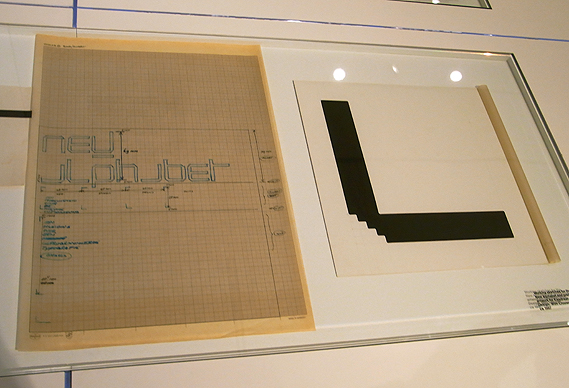

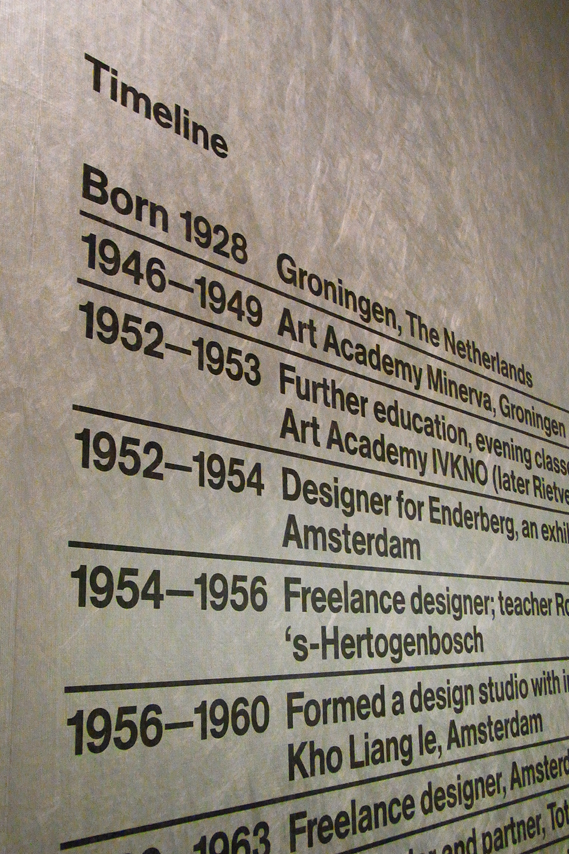

The Design Museum's Wim Crouwel show opened last night and... it's fantastic. A substantial, 'proper' graphic design exhibition given the space and treatment that Crouwel's extraordinary output deserves.

The Design Museum often seems nervous about graphics – perhaps it is unconvinced that graphic design can attract the visitor numbers and sponsorship that fashion or furniture can command. Wim Crouwel A Graphic Odyssey takes the first floor room that also housed the Peter Saville and Alan Fletcher shows. But the space has been transformed.

Much credit must go to the show's designers, architects 6a who, along with co-curator Tony Brook, persuaded the Design Museum to open up the space and paint it white. At previous shows in that room, the space has been divided, with visitors taken round a pathway into individual spaces. The Crouwel show is in one big room and all the better for it.

The serried ranks of Crouwel's posters also look fantastic from a distance, as do run-outs of some of his many logos.

Printed material is housed in a series of simple white tables, one set being raised to afford a better view.

The whole thing is very simple, very clean and ordered. Sometimes graphic design shows can seem too ephemeral, trivial almost. Wim Crouwel A Graphic Odyssey is a serious, substantial retrospective of a phenomenal career and a fitting tribute to a truly great designer. Co-curators Brook and Margaret Cubbage should be congratulated on what is, for my money, the finest graphic design show that the Design Museum has staged. Let's hope it opens the door for more in the future.

NB: We thought you'd appreciate these images as a first sight, but the show is to be professionally photographed in the next few days. We will update with better images when we can get them. Michael Johnson has also blogged about the show here.

Wim Crouwel A Graphic Odyssey is at the Design Museum, Shad Thames, London SE1 until July 3.

RELATED CONTENT

Rick Poynor discusses the extraordinarily high regard in which Crouwel is held by some UK designers here

Fancy some Wim Crouwel wallpaper?

How Blanka lovingly recreated Crouwel's classic Vormgevers poster, complete with wobbly hand-drawn lines, here

A review of Crouwel's 2007 D&AD President's Lecture

Michael C Place and Nicky Place of Build interview Crouwel for CR, here

Tuesday, March 29. 2011

Via MIT Technology Review (Blogs)

-----

Bill Atkinson invented everything from the menu bar to Hypercard -- the program that inspired the first wiki.

The wiki is a funny thing: unlike the blog, which is a bastard child of the human need to record life's events and the exhibitionistic tendencies the Internet encourages, there is nothing all that obvious about it.

Ward Cunningham, the programmer who invented the modern wiki, has said that this is precisely what made it so compelling -- it was one of those too-obvious ideas that doesn't really make sense until you've seen it in action. To judge by the level of discourse in the average well-trafficked comment thread, to put a page on the Internet and to invite all to edit it is a recipe for defacement and worse. Yet it works -- in part due to its occasionally anti-democratic nature.

Unlike the first weblogs, which were personal diaries, the very first wiki -- it still exists -- is devoted to software development. But where did its creator get the idea to create a wiki (then called a WikiWiki) in the first place?

Hypercard.

It's a name that will mean a great deal to anyone who can identify this creature:

Hypercard was the world wide web before the web even existed. Only it wasn't available across a network, and instead of hypertext, it was merely hypermedia -- in other words, different parts of the individual 'cards' one could create with it were linkable to other cards.

The most famous application ever to be built with Hypercard is the original version of the computer game Myst. (Which, like seemingly every other bit of puzzle, arcade and adventure game nostalgia, has been reconstituted on the iPhone.)

Hypercard made it easy to build "stacks" of graphically rich (for the time, anyway) "cards" that could be interlinked. Cunningham built a stack in Hypercard that documented computer programmers and their ideas, and, later, programming patterns. The web allowed him to realize an analogous "stack" in a public space; the last step was to allow anyone to add to it.

Bill Atkinson, the Apple programmer who invented Hypercard, also invented MacPaint, the QuickDraw toolbox that the original Macintosh used for graphics, and the menu bar. He is literally one of those foundational programmers whose ideas -- or at least their expression -- have influenced millions, and have descendants on practically every computer in existence.

Which means Atkinson gave birth to a system elegant enough to presage the world wide web, inspire the first wiki (without which Wikipedia, begun in 2001, would have been impossible) and give rise to the most haunting computer game of a generation. Both Atkinson and Cunningham are links in a long chain of inspiration and evolution stretching back to the earliest notions of hypertext.

And that's how Apple -- or specifically Bill Atkinson -- helped give birth to the wiki. Which is 16 years old today!

Via GOOD

-----

by Alex Goldmark

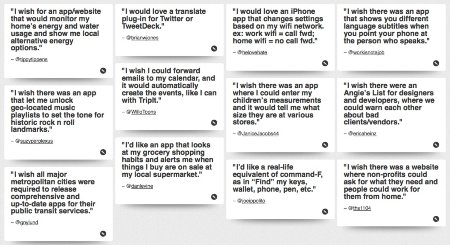

Sure, it seems like there's an app for everything. But we're not quite there yet. There are still many practical problems with seemingly simple solutions that have yet to materialize, like this:

I wish someone would use the foursquare API to build a cab-sharing app to help you split a ride home at the end of the night.

Or this:

I wish there was a website where non-profits could ask for what they need and people could work for them from home.

Meet the Internet Wishlist, a "suggestion box for the future of technology." Composed of hopeful tweets from people around the world, the site ends up reading like a mini-blog of requests for mobile apps, basic grand dreaming, and tech-focused humor posts. All kidding aside, though, creator Amrit Richmond hopes the list will ultimately lead to a bit of demand-driven design.

To contribute, people post an idea on Twitter and include #theiwl in their tweet. Richmond then collects the most "forward thinking" onto the Wishlist website. (Full disclosure, she's a former Art Editor here at GOOD and now a creative strategist for nonprofits and start-ups.)

"I hope the project inspires entrepreneurs, developers and designers to innovate and build the products and features that people want," Richmond says. "I see so many startups try and solve problems that don't need solving. ... I wanted to uncover and show what kinds of day-to-day problems people have that they want technology to solve for them."

The list already has an active community of posters, who are quick to point out when there's already an app or website fulfilling a poster's wish. For instance, several commenters pointed out that people can already connect with nonprofits and do micro-volunteering from home through Sparked.

To see the full wishlist or subscribe, go here.

What would you ask for?
