Monday, December 21. 2015

Gramazio Kohler celebrates 10 years of research in digital fabrication at ETHZ in a video | #digitalfabrication #researchbydesign

-----

One decade of Gramazio Kohler Research at ETH Zurich – The Architecture of Digital Fabrication from Gramazio Kohler Research on Vimeo.

Tuesday, December 23. 2014

Imposing Security | #code

Note: while I'm rather against too much security (and therefore not in favor of "Imposing security") and probably wary of the fact that we, as human beings, are "delegating" too many of our daily routines and actions to algorithms (which we wrote), this article stresses the importance of code in our everyday life, as well as the fact that it all comes down to the language used to write a program. Interesting to learn that some programming languages are more likely than others to produce mistakes and errors.

-----

Computer programmers won’t stop making dangerous errors on their own. It’s time they adopted an idea that makes the physical world safer.

Three computer bugs this year exposed passwords, e-mails, financial data, and other kinds of sensitive information connected to potentially billions of people. The flaws cropped up in different places—the software running on Web servers, iPhones, the Windows operating system—but they all had the same root cause: careless mistakes by programmers.

Each of these bugs (the “Heartbleed” bug in a program called OpenSSL, the “goto fail” bug in Apple’s operating systems, and a so-called “zero-day exploit” discovered in Microsoft’s Internet Explorer) was created years ago by programmers writing in C, a language known for its power, its expressiveness, and the ease with which it leads programmers to make all manner of errors. Using C to write critical Internet software is like using a spring-loaded razor to open boxes: it’s really cool until you slice your fingers.

Alas, as dangerous as it is, we won’t eliminate C anytime soon: programs written in C and the related language C++ make up a large portion of the software that powers the Internet. New projects are being started in these languages all the time by programmers who think they need C’s speed and think they’re good enough to avoid C’s traps and pitfalls.

But even if we can’t get rid of that language, we can force those who use it to do a better job. We would borrow a concept used every day in the physical world.

Obvious in retrospect

Of the three flaws, Heartbleed was by far the most significant. It is a bug in a program that implements a protocol called Secure Sockets Layer/Transport Layer Security (SSL/TLS), which is the fundamental encryption method used to protect the vast majority of the financial, medical, and personal information sent over the Internet. The original SSL protocol made Internet commerce possible back in the 1990s. OpenSSL is an open-source implementation of SSL/TLS that’s been around nearly as long. The program has steadily grown and been extended over the years.

Today’s cryptographic protocols are thought to be so strong that there is, in practice, no way to break them. But Heartbleed made SSL’s encryption irrelevant. Using Heartbleed, an attacker anywhere on the Internet could reach into the heart of a Web server’s memory and rip out a little piece of private data.
The name doesn’t come from this metaphor but from the fact that Heartbleed is a flaw in the “heartbeat” protocol Web browsers can use to tell Web servers that they are still connected. Essentially, the attacker could ping Web servers in a way that not only confirmed the connection but also got them to spill some of their contents. It’s like being able to check into a hotel that occasionally forgets to empty its rooms’ trash cans between guests. Sometimes these contain highly valuable information.

Heartbleed resulted from a combination of factors, including a mistake made by a volunteer working on the OpenSSL program when he implemented the heartbeat protocol. Although any of the mistakes could have happened if OpenSSL had been written in a modern programming language like Java or C#, they were more likely to happen because OpenSSL was written in C.

Apple’s flaw came about because some programmer inadvertently duplicated a line of code that, appropriately, read “goto fail.” The result was that under some conditions, iPhones and Macs would silently ignore errors that might occur when trying to ascertain the legitimacy of a website. With knowledge of this bug, an attacker could set up a wireless access point that might intercept Internet communications between iPhone users and their banks, silently steal usernames and passwords, and then reëncrypt the communications and send them on their merry way. This is called a “man-in-the-middle” attack, and it’s the very sort of thing that SSL/TLS was designed to prevent.

Remarkably, “goto fail” happened because of a feature in the C programming language that was known to be problematic before C was even invented! The “goto” statement makes a computer program jump from one place to another.
Although such statements are common inside the computer’s machine code, computer scientists have tried for more than 40 years to avoid using “goto” statements in programs that they write in so-called “high-level language.” Java (designed in the early 1990s) doesn’t have a “goto” statement, but C (designed in the early 1970s) does. Although the Apple programmer responsible for the “goto fail” problem could have made a similar mistake without using the “goto” statement, it would have been much less probable.

We know less about the third bug because the underlying source code, part of Microsoft’s Internet Explorer, hasn’t been released. What we do know is that it was a “use after free” error: the program tells the operating system that it is finished using a piece of memory, and then it goes ahead and uses that memory again. According to the security firm FireEye, which tracked down the bug after hackers started using it against high-value targets, the flaw had been in Internet Explorer since August 2001 and affected more than half of those who got on the Web through traditional PCs. The bug was so significant that the Department of Homeland Security took the unusual step of telling people to temporarily stop running Internet Explorer. (Microsoft released a patch for the bug on May 1.)

Automated inspectors

There will always be problems in anything designed or built by humans, of course. That’s why we have policies in the physical world to minimize the chance for errors to occur and procedures designed to catch the mistakes that slip through.

Home builders must follow building codes, which regulate which construction materials can be used and govern certain aspects of the building’s layout: for example, hallways must reach a minimum width, and fire exits are required. Building inspectors visit the site throughout construction to review the work and make sure that it meets the codes.
Inspectors will make contractors open up walls if they’ve installed them before getting the work inside inspected.

The world of software development is completely different. It’s common for developers to choose the language they write in and the tools they use. Many developers design their own reliability tests and then run the tests themselves! Big companies can afford separate quality-assurance teams, but many small firms go without. Even in large companies, code that seems to work properly is frequently not tested for lurking security flaws, because manual testing by other humans is incredibly expensive (sometimes more expensive than writing the original software, given that testing can reveal problems the developers then have to fix). Such flaws are sometimes called “technical debt,” since they are engineering costs borrowed against the future in the interest of shipping code now.

The solution is to establish software building codes and enforce those codes with an army of unpaid inspectors.

Crucially, those unpaid inspectors should not be people, or at least not only people. Some advocates of open-source software subscribe to the “many eyes” theory of software development: that if a piece of code is looked at by enough people, the security vulnerabilities will be found. Unfortunately, Heartbleed shows the fallacy in this argument: though OpenSSL is one of the most widely used open-source security programs, it took paid security engineers at Google and the Finnish IT security firm Codenomicon to find the bug, and they didn’t find it until two years after many eyes on the Internet first got access to the code.

Instead, this army of software building inspectors should be software development tools: the programs that developers use to create programs. These tools can needle, prod, and cajole programmers to do the right thing.

This has happened before.
For example, back in 1988 the primary infection vector for the world’s first Internet worm was another program written in C. It used a function called “gets()” that was common at the time but is inherently insecure. After the worm was unleashed, the engineers who maintained the core libraries of the Unix operating system (which is now used by Linux and Mac OS) modified the gets() function to make it print the message “Warning: this program uses gets(), which is unsafe.” Soon afterward, developers everywhere removed gets() from their programs.

The same sort of approach can be used to prevent future bugs. Today many software development tools can analyze programs and warn of stylistic sloppiness (such as the use of a “goto” statement), memory bugs (such as the “use after free” flaw), or code that doesn’t follow established good-programming standards. Often, though, such warnings are disabled by default because many of them can be merely annoying: they require that code be rewritten and cleaned up with no corresponding improvement in security. Other bug-finding tools aren’t even included in standard development tool sets but must instead be separately downloaded, installed, and run. As a result, many developers don’t even know about them, let alone use them.

To make the Internet safer, the most stringent checking will need to be enabled by default. This will cause programmers to write better code from the beginning. And because program analysis tools work better with modern languages like C# and Java and less well with programs written in C, programmers should avoid starting new projects in C or C++, just as it is unwise to start construction projects using old-fashioned building materials and techniques.

Programmers are only human, and everybody makes mistakes. Software companies need to accept this fact and make bugs easier to prevent.

Simson L. Garfinkel is a contributing editor to MIT Technology Review and a professor of computer science at the Naval Postgraduate School.
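
To make the heartbeat flaw described in the article above a little more concrete, here is a minimal sketch in Python. It is a toy simulation and not OpenSSL code: the article does not spell out the mechanism, so the sketch assumes the commonly reported one, in which the server echoed back as many bytes as the client claimed to have sent without comparing that number to the real payload size. The names `SECRET`, `build_memory`, `heartbeat_unchecked` and `heartbeat_checked` are invented for the illustration, and a Python byte string only stands in for the server's memory.

```python
# Toy model of a heartbeat handler. This is NOT OpenSSL code.
# A byte string stands in for server memory, where the request payload
# happens to sit right in front of unrelated private data.

SECRET = b"user=alice;password=hunter2"  # hypothetical neighbouring data


def build_memory(payload: bytes) -> bytes:
    """Lay the payload out directly in front of the secret."""
    return payload + SECRET


def heartbeat_unchecked(payload: bytes, claimed_len: int) -> bytes:
    """Echo back claimed_len bytes, trusting the length the client sent."""
    memory = build_memory(payload)
    return memory[:claimed_len]  # can run past the end of the payload


def heartbeat_checked(payload: bytes, claimed_len: int) -> bytes:
    """Drop requests whose claimed length exceeds the real payload."""
    if claimed_len > len(payload):
        return b""  # malformed request: answer with nothing
    memory = build_memory(payload)
    return memory[:claimed_len]


if __name__ == "__main__":
    # The client sends 4 bytes but claims to have sent 20.
    print(heartbeat_unchecked(b"ping", 20))  # echoes payload plus leaked bytes
    print(heartbeat_checked(b"ping", 20))    # echoes nothing
```

The single comparison in `heartbeat_checked` is the kind of check that an automated "inspector" of the sort the article calls for would be expected to insist on.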

Sunday, December 14. 2014

I&IC workshop #3 at ECAL: output > Networked Data Objects & Devices | #data #things

Via iiclouds.org

-----

The third workshop we ran in the frame of I&IC with our guest researcher Matthew Plummer-Fernandez (Goldsmiths University) and the 2nd & 3rd year students (Ba) in Media & Interaction Design (ECAL) ended last Friday (| rblg note: on the 21st of Nov.) with interesting results. The workshop focused on small situated computing technologies that could collect, aggregate and/or “manipulate” data in automated ways (bots) and which would certainly need to rely heavily on cloud technologies due to their low storage and computing capacities. These are, so to speak, “networked data objects” that will soon become very common, thanks to cheap new small computing devices (e.g. Raspberry Pis for DIY applications) or sensors (e.g. Arduino). The title of the workshop was “Botcave”, whose objective was explained by Matthew in a previous post.

The choice of this work context was made according to our overall research objective, even though we knew that it wouldn’t directly address the “cloud computing” apparatus (something we learned to be a difficult approach during the second workshop), but that it would nonetheless question its interfaces and the way we experience the whole service, especially the evolution of this apparatus through new types of everyday interactions and data generation.

Matthew Plummer-Fernandez (#Algopop) during the final presentation at the end of the research workshop.

Through this workshop, Matthew and the students definitely raised the following points and questions:

1° Small situated technologies that will soon spread everywhere will become heavy users of cloud-based computing and data storage, as they have low storage and computing capacities. While they might just use and manipulate existing data (like some of the workshop projects, e.g. #Good vs. #Evil or Moody Printer), taken together they will mainly contribute to producing very large additional quantities of it (e.g. Robinson Miner). Yet the amount of meaningful data to be “pushed” and “treated” in the cloud remains a big question mark, as there will be huge (too huge) amounts of such data. Lucien will probably post something later about this subject (“fog computing”). This might end up requiring interdisciplinary teams to rethink cloud architectures.

2° Stored data become “alive” or significant only when “manipulated”. This can be done by “analog users” of course, but in general it is now rather operated by rules and algorithms of different sorts (in the frame of this workshop: automated bots). Are these rules “situated” as well and possibly context aware (context intelligent), as in Robinson Miner? Or are they somehow more abstract and located anywhere in the cloud? Both?

3° These “Networked Data Objects” (and soon “Network Data Everything”) will contribute to “babelize” users’ interactions and interfaces in all directions, paving the way for new types of combinations and experiences (creolization processes). The Beast, The Like Hotline, Simon Coins and The Wifi Cracker could be considered as starting phases of such processes. Cloud interfaces and computing will then become everyday “things” and, at home, new domestic objects with which we’ll have totally different interactions (this last point must still be discussed though, as domesticity might not exist anymore according to Space Caviar).

Moody Printer – (Alexia Léchot, Benjamin Botros)

Moody Printer remains a basic conceptual proposal at this stage, in which a hacked printer, connected to a hidden Raspberry Pi (it would be located inside the printer), has access to weather information. As with human beings, its “mood” can be affected by such inputs following some basic rules (good – bad, hot – cold, sunny – cloudy – rainy, etc.). The automated process then searches Google Images according to its defined “mood” (a direct link between “mood”, weather conditions and an exhaustive list of words) and autonomously starts printing the results.

A different kind of printer combined with weather monitoring.

The Beast – (Nicolas Nahornyj)

Top: Nicolas Nahornyj is presenting his project to the assembly. Bottom: the laptop and “the beast”.

The Beast is a device that asks to be fed with money at random times… It is your new laptop companion. To calm it down for a while, you must insert a coin in the slot provided for that purpose. If you don’t comply, not only will it continue to ask for money more and more frequently, but it will also randomly pick an image lying around on your hard drive, post it on a popular social network (e.g. Facebook, Pinterest, etc.) and then erase this image from your local disk. Slowly, The Beast will remove all images from your hard drive and post them online…

A different kind of slot machine combined with private file theft.

Robinson – (Anne-Sophie Bazard, Jonas Lacôte, Pierre-Xavier Puissant)

Top: Pierre-Xavier Puissant is looking at the autonomous “minecrafting” of his bot. Bottom: the proposed bot container, which takes up the idea of cubic construction. It could be placed in your garden, in one of your rooms, then in your fridge, etc.

Robinson automates the procedural construction of Minecraft environments. To do so, the bot uses local weather information monitored by a weather sensor located inside the cubic box and attached to a Raspberry Pi that sits within the box as well. This sensor looks for changes in temperature, humidity, etc., which then serve to change the building blocks and construction rules inside Minecraft (put your cube inside your fridge and it will start to build icy blocks, put it in a wet environment and it will construct with grass, etc.).

A different kind of thermometer combined with a construction game.
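
The sensor-to-block rule described for Robinson can be sketched in a few lines of Python. This is only an illustration of the idea, not the students' code: the function name `choose_block`, the thresholds and the default "stone" block are all assumptions, and no real sensor or Minecraft API is called.

```python
# Hypothetical thresholds; the workshop write-up does not give actual values.
COLD_LIMIT_C = 5.0      # at or below this temperature, build with ice
WET_LIMIT_PCT = 70.0    # at or above this humidity, build with grass


def choose_block(temperature_c: float, humidity_pct: float) -> str:
    """Map one sensor reading to the block type the bot should build with."""
    if temperature_c <= COLD_LIMIT_C:
        return "ice"      # the cube is in the fridge
    if humidity_pct >= WET_LIMIT_PCT:
        return "grass"    # the cube is in a wet environment
    return "stone"        # assumed default for everything else


if __name__ == "__main__":
    for reading in [(3.0, 40.0), (21.0, 85.0), (21.0, 40.0)]:
        print(reading, "->", choose_block(*reading))
```

Temperature is tested first here, so a cold and wet box still yields ice; that ordering is a design choice of the sketch and nothing more.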

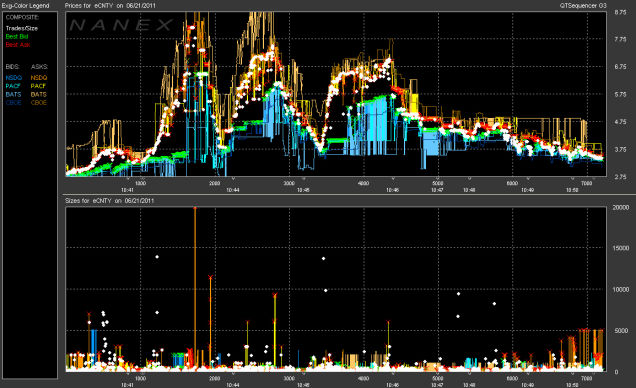

Note: Matthew Plummer-Fernandez also produced two (auto)Minecraft bots during the workshop week. The first one builds an environment according to fluctuations in different market indexes, while the second one tries to build “shapes” to escape this first environment. These two bots are downloadable from the GitHub repository that was created during the workshop.

#Good vs. #Evil – (Maxime Castelli)

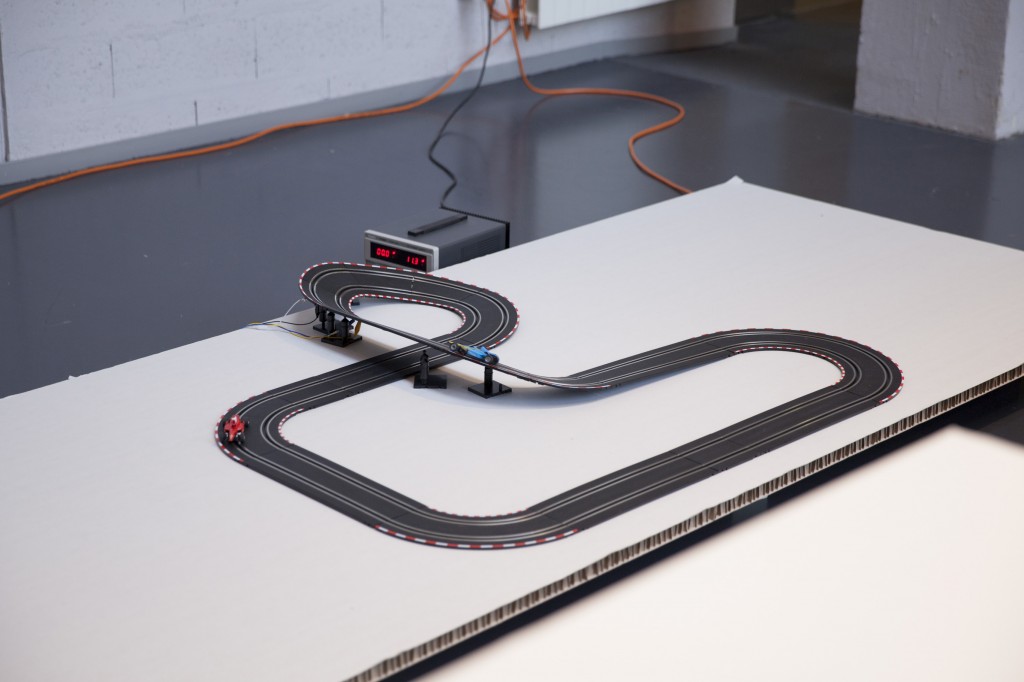

Top: a transformed car racing game. Bottom: a race is going on between two Twitter hashtags, materialized by two cars.

#Good vs. #Evil is quite a straightforward project. It is also a hack of an existing game with two racing cars. In this case, the bot counts occurrences of two hashtags on Twitter: #Good and #Evil. At each new occurrence of one or the other word, the device sends an electric impulse to the associated car. The result is a slow and perpetual car race between “good” and “evil”, driven by the occurrences of their hashtags online.

A different kind of data visualization combined with racing cars.
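
The counting logic behind the race can be sketched as follows. This is a hedged illustration only: it works on a plain list of strings instead of the Twitter API, and the name `count_pulses` and the way pulses are returned are assumptions and not taken from the project.

```python
import re
from typing import Dict, Iterable

HASHTAGS = ("#good", "#evil")  # one hashtag per car


def count_pulses(tweets: Iterable[str]) -> Dict[str, int]:
    """Count how many impulses each car should receive.

    Every occurrence of a tracked hashtag (case-insensitive, whole tag
    only) is worth one impulse for the matching car.
    """
    pulses = {tag: 0 for tag in HASHTAGS}
    for text in tweets:
        # Extract complete hashtags so "#goodness" does not count as "#good".
        for tag in re.findall(r"#\w+", text.lower()):
            if tag in pulses:
                pulses[tag] += 1
    return pulses


if __name__ == "__main__":
    sample = ["#Good morning", "pure #evil and #EVIL", "#goodness gracious"]
    print(count_pulses(sample))
```

In the physical device each count would presumably trigger the car's motor once; here the totals are simply returned.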

The “Like” Hotline – (Mylène Dreyer, Caroline Buttet, Guillaume Cerdeira)

Top: Caroline Buttet and Mylène Dreyer are explaining their project. The laptop screen, which shows a Facebook account, is projected at the far left of the image. Bottom: Caroline Buttet is using a hacked phone to “like” pages.

The “Like” Hotline proposes to hack a regular phone and install a hotline bot on it. Connected to its own Facebook account, which follows a few personalities and the posts they make, the bot asks the caller questions, which can be answered using the phone keypad. After navigating through a few choices, the hotline bot helps you like a post on the social network.

A different kind of hotline combined with a social network.

Simoncoin – (Romain Cazier)

Top: Romain Cazier introducing his “coin” project. Bottom: the device combines an old “Simon” memory game with the production of digital coins.

Simoncoin was unfortunately not finished by the end of the workshop week, but it was thought out in great detail, which would take too long to explain in this short presentation. The main idea was to use the game logic of Simon to generate coins. In parallel with Bitcoins, which are harder and harder to mine, Simon Coins also become more and more difficult to generate due to the game logic.

Another different kind of money combined with a memory game.

The Wifi Cracker – (Bastien Girshig, Martin Hertig)

Top: Bastien Girshig and Martin Hertig (left of Matthew Plummer-Fernandez) presenting. Middle and Bottom: the wifi password cracker slowly displays the letters of the wifi password.

The Wifi Cracker is an object that you can leave on its own in a space. At a glance it looks a little bit like a clock, but it won’t display the time. Instead, it will look for available wifi networks in the area and start trying to find their passwords (Bastien and Martin found a ready-made process for that). The bot will test all possible combinations, which will take time. Once the device has found the working password, it will use its round display to transmit it, letter by letter and slowly as well.

A different kind of cuckoo clock combined with a password cracker.

Acknowledgments:

Many thanks to Matthew Plummer-Fernandez for his involvement and great workshop direction; Lucien Langton for his involvement, technical digging into Raspberry Pis, pictures and documentation; Nicolas Nova and Charles Chalas (from HEAD) as well as Christophe Guignard, Christian Babski and Alain Bellet for taking part or helping during the final presentation. Special thanks to the students from ECAL involved in the project for the energy they’ve put into it: Anne-Sophie Bazard, Benjamin Botros, Maxime Castelli, Romain Cazier, Guillaume Cerdeira, Mylène Dreyer, Bastien Girshig, Jonas Lacôte, Alexia Léchot, Nicolas Nahornyj, Pierre-Xavier Puissant.

From left to right: Bastien Girshig, Martin Hertig (The Wifi Cracker project), Nicolas Nova, Matthew Plummer-Fernandez (#Algopop), a “mystery girl”, Christian Babski (in the background), Patrick Keller, Sebastian Vargas, Pierre-Xavier Puissant (Robinson Miner), Alain Bellet and Lucien Langton (taking the pictures…) during the final presentation on Friday.

Friday, November 21. 2014

Botcaves on #Github | #iiclouds #bots #code

Via iiclouds.org

-----

Note: a message from Matthew on Tuesday about his ongoing I&IC workshop. More resources to come there by the end of the week, as students are looking into many different directions!

I’ve started a github repository for the workshop so I can post code and tips there.

Please share with the students: https://github.com/plummerfernandez/botcaves/

Tuesday, October 07. 2014

A Dating Site for Algorithms | #code

Note: the title of the post might lead us to think that this is a place where algorithms could date each other... (so not for humans). That is not really the case: it is "just" a place where you can go and dig for unused algorithms. Interesting too, though. But I must admit that I first reblogged this post because of its title...

-----

A startup called Algorithmia wants to connect underused algorithms with those who want to make sense of data.

By Rachel Metz

A startup called Algorithmia has a new twist on online matchmaking. Its website is a place for businesses with piles of data to find researchers with a dreamboat algorithm that could extract insights–and profits–from it all.

The aim is to make better use of the many algorithms that are developed in academia but then languish after being published in research papers, says cofounder Diego Oppenheimer. Many have the potential to help companies sort through and make sense of the data they collect from customers or on the Web at large. If Algorithmia makes a fruitful match, a researcher is paid a fee for the algorithm’s use, and the matchmaker takes a small cut. The site is currently in a private beta test with users including academics, students, and some businesses, but Oppenheimer says it already has some paying customers and should open to more users in a public test by the end of the year.

“Algorithms solve a problem. So when you have a collection of algorithms, you essentially have a collection of problem-solving things,” says Oppenheimer, who previously worked on data-analysis features for the Excel team at Microsoft.

Oppenheimer and cofounder Kenny Daniel, a former graduate student at USC who studied artificial intelligence, began working on the site full time late last year. The company raised $2.4 million in seed funding earlier this month from Madrona Venture Group and others, including angel investor Oren Etzioni, the CEO of the Allen Institute for Artificial Intelligence and a computer science professor at the University of Washington.

Etzioni says that many good ideas are essentially wasted in papers presented at computer science conferences and in journals. “Most of them have an algorithm and software associated with them, and the problem is very few people will find them and almost nobody will use them,” he says.

One reason is that academic papers are written for other academics, so people from industry can’t easily discover their ideas, says Etzioni. Even if a company does find an idea it likes, it takes time and money to interpret the academic write-up and turn it into something testable.

To change this, Algorithmia requires algorithms submitted to its site to use a standardized application programming interface that makes them easier to use and compare. Oppenheimer says some of the algorithms currently looking for love could be used for machine learning, extracting meaning from text, and planning routes within things like maps and video games.

Early users of the site have found algorithms to do jobs such as extracting data from receipts so they can be automatically categorized. Over time the company expects around 10 percent of users to contribute their own algorithms. Developers can decide whether they want to offer their algorithms free or set a price.

All algorithms on Algorithmia’s platform are live, Oppenheimer says, so users can immediately use them, see results, and try out other algorithms at the same time.

The site lets users vote and comment on the utility of different algorithms and shows how many times each has been used. Algorithmia encourages developers to let others see the code behind their algorithms so they can spot errors or ways to improve on their efficiency.

One potential challenge is that it’s not always clear who owns the intellectual property for an algorithm developed by a professor or graduate student at a university. Oppenheimer says it varies from school to school, though he notes that several make theirs open source. Algorithmia itself takes no ownership stake in the algorithms posted on the site.

Eventually, Etzioni believes, Algorithmia can go further than just matching up buyers and sellers as its collection of algorithms grows. He envisions it leading to a new, faster way to compose software, in which developers join together many different algorithms from the selection on offer.


Friday, September 05. 2014

Skeuo Office ? | #nothankyou

A little bit of irony about skeuomorphism by Studio Moniker in their video for the "office of the future". It looks like the real offices of Google though, according to the many pictures that have populated the web about their offices interior design... I hope Google glasses don't make you see the world that way btw.

Via Moniker

-----

"In a world where workspaces need to be generic in order to accommodate multiple activities, Moniker and LRvH introduce skeuomorphism for the workspace.

Jump to synchronize the office floor with the kind of work you are doing!"

So, do you believe that Google employees also jump to synchronize their workspace?

Friday, July 25. 2014

Algorithmic creationism | #code #games

This algorithmic creationism game makes me think, to some extent, of the research led by philosophers, mathematicians and physicists to prove that our own everyday world would be (or wouldn't be) the result of an extra large simulation... Yet, funnily, even though this game world is announced as "algorithmically generated", planets populated by dinosaurs or similar creatures are still present, as is the dark emperor's cosmic fleet! There's probably some commercial determinism within their creationism rules...

At some point though, we could make the following comment: what is the fundamental difference between the use of algorithms to carve a digital world for a game (a computer generated simulation) and the practice of many contemporary architects who use similar (generative) algorithms to carve physical buildings to live in, not to mention all the other algorithms that structure our everyday life? If not by somebody else, we are creating our own simulation, so to say.

-----

No Man’s Sky: A Vast Game Crafted by Algorithms

A new computer game, No Man’s Sky, demonstrates a new way to build computer games filled with diverse flora and fauna.

By Simon Parkin

The quality of the light on any one particular planet will depend on the color of its solar system’s sun.

Sean Murray, one of the creators of the computer game No Man’s Sky, can’t guarantee that the virtual universe he is building is infinite, but he’s certain that, if it isn’t, nobody will ever find out. “If you were to visit one virtual planet every second,” he says, “then our own sun will have died before you’d have seen them all.”

No Man’s Sky is a video game quite unlike any other. Developed for Sony’s PlayStation 4 by an improbably small team (the original four-person crew has grown only to 10 in recent months) at Hello Games, an independent studio in the south of England, it’s a game that presents a traversable universe in which every rock, flower, tree, creature, and planet has been “procedurally generated” to create a vast and diverse play area.

“We are attempting to do things that haven’t been done before,” says Murray. “No game has made it possible to fly down to a planet, and for it to be planet-sized, and feature life, ecology, lakes, caves, waterfalls, and canyons, then seamlessly fly up through the stratosphere and take to space again. It’s a tremendous challenge.”

Procedural generation, whereby a game’s landscape is generated not by an artist’s pen but an algorithm, is increasingly prevalent in video games. Most famously Minecraft creates a unique world for each of its players, randomly arranging rocks and lakes from a limited palette of bricks whenever someone begins a new game (see “The Secret to a Video Game Phenomenon”). But No Man’s Sky is far more complex and sophisticated. The tens of millions of planets that comprise the universe are all unique. Each is generated when a player discovers it, and is subject to the laws of its respective solar systems and vulnerable to natural erosion. The multitude of creatures that inhabit the universe dynamically breed and genetically mutate as time progresses. This is virtual world building on an unprecedented scale (see video below).

This presents numerous technological challenges, not least of which is how to test a universe of such scale during its development – the team is currently using virtual testers—automated bots that wander around taking screenshots which are then sent back to the team for viewing. Additionally, while No Man’s Sky might have an infinite-sized universe, there aren’t an infinite number of players. To avoid the problem of a kind of virtual loneliness, where a player might never encounter another person on his or her travels, the game starts every new player in the same galaxy (albeit on his or her own planet) with a shared initial goal of traveling to its center. Later in the game, players can meet up, fight, trade, mine, and explore. “Ultimately we don’t know whether people will work, congregate, or disperse,” Murray says. “I know players don’t like to be told that we don’t know what will happen, but that’s what is exciting to us: the game is a vast experiment.”

The game also bears the weight of unrivaled expectation. At the E3 video game conference in Los Angeles in June, no other game met with such applause. It is the game of many childhood science fiction dreams. For Murray, that is truer than for most. He was born in Ireland, but the family lived on a farm in the Australian outback, away from civilization. “At night you could see the vastness of space,” he says. “Meanwhile, we were responsible for our own electricity and survival. We were completely cut off. It had an impact on me that I carry through life.”

Murray formed Hello Games in 2009 with three friends, all of whom had previously worked at major studios. Hello Games’ first title, Joe Danger, let players control a stuntman. The game was, according to Murray, “annoyingly successful” in the sense that it locked him and his friends into a cycle of sequels that they had formed the company to escape. During the next few years the team made four Joe Danger games for seven different platforms. “Then I had a midlife game development crisis,” says Murray. “It changes your mindset when a single game’s development represents a significant chunk of life.”

Murray decided it was time to embark upon the game he’d imagined as a child, a game about frontiership and existence on the edge of the unexplored. “We talked about the feeling of landing on a planet and effectively being the first person to discover it, not knowing what was out there,” he says. “In this era in which footage of every game is recorded and uploaded to YouTube, we wanted a game where, even if you watched every video, it still wouldn’t be spoiled for you.”

When players discover a new planet, climb that planet’s tallest peak, or identify a new species of plant or animal, they are able to upload the discovery to the game’s servers, their name forever associated with the location, like a digital Christopher Columbus or Neil Armstrong. “Players will even be able to mark the planet as toxic or radioactive, or indicate what kind of life is there, and that then appears on everyone’s map,” says Murray.

Experimentation has been a watchword throughout the game’s production. Originally the game was entirely randomly generated. “Only around 1 percent of the time would it create something that looked natural, interesting, and pleasing to the eye; the rest of the time it was a mess and, in some cases where the sky, the water, and the terrain were all the same color, unplayable,” Murray says. So the team began to create simple rules, “such as the distance from a sun at which it is likely that there will be moisture,” he explains. “From that we decide there will be rivers, lakes, erosion, and weather, all of which is dependent on what the liquid is made from. The color of the water in the atmosphere will derive from what the liquid is; we model the refractions to give you a modeled atmosphere.”

Similarly, the quality of light will depend on whether the solar system has a yellow sun or, for example, a red giant or red dwarf. “These are simple rules, but combined they produce something that seems natural, recognizable to our eyes. We have come from a place where everything was random and messy to something which is procedural and emergent, but still pleasingly chaotic in the mathematical sense. Things happen with cause and effect, but they are unpredictable for us.”
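To make the idea of "simple rules" concrete, here is a minimal Python sketch of rule-based, seeded generation. It is only an illustration of the general technique, not Hello Games' actual code: the star types, liquids, colors, distance range, and the `generate_planet` function are all hypothetical names and values chosen for the example.

```python
import random
from dataclasses import dataclass
from typing import Optional, Tuple

# Hypothetical star types and the quality of light each one gives (assumed values).
STAR_LIGHT = {"yellow sun": "warm white", "red giant": "deep orange", "red dwarf": "dim red"}

# Hypothetical liquids and the color each lends to water and atmosphere (assumed values).
LIQUID_COLOR = {"water": "blue", "methane": "amber", "ammonia": "pale green"}

# Assumed distance band (in arbitrary units) in which moisture is considered likely.
MOIST_MIN, MOIST_MAX = 0.7, 2.0


@dataclass(frozen=True)
class Planet:
    star: str
    distance: float
    has_moisture: bool
    liquid: Optional[str]
    features: Tuple[str, ...]
    sky_color: str
    light: str


def generate_planet(x: int, y: int, z: int) -> Planet:
    """Derive a planet from its coordinates; the same coordinates always give the same planet."""
    # Seeding with the coordinates keeps the random inputs reproducible.
    rng = random.Random(f"{x}:{y}:{z}")
    star = rng.choice(sorted(STAR_LIGHT))
    distance = round(rng.uniform(0.1, 5.0), 2)

    # Rule 1: moisture is only likely within a certain distance band from the sun.
    has_moisture = MOIST_MIN <= distance <= MOIST_MAX

    if has_moisture:
        # Rule 2: moisture implies rivers, lakes, erosion, and weather,
        # and the liquid determines the color of the atmosphere.
        liquid = rng.choice(sorted(LIQUID_COLOR))
        features = ("rivers", "lakes", "erosion", "weather")
        sky_color = LIQUID_COLOR[liquid]
    else:
        liquid = None
        features = ()
        sky_color = "grey"

    # Rule 3: the quality of light depends on the type of star.
    return Planet(star, distance, has_moisture, liquid, features, sky_color, STAR_LIGHT[star])


if __name__ == "__main__":
    print(generate_planet(12, -4, 7))
```

The random draws supply the inputs, but everything visible about the planet follows from the rules, so no two properties can contradict each other the way they could in a purely random world.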

At the blockbuster studios in which he once worked, 300-person teams would have to build content from scratch. Now, thanks to the increased power of PCs and video game consoles, a relatively tiny team is able to create unimaginable scope. In this sense, Hello Games may be on the cusp not only of a new universe, but also of an entirely new way of creating games. “When I look at game development in general I think the cost of creating content is the real problem,” he says. “The sheer amount of assets that artists must build to furnish a world is what forces so many safe creative bets. Likewise, you can’t have 300 people working experimentally. Game development is often more like building a skyscraper that has form and definition but is ultimately quite similar to what is around it. It never sat right with me to be in a huge warehouse with hundreds of people making a game. That is not the way it should be—and now it doesn’t have to be.”

Tuesday, June 03. 2014

The 10 Algorithms That Dominate Our World | #conditions #algorithms

Note: not a very surprising list, and not one that goes deep into algorithms per se (it rather covers services based on a mix of algorithms), but interesting nonetheless!

Via io9

-----

The importance of algorithms in our lives today cannot be overstated. They are used virtually everywhere, from financial institutions to dating sites. But some algorithms shape and control our world more than others — and these ten are the most significant.

Just a quick refresher before we get started. Though there's no formal definition, computer scientists describe algorithms as a set of rules that define a sequence of operations. They're a series of instructions that tell a computer how it's supposed to solve a problem or achieve a certain goal. A good way to think of algorithms is by visualizing a flowchart.
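As a small illustration of "a set of rules that define a sequence of operations", here is Euclid's algorithm for the greatest common divisor, written in Python. The choice of example is ours and does not come from the io9 article.

```python
def gcd(a: int, b: int) -> int:
    """Greatest common divisor of two non-negative integers (Euclid's algorithm)."""
    # Rule: replace the pair (a, b) with (b, a mod b) until b reaches zero.
    while b != 0:
        a, b = b, a % b
    # When b is zero, a holds the greatest common divisor.
    return a


if __name__ == "__main__":
    print(gcd(48, 18))  # prints 6
```

Each pass through the loop is one box in the flowchart: test whether `b` is zero, and if not, apply the same rule again.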

(...)

More about it HERE.

Friday, April 25. 2014

ECAL students create bizarre smart home objects in Milan | #smart?

Before you start reading, let me add a missing piece of information: the projects were developed over a full semester by second-year bachelor students at ECAL, under the direction of Profs. Chris Kabel (product design) and Alain Bellet (interaction design).

Via It's Nice That

-----

By Rob Alderson

Léa Pereyre, Claire Pondard, Tom Zambaz: Mr Time (Image By ECAL/Axel Crettenand & Sylvain Aebischer)

It’s laudable that designers are working on worthy projects that will have a practical impact on building a better future, but we’re big believers that creatives should be engaged in making tomorrow a bit more fun too. Luckily for us, there are institutions like the Ecole cantonale d’art de Lausanne (ECAL).

At this year’s Milan Salone, ECAL’s Industrial Design and Media & Interaction Design students unveiled a series of weird and wonderful objects that presented “a playful interpretation take on the concept of the smart home.” These included a clock that mimics the gestures of those looking at it, cacti that respond musically to being caressed, a pair of chairs one of which reacts to the movements of the sitter in the other, a tea spoon that won’t be separated from its mug and a fan that is powered by the amplified breath of the homeowner.

It’s fair to say that some of these creations are completely impractical, but they all raise questions about our future interaction with household objects and they do so in the quirkiest way possible.

Thursday, April 24. 2014

Les-tuh skwair | #algorithm

-----

“As algorithmic systems become more prevalent, I’ve begun to notice a variety of emergent behaviors evolving to work around these constraints, to deal with the insufficiency of these black box systems…The first behavior is adaptation. These are situations where I bend to the system’s will. For example, adaptations to the shortcomings of voice UI systems — mispronouncing a friend’s name to get my phone to call them; overenunciating; or speaking in a different accent because of the cultural assumptions built into voice recognition. We see people contort their behavior to perform for the system so that it responds optimally.”

Alexis Lloyd (NYTimes R&D) shares some interesting views under the title In the Loop: Designing Conversations with Algorithms.

fabric | rblg

This blog is the survey website of fabric | ch - studio for architecture, interaction and research.

We curate and reblog articles, researches, writings, exhibitions and projects that we notice and find interesting during our everyday practice and readings.

Most articles concern the intertwined fields of architecture, territory, art, interaction design, thinking and science. From time to time, we also publish documentation about our own work and research, immersed among these related resources and inspirations.

This website is used by fabric | ch as archive, references and resources. It is shared with all those interested in the same topics as we are, in the hope that they will also find valuable references and content in it.
