Via Frieze

-----

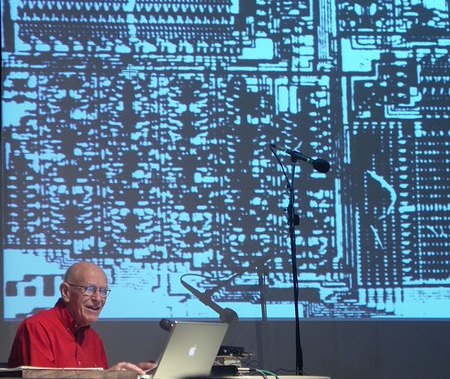

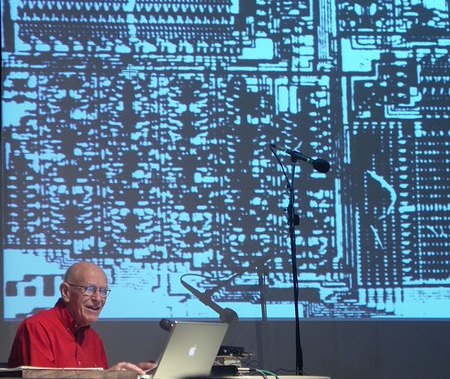

Max Mathews on his 80th birthday (2007)

Max Mathews, known as the father of computer music, passed away last month at the age of 84. In this interview, conducted at his home in San Francisco three weeks before his death, Mathews discusses his life and work – an incredible career that included blazing the trail for digital sound synthesis in the 1950s; directing acoustics and behavioural research at Bell Labs from 1962 to 1985; pioneering computer-based live performance in the 1970s; and collaborating with John Cage, Edgard Varèse, and many other noted artists and composers.

Geeta Dayal: How did you get started working with computers?

Max Mathews: I was in love with computers. When I went to MIT [in 1950], I worked in the summer in the Bureau of Standards on an analogue computer. In addition to taking courses at MIT, I worked in the Dynamic Analysis and Control Laboratory, which was a big analogue computer. The digital computer at MIT in those days was called Whirlwind; it was very interesting but hadn’t, in my opinion, yet developed to an extent that it could solve interesting problems for the world. The analogue computers were much further developed, and were doing that. So that’s why I went in that direction.

The Dynamic Analysis and Control Laboratory developed from a wartime analogue computer called a differential analyzer, which was a brainchild of Vannevar Bush. We studied missile control systems for anti-aircraft missiles, since that was a big danger at the time. I learned how to do numerical integration, servomechanisms – many things that have been useful to me in my entire life. By my very good fortune, I got a job at the Bell Telephone Laboratories in acoustic research when I got my degree [a doctorate in electrical engineering, in 1954]. That was a time when digital computers were just beginning to be useful. And Bell Laboratories had lots of money; they had an early IBM computer – an IBM 650. They were working on speech coding to transmit more telephone channels over expensive long-distance facilities, like the undersea cable to Europe.

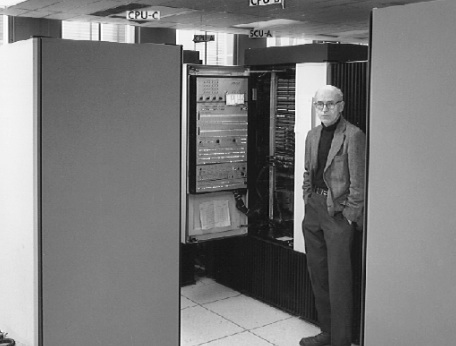

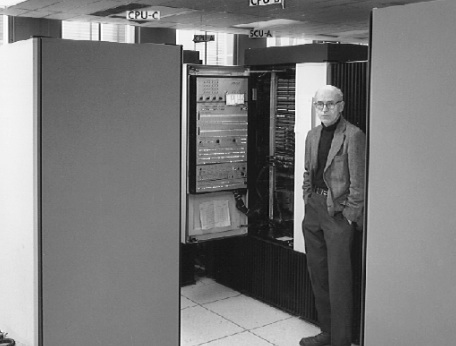

Max Mathews in IBM 7094 (1965)

It was a very expensive, slow and discouraging process, because speech is so complex. So people – not I, actually – would work for two or three years on a speech compressor, or encoder, and then they would try it out and you couldn’t understand it. So they would tear it up and start over again. I thought the digital computer had now developed to the point that, if I could put speech into the computer, we could programme a speech coder in a few months, and then tear it up and start over again. That turned out to be a very successful research process. As a matter of fact, today it has turned out to be the universal communication process; almost all communications signals are encoded digitally today. Claude Shannon and his information theory showed that you could transmit digital signals essentially error-free, provided the information rate in the signal was less than the capacity of the channel you had. That was an existence proof, but other people came along and showed how to do it. The way it’s done today is with error-correcting codes: you digitize the signal you want and you send quite a few extra digits that will correct any errors in the communication signal.
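Mathews’s description of error-correcting codes, sending extra digits so the receiver can repair errors, can be illustrated with the textbook Hamming(7,4) code, which adds three check bits to every four data bits and corrects any single flipped bit. This is a minimal sketch of one classic code, assumed purely for illustration; it is not the scheme used in any particular Bell Labs or modern system, and the function names are hypothetical.

```python
# Hamming(7,4): 4 data bits + 3 parity bits; corrects any single flipped bit.
# Illustrative only, not the coding scheme of any specific telephone system.

def hamming74_encode(d: list[int]) -> list[int]:
    """Encode 4 data bits as 7 bits, laid out as positions 1..7 = p1 p2 d1 p3 d2 d3 d4."""
    d1, d2, d3, d4 = d
    p1 = d1 ^ d2 ^ d4  # parity over positions 1, 3, 5, 7
    p2 = d1 ^ d3 ^ d4  # parity over positions 2, 3, 6, 7
    p3 = d2 ^ d3 ^ d4  # parity over positions 4, 5, 6, 7
    return [p1, p2, d1, p3, d2, d3, d4]


def hamming74_decode(c: list[int]) -> list[int]:
    """Correct at most one bit error, then return the 4 data bits."""
    c = list(c)
    s1 = c[0] ^ c[2] ^ c[4] ^ c[6]
    s2 = c[1] ^ c[2] ^ c[5] ^ c[6]
    s3 = c[3] ^ c[4] ^ c[5] ^ c[6]
    syndrome = s1 + 2 * s2 + 4 * s3  # 1-based position of the bad bit, 0 if none
    if syndrome:
        c[syndrome - 1] ^= 1
    return [c[2], c[4], c[5], c[6]]


# Usage: flip one bit "on the channel" and recover the original data.
sent = hamming74_encode([1, 0, 1, 1])
received = list(sent)
received[5] ^= 1
print(hamming74_decode(received))  # [1, 0, 1, 1]
```

The three parity checks overlap so that each of the seven positions fails a unique combination of them; that combination, read as a binary number, names the position to flip back.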

GD: Did you know Vannevar Bush?

MM: I never knew him at all. He had retired by the time I got to MIT, but his fame and his influence were still very strong. I did know Jerry Wiesner, and he was a great man and affected many people’s lives, including mine. At that time he was in charge of the radiation laboratory, which was in Building 20, which you probably remember.

GD: Yes. I took my music classes in Building 20, when I was a student at MIT.

MM: It was a great place. I worked there – that was where my Dynamic Analysis and Control Laboratory was. That’s where my wife worked as a food technologist; that’s where Jerry Lettvin ended up working. We were all sad when progress came along and that building got swept away.

GD: Have you read this book by Stewart Brand, called How Buildings Learn: What Happens After They’re Built (1994)? He talks about how Building 20’s temporary construction and ramshackle nature encouraged people to experiment and try new things.

MM: To build something that didn’t fit in a normal room, you knocked the wall down and built something that would fit! That’s what you need for experimentation. You don’t need something nice and neat and unchangeable. Building 20 was a wonderful place – no question about that.

GD: So going back to the dawn of computer music – can you talk about your forays into the area in the 1950s? I read that in the early 1950s, Australia was doing some stuff with computer-based music.

MM: That’s right. They may be the first. Well, it’s always impossible and dangerous to claim the first. But very early digitally synthesized music was done on an Australian computer, and it was fairly well written up. Unfortunately, they never recorded any of it, so we can’t hear the original stuff. But there was a computer in England, a Ferranti computer, and the BBC brought one of their phonograph record recorders in, and did record some of the computer music from that … I believe that may well be the oldest recording of computer-synthesized music. 1951. Anyway, I found ways of digitizing sound and putting it into a computer, and then doing things to it, taking it out of the computer again, and playing the processed sound. And actually, it was PCM [pulse code modulation] in those days, and the technique for digitizing sound was well known.

The crucial component to make this all work was the digital tape recorder. I don’t know if it was an IBM invention, but the practical machines came from IBM at that time. They had one computer in NYC that had their digital tape recorders. We built a digital tape recorder and a digital tape player at Bell Labs so we could record a sample of speech, take it to Madison Avenue, write our own programme, run down the stairs to the computer, which was costing US$300 an hour to use, stick the cards in the computer, maybe stick a reel of tape on the computer with the input sound, get the sound out, and take it back to the labs and reconvert it to speech.

We had to load the tape by hand. Punched paper tape was another input/output medium, which came before the magnetic tape recorder but wasn’t nearly as fast. The whole point was to use the tape recorder to speed up the output of the computer. We operated at 10,000 samples per second in those days, which gives you a maximum frequency bandwidth of 5,000 hertz. That’s the Nyquist frequency; it’s half the sampling rate. We had 12-bit samples, which gave a better signal-to-noise ratio than most analogue tape recorders of the time. We could run the computer all night, record the tape slowly, take it back to Bell Labs, play it back in real time, and listen to the sound. So that ushered in this era of research on acoustic sounds and speech.
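The two figures Mathews quotes can be checked with a little arithmetic. The Nyquist frequency is half the sampling rate, and a common rule of thumb puts the theoretical signal-to-noise ratio of an ideal N-bit uniform quantizer, for a full-scale sine wave, at about 6.02N + 1.76 dB. This sketch assumes that idealized model; it is an upper bound, and real converters and the surrounding equipment fall short of it. The constant and function names are hypothetical.

```python
import math

SAMPLE_RATE_HZ = 10_000  # sampling rate Mathews quotes
BITS = 12                # sample word length he quotes


def nyquist_hz(sample_rate_hz: float) -> float:
    """Highest frequency representable without aliasing: half the sampling rate."""
    return sample_rate_hz / 2


def ideal_quantization_snr_db(bits: int) -> float:
    """Theoretical SNR of a full-scale sine through an ideal N-bit uniform quantizer.

    Equals 20*log10(2**bits) + 10*log10(1.5), i.e. about 6.02*bits + 1.76 dB.
    """
    return 20 * math.log10(2 ** bits) + 10 * math.log10(1.5)


print(nyquist_hz(SAMPLE_RATE_HZ))                 # 5000.0
print(round(ideal_quantization_snr_db(BITS), 1))  # 74.0
```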

My boss John Pierce also played the piano and loved music. We were going to a concert, and in the intermission he said, ‘Max, if you can get sound out of a computer, could you write a programme to synthesize music on a computer?’ I thought I could, and John encouraged me to take a little time off from speech coding and write a music programme. So this led to MUSIC 1 [a trailblazing programme for generating digital audio on a computer], which made sound. I don’t think the sound would be called music by almost anyone, but it did have a pitch, a scale, and notes, and things like that. This led to MUSIC 2, 3, 4 and 5, all of which I wrote. MUSIC 2 was written to get more than one voice out of the computer. MUSIC 1 only had one voice, and one wave shape, and one timbre. MUSIC 3 was my big breakthrough, because it was what was called a block diagram compiler, so that we could have little blocks of code that could do various things. One was a generalized oscillator … other blocks were filters, and mixers, and noise generators.

The crucial thing here is that I didn’t try to define the timbre and the instrument. I just gave the musician a tool bag of what I call unit generators, and he could connect them together to make instruments that would make beautiful musical timbres. I also had a way of writing a musical score in a computer file, so that you could, say, play a note at a given pitch at a given moment of time, and make it last for two and a half seconds, and you could make another note and generate rhythm patterns. This sort of caught on, and a whole bunch of the programmes in the United States were developed from that. Princeton had a programme called Music 4B, that was developed from my MUSIC 4 programme. And [the MIT professor] Barry Vercoe came to Princeton. At that time, IBM changed computers from the old 7094 to the IBM 360 computers, so Barry rewrote the MUSIC programme for the 360, which was no small job in those days. You had to write it in machine language.
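The unit-generator idea, small building blocks patched together into an instrument and driven by a score of timed notes, can be sketched in a few lines. This is a minimal illustration under stated assumptions: the function names (`table_oscillator`, `linear_envelope`, `instrument`, `render`), the table size, and the score tuple layout are all hypothetical, and none of it reproduces the syntax or code of any MUSIC programme.

```python
import math

SR = 10_000       # samples per second (assumed; the rate quoted above)
TABLE_SIZE = 512  # length of the stored waveform table (assumed)

# One cycle of a sine wave, stored once and read repeatedly by the oscillator.
SINE_TABLE = [math.sin(2 * math.pi * i / TABLE_SIZE) for i in range(TABLE_SIZE)]


def table_oscillator(freq_hz, amp, n_samples, table=SINE_TABLE):
    """Oscillator unit generator: truncating table lookup, no interpolation."""
    size = len(table)
    incr = freq_hz * size / SR  # table positions to advance per output sample
    phase = 0.0
    out = []
    for _ in range(n_samples):
        out.append(amp * table[int(phase) % size])
        phase = (phase + incr) % size
    return out


def linear_envelope(n_samples, attack):
    """Envelope unit generator: linear rise over `attack` samples, then linear fall."""
    env = []
    for i in range(n_samples):
        if i < attack:
            env.append(i / attack)
        else:
            env.append((n_samples - i) / (n_samples - attack))
    return env


def instrument(freq_hz, amp, dur_s):
    """An 'instrument': an envelope generator patched onto an oscillator's output."""
    n = int(dur_s * SR)
    tone = table_oscillator(freq_hz, amp, n)
    env = linear_envelope(n, attack=max(1, n // 10))
    return [t * e for t, e in zip(tone, env)]


def render(score, total_s):
    """Mix every note (start_s, dur_s, freq_hz, amp) of the score into one buffer."""
    out = [0.0] * int(total_s * SR)
    for start_s, dur_s, freq_hz, amp in score:
        first = int(start_s * SR)
        for i, s in enumerate(instrument(freq_hz, amp, dur_s)):
            if first + i < len(out):
                out[first + i] += s
    return out


# A hypothetical two-note score; the second note lasts two and a half seconds.
score = [
    (0.0, 1.0, 440.0, 0.5),
    (0.5, 2.5, 660.0, 0.3),
]
samples = render(score, total_s=3.0)
```

The design choice that matters is the separation: the oscillator and envelope know nothing about timbre, the instrument only wires them together, and the score only says when and at what pitch. Adding a filter or noise block would mean writing one more function and patching it in, not changing the others.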

As for the start of tape music in the western hemisphere, one pioneer was this fellow Vladimir Ussachevsky at Columbia University, and another studio was at the University of Michigan; those were the two earliest studios in the beginning of the 1950s. My first MUSIC 1 programme ran in 1957. The last programme I wrote, MUSIC 5, came out in 1967. That was my last programme, because I wrote it in FORTRAN. FORTRAN is still alive today, it’s still in very good health, so you can recompile it for the new generation of computers. Vercoe wrote it for the 360, and then when the 360 computers died, he rewrote another programme called MUSIC 11 for the PDP-11, and when that died he got smart, and he wrote a programme in the C language called Csound. That again is a compiler language and it’s still a living language; in fact, it’s the dominant language today. So he didn’t have to write any more programmes.

GD: Your boss actually encouraged you to take time off from work to write MUSIC? Bell Labs sounds like it was an amazing place.

MM: Bell Labs was a golden era. Golden for several things. One was that the research money to support it was gotten as a tax on the earnings or the profits of the telephone companies. We got it as a lump sum. The vice president in charge of research, William O. Baker, insisted that there be no strings attached to the money and that we could use it in the way we thought was best. So a lot of very important things were done with this support, or byproducts of things that were used in telephony. There were the radio telescopes, and the measurement of the background radiation with the very low-noise antennas that we developed that supported the Big Bang theory, and there was of course the transistor. And there were all sorts of speech codings that are still very important, and error correcting codes. The departments originally only hired Ph.D. physicists, mathematicians, and maybe a few chemists. Then they gradually let in some engineers. Throughout the research department, the position you held was member of staff – MTS, member of technical staff. That was the highest position in the research department! [laughs]

GD: I read a story that in the 1950s, when you were working at Bell Labs late at night, you would pipe your musical experiments through the whole intercom system at Bell Labs.

MM: [Smiles mysteriously] Well, that could be. Now, what about the history of music? Well, electronic music got started in Europe and came here. Computer music and digital synthesis got started here, and moved back to Europe. And the person who took it back, primarily, was Jean-Claude Risset, a Frenchman, who took a degree in physics at the Sorbonne. He persuaded his physics instructor to let him work at Bell Labs, at first for a year, and then he came back a couple of years later and stayed. Then he went back to France, and got the telephone company there to build a tape reader that would read digital tapes and convert them to sound. So he got computer music synthesis going in Europe. And this interested Pierre Boulez, who by that time was a very famous orchestra conductor, with an orchestra in Germany, the New York Philharmonic, and an orchestra in London.

Pompidou [who was now the president of France, in 1970] wanted him to come back and conduct an orchestra in Paris, and Boulez said he wasn’t very interested in that, but if Pompidou would make him a music research laboratory he would come back and run that. And so Pompidou said, ‘I’ll do anything you want – in fact, I’ll give you a little orchestra in addition to that’: an orchestra that specializes in modern music, the Ensemble Intercontemporain. The research lab was called IRCAM, and Risset was put in charge of one division of it. There were five divisions, one of them for computer music. Then computers got so powerful and so universal that all parts of IRCAM worked on computer music. And they still do.

Of course, computer music, along with everything else computer-wise, was swept along with the power of the computers, which increased unbelievably in the time between 1955 and today. The power increased somewhere between 10,000 and 100,000 times in terms of the speed of the computer, and memory size. And the price went down so that the IBM machine we started with – which was a room full of refrigerator-sized objects that you could walk through, with a raised floor, and a tangle of wires…

GD: …This was the IBM 704?

MM: The 704, 7094, 360 – a whole series of those things. The computation center computers, even the Cray supercomputers, these were all rooms full of things. Now this little [MacBook Pro] I bought for US$1700 is thousands of times faster than all those computers. And everyone else has them too.

GD: What’s your attitude about how difficult it was for you in the 1950s to make computer music, versus making computer music now? That the incredible constraints you had actually increased innovation? That perhaps there’s too much you can do, now?

MM: I’ve wondered about that. I do know that in the ’50s and ’60s, and even in the ’70s, the quality of computer music – how beautiful we could make the timbres – was limited by how much computing time we could afford to buy, and by the limited power of early computers. That is now no longer true. Almost every computer anyone owns has thousands of times more power than anyone knows how to use in a musically useful way. Musically useful means making things that really light up people’s pleasure centers, that they think are beautiful, or expressive. The limitations, and the domain of future progress, lie in better understanding how our brains work for music. Why we love music; what we love about it.

We knew at the beginning that the computer could make any sound the human ear could hear, and any timbre. That was not true of traditional instruments. The violin is certainly beautiful, but it will always sound like a violin. That can be very good, and it’s also limited. And the computer is not limited.

GD: What drew you to wanting to synthesize sound in the first place?

MM: I learned how to play violin when I was a kid. When I was a teenager, I learned that music was beautiful by listening to records.

GD: What were your favorite records?

MM: There was a listening room in Seattle when I was in the navy [during World War II]. When the war was over, I would go there. I listened to symphonies; Brahms was a favorite. One of the first vinyl records, which didn’t have all the hiss and crackle of shellac records, was Till Eulenspiegels lustige Streiche (1894–5). And I still remember that. The Richard Strauss story about the happy-go-lucky fellow who did a lot of bad things and was hanged in the end, but everyone loved him.

Music from Mathematics (c.1962)

GD: Didn’t you build a 50-channel mixer in 1964, for the New York Philharmonic and Leonard Bernstein? For a performance of John Cage’s Atlas Eclipticalis?

MM: [Laughs] Yes, it would have been in the 1960s, because Cage and Jim Tenney were the two conductors; they ran the mixer. The mixer did have roughly 50 input channels, one for each pair of musicians at a given music stand. It was an octopus of wires, and they all came into these two consoles with a lot of knobs to adjust the volumes, and to direct the sound to one or more of about a dozen loudspeakers which were positioned around Avery Fisher Hall. Cage wrote the music for the performers, and he and Tenney ran the mixer during the performance. Even by Cage’s fairly generous standards, it wasn’t what he had hoped for. He added a piano part, and I forget the name of his pianist for the piece [David Tudor], and my judgment was that Bernstein stayed as far away as he could get; he couldn’t stand it. And I was just as happy to have him stay away, to tell you the truth.

GD: Did you and Bernstein not get along?

MM: We didn’t get close enough to not get along. But if we had gotten any closer, I would have quit the project.

The instruments did not have contact microphones on them, and of course you don’t want to put a contact microphone on a Stradivarius. I’d encouraged the musicians to bring their second violins, or any old violin, instead of their best violins. I arranged for the contact mics to go on parts of the instrument that aren’t permanent, like the bridge, and had gone to quite a bit of trouble to be sure that the contact microphones could be put on the instruments without damaging them. I think most of the instrumentalists didn’t have any trouble with that. So I was really mad at Bernstein when he came in one morning and told the instrumentalists that if they didn’t want to use the mics, they didn’t have to. I think most of them went ahead and used the mics. And Bernstein didn’t come back again. It was a concert series; the piece was played on about four or five nights. Anyhow, it was fun to work with Cage, and it was fun to work with the orchestra, and it was fun to build this rather large mixer.

GD: How did you get involved with Cage, and this project?

MM: Let’s see, how did I get involved with Cage? I’ve forgotten, but we did get to be good friends, and I also wrote some computer programmes to simulate – he had this Chinese set of sticks he’d throw randomly, the I Ching or something like that.

GD: Yes, the I Ching.

MM: So I had a computer programme that would do that for him.

GD: What year was this?

MM: Oh, about 1970. He would come out to Bell Labs occasionally, and sometimes we’d go for a walk behind my house – there were woods back there, and we’d go look for mushrooms. He was a very friendly guy, and very resourceful. If something wasn’t working, he would revise the score, so that at least something could be done.

GD: This computer programme you wrote for Cage, what was it called? It was just for him – you didn’t release it?

MM: Yeah. I guess the I Ching put out not just one random number after another, but a group of three or four numbers, and so it would do that. And they were somewhat related. The details I’ve really forgotten.
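Mathews says he has forgotten how his programme worked, so nothing below reconstructs it. Purely to show the kind of computation involved, here is a sketch of the traditional three-coin method of consulting the I Ching: each of six lines is the sum of three coin values (2 or 3), so it comes out as 6, 7, 8 or 9, and the "moving" lines 6 and 9 change into their opposites to give a second hexagram. The coin values follow one common convention; the function names and the seed are hypothetical.

```python
import random

# Hypothetical illustration only: this simulates the three-coin method,
# not the Bell Labs programme Mathews wrote for Cage.

def cast_line(rng: random.Random) -> int:
    """Toss three coins (heads counted as 3, tails as 2) and sum them: 6, 7, 8 or 9."""
    return sum(rng.choice((2, 3)) for _ in range(3))


def cast_hexagram(rng: random.Random) -> list[int]:
    """Cast six lines, listed from the bottom of the hexagram upward."""
    return [cast_line(rng) for _ in range(6)]


def is_yang(value: int) -> bool:
    """Odd totals (7, 9) are solid yang lines; even totals (6, 8) are broken yin lines."""
    return value % 2 == 1


def changed(lines: list[int]) -> list[int]:
    """Moving lines flip (6 becomes a stable yang 7, 9 a stable yin 8); others stay."""
    return [{6: 7, 9: 8}.get(v, v) for v in lines]


rng = random.Random(1970)  # fixed seed so the cast can be repeated
first = cast_hexagram(rng)
second = changed(first)
print("cast:", first)
print("changed:", second)
```

Each line is built from a small group of related random draws rather than a single number, which is at least consistent with Mathews’s recollection of the programme producing "a group of three or four numbers" at a time.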

GD: What did you write the programme in?

MM: I think FORTRAN.

GD: I wrote a little book about Brian Eno [Another Green World, 2009], and over the course of researching the book, I read a lot about the I Ching. I bought a few books on it, and it’s very complicated. It’s fascinating to me that you were writing software based on it.

MM: I didn’t remember it being that complicated, but maybe Cage simplified it for me.

GD: So Cage would visit you at Bell Labs? What would Cage do there?

MM: Well, he would maybe bring out the mixer and ask me to fix something – when we were done with the New York thing he took the mixer home, and maybe used it in other places. I’m sure he came out to tell me what he wanted in the way of the I Ching programme. I went over to Paris part-time to help Boulez design the computer parts of IRCAM. Cage appeared there once or twice with his basket full of macrobiotic foods.

Charlotte Moorman gave these avant-garde music festivals each year, at some venue in New York. She was a cello player. Mostly, she knew everyone. She was charming, and we’d all spend a week of having fun at one of these festivals. Jim Tenney, a composer, worked at Bell Labs then. In the festival, which was at Hunter College, Jim dressed up in a monkey suit and climbed around the stage playing a Stockhausen piece.

GD: Did you know Stockhausen?

MM: We knew each other. He came and took a look at my computer music stuff. I don’t think he ever did any computer music. At IRCAM, he wanted to put formants [resonances characteristic of the human vocal tract in speech and singing] onto a trumpet sound, in the hopes of making a trumpet speak. So I made him some formants, and took the board there. He had a son-in-law who played the trumpet, and so he was going there to record a bunch of notes that we would then put through these formant filters. He wanted the trumpet player to sustain the note for a long time, so that we could play with the formants. And that’s very difficult, especially for the higher-pitched notes. So I thought, the poor son-in-law is going to expire. I explained that we could make tape loops so that a few seconds of note would be entirely sufficient. That didn’t dissuade Stockhausen from insisting. That poor guy.

Stockhausen’s saving grace was that he usually had a woman who took pity on people, and tried to do something to ameliorate their pain. In this recording session, the trumpet player started at ten in the morning and went on until about four in the afternoon. We were all starved, and she saw that this was really getting to us. So she set out into the street and bought a big bag of roasted chestnuts, and those saved the day for me.
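Mathews built his formant filters as hardware on a board; a rough digital analogue is a cascade of two-pole resonators, one per formant, each defined by a centre frequency and a bandwidth. His tape-loop suggestion also has a trivial digital counterpart: repeat a short recorded segment until it is as long as needed. This is a sketch under stated assumptions: the sample rate, the sawtooth stand-in for a trumpet note, and the three (centre, bandwidth) pairs are hypothetical, illustrative values, and the function names are invented for the example.

```python
import math

SR = 44_100  # assumed sample rate


def resonator(x, centre_hz, bandwidth_hz, sr=SR):
    """Two-pole resonator: a single formant peak at centre_hz with the given bandwidth."""
    r = math.exp(-math.pi * bandwidth_hz / sr)  # pole radius sets the bandwidth
    theta = 2 * math.pi * centre_hz / sr        # pole angle sets the centre frequency
    a1, a2 = 2 * r * math.cos(theta), -r * r
    gain = 1 - r  # rough input scaling; the peak gain is not exactly unity
    y1 = y2 = 0.0
    out = []
    for s in x:
        y = gain * s + a1 * y1 + a2 * y2
        out.append(y)
        y1, y2 = y, y1
    return out


def formant_filter(x, formants, sr=SR):
    """Cascade of resonators, one per (centre_hz, bandwidth_hz) pair."""
    for centre_hz, bandwidth_hz in formants:
        x = resonator(x, centre_hz, bandwidth_hz, sr)
    return x


def tape_loop(segment, total_len):
    """Digital stand-in for a tape loop: repeat a short note to total_len samples."""
    return [segment[i % len(segment)] for i in range(total_len)]


# Usage: loop a 0.1 s synthetic note instead of asking a player to hold it for
# seconds, then impose three formants (illustrative guesses, not measured data).
note = [((233.0 * i / SR) % 1.0) * 2 - 1 for i in range(SR // 10)]  # sawtooth
sustained = tape_loop(note, 3 * SR)                                   # 3 seconds
spoken = formant_filter(sustained, [(700, 110), (1220, 120), (2600, 160)])
```

As with a spliced tape loop, the join between repetitions is not phase-matched, so a faint click recurs every 0.1 s; a real loop would be cut or crossfaded more carefully.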

GD: You were an advisor to IRCAM from 1974 to 1980. Was that a positive experience?

MM: That was a high point in my life. I didn’t realize it at the time, but looking back it certainly was. I would go over to Paris for about a month at a time, because I was still running the acoustic research at Bell Labs, and I would do that several times a year. And Risset was there, he’s a very old friend, and I met some other people who I still like very much – Gerald Bennett, who’s now in Switzerland, and Luciano Berio was there. He didn’t stay long. It was a great place. And the architects were building it – that’s pretty exciting too. It’s an underground laboratory, as I think you know. I don’t know what it’s like now; I haven’t been inside in a decade. But it has done important stuff.

GD: Can you talk a bit about your contribution to 2001: A Space Odyssey (1968)?

MM: Stanley Kubrick sent one of his people to Bell Labs to find out what a telephone booth in space should be like. Baker, the vice-president of research, sent the guy to talk to John Pierce, my boss. Pierce gave his opinions on the telephone booth, and then said to go hear some of my computer music. He came, and I had this first piece that had a singing voice synthesized, ‘A Bicycle Built for Two’, and the guy was impressed with that, and apparently Kubrick was impressed with it. They apparently incorporated it into the plot as HAL’s swan song. He didn’t actually use the tape that I sent him; he had an orchestra, or synthesizers, redo it. The singing part, which is the interesting thing, was done by two other people, not me – John Kelly, who was a student of Claude Shannon’s, and Carol Lochbaum, who was a very good programmer in my department.

It turned out that making singing was easier than making speech, because if you make speech you have to figure out what the proper pitch is to go with the inflections of the speech. If you’re making singing, you get the pitch from the melody, and that works. This was really the first example of physical modeling. The physical model they had in the computer was a vocal tract – a so-called tube model – with seven sections. The computer could control the diameter of each section. It’s not a physical tube; it’s a differential equation in the computer, or a difference equation. So they would wiggle these things to produce the right vowels, and consonants too. The only thing they lacked was that they didn’t know what shape to make the sections to get the vowels. But there was a fellow in Stockholm who did know that, because he had done X-ray studies of Russian subjects uttering sustained vowels; he took a cross-sectional X-ray and then he could deduce the vocal tract areas from that. So they took his data. The ‘Bicycle Built for Two’ was done with Russian vowels. This was a tube physical model of the vocal tract.
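The tube model Mathews describes can be sketched as a chain of short cylindrical sections. At each join between sections, part of a travelling wave is transmitted and part reflected, in proportion to the change in cross-sectional area; this scattering-junction formulation is usually credited to Kelly and Lochbaum. The sketch assumes one common sign convention (volume velocity, with r = (A[k+1] − A[k]) / (A[k+1] + A[k])), one sample of delay per section in each direction, no losses inside the tube, and made-up reflection values at the glottis and lips. The seven areas are hypothetical placeholders, not the X-ray data mentioned above, and the function names are invented for the example.

```python
def reflection_coefficients(areas):
    """Junction coefficients r_k = (A[k+1] - A[k]) / (A[k+1] + A[k])."""
    return [(a_next - a) / (a_next + a) for a, a_next in zip(areas, areas[1:])]


def scatter(r, fwd_in, bwd_in):
    """Scattering junction: fwd_in arrives from the left, bwd_in from the right."""
    fwd_out = (1 + r) * fwd_in + r * bwd_in   # leaves into the right-hand section
    bwd_out = -r * fwd_in + (1 - r) * bwd_in  # leaves into the left-hand section
    return fwd_out, bwd_out


def tube_model(areas, source, r_glottis=0.9, r_lips=0.9):
    """Drive a tube of len(areas) sections with a source signal; return lip output.

    Each section delays each travelling wave by one sample. The end reflection
    values are assumptions, not measurements.
    """
    n = len(areas)
    ks = reflection_coefficients(areas)
    fwd = [0.0] * n  # forward wave arriving at the lip-side end of each section
    bwd = [0.0] * n  # backward wave arriving at the glottis-side end of each section
    out = []
    for x in source:
        new_fwd = [0.0] * n
        new_bwd = [0.0] * n
        # Glottis: inject the source and reflect part of the returning wave.
        new_fwd[0] = x + r_glottis * bwd[0]
        # Interior junctions between section k and section k + 1.
        for k, r in enumerate(ks):
            new_fwd[k + 1], new_bwd[k] = scatter(r, fwd[k], bwd[k + 1])
        # Lips: treat the open air as a junction with nothing coming back in.
        radiated, new_bwd[-1] = scatter(r_lips, fwd[-1], 0.0)
        out.append(radiated)
        fwd, bwd = new_fwd, new_bwd
    return out


# Hypothetical areas for seven sections, glottis to lips; illustrative only.
areas = [2.6, 1.8, 1.0, 0.8, 2.5, 5.0, 4.0]
SR = 10_000
pitch_hz = 110  # in singing, the pitch simply comes from the melody
source = [1.0 if i % (SR // pitch_hz) == 0 else 0.0 for i in range(SR // 2)]
voice = tube_model(areas, source)
```

Changing the seven areas from note to note is the "wiggling" Mathews describes: the areas alone determine the reflection coefficients, and those shape which vowel the output resembles, while the pulse rate of the source sets the sung pitch independently.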

GD: So Arthur C. Clarke could just swing by Bell Labs, and Kubrick could get advice? Celebrities visit companies like Google, but that seems to be a very different thing. With Bell Labs, it seemed that these visitors wanted to be personally involved in a project, or they wanted Bell Labs to help them build something, whereas at Google, famous people give talks and get tours.

MM: Well, basically. Somebody had to invite visitors in, but as long as you were invited by someone on the staff, or wanted to talk to someone, you could do that. They generally didn’t give talks.

Usually the kooks got sent to me; I don’t know why. The prince of Saudi Arabia came by. He wanted to move icebergs from Antarctica to the Arabian Peninsula as a source of water. I don’t think he ever did it. I don’t think the king, at the end, was willing to support it. But anyway, we talked to him.

GD: But what would the acoustics lab have to do with icebergs?

MM: Nothing! [laughs] Absolutely nothing! We knew nothing about it. Neither did anyone else.

GD: What did you tell the prince of Saudi Arabia?

MM: I told him, basically, that I didn’t know, and that no one I knew knew anything about this, and to seek advice somewhere else. And then there was this fellow who thought he had psychic powers, I forgot his name. He specialized in bending spoons …

GD: …Uri Geller?

MM: Uri Geller. So I had to entertain him. Indeed, he bent a spoon at lunchtime. But he also asked me about my kids and family when the spoon was being bent. I don’t know whether he used his telepathic forces [laughs] or managed to bend it with his fingers while I was distracted.

GD: How many people were in your group at Bell Labs?

MM: About 70. I had the behavioural research there, and the speech research, and the neurophysiology research. The physiologists did experiments – mostly on fish, using the lateral line of the fish, which is the equivalent of our ears. I forget whether they did some cat experiments or not. I think probably not. We were going to do some work with the Aplysia [a genus of sea slugs]. Oh, and they studied frogs. There are two species of frog that could interbreed and produce viable offspring, but they don’t; the reason they don’t is that they sing two separate songs. And so if you’re a male frog you have to sing the right song to get the attention of the female frog, and that’s how the species are kept separate. And so this was the finding of one of our physiologists.

GD: They were doing wet research with frogs, all in the name of telephony research?

MM: Well, we did think that it’s important to know how the ear actually hears, and how the brain interprets it. We had vision people working too.

GD: So what happened to Bell Labs?

MM: The Bell system was a regulated monopoly that had 90 percent of the telephone business in the US. In the analogue days, the Bell system was a natural monopoly. Analogue signals are very fragile. So if you’re going to have a long-distance oceanic line that has a hundred amplifiers in it, every amplifier has to have very low distortion and controlled gain, so you have to use a feedback amplifier. Our mathematicians developed feedback amplifiers, and you really need the telephone at the far end to be something you’ve built so it doesn’t overload. So we had a technical justification for insisting that the entire Bell system be made of Bell Labs components so that they would all work together. It really wouldn’t have worked if a whole bunch of different companies had been doing a whole bunch of different things.

That was true until the digital revolution came along, where you convert the signals, the speech into numbers, and put them through channels with error-correcting codes. These are very robust signals, and you can hand them from one company to another, and send them out to Mars and get them back. They’re perfectly clean signals with essentially no numerical errors. When you put them through the digital to analogue converter at the end, you’ll hear very clear sound. When I call my friend in Berlin on Skype, it works. So once the technical monopoly was no longer essential, by the nature of businesses and competition, lots of people wanted to break up the Bell system. The anti-trust department went along with this, so the Bell system got broken up.

GD: It seems like Bell Labs was almost like a university then, with the focus on basic research.

MM: It was even better than a university for research, because the various people at Bell Labs didn’t have to compete for money. Whereas professors do have to find money, and it’s a pretty competitive life.

GD: Tell me about your work with Edgard Varèse. Which electronics did you build for Varèse, and for which compositions?

MM: Déserts [1950–4]. Well, there were three orchestra sections, and in between there were short electronic sections. We didn’t work on Poème électronique [1957–8] – that was tied to the installation building in Belgium or Holland, where they had a lot of loudspeakers, so it was appropriate to that. But Varèse didn’t do very much electronic music, actually. But that was fine, because he did so much with other kinds of music.

Louise, his wife, would feed us a good dinner, and then we would go up to Vladimir Ussachevsky’s tape studio at Columbia, and work on Varèse’s tapes. So that’s how we got to know him. Then he would come out to Bell Labs and visit us, and John Pierce got to know him too. He was a very sociable person.

GD: Did you know Vladimir Ussachevsky well?

MM: Oh, very well. Ussachevsky and Milton Babbitt were two of the people who, when I played the initial sounds to them – which were terrible – saw the potential immediately and encouraged me.

GD: Babbitt died recently. You knew him well, too?

MM: Not as well as Ussachevsky, but quite well. Together they had this Columbia-Princeton tape studio on 125th Street in New York. They had the RCA synthesizer, which was certainly the biggest synthesizer in the world at that time, and Babbitt did some pieces on it. I saw his desk. The synthesizer ran on punched paper tape rolls that were about a foot wide, and you punched them by hand. I don’t think you could copy them either. The top of Babbitt’s desk was just piled with these paper tape rolls. But he didn’t do anything technically after that with the computer. I think he figured he’d paid his dues with technology by fighting the RCA synthesizer. After that I think he only wrote pieces for traditional instruments. Ussachevsky, though, came out and spent a sabbatical at Bell Labs, and did do some pieces on the computer.

GD: Was there a lot of crossover between Bell Labs and the Columbia-Princeton centre?

MM: No, it was just me and Newman Guttman, and maybe some of the musicians that I got to come into Bell Labs at night that would interact there. We had lots of computers around; they were used during the day, but not during the night so much. I had music programmes that ran on them, and I would arrange for musicians to be able to come in and work on them. Emmanuel Ghent was one of the ones that did the most interesting music, and Laurie Spiegel, a New York musician, also did some interesting stuff.

GD: When you were making MUSIC, did you realize that it was going to be so influential?

MM: No, I couldn’t possibly have. I had no idea how fast computers could get, how powerful. I don’t think anyone did. The computers, the transistors, the chips, the increase in speed and the reduction in size of the components of chips, and the increase in the number of gates that you could put on a chip, and an increase in all sorts of other things like the kinds of memory and the amount of memory. The early discs were enormous things, that held a minute portion of the memory in this computer. In this computer now, the memory is solid state; there are no moving parts to it. The computer music effort was swept along by computer technology; there’s no question about that.

Although people either thank me or blame me as the father of computer music – they mean computer music synthesis – if I hadn’t done it, other people would have done it. Because they just couldn’t resist doing it! There’s no question about it. And also because the technology was there.

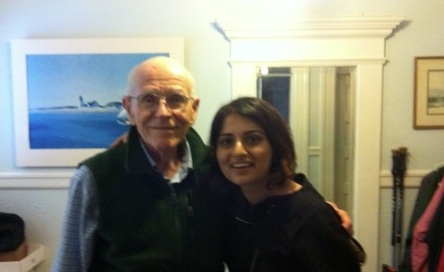

Max Mathews, with Geeta Dayal at his house in San Francisco, March 2011

Geeta Dayal

Geeta Dayal is the author of Another Green World, a book on Brian Eno (Continuum, 2009) and is currently at work on a new book on the history of electronic music.