Monday, March 01. 2010

Gartner's study on SmartPhones worldwide sales per OS

Worldwide mobile phone sales to end users totalled 1.211 billion units in 2009, a 0.9 per cent decline from 2008, according to Gartner, Inc. In the fourth quarter of 2009, the market registered single-digit growth as mobile phone sales to end users surpassed 340 million units, an 8.3 per cent increase from the fourth quarter of 2008.

"The mobile devices market finished on a very positive note, driven by growth in smartphones and low-end devices," said Carolina Milanesi, research director at Gartner. "Smartphone sales to end users continued their strong growth in the fourth quarter of 2009, totalling 53.8 million units, up 41.1 per cent from the same period in 2008. In 2009, smartphone sales reached 172.4 million units, a 23.8 per cent increase from 2008. In 2009, smartphone-focused vendors like Apple and Research In Motion (RIM) successfully captured market share from other larger device producers, controlling 14.4 and 19.9 per cent of the worldwide smartphone market, respectively."

Throughout 2009, intense price competition put pressure on average selling prices (ASPs). The major handset producers had to respond more aggressively in markets such as China and India to compete with white-box producers, while in mature markets they competed hard with each other for market share. Gartner expects the better economic environment and the changing mix of sales to stabilise ASPs in 2010.

Three of the top five mobile phone vendors experienced a decline in sales in 2009 (see Table 1). The top five vendors continued to lose market share to Apple and other vendors, with their combined share dropping from 79.7 in 2008 to 75.3 per cent in 2009.

Table 1

Worldwide Mobile Terminal Sales to End Users in 2009 (Thousands of Units)

| Company | 2009 Sales | 2009 Market Share (%) | 2008 Sales | 2008 Market Share (%) |
|---|---|---|---|---|
| Nokia | 440,881.6 | 36.4 | 472,314.9 | 38.6 |
| Samsung | 235,772.0 | 19.5 | 199,324.3 | 16.3 |
| LG | 122,055.3 | 10.1 | 102,789.1 | 8.4 |
| Motorola | 58,475.2 | 4.8 | 106,522.4 | 8.7 |
| Sony Ericsson | 54,873.4 | 4.5 | 93,106.1 | 7.6 |
| Others | 299,179.2 | 24.7 | 248,196.1 | 20.3 |
| Total | 1,211,236.6 | 100.0 | 1,222,252.9 | 100.0 |

Note* This table includes iDEN shipments, but excludes ODM to OEM shipments.

Source: Gartner (February 2010)
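The share columns in Table 1 follow directly from the unit sales, and the difference between "per cent" and "percentage points" matters when reading the commentary. The sketch below is only an illustration: the sales figures are copied from Table 1, while the names `SALES` and `market_shares` are made up for this example.

```python
# Unit sales in thousands, copied from Table 1 (Gartner, February 2010).
# Each entry is (2009 sales, 2008 sales).
SALES = {
    "Nokia": (440_881.6, 472_314.9),
    "Samsung": (235_772.0, 199_324.3),
    "LG": (122_055.3, 102_789.1),
    "Motorola": (58_475.2, 106_522.4),
    "Sony Ericsson": (54_873.4, 93_106.1),
    "Others": (299_179.2, 248_196.1),
}


def market_shares(sales):
    """Return {vendor: (share_2009, share_2008, change_in_points)}.

    Shares are percentages of the yearly total; the change is expressed
    in percentage points (share_2009 - share_2008), not as a relative
    per cent change.
    """
    total_2009 = sum(s09 for s09, _ in sales.values())
    total_2008 = sum(s08 for _, s08 in sales.values())
    result = {}
    for vendor, (s09, s08) in sales.items():
        share_2009 = 100 * s09 / total_2009
        share_2008 = 100 * s08 / total_2008
        result[vendor] = (share_2009, share_2008, share_2009 - share_2008)
    return result


shares = market_shares(SALES)
for vendor, (s09, s08, delta) in shares.items():
    print(f"{vendor:14s} {s09:5.1f}% {s08:5.1f}% {delta:+5.1f} points")
```

Under these figures Nokia's share falls by about 2.2 percentage points, which is nearly 6 per cent of its 2008 share in relative terms, while Samsung gains about 3.2 points.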

In 2009, Nokia's annual mobile phone sales to end users reached 441 million units, and its market share dropped 2.2 percentage points from 2008. Although Nokia outperformed industry expectations in sales and revenue in the fourth quarter of 2009, its declining smartphone ASP showed that it continues to face challenges from other smartphone vendors. "Nokia will face a tough first half of 2010 as improvement to Symbian and new products based on the MeeGo platform will not reach the market well before the second half of 2010," said Ms Milanesi. "Its very strong mid-tier portfolio will help it hold market share, but its ongoing weakness at the high end of the portfolio will hurt its share of market value."

Samsung was the clear winner among the top five with market share growing by 3.2 percentage points from 2008. This achievement came as a result of improved channel relationships with distributors to extend its reach and better address the needs of individual markets as well as a rich mid-tier portfolio. For 2010, the company is putting a focus on Bada, its new operating system (OS) that aims at adding the value of an ecosystem to its successful hardware lineup.

Motorola's 2009 sales were slightly more than half of its 2008 sales, and it exhibited the sharpest drop in market share, accounting for 4.8 per cent of the market in 2009. Its refocus away from the low-end market limited the volume opportunity, but should help it drive margins going forward. Motorola's hardest challenge is to grow brand awareness outside the North American market, where it benefits from a long-lasting relationship with key communications service providers (CSPs).

In the smartphone OS market, Symbian continued its lead, but its share dropped 5.4 percentage points in 2009 (see Table 2). Pressure from competitors such as RIM and Apple, and the continued weakness of Nokia's high-end device sales, have negatively impacted Symbian's share.

At Mobile World Congress 2010, Symbian Foundation announced its first release since Symbian became fully open source. Symbian^3 should be made available by the end of the first quarter of 2010 and may reach the first devices by the third quarter of 2010, while Symbian^4 should be released by the end of 2010.

"Symbian had become uncompetitive in recent years, but its market share, particularly on Nokia devices, is still strong. If Symbian can use this momentum, it could return to positive growth," said Roberta Cozza, principal research analyst at Gartner.

Table 2

Worldwide Smartphone Sales to End Users by Operating System in 2009 (Thousands of Units)

| Company | 2009 Units | 2009 Market Share (%) | 2008 Units | 2008 Market Share (%) |
|---|---|---|---|---|
| Symbian | 80,878.6 | 46.9 | 72,933.5 | 52.4 |
| Research In Motion | 34,346.6 | 19.9 | 23,149.0 | 16.6 |
| iPhone OS | 24,889.8 | 14.4 | 11,417.5 | 8.2 |
| Microsoft Windows Mobile | 15,027.6 | 8.7 | 16,498.1 | 11.8 |
| Linux | 8,126.5 | 4.7 | 10,622.4 | 7.6 |
| Android | 6,798.4 | 3.9 | 640.5 | 0.5 |
| WebOS | 1,193.2 | 0.7 | NA | NA |
| Other OSs | 1,112.4 | 0.6 | 4,026.9 | 2.9 |
| Total | 172,373.1 | 100.0 | 139,287.9 | 100.0 |

Source: Gartner (February 2010)
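Table 2 also shows why a platform can lose share while still selling more units: what matters is growth relative to the market as a whole. The following sketch recomputes year-over-year unit growth from the table; the unit figures come from Table 2, and the names `UNITS` and `yoy_growth` are hypothetical helpers introduced only for this illustration.

```python
# Units in thousands, copied from Table 2 (Gartner, February 2010).
# Each entry is (2009 units, 2008 units); None marks "NA" in the table.
UNITS = {
    "Symbian": (80_878.6, 72_933.5),
    "Research In Motion": (34_346.6, 23_149.0),
    "iPhone OS": (24_889.8, 11_417.5),
    "Microsoft Windows Mobile": (15_027.6, 16_498.1),
    "Linux": (8_126.5, 10_622.4),
    "Android": (6_798.4, 640.5),
    "WebOS": (1_193.2, None),
    "Other OSs": (1_112.4, 4_026.9),
    "Total": (172_373.1, 139_287.9),
}


def yoy_growth(units_2009, units_2008):
    """Year-over-year unit growth in per cent, or None if 2008 is unknown."""
    if units_2008 is None:
        return None
    return 100 * (units_2009 - units_2008) / units_2008


growth = {name: yoy_growth(u09, u08) for name, (u09, u08) in UNITS.items()}
for name, g in growth.items():
    label = "n/a" if g is None else f"{g:+.1f}%"
    print(f"{name:26s} {label}")
```

With these numbers the overall smartphone market grows by about 23.8 per cent, matching the figure quoted earlier. Symbian's unit sales also grow, but more slowly than the market, which is why its share falls.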

The two best performers in 2009 were Android and Apple. Android increased its market share by 3.5 percentage points in 2009, while Apple's share grew by 6.2 percentage points from 2008, which helped it move to the No. 3 position and displace Microsoft Windows Mobile.

“Android's success experienced in the fourth quarter of 2009 should continue into 2010 as more manufacturers launch Android products, but some CSPs and manufacturers have expressed growing concern about Google's intentions in the mobile market,” Ms Cozza said. “If such concerns cause manufacturers to change their product strategies or CSPs to change which devices they stock, this might hinder Android's growth in 2010.”

"Looking back at the announcements during Mobile World Congress 2010, we can expect 2010 to retain a strong focus around operating systems, services and applications while hardware takes a back seat," said Ms Milanesi. "Sales will return to low-double-digit growth, but competition will continue to put a strain on vendors' margins."

Via Gartner

Remarkable Stats on the State of the Internet

by Jennifer Van Grove

Individual stats like Facebook passing the 400 million user mark, Twitter hitting 50 million tweets per day, and YouTube viewers watching 1 billion videos per day are impressive on their own, but what if we looked at Internet-related stats collectively? Jesse Thomas did just that in his video State of the Internet.

The video — created and animated by Thomas with data from multiple sources — highlights some remarkable figures and visually depicts the Internet as we know it today. It’s a must-watch video for anyone trying to wrap their minds around just how immersed web technologies have become in our everyday lives.

You can watch the video below, but we’ve also included some of the most intriguing figures shared in the video:

- There are 1.73 billion Internet users worldwide as of September 2009.

- There are 1.4 billion e-mail users worldwide, and on average we collectively send 247 billion e-mails per day. Unfortunately 200 billion of those are spam e-mails.

- As of December 2009, there are 234 million websites.

- Facebook gets 260 billion pageviews per month, which equals 6 million page views per minute and 37.4 trillion pageviews in a year.
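The Facebook figures can be sanity-checked with simple arithmetic. The sketch below assumes a 30-day month and a year of 12 such months; those assumptions, and all variable names, are mine rather than the video's.

```python
# Monthly pageview figure quoted in the list above.
PAGEVIEWS_PER_MONTH = 260_000_000_000

# Assumption: a 30-day month (the source does not say which length it used).
MINUTES_PER_MONTH = 30 * 24 * 60

pageviews_per_minute = PAGEVIEWS_PER_MONTH / MINUTES_PER_MONTH
pageviews_per_year = PAGEVIEWS_PER_MONTH * 12

print(f"per minute: {pageviews_per_minute / 1e6:.1f} million")
print(f"per year:   {pageviews_per_year / 1e12:.2f} trillion")
```

Under these assumptions the per-minute rate comes out at about 6 million, consistent with the list. The yearly total, however, comes out at about 3.1 trillion rather than the 37.4 trillion quoted, so the yearly figure may rest on a different base or contain a slip in the source.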

-----

Via Mashable

Sonic Acts 2010: On the Poetics of Hybrid Space

I just visited an interesting panel at the Sonic Acts 2010 conference called The Poetics of Hybrid Space.

When we talk about Hybrid Space over here at The Mobile City, we usually refer to the work of Adriana de Souza e Silva, who in several articles has convincingly argued against the dichotomy between physical or real space on the one hand and virtual or mediated spaces on the other. The very fact that these two can no longer be separated is one of the central themes of The Mobile City: media spaces and virtual networks extend, broaden, filter or restrict the experience of physical spaces, and the other way around.

Interestingly, over at Sonic Acts they have adopted a broader concept of hybridization. Moderator Eric Kluitenberg explained that hybrid space is not a technical concept. Rather, hybridization is about heterogeneous logics that are simultaneously at work in the same space. For instance, there is the top-down logic of the built environment developed by the architect. But the same space may also be subjected to the logic of an informal street economy that may or may not be compatible with the ideas operationalized by the architect. The mediated experiences of the mediascape make up only one of the logics that operate in a space. Sometimes these different logics clash, sometimes they overlap, sometimes they just negate each other. However, we should understand all these different logics as real. They are all operative at the same time and together make up how a place is lived and experienced.

Having said that, the addition of new media technologies such as mobile phones has increased the density of different logics operational in (urban) space, and new cultural practices and adaptations of space are emerging as a result. This makes the urban experience more complex and messier than ever. It's even doubtful whether we can truly get a grasp on these processes. What we can do is try to increase our sensitivity to the complexity of the different logics at work. It was this issue that most of the presentations in this session addressed.

Duncan Speakman’s Subtlemob

The work of sound artist Duncan Speakman, who discussed his subtlemob-project, addressed several aspects of the hybridization of space through the advent of digital media technologies.

A Subtlemob is a collective audio experience set in urban space. Participants download an MP3 file, head to a location in the city, and at a particular time they all press play, thereby collectively experiencing the same soundtrack. The soundtrack consists not only of music but also of spoken instructions that the participants have to carry out (and sometimes there are different instructions for different groups of participants). It is like a flash mob, yet more subtle. That is, flash mobs are often staged experiences that gain most of their audience and impact not at the moment itself, but because the event is taped on video and broadcast on YouTube. A subtlemob is only to be experienced live. There are no recordings; it is all about the experience you have when you are there. You just have to be there to get it.

One of the starting points of this project is the work of audio culture researcher Michael Bull (I happened to do a podcast interview with him a few years ago, just in case you'd care). Bull studied how first Walkman and later iPod users experience the city and came up with a few conclusions.

First of all, a lot of people use music to augment their experience of the city: they purposely add a soundtrack to extend or alter their mood. This is not something most composers take into account, Speakman realized. Usually music is not composed with a particular spatiality in mind. One composes for an abstract listening experience, not for the person who listens to an iPod in the back of the bus. But how can you compose for those specific experiences? Speakman decided to turn this around, so when composing he often goes to the location his music is intended for to check whether the match is right.

The second theme that has come up in the work of Michael Bull is the idea of the bubble experience. Digital media have the affordance to make personal spaces warmer, but at the same time they make public spaces cooler. With an iPod one constructs one's own intense experience in urban space, but it also privatizes this experience. Many critics have argued that mobile phones play a similar role. They create a 'full time intimate community' in which a network of friends keeps continuously in touch throughout the day, even if the friends are not physically present. Again, this can be understood as a privatization (or parochialization) of public space.

Speakman: Digital media make personal space warmer, public space cooler

The idea of the subtlemob is to 'hack' these devices to turn their logic around. Can MP3 players also be used to construct collective experiences that heighten the experience of being in public, and that encourage people to observe one another rather than retreat into their mediated bubbles of private space?

Teletrust

The other three presentations, including work of Peter Westenberg and Elizabeth Sikiaridi, addressed related issues.

Karen Lancel and Hermen Maat showed their Teletrust installation, which consists of a full-body veil that on the one hand extends the idea of a personal bubble space. Yet at the same time it enables the wearer, by touching oneself and activating the sensors in the veil, to get in touch with stories told by other people. Is it possible to use networked media to create intimate spaces within public space?

In TELE_TRUST [we] explore how in our changing social eco-system we increasingly demand transparency; while at the same time we increasingly cover our vulnerable bodies with personal communication-technology. For TELE_TRUST Lancel and Maat designed a hybrid play zone for a vulnerable process, of balancing between fear AND desire for the other. In a visual, poetic way they explore the emotional and social tension between visibility and invisibility; privacy and trust.

-----

Via The Mobile City

Particulate Swarms

![[Radar image of Sydney during the dust storm of September 2009.]](http://blog.fabric.ch/fabric/images/1350_1267480801_0.jpg)

Editor's Note: File under Glacier / Island / Storm, a studio run by BLDGBLOG at Columbia University GSAPP. Storm edition.

———–

“It is time / It is time for / It is time for stormy weather” – The Pixies

Storms deal in simple materials: air, water (in various states), and other particulates, such as dirt or dust. Yet, not unlike species swarming in nature (or microcosmic viruses for that matter), they assemble, grow, pulse, and respond to environmental conditions, all the while luring other similar material into their agitated state. Storms move somewhat indifferently to us and often in spite of us. They are often predictable and just forecastable enough to tease those of us who want to know when, where, and how much. All of this is done through pattern play and behavioral modeling at two scales: the massive regional and continental airspaces, and the molecular or particle-based scale. Storms work in cycles, some small and seasonal, some century-long, and some on significantly larger timespans (quasi-periodic). We are looking here at three storms, all recurring, swirling, pulsing, and shifting, of various particulate matter: dust, water, nitrogen (air). This is through the filter of states of matter: solid, liquid, and gaseous.

![[Map showing plume expansion rate, direction and growth of the Australian dust storm of 2009.]](http://blog.fabric.ch/fabric/images/1350_1267480802_1.png)

1. Solid Storm: Dust // The Australian Dust Storm of 2009 is certainly one of the most fantastically documented storms of our young century, and you have no doubt seen the surreal images of highly saturated red and orange airspace. For this event, air particulate readings were about 15,400 micrograms per cubic meter. A typical day registers at about 50 micrograms, and a bushfire registers around 500 micrograms per cubic meter. It was thick. What was interesting, though, when this 2-day event rapidly escalated was that its long-term effects were somehow overlooked in favor of the evocative photography of a Mars-like outback. Within two weeks after the flash storm, scientists realized that the event caused a massive shift of phosphates and nitrogen as 4000 tons of desert topsoil particulates were dumped in the Sydney Harbour. Beyond that, the estimates for materials dumped in the Tasman Sea were an astounding 3,000,000 tons. And, as if in a massive simulation of ocean fertilization, it was believed that this spurred phytoplankton growth to triple. So, what was in limited supply in the desert ocean, yet was needed to grow life, is ironically abundant in desert land. Further estimates put the additional phytoplankton in the Sea at 2 million tons, and, more impressively, with that about 8 million tons of CO2 captured. Eight million tons; that's a full month's worth of CO2 emissions from a coal-fired power plant. Estimates for the amount of fish spawned from the increased phytoplankton are not known, but one can only imagine. Storms spawn swarms. Ocean fertilization inadvertently simulated at a massive scale by nature itself. Should it still be called geo-engineering if, in fact, it already occurs naturally on a massive scale?

A note should also be included on the Dust Bowl of the 1930s, aka the Dirty Thirties. The Dust Bowl phenomenon lasted through a drought in the Great Plains from 1930 to 1936. After the dust had settled, it was shown that farming practices in the region had been irresponsible with regard to crop rotation, deep plowing, and erosion prevention. On numerous occasions during the dust clouds, the sky would turn black by day as far east as Washington DC. Dirt fell like snow in Chicago. In the winter of 1934, red snow fell in the Northeast. And April 14, 1935, became known as Black Sunday.

Some believe the Dust Bowl was predictable. Here is a PBS video on the Dust Bowl years.

Another interesting diversion on dust storms are the alkali storms found at Owens Lake and other salt flats. These are well documented by Barry Lehrman in The Infrastructural City. (Pruned has an excellent writeup on this here.)

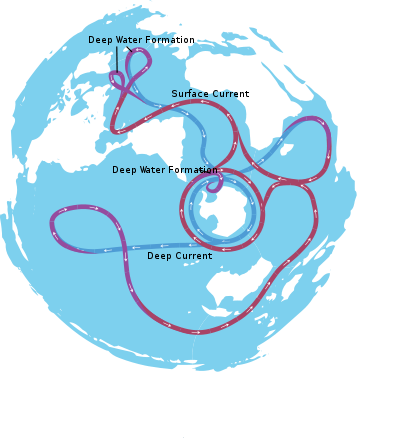

2. Liquid Storm: Water // One of the major circulatory systems responsible for moving large masses of water (and their associated species) and stabilizing the global climate is the Thermohaline Circulation (THC). The Thermohaline is an underwater storm, a massive global current. Known as the Great Ocean Conveyor, the Thermohaline Circulation is a series of underwater oceanic currents that are driven by the density of water, which is a function of the water's temperature and salinity. Warm salty water is rapidly cooled as it reaches northern latitudes and, as it forms into ice, sheds much of its salt. This increases the salinity of the remaining unfrozen cold water, making it denser and causing it to drop to the ocean floor (where it is known as the 'North Atlantic Deep Water'). This denser water moves towards the equator, where it gains heat and migrates upwards. Global warming is promoting increased melting of the polar ice caps, leading to a more uniform density of water and slowing the thermohaline cycle. This has large potential effects on the climates of northern Europe and North America, as well as destabilizing sea ice formation in the Arctic (and its associated ecosystems).
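To make the dependence of density on temperature and salinity concrete, here is a minimal sketch using a linearized equation of state, a common textbook simplification rather than anything taken from the post itself. The reference values and coefficients below are rough, illustrative assumptions; real seawater density is nonlinear and also depends on pressure.

```python
# Linearized equation of state for seawater (illustrative approximation):
#   rho = RHO0 * (1 - ALPHA * (T - T0) + BETA * (S - S0))
# All constants are rough assumed values, not measurements from the article.
RHO0 = 1027.0   # reference density, kg/m^3
T0 = 10.0       # reference temperature, degrees C
S0 = 35.0       # reference salinity, g/kg
ALPHA = 1.7e-4  # thermal expansion coefficient, 1/K
BETA = 7.6e-4   # haline contraction coefficient, 1/(g/kg)


def seawater_density(temp_c, salinity):
    """Approximate density (kg/m^3) from temperature and salinity."""
    return RHO0 * (1 - ALPHA * (temp_c - T0) + BETA * (salinity - S0))


warm_surface = seawater_density(18.0, 35.0)   # warm, salty surface water
cold_salty = seawater_density(2.0, 35.5)      # cooled, salt-enriched water
cold_freshened = seawater_density(2.0, 34.0)  # cooled, diluted by meltwater

print(f"warm surface:   {warm_surface:.2f} kg/m^3")
print(f"cold and salty: {cold_salty:.2f} kg/m^3")
print(f"cold, fresher:  {cold_freshened:.2f} kg/m^3")
```

In this simplified picture, cooling and added salt both raise density, which is what lets the water sink, while meltwater lowers salinity and reduces the density contrast that drives the sinking.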

![[Trend Velocities in North Atlantic in meters per second per decade from May 1992 to June 2002. vectors trace the following graphic of the subpolar circulation in reverse direction, which denotes a slowing gyre. Credit: Sirpa Hakkinen, NASA GSFC.]](http://blog.fabric.ch/fabric/images/1350_1267480809_4.jpg)

The seasonal movement of the ice shelf constitutes one of the largest annual transformations in the Arctic and is the basis for the arctic ecosystem. As the summer months thaw the ice shelf, causing it to migrate northwards, fresh water is released into the sea. This freshwater promotes a blanket of fertile phytoplankton that forms the foundation of the arctic ecological food chain. Ecosystems that migrate with the annual retreat of ice traverse the Arctic seasonally. In the last 30 years, however, the summer sea ice extent has reduced by approximately 15 – 20%, while its average thickness has decreased by 10 – 15%. Both of these rates continue to increase, decreasing the foundation of the food chain and consequently applying pressure on species higher in the food chain.

Recent data points to something not-so-innocently called the Great Atlantic Shutdown. As increasing amounts of freshwater enter the THC, the water becomes more buoyant and less likely to sink, slowing or even stalling circulation.

![[The jet stream. The northern hemisphere polar jet stream is most commonly found between latitudes 30°N and 60°N, while the northern subtropical jet stream is located close to latitude 30°N.]](http://blog.fabric.ch/fabric/images/1350_1267480811_5.jpg)

3. Gaseous Storm: Jet Stream // Winds have names: Katabatic, Foehn, Mistral, Bora, Cers, Marin, Levant, Gregale, Khamaseen, Harmattan, Levantades, Sirocco, Leveche, and many others (all exhaustively documented here). But all pale in comparison to the steady circulations of the tropospheric jet stream. The jet stream is a shifting river of air about 9-14 km above sea level that guides storm systems and cool air around the globe. And when it moves away from a region, high pressure and clear skies predominate. The jet stream marks a thick shifting swirling line that separates airspace that warms with height and airspace that cools with height. In short, it is the jet stream(s) that creates weather – all kinds of weather, from the ordinary, uninteresting dull gray sky to the devastating life-changing weather phenomenon.

The path of the jet typically has a meandering shape, and these meanders themselves propagate east, at lower speeds than that of the actual wind within the flow. Each large meander, or wave, within the jet stream is known as a Rossby wave. Rossby waves are caused by changes in the Coriolis effect with latitude, and propagate westward with respect to the flow in which they are embedded, which slows down the eastward migration of upper level troughs and ridges across the globe when compared to their embedded shortwave troughs.
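The relation between wind speed and the slower eastward drift of the meanders can be illustrated with the standard barotropic Rossby wave formula, c = U - β/k², where β is the northward gradient of the Coriolis parameter and k the zonal wavenumber. This is a textbook idealization, not something stated in the post, and the wind speed, latitude, and wavelength below are assumed values chosen only for illustration.

```python
import math

OMEGA = 7.292e-5        # Earth's rotation rate, rad/s
EARTH_RADIUS = 6.371e6  # mean Earth radius, m


def beta_parameter(latitude_deg):
    """Northward gradient of the Coriolis parameter, in 1/(m*s)."""
    return 2 * OMEGA * math.cos(math.radians(latitude_deg)) / EARTH_RADIUS


def rossby_phase_speed(mean_wind, wavelength, latitude_deg):
    """Eastward phase speed (m/s) of a barotropic Rossby wave.

    Idealized formula c = U - beta / k^2, ignoring any north-south
    structure of the wave.
    """
    k = 2 * math.pi / wavelength
    return mean_wind - beta_parameter(latitude_deg) / k**2


# Assumed illustration: 25 m/s westerly flow, 6000 km meander, 45 degrees N.
U = 25.0
c = rossby_phase_speed(U, 6.0e6, 45.0)
print(f"wind speed: {U:.1f} m/s, meander speed: {c:.1f} m/s")
```

For these assumed values the meander still moves east, but noticeably slower than the wind blowing through it, because the wave propagates westward relative to the flow. Longer wavelengths make the westward term larger, so very long waves can stall or even retreat westward.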

![[The jet stream core region averages 160 km/h (100 mph) in winter and 80 km/h (50 mph) in summer. Those segments within the jet stream where winds attain their highest speeds are known as jet streaks.]](http://blog.fabric.ch/fabric/images/1350_1267480812_6.gif)

When the jet stream breaks off an eddy, such a minor event at the scale of the stream generates a cyclone as it hits the ground. Thought to be weakening and moving poleward, the jet stream would produce less rain in the south and more storms in the north. In the meantime, though, there is considerable ongoing research on how to harness this steady streaming power.

![[A wind machine, floated into the jet stream, would transmit electricity on aluminum or copper cables--or through invisible microwave beams--down to power grids, where it would be distributed locally.]](http://blog.fabric.ch/fabric/images/1350_1267480814_7.jpg)

One study (above) shows a range of kites responding to the stream in a variety of ways and at different altitudes. The possibility of a series of kites (ladder, rotor, rotating, or turntable) hovering 1000 feet in the air and generating anywhere from 50 to 250 kilowatts is hard to refute. After all, they are just kites. Or maybe, to test this possibility, we just need to tap into all the already ongoing leisurely kite-flying practices, so that regular kites are no longer available, but instead streaming kites only. Streaming kites flying much higher, and of course bigger, and equipped with gear that helps store and harness energy. At the end of a pleasurable day flying a kite you have next week's electricity in a black box to tote back home.

Post inspired by: Star Archive, Storm Archive, Storm Control Authority, Meteorological Alchemy, Carcinogenic Storms, Life on Mars, Average Natures.

-----

Via InfraNet Lab

Personal comment:

Possibly interesting in the context of our project focused on "storms & lightnings".

fabric | rblg

This blog is the survey website of fabric | ch - studio for architecture, interaction and research.

We curate and reblog articles, researches, writings, exhibitions and projects that we notice and find interesting during our everyday practice and readings.

Most articles concern the intertwined fields of architecture, territory, art, interaction design, thinking and science. From time to time, we also publish documentation about our own work and research, immersed among these related resources and inspirations.

This website is used by fabric | ch as archive, references and resources. It is shared with all those interested in the same topics as we are, in the hope that they will also find valuable references and content in it.