Tuesday, October 11. 2011

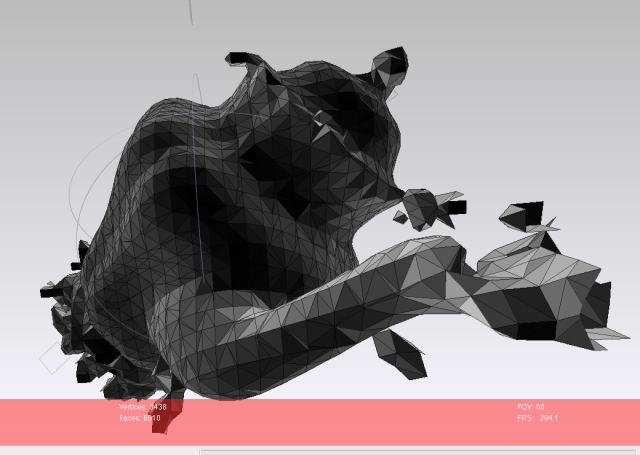

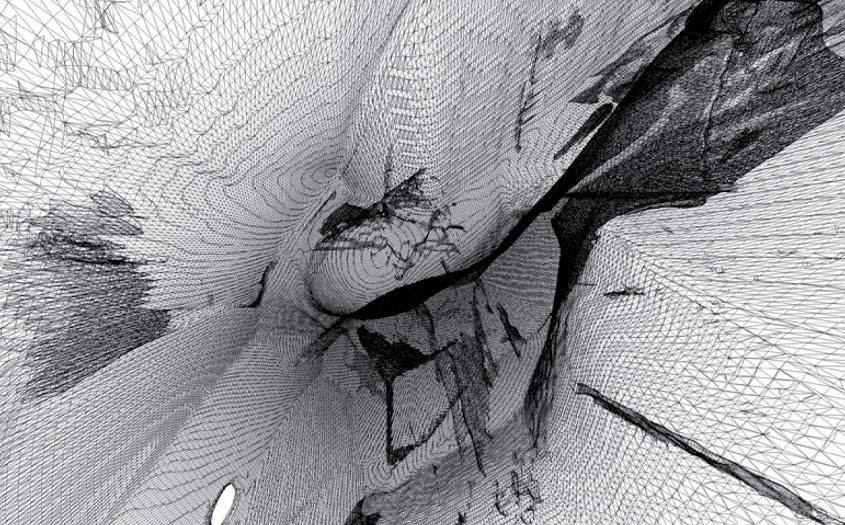

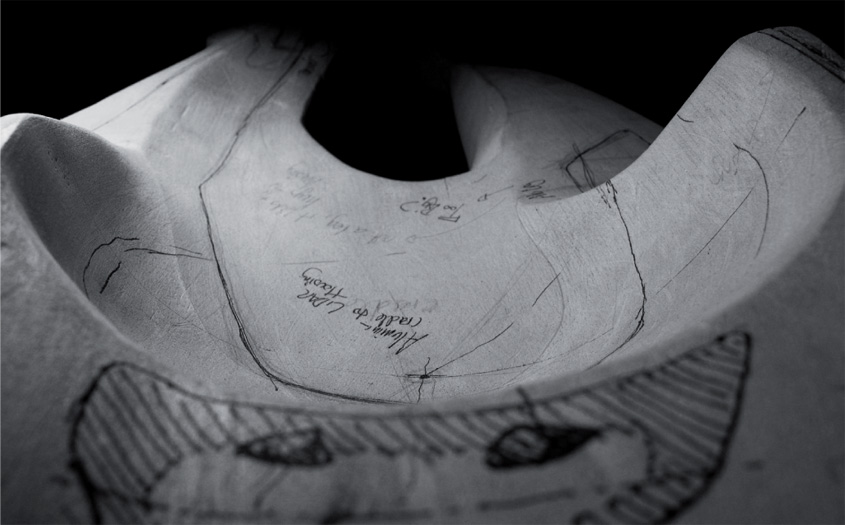

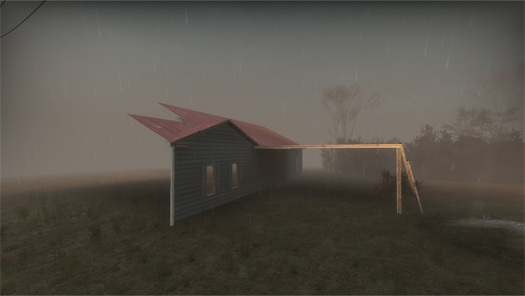

Following from Glitch Reality No1 commissioned by It’s Nice That for Nike, Glitch Reality 2 by Matthew Plummer-Fernandez and David Gardener is a new iteration of exploring physical objects in glitch form. The process is an interesting one, applying digital methodology to alter physical objects. Matthew Plummer-Fernandez explains:

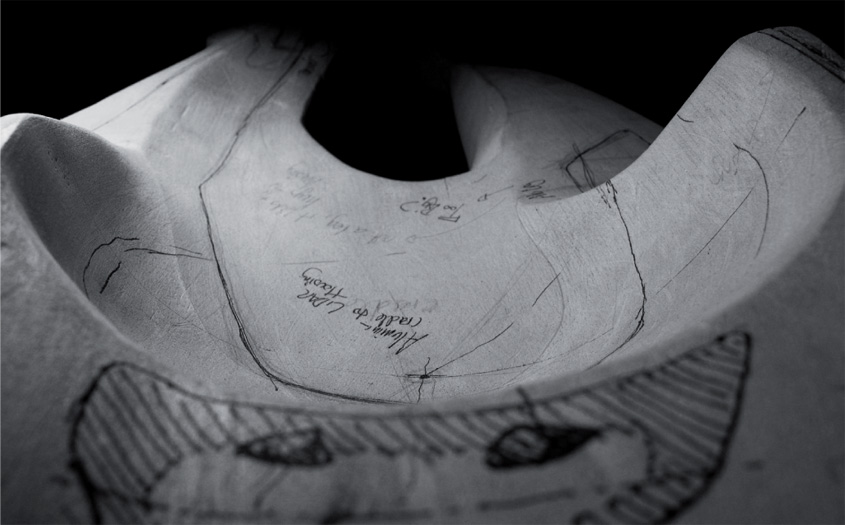

A tea set was created by purchasing non-matching tea set components, scanning them with a Z-corporation 3D scanner and roughly repairing the digital mesh files. The mesh files are then 3D printed to create an instance of this tea-set data that inherits the glitches from the analogue-to-digital-to-analogue translation.

Also below is a short animation experiment Matthew made using a crude 3D scan of his friend's baby, Harriet.

The meshing algorithm curiously created a cocoon from fragments of the scanned baby pram, which inspired the title of the piece.

Continue reading.... Glitch Reality II [Objects]

Tuesday, April 26. 2011

Via MIT Technology Review

-----

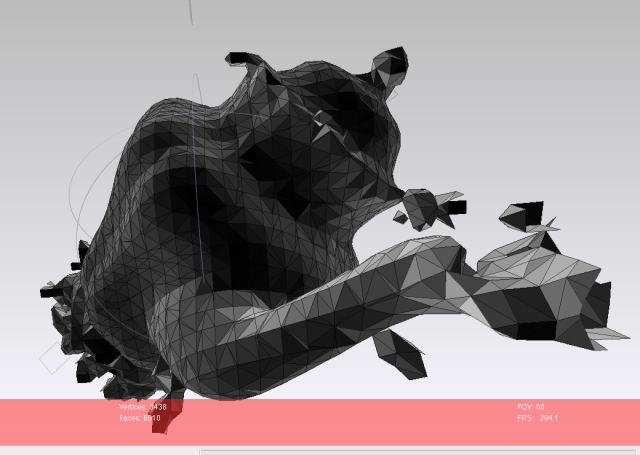

A missile-targeting technology is adapted to process aerial photos into 3-D city maps sharper than Google Earth's.

By Tom Simonite


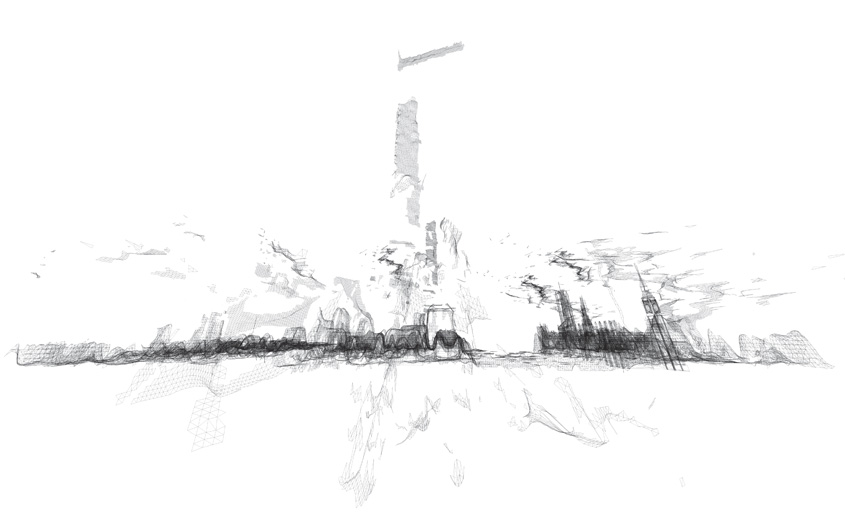

Pixel perfect: Using aerial photos, image-processing software created this 3-D model of San Francisco, accurate to 15 centimeters.

Credit: C3 Technologies

Technology originally developed to help missiles home in on targets has been adapted to create 3-D color models of cityscapes that capture the shapes of buildings to a resolution of 15 centimeters or less. Image-processing software distills the models from aerial photos captured by custom packages of multiple cameras.

The developer is C3 Technologies, a spinoff from Swedish aerospace company Saab. C3 is building a store of eye-popping 3-D models of major cities to license to others for mapping and other applications. The first customer to go public with an application is Nokia, which used the models for 20 U.S. and European cities for an upgraded version of its Ovi online and mobile mapping service released last week. "It's the start of the flying season in North America, and we're going to be very active this year," says Paul Smith, C3's chief strategy officer.

Although Google Earth shows photorealistic buildings in 3-D for many cities, many are assembled by hand, often by volunteers, using a combination of photos and other data in Google's SketchUp 3-D drawing program.

C3's models are generated with little human intervention. First, a plane equipped with a custom-designed package of professional-grade digital single-lens reflex cameras takes aerial photos. Four cameras look out along the main compass points, at oblique angles to the ground, to image buildings from the side as well as above. Additional cameras (the exact number is secret) capture overlapping images from their own carefully determined angles, producing a final set that contains all the information needed for a full 3-D rendering of a city's buildings. Machine-vision software developed by C3 compares pairs of overlapping images to gauge depth, just as our brains use stereo vision, to produce a richly detailed 3-D model.
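The depth-from-overlap idea can be illustrated with the textbook relation for a rectified stereo pair, where depth is focal length times baseline divided by disparity. This is a minimal sketch of that general principle, not C3's pipeline; the function name and all numbers are hypothetical.

```python
def depth_from_disparity(focal_px: float, baseline_m: float, disparity_px: float) -> float:
    """Depth for a rectified stereo pair: Z = f * B / d.

    focal_px     -- focal length expressed in pixels
    baseline_m   -- distance between the two camera positions, in meters
    disparity_px -- horizontal shift of the same point between the two images
    """
    if disparity_px <= 0:
        raise ValueError("disparity must be positive for a point in front of both cameras")
    return focal_px * baseline_m / disparity_px


# Hypothetical values chosen only for illustration (not C3's camera parameters).
near = depth_from_disparity(focal_px=10_000, baseline_m=50.0, disparity_px=1_000)
far = depth_from_disparity(focal_px=10_000, baseline_m=50.0, disparity_px=500)
print(near, far)  # a point that shifts less between the two photos is farther away
```

The inverse relation between disparity and depth is why small matching errors matter more for distant surfaces.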

"Unlike Google or Bing, all of our maps are 360° explorable," says Smith, "and everything, every building, every tree, every landmark, from the city center to the suburbs, is captured in 3-D—not just a few select buildings."

C3's approach has benefits relative to more established methods of modeling cityscapes in 3-D, says Avideh Zakhor, a UC Berkeley professor whose research group developed technology licensed by Google for its Google Earth and Street View projects. Conventionally, a city's 3-D geometry is captured first with an aerial laser scanner—a technique called LIDAR—and then software adds detail.

"The advantage of C3's image-only scheme is that aerial LIDAR is significantly more expensive than photography, because you need powerful laser scanners," says Zakhor. "In theory, you can cover more area for the same cost." However, the LIDAR approach still dominates because it is more accurate, she says. "Using photos alone, you always need to manually correct errors that it makes," says Zakhor. "The 64-million-dollar question is how much manual correction C3 needs to do."

Smith says that C3's technique is about "98 percent" automated, in terms of the time it takes to produce a model from a set of photos. "Our computer vision software is good enough that there is only some minor cleanup," he says. "When your goal is to map the entire world, automation is essential to getting this done quickly and with less cost." He claims that C3 can generate richer models than its competitors, faster.

Images of cities captured by C3 do appear richer than those in Google Earth, and Smith says the models will make mapping apps more functional as well as better-looking. "Behind every pixel is a depth map, so this is not just a dumb image of the city," says Smith. On a C3 map, it is possible to mark an object's exact location in space, whether it's a restaurant entrance or a 45th-story window.

C3 has also developed a version of its camera package to gather ground-level 3-D imagery and data from a car, boat, or Segway. This could enable the models to compete with Google's Street View, which captures only images. C3 is working on taking the technology indoors to map buildings' interiors and connect them with its outdoor models.

Smith says that augmented-reality apps allowing a phone or tablet to blend the virtual and real worlds are another potential use. "We can help pin down real-world imagery very accurately to solve the positioning problem," he says. However, the accuracy of cell phones' positioning systems will first have to catch up with that of C3's maps. Cell phones using GPS can typically locate themselves to within tens of meters, not tens of centimeters.

Copyright Technology Review 2011.

Via BLDGBLOG

-----

by Geoff Manaugh

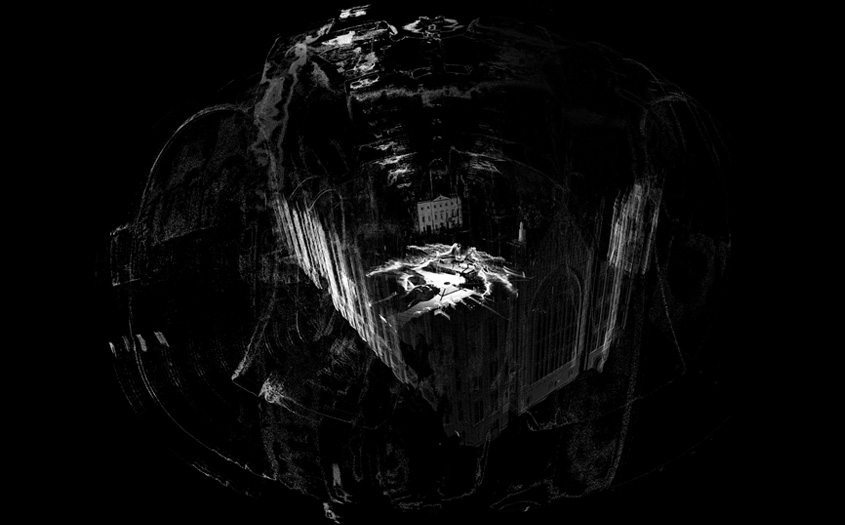

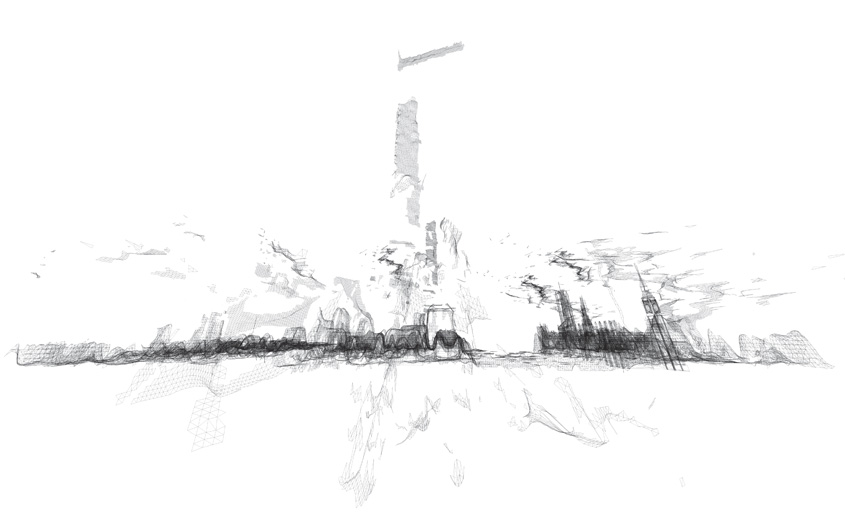

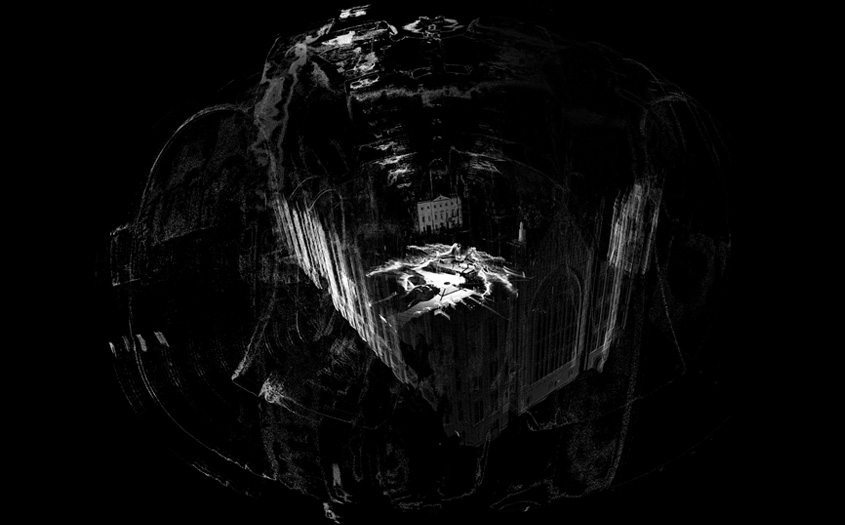

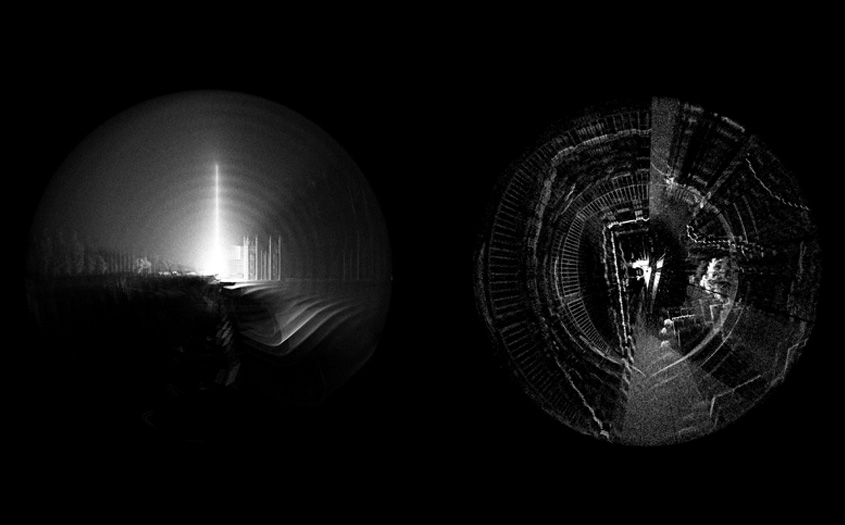

The London-based architectural group ScanLAB—founded by Matthew Shaw and William Trossell—has been doing some fascinating work with laser scanners.

Here are three of their recent projects.

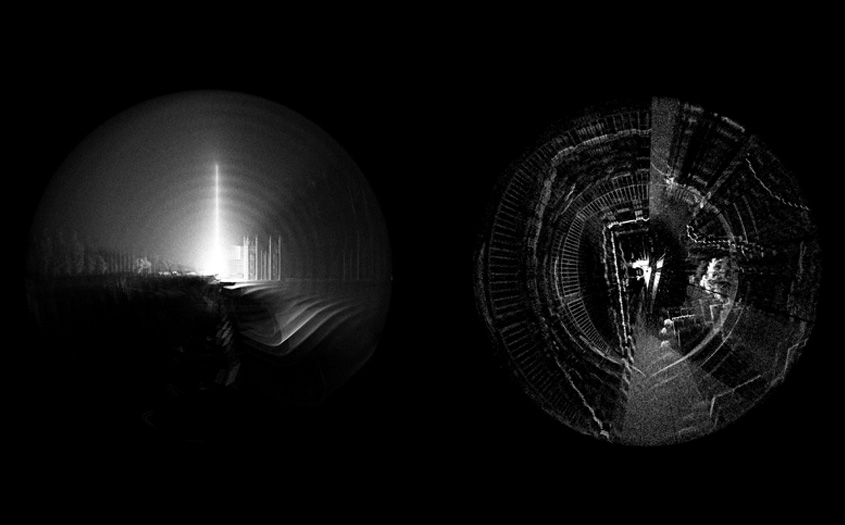

1) Scanning Mist. Shaw and Trossell "thought it might be interesting to see if the scanner could detect smoke and mist. It did and here are the remarkable results!"

[Images: From Scanning the Mist by ScanLAB].

In a way, I'm reminded of photographs by Alexey Titarenko.

2) Scanning an Artificial Weather System. For this project, ScanLAB wanted to "draw attention to the magical properties of weather events." They thus installed a network of what they call "pressure vessels linked to an array of humidity tanks" in the middle of England's Kielder Forest.

[Image: From Slow Becoming Delightful by ScanLAB].

These "humidity tanks" then, at certain atmospherically appropriate moments, dispersed a fine mist, deploying an artificial cloud or fog bank into the woods.

[Image: From Slow Becoming Delightful by ScanLAB].

Then, of course, Shaw and Trossell laser-scanned it.

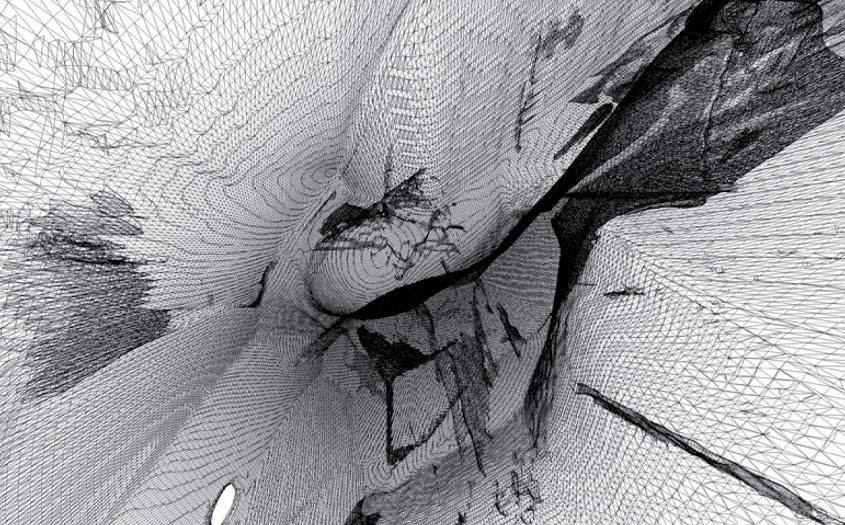

3) Subverting Urban-Scanning Projects through "Stealth Objects." The architectural potential of this final project blows me away. Basically, Shaw and Trossell have been looking at "the subversion of city scale 3D scanning in London." As they explain it, "the project uses hypothetical devices which are installed across the city and which edit the way the city is scanned and recorded." Tools include the "stealth drill" which dissolves scan data in the surrounding area, creating voids and new openings in the scanned urban landscape, and "boundary miscommunication devices" which offset, relocate and invent spatial data such as paths, boundaries, tunnels and walls.

The spatial and counter-spatial possibilities of this are extraordinary. Imagine whole new classes of architectural ornament (ornament as digital camouflage that scans in precise and strange ways), entirely new kinds of building facades (augmented reality meets LiDAR), and, of course, the creation of a kind of shadow-architecture, invisible to the naked eye, that only pops up on laser scanners at various points around the city.

[Images: From Subverting the LiDAR Landscape by ScanLAB].

ScanLAB refers to this as "the deployment of flash architecture"—flash streets, flash statues, flash doors, instancing gates—like something from a short story by China Miéville. The narrative and/or cinematic possibilities of these "stealth objects" are seemingly limitless, let alone their architectural or ornamental use.

Imagine stealth statuary dotting the streetscape, for instance, or other anomalous spatial entities that become an accepted part of the urban fabric. They exist only as representational effects on the technologies through which we view the landscape—but they eventually become landmarks, nonetheless.

For now, Shaw and Trossell explain that they are experimenting with "speculative LiDAR blooms, blockages, holes and drains. These are the result of strategically deployed devices which offset, copy, paste, erase and tangle LiDAR data around them."
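To make the "erase" and "offset" operations concrete, here is a minimal sketch of how such edits could act on a point cloud stored as a list of (x, y, z) tuples. ScanLAB's devices are hypothetical and the source gives no implementation, so the function names, the spherical zone of influence and the sample points below are all assumptions made for illustration.

```python
import math
from typing import List, Tuple

Point = Tuple[float, float, float]


def erase_within(points: List[Point], center: Point, radius: float) -> List[Point]:
    """Drop every point inside a sphere, leaving a void in the scan (a 'hole')."""
    return [p for p in points if math.dist(p, center) > radius]


def offset_within(points: List[Point], center: Point, radius: float, shift: Point) -> List[Point]:
    """Translate every point inside a sphere by `shift`, relocating scanned geometry."""
    moved = []
    for p in points:
        if math.dist(p, center) <= radius:
            moved.append((p[0] + shift[0], p[1] + shift[1], p[2] + shift[2]))
        else:
            moved.append(p)
    return moved


# Hypothetical three-point "scan" with a device placed at the origin.
cloud = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (5.0, 0.0, 0.0)]
device = (0.0, 0.0, 0.0)

holed = erase_within(cloud, device, radius=2.0)
shifted = offset_within(cloud, device, radius=2.0, shift=(0.0, 0.0, 10.0))
print(holed)
print(shifted)
```

Copy, paste and tangle operations could be built the same way, by selecting points near a device and duplicating or permuting them.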

[Images: From Subverting the LiDAR Landscape by ScanLAB].

Here is one such "stealth object," pictured below, designed to be "undetected" by laser-scanning equipment.

Of course, it is not hard to imagine the military being interested in this research, creating stealth body armor, stealth ground vehicles, even stealth forward-operating bases, all of which would be geometrically invisible to radar and/or scanning equipment.

In fact, one could easily imagine a kind of weapon with no moving parts, consisting entirely of radar- and LiDAR-jamming geometries; you would thus simply plant this thing, like some sort of medieval totem pole, in the streets of Mogadishu—or ring hundreds of them in a necklace around Washington D.C.—thus precluding enemy attempts to visualize your movements.

[Images: A hypothetical "stealth object," resistant to laser-scanning, by ScanLAB].

Briefly, ScanLAB's "stealth object" reminds me of an idea bandied about by the U.S. Department of Energy, suggesting that future nuclear-waste entombment sites should be liberally peppered with misleading "radar reflectors" buried in the surface of the earth.

The D.O.E.'s "trihedral" objects would produce "distinctive anomalous magnetic and radar-reflective signatures" for anyone using ground-scanning equipment above. In other words, they would create deliberate false clues, leading potential future excavators to think that they were digging in the wrong place. They would "subvert" the scanning process.

In any case, read more at ScanLAB's website.

Friday, March 25. 2011

Via Creative Applications

-----

FABRICATE is an International Peer Reviewed Conference with supporting publication and exhibition to be held at The Bartlett School of Architecture in London from 15-16 April 2011. Discussing the progressive integration of digital design with manufacturing processes, FABRICATE will bring together pioneers in design and making within architecture, construction, engineering, manufacturing, materials technology and computation.

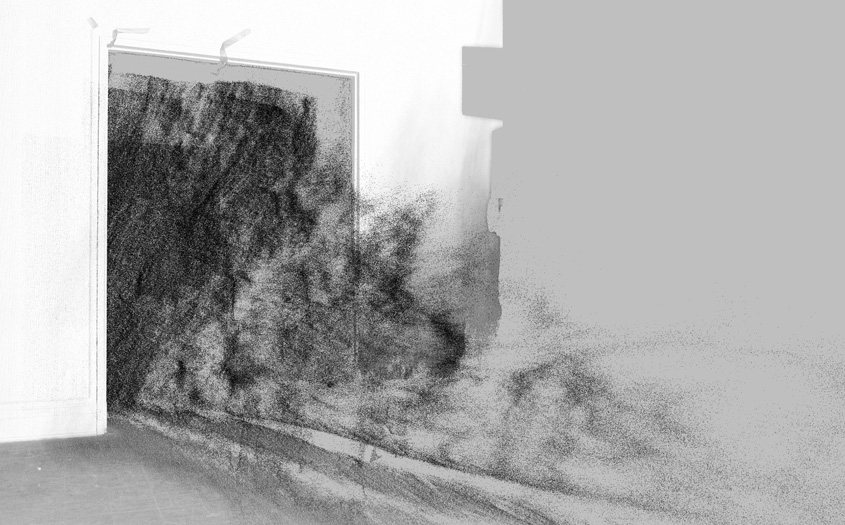

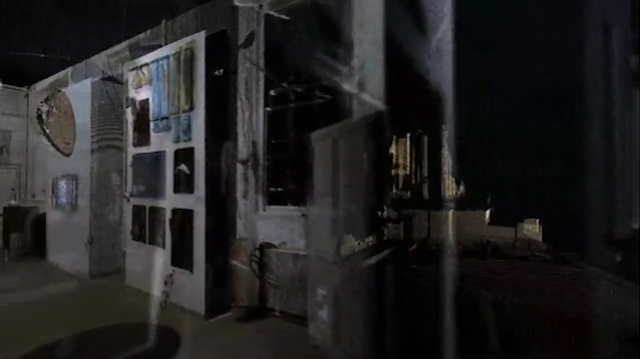

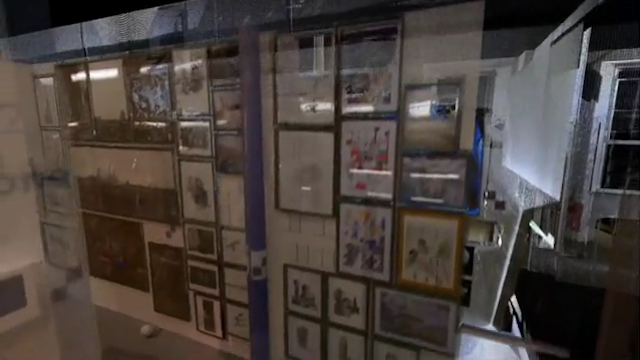

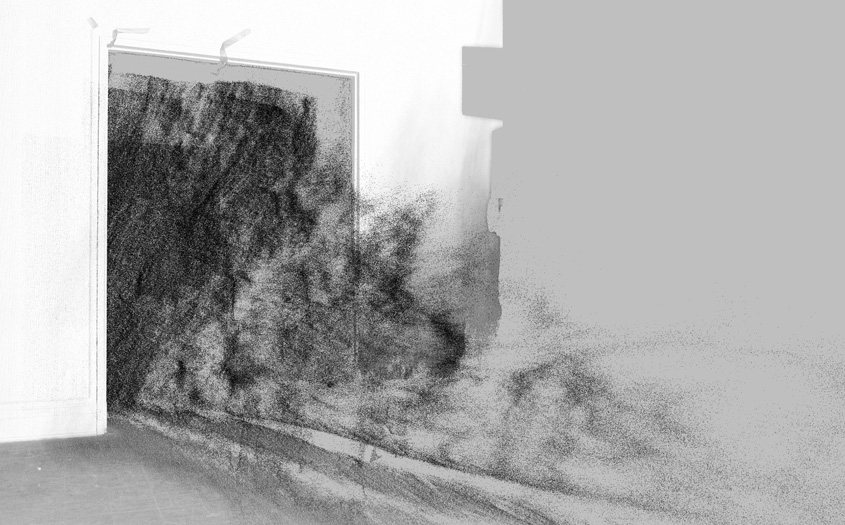

Part of the exhibition is the work of ScanLAB, a research group run by Matthew Shaw and William Trossell at the Bartlett School of Architecture that explores the potential role of 3D scanning in Architecture, Design and Making. In 2010, 48 hours of scanning produced 64 scans of the Slade school’s entire exhibition space. These have been compiled to form a complete 3D replica of the temporary show which has been distilled into a navigable animation and a series of ‘standard’ architectural drawings.

The work becomes a confused collage of hours of delicately created lines and forms set within a feature-perfect representation of the exhibition space. Sometimes a model or image stands out as identifiable; more often a sketch merges into a model and an exhibition stand, creating a blurred hybrid of designs and authors. These drawings represent the closest record to an as-built drawing set for the entire exhibition and an ‘as was’ representation of the Bartlett’s year.

The 3D model was produced using a Faro Photon 120 laser scanner ($40k). Software that enables navigation is Pointools, generic point cloud model software that allows for some of the largest point cloud models – multi-billion point datasets.

For more information on FABRICATE, see http://www.fabricate2011.org

Exhibition Private View

6pm – 14th April 2011

Bartlett School of Architecture Gallery

Wates House, 22 Gordon Street

London WC1H 0QB

For tickets, see fabricate2011.org/registration/

(Thanks Ruairi)

See also Fragments of time and space recorded with Kinect+SLR on NYC Subway … and CITY OF HOLES on bldgblog

Personal comment:

Usually not a big fan of realistic 3D architecture, but I find this "in-between reality" of an incomplete or imperfect scan quite interesting (camera movements excepted...). It is as if the architecture were half appearing, or half disappearing, in an "in-between time zone".

Via MIT Technology Review

-----

With a few snapshots, you can build a detailed virtual replica.

By Tom Simonite


Getting all the angles: Microsoft researcher Johannes Kopf demonstrates a cell phone app that can capture objects in 3-D.

Credit: Microsoft

Capturing an object in three dimensions needn't require the budget of Avatar. A new cell phone app developed by Microsoft researchers can be sufficient. The software uses overlapping snapshots to build a photo-realistic 3-D model that can be spun around and viewed from any angle.

"We want everybody with a cell phone or regular digital camera to be able to capture 3-D objects," says Eric Stollnitz, one of the Microsoft researchers who worked on the project.

To capture a car in 3-D, for example, a person needs to take a handful of photos from different viewpoints around it. The photos can be instantly sent to a cloud server for processing. The app then downloads a photo-realistic model of the object that can be smoothly navigated by sliding a finger over the screen. A detailed 360-degree view of a car-sized object needs around 40 photos; a smaller object like a birthday cake would need 25 or fewer.

If captured with a conventional camera instead of a cell phone, the photos have to be uploaded onto a computer for processing in order to view the results. The researchers have also developed a Web browser plug-in that can be used to view the 3-D models, enabling them to be shared online. "You could be selling an item online, taking a picture of a friend for fun, or recording something for insurance purposes," says Stollnitz. "These 3-D scans take up less bandwidth than a video because they are based on only a few images, and are also interactive."

To make a model from the initial snapshots, the software first compares the photos to work out where in 3-D space they were taken from. The same technology was used in a previous Microsoft research project, PhotoSynth, that gave a sense of a 3-D scene by jumping between different views (see video). However, PhotoSynth doesn't directly capture the 3-D information inside photos.

"We also have to calculate the actual depth of objects from the stereo effect," says Stollnitz, "comparing how they appear in different photos." His software uses what it learns through that process to break each image apart and spread what it captures through virtual 3-D space (see video, below). The pieces from different photos are stitched together on the fly as a person navigates around the virtual space to generate his current viewpoint, creating the same view that would be seen if he were walking around the object in physical space.

Look at the video HERE.
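The step Stollnitz describes, spreading each image through virtual 3-D space once depth is known, corresponds to the standard pinhole back-projection of a pixel into a 3-D point. The sketch below shows that general relation only; it is not Microsoft's code, and the intrinsics (`fx`, `fy`, `cx`, `cy`) and sample values are hypothetical.

```python
from typing import Tuple


def backproject(u: float, v: float, depth: float,
                fx: float, fy: float, cx: float, cy: float) -> Tuple[float, float, float]:
    """Map pixel (u, v) with known depth to a 3-D point in the camera's frame.

    Pinhole model: x = (u - cx) * Z / fx, y = (v - cy) * Z / fy, z = Z.
    fx, fy are focal lengths in pixels; (cx, cy) is the principal point.
    """
    x = (u - cx) * depth / fx
    y = (v - cy) * depth / fy
    return (x, y, depth)


# Hypothetical 640x480 camera with a 500-pixel focal length.
center_point = backproject(320, 240, 2.0, fx=500, fy=500, cx=320, cy=240)
edge_point = backproject(820, 240, 2.0, fx=500, fy=500, cx=320, cy=240)
print(center_point, edge_point)
```

Applying this to every pixel of every photo, then transforming each camera's points by its estimated pose, would give the pieces that are later stitched together for a new viewpoint.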

"This is an interesting piece of software," says Jason Hurst, a product manager with 3DMedia, which makes software that combines pairs of photos to capture a single 3-D view of a scene. However, using still photos does have its limitations, he points out. "Their method, like ours, is effectively time-lapse, so it can't deal with objects that are moving," he says.

3DMedia's technology is targeted at displays like 3-D TVs or Nintendo's new glasses-free 3-D handheld gaming device. But the 3-D information built up by the Microsoft software could be modified to display on such devices, too, says Hurst, because the models it builds contain enough information to create the different viewpoints for a person's eyes.

Hurst says that as more 3-D-capable hardware appears, people will need more tools that let them make 3-D content. "The push of 3-D to consumers has come from TV and computer device makers, but the content is lagging," says Hurst. "Enabling people to make their own is a good complement."

Copyright Technology Review 2011.

Monday, March 21. 2011

Via Andreas Angelidakis

-----

by Andreas Angelidakis

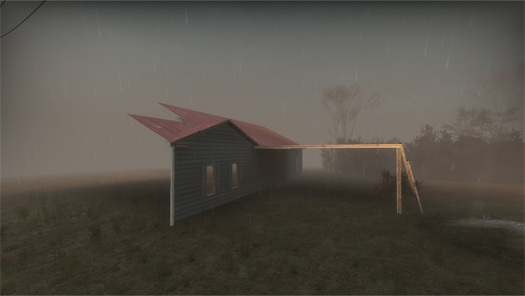

After a really long time, I found myself in Second Life Again

at first I thought the teleport took me to the wrong place

Could this really be the same lazy suburban island I left a few years ago?

And where was everybody? The place felt like the day after an invisible bomb

I guess this was not a regular bomb, it was just an explosion of development,

it made me think of all the hype that surrounded Second Life a few years back.

Obviously many investors placed their money here. I guess they lost it,

and most probably because they promoted Second Life as a "digital revolution",

and not as the niche geekfest it really is.

another failed capitalist expansion, that took everybody to nowhere.

I peeked at vacant spaces inside generic corporate salary-buildings

flew up to the sky, and decided to leave

I saw a bridge and another, uninhabited island, strangely cut in half.

I assumed it was the graphics setting on my pc,

and as I flew closer the rest of the island would come into view

but no, the island was indeed cut off.

The development ended abruptly at sea.

I examined the cut, it was clean

the topography sliced by the programmer who ran out of space on his server?

Was this the real City of Bits? Cloud Computing Urbanism?

hovering above the sea,

I appreciated the new graphics settings,

where the sea softly glistens under the moonlight,

as it would any other full moon weekend

Personal comment:

Artificial cities also experience (invisible) cataclysms.

Wednesday, October 06. 2010

-----

For its new virtual museum, Adobe wanted more than a website designer: It wanted a forward-thinking architect who could make the space feel "physical." It turned to Filippo Innocenti, co-founder of Spin+ and an associate architect at Zaha Hadid Architects. via Arch Record

Personal comment:

11 years after La_Fabrique and 6 after MIX-m (for MIXed museum, at the MAMCO and later at CAC), Adobe is launching its digital museum designed by Filippo Innocenti & Zaha Hadid. Thank you, the "avant-garde" ;) ...

Is this the time of the "slope of enlightenment" for digital museums, open 24/7 worldwide and dedicated to digital content?

Tuesday, October 05. 2010

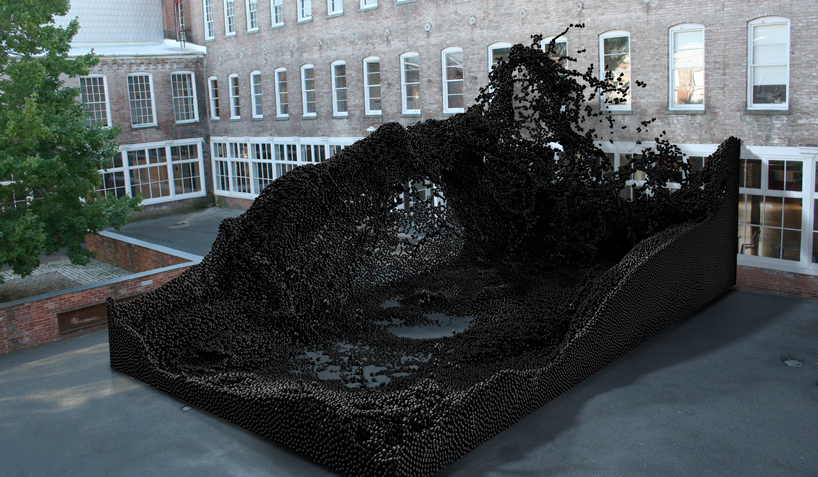

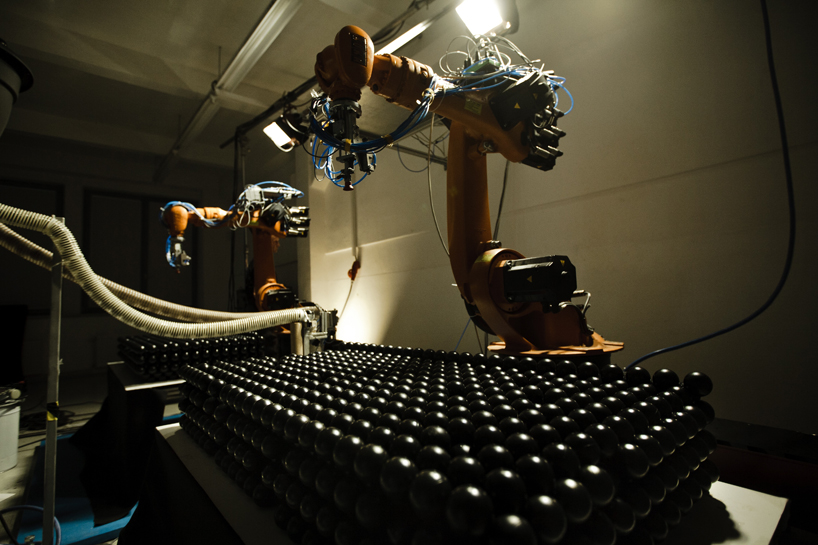

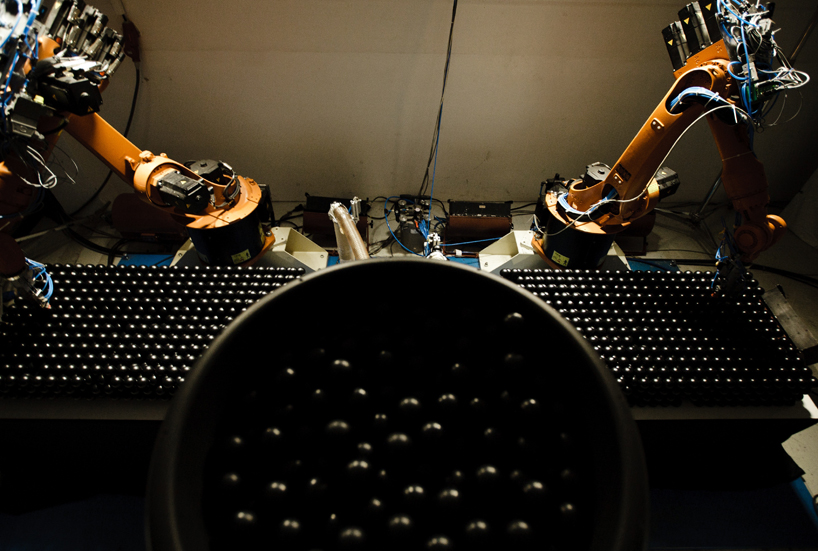

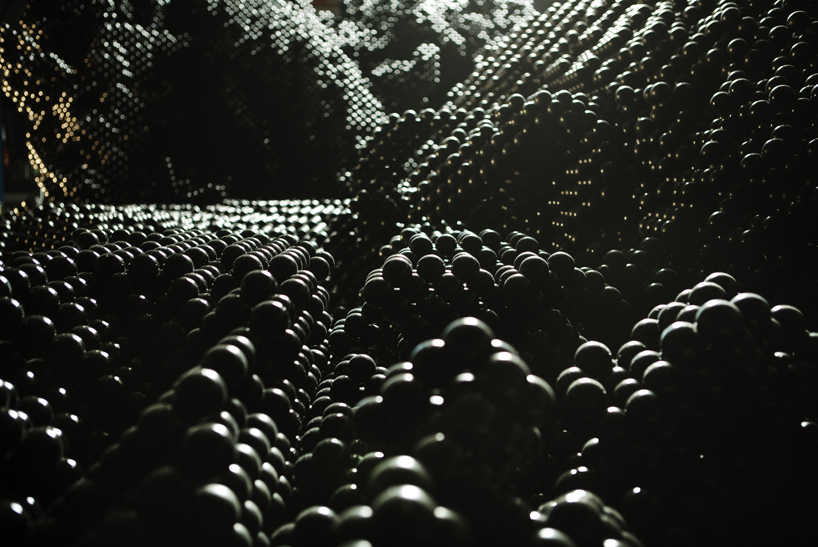

Personal comment:

A new work that uses industrial robots for a different purpose. Of course, it reminds me of the work of architects Gramazio & Kohler (process, not result) on "digital materiality".

Monday, August 16. 2010

© Dirk Schelpmeier, Marcus Brehm

The experimental pavilion BOXEL was designed and realized by students of the architecture department during the last summer semester on the campus of the University of Applied Sciences in Detmold. The expressive design by Henri Schweynoch, which succeeded in an impromptu competition, creates a generous spatial scenery for presentations, concerts, events and gatherings on the campus. More images and information after the break.

© Dirk Schelpmeier, Marcus Brehm

Within the digital design course, led by Prof. Marco Hemmerling, the students were asked not only to design a summer pavilion but also to construct and realize the design as a mock-up at 1:1 scale, using digital design and fabrication tools. Against this background, the digital workflow, including parameters of production, construction and material, became a key issue in the further process.

The building shape is based on a minimal surface and consists of more than 2,000 beer boxes that are organized along the free-form geometry. The temporary construction was designed using parametric software to control the position of the boxes in relation to the overall geometry and to analyze the structural performance.
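As a rough idea of what placing boxes along a minimal surface parametrically can look like, the sketch below samples box positions on a catenoid, a classic minimal surface. The source does not say which surface or software the students used, so the choice of catenoid, the function names and the grid sizes are all assumptions made for illustration.

```python
import math
from typing import List, Tuple

Point = Tuple[float, float, float]


def catenoid_point(u: float, v: float, c: float = 1.0) -> Point:
    """Point on a catenoid: x = c*cosh(v/c)*cos(u), y = c*cosh(v/c)*sin(u), z = v."""
    r = c * math.cosh(v / c)
    return (r * math.cos(u), r * math.sin(u), v)


def box_positions(n_around: int, n_up: int, height: float, c: float = 1.0) -> List[Point]:
    """Sample a regular (u, v) grid and return one box center per grid cell.

    n_around -- boxes per ring around the axis
    n_up     -- number of rings (must be at least 2)
    height   -- total height covered, centered on z = 0
    """
    positions = []
    for j in range(n_up):
        v = -height / 2 + height * j / (n_up - 1)
        for i in range(n_around):
            u = 2 * math.pi * i / n_around
            positions.append(catenoid_point(u, v, c))
    return positions


# Hypothetical grid: 8 boxes per ring, 5 rings, 2 units tall.
centers = box_positions(n_around=8, n_up=5, height=2.0)
print(len(centers), centers[0])
```

Changing a single parameter such as `c` or the grid density regenerates every box position, which is the practical appeal of a parametric setup.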

© Dirk Schelpmeier, Marcus Brehm

In order to define the construction concept and the detailing of the connection, several static load tests were carried out to understand the structural behaviour of the unusual building material, especially since the empty beer boxes were not stacked on top of one another but freely arranged next to each other. In parallel with a series of shearing and bending tests in the university’s laboratory of material research, the structural concept was simulated and optimized using FEM software.

Finally a simple system of slats and screws was chosen for the assembly of the pavilion that allowed for a flexible and invisible connection. Additional bracings were placed in the upper part of the boxes to generate the required stiffness of the modules. The structural load transfer was realized by concrete-lined boxes at the three base points that served as foundation for the pavilion.

© Dirk Schelpmeier, Marcus Brehm

BOXEL was erected in only one week by the students and served as a scenic backdrop for the end-of-semester party and for the international summer school (www.a-d-a-d.com) at the University in Detmold. The beer boxes, which had been in use for ten years, were supplied by the local brewery and will be recycled when the pavilion is disassembled.

A second pavilion design that is based on moveable wooden frames, designed by Lisa Hagemann, was also selected during the competition phase and will probably be realized during the next summer semester in 2011.

Teaching staff: Prof. Marco Hemmerling, Visiting-Prof. Matthias Michl, David Lemberski, Guido Brand and Claus Deis

Students: Henri Schweynoch (Design), Guido Spriewald, Elena Daweke, Lisa Hagemann, Bernd Benkel, Thomas Serwas, Michael Brezina, Samin Magriso, Caroline Zij, Michelle Layahou with Jan Bienek, Christoph Strotmann, Matthias Kemper, Frank Püchner, Florian Tolksdorf, Florian Nienhaus, Andre Osterhaus, Viktoria Vaintraub, Jörg Linden, Bianca Mohr, Kristina Schmolinski, Tobias Jonk

Personal comment:

Another example of pixel architecture?

Monday, August 09. 2010

Via Mammoth

-----

Through Brian Finoki, I ran into the game-world “photography” of Robert Overweg (“Facade 2” pictured above), who hunts the worlds of video games not to run up a body count, but for architectural fragments and broken landscapes, moments where the rough edges of programmed rules find visual expression. I recommend “Glitches” and “The end of the virtual world”, in particular.
