Thursday, May 02. 2013

Via MIT Technology Review

-----

Smart grid technology has been implemented in many places, but Florida’s new deployment is the first full-scale system.

By Kevin Bullis on May 2, 2013

Smart power: Andrew Brown, an engineer at Florida Power & Light, monitors equipment in one of the utility’s smart grid diagnostic centers.

The first comprehensive, large-scale smart grid is now operating. The $800 million project, built in Florida, has made power outages shorter and less frequent, and helped some customers save money, according to the utility that operates it.

Smart grids should be far more resilient than conventional grids, which is important for surviving storms, and make it easier to install more intermittent sources of energy like solar power (see “China Tests a Small Smart Electric Grid” and “On the Smart Grid, a Watt Saved Is a Watt Earned”). The Recovery Act of 2009 gave a vital boost to the development of smart grid technology, and the Florida grid was built with $200 million from the U.S. Department of Energy made available through the Recovery Act.

Dozens of utilities are building smart grids, or at least installing some smart grid components, but no one had put together all of the pieces at a large scale. Florida Power & Light’s project incorporates a wide variety of devices for monitoring and controlling every aspect of the grid, not just, say, smart meters in people’s homes.

“What is different is the breadth of what FPL’s done,” says Eric Dresselhuys, executive vice president of global development at Silver Spring Networks, a company that’s setting up smart grids around the world, and installed the network infrastructure for Florida Power & Light (see “Headed into an IPO, Smart Grid Company Struggles for Profit”).

Many utilities are installing smart meters: Pacific Gas & Electric in California, for example, has installed twice as many as FPL. But while these are important, the flexibility and resilience that the smart grid promises depend on networking them together with thousands of sensors at key points in the grid: substations, transformers, local distribution lines, and high-voltage transmission lines. (A project in Houston is similar in scope, but involves half as many customers and covers somewhat less of the grid.)

In FPL’s system, devices at all of these places are networked—data jumps from device to device until it reaches a router that sends it back to the utility—and that makes it possible to sense problems before they cause an outage, and to limit the extent and duration of outages that still occur (see “The Challenges of Big Data on the Smart Grid”). The project involved 4.5 million smart meters and over 10,000 other devices on the grid.
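The hop-by-hop relaying described above can be illustrated with a small breadth-first search. This is only a sketch under assumptions. The article does not describe the routing protocol actually used in FPL’s network, and the device names and links below are hypothetical. Each device knows only its radio neighbors, and a reading travels along the shortest chain of hops to any router.

```python
from collections import deque


def hop_path(links, start, routers):
    """Return the shortest hop-by-hop path from `start` to any router, or None.

    `links` maps each device to the devices it can reach directly (hypothetical
    radio neighbors); `routers` is the set of devices that backhaul to the utility.
    """
    queue = deque([[start]])
    seen = {start}
    while queue:
        path = queue.popleft()
        device = path[-1]
        if device in routers:
            return path
        for neighbor in links.get(device, ()):
            if neighbor not in seen:
                seen.add(neighbor)
                queue.append(path + [neighbor])
    return None  # no chain of devices reaches a router


# Hypothetical mesh: two meters, a transformer sensor and a substation sensor.
links = {
    "meter_17": ["meter_18", "transformer_4"],
    "meter_18": ["meter_17", "substation_sensor_2"],
    "transformer_4": ["meter_17", "router_A"],
    "substation_sensor_2": ["meter_18", "router_B"],
}
routers = {"router_A", "router_B"}

print(hop_path(links, "meter_17", routers))
# ['meter_17', 'transformer_4', 'router_A']

# If the transformer sensor drops out, the reading reroutes through meter_18.
degraded = {k: [n for n in v if n != "transformer_4"]
            for k, v in links.items() if k != "transformer_4"}
print(hop_path(degraded, "meter_17", routers))
# ['meter_17', 'meter_18', 'substation_sensor_2', 'router_B']
```

The second call shows why a mesh is resilient. Losing one relay does not cut a meter off, as long as some other chain of neighbors still reaches a router.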

The project was completed just last week, so data about the impact of the whole system isn’t available yet. But parts of the smart grid have been operating for a year or more, and there are examples of improved operation. Customers can track their energy usage by the hour on a website that organizes data from smart meters. This helped one customer identify a problem with his air conditioner when he saw a jump in electricity consumption compared with the previous year in similar weather, says Brian Olnick, vice president of smart grid solutions at Florida Power & Light.

The meters have also cut the duration of power outages. Often power outages are caused by problems within a home, like a tripped circuit breaker. Instead of dispatching a crew to investigate, which could take hours, it is possible to resolve the issue remotely. That happened 42,000 times last year, reducing the duration of outages by about two hours in each case, Olnick says.

The utility also installed sensors that can continually monitor gases produced by transformers to “determine whether the transformer is healthy, is becoming sick, or is about to experience an outage,” says Mark Hura, global smart grid commercial leader at GE, which makes the sensor.

Ordinarily, utilities check large transformers no more than once every six months, he says. The process involves taking an oil sample and sending it to a lab. In one case this year, the new sensor system identified an ailing transformer in time to prevent a power outage that could have affected 45,000 people. Similar devices allowed the utility to identify 400 ailing neighborhood-level transformers before they failed.

Smart grid technology is having an impact elsewhere. After Hurricane Sandy, sensors helped utility workers in some areas restore power faster than in others. One problem smart grids address is nested power outages—when smaller problems are masked by an outage that hits a large area. In a conventional system, after utility workers fix the larger problem, it can take hours for them to realize that a downed line has cut off power to a small area. With the smart grid, utility workers can ping sensors at smart meters or power lines before they leave an area, identifying these smaller outages.
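The nested-outage check described above amounts to pinging every meter after the main repair and grouping the silent ones by the equipment that feeds them. This is a minimal sketch that assumes a hypothetical meter-to-transformer map and hypothetical ping results. The rule separating a dead line from problems at individual premises is an illustrative heuristic; the article does not attribute it to any utility.

```python
from collections import defaultdict


def find_nested_outages(ping_ok, meter_to_transformer):
    """Group meters that stay dark after the main repair by the transformer feeding them.

    `ping_ok` maps meter IDs to True if the meter answered a ping; a missing entry is
    treated as no answer. Returns {transformer: (label, sorted dark meters)}.
    """
    total = defaultdict(int)
    dark = defaultdict(list)
    for meter, transformer in meter_to_transformer.items():
        total[transformer] += 1
        if not ping_ok.get(meter, False):
            dark[transformer].append(meter)

    report = {}
    for transformer, meters in dark.items():
        # Heuristic (assumption): if every meter behind a transformer is dark, suspect
        # the line or transformer; if only some are, suspect individual premises.
        if len(meters) == total[transformer]:
            label = "line or transformer"
        else:
            label = "individual premises"
        report[transformer] = (label, sorted(meters))
    return report


# Hypothetical feeder after the main fault has been repaired.
meter_to_transformer = {"m1": "T1", "m2": "T1", "m3": "T2", "m4": "T2", "m5": "T3", "m6": "T3"}
ping_ok = {"m1": True, "m2": True, "m3": False, "m4": False, "m5": True}  # m6 never answered
print(find_nested_outages(ping_ok, meter_to_transformer))
# {'T2': ('line or transformer', ['m3', 'm4']), 'T3': ('individual premises', ['m6'])}
```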

And smart grid devices are helping utilities identify problems that could otherwise go misdiagnosed for years. In Chicago, for example, new voltage monitors indicated that a neighborhood was getting the wrong voltage, a problem that could wear out appliances. The fix took a few minutes.

As more renewable energy is installed, the smart grid will make it easier for utilities to keep the lights on. Without local sensors, it’s difficult for them to know how much power is coming from solar panels—or how much backup they need to have available in case clouds roll in and that power drops.

But whether the nearly $1 billion investment in smart grid infrastructure will pay for itself remains to be seen. The DOE is preparing reports on the impact of the technology to be published this year and next. Smart grid technology is also raising questions about security, since the networks could offer hackers new targets (see “Hacking the Smart Grid”).

Personal comment:

This is good news! As many countries are now looking to build smart grids, let's hope that the first outcomes of this implementation will be positive.

Smart grids try to do for energy what the internet did for information: potentially, everybody could produce clean energy and "share it" through the grid (when they have a surplus, or at certain times), and monitor its use, for good and for bad. We'll certainly see some sort of Google of the energy sector very soon. This might have a huge impact, especially on renewable energies. The big problem with the "clean" approach remains how to efficiently store excess energy when it is not needed, so as to use it later when it is.

In his latest book, "The Third Industrial Revolution", Jeremy Rifkin describes what a more horizontal society, based on both information networks and energy networks, could look like. Though it is certainly simplified in many respects and omits counterexamples, it is very exciting nonetheless!

Tuesday, April 30. 2013

Via MIT Technology Review

-----

By David Talbot on April 16, 2013

Storing video and other files more intelligently reduces the demand on servers in a data center.

Worldwide, data centers consume huge and growing amounts of electricity.

New research suggests that data centers could significantly cut their electricity usage simply by storing fewer copies of files, especially videos.

For now the work is theoretical, but over the next year, researchers at Alcatel-Lucent’s Bell Labs and MIT plan to test the idea, with an eye to eventually commercializing the technology. It could be implemented as software within existing facilities. “This approach is a very promising way to improve the efficiency of data centers,” says Emina Soljanin, a researcher at Bell Labs who participated in the work. “It is not a panacea, but it is significant, and there is no particular reason that it couldn’t be commercialized fairly quickly.”

With the new technology, any individual data center could be expected to save 35 percent in capacity and electricity costs—about $2.8 million a year or $18 million over the lifetime of the center, says Muriel Médard, a professor at MIT’s Research Laboratory of Electronics, who led the work and recently conducted the cost analysis.

So-called storage area networks within data center servers rely on a tremendous amount of redundancy to make sure that downloading videos and other content is a smooth, unbroken experience for consumers. Portions of a given video are stored on different disk drives in a data center, with each sequential piece cued up and buffered on your computer shortly before it’s needed. In addition, copies of each portion are stored on different drives, to provide a backup in case any single drive is jammed up. A single data center often serves millions of video requests at the same time.

The new technology, called network coding, cuts way back on the redundancy without sacrificing the smooth experience. Algorithms transform the data that makes up a video into a series of mathematical functions that can, if needed, be solved not just for that piece of the video, but also for different parts. This provides a form of backup that doesn’t rely on keeping complete copies of the data. Software at the data center could simply encode the data as it is stored and decode it as consumers request it.
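The simplest instance of the idea is a single XOR parity chunk, and it shows how a coded chunk can stand in for full copies. This is a hedged sketch, not the Bell Labs/MIT scheme. The researchers’ approach uses more general mathematical functions, and the chunk and drive names here are hypothetical. With plain replication, two chunks need four drives to survive the loss of any one drive. With a parity chunk, three drives suffice.

```python
def xor_bytes(x: bytes, y: bytes) -> bytes:
    """Byte-wise XOR of two equal-length chunks."""
    if len(x) != len(y):
        raise ValueError("chunks must have the same length")
    return bytes(a ^ b for a, b in zip(x, y))


def encode(chunk_a: bytes, chunk_b: bytes) -> dict:
    """Store two chunks plus their XOR on three (hypothetical) drives."""
    return {"drive1": chunk_a, "drive2": chunk_b, "drive3": xor_bytes(chunk_a, chunk_b)}


def decode(drives: dict) -> tuple:
    """Rebuild (chunk_a, chunk_b) from any two of the three drives."""
    if len(drives) < 2:
        raise ValueError("need at least two of the three drives")
    a, b, parity = drives.get("drive1"), drives.get("drive2"), drives.get("drive3")
    if a is None:
        a = xor_bytes(b, parity)  # A = B xor (A xor B)
    if b is None:
        b = xor_bytes(a, parity)  # B = A xor (A xor B)
    return a, b


stored = encode(b"video-part-1", b"video-part-2")
for failed in stored:
    # Simulate losing each drive in turn and rebuilding from the other two.
    survivors = {name: data for name, data in stored.items() if name != failed}
    assert decode(survivors) == (b"video-part-1", b"video-part-2")
print("any single drive can fail: 3 drives instead of 4 for the same protection")
```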

Médard’s group previously proposed a similar technique for boosting wireless bandwidth (see “A Bandwidth Breakthrough”). That technology deals with a different problem: wireless networks waste a lot of bandwidth on back-and-forth traffic to recover dropped portions of a signal, called packets. If mathematical functions describing those packets are sent in place of the packets themselves, it becomes unnecessary to re-send a dropped packet; a mobile device can solve for the missing packet with minimal processing. That technology, which improves capacity up to tenfold, is currently being licensed to wireless carriers, she says.
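The packet-recovery idea can be sketched as linear coding over GF(2). Here a "mathematical function" of packets is simply an XOR of a chosen subset, tagged with a coefficient vector recording which packets went in. This is an illustrative assumption, not the licensed technology. Practical random linear network coding typically draws random coefficients from a larger finite field, and the fixed coefficient vectors and packet contents below are hypothetical. The receiver runs Gaussian elimination and recovers all k packets as soon as it holds k independent combinations, whichever ones were dropped.

```python
def xor_bytes(x: bytes, y: bytes) -> bytes:
    return bytes(a ^ b for a, b in zip(x, y))


def combine(packets, coeffs):
    """Coded packet = XOR of the packets whose coefficient is 1 (a GF(2) linear combination)."""
    payload = bytes(len(packets[0]))
    for c, p in zip(coeffs, packets):
        if c:
            payload = xor_bytes(payload, p)
    return list(coeffs), payload


def decode(received, k):
    """Gauss-Jordan elimination over GF(2); returns the k original packets or None."""
    rows = [(list(c), p) for c, p in received]
    for col in range(k):
        # Find a row not yet used as a pivot that has a 1 in this column.
        pivot = next((r for r in range(col, len(rows)) if rows[r][0][col]), None)
        if pivot is None:
            return None  # not enough independent combinations received yet
        rows[col], rows[pivot] = rows[pivot], rows[col]
        pc, pp = rows[col]
        # Clear this column from every other row.
        for r in range(len(rows)):
            if r != col and rows[r][0][col]:
                c, p = rows[r]
                rows[r] = ([a ^ b for a, b in zip(c, pc)], xor_bytes(p, pp))
    # Rows 0..k-1 now carry identity coefficients, so their payloads are the originals.
    return [rows[i][1] for i in range(k)]


packets = [b"pkt0", b"pkt1", b"pkt2"]
sent = [combine(packets, c) for c in ([1, 1, 0], [0, 1, 1], [1, 1, 1], [1, 0, 0])]

# The second coded packet is dropped; the other three are independent.
print(decode([sent[0], sent[2], sent[3]], k=3))
# [b'pkt0', b'pkt1', b'pkt2']

# Dropping the first one instead leaves dependent combinations ([0,1,1] xor [1,1,1] = [1,0,0]).
print(decode([sent[1], sent[2], sent[3]], k=3))
# None
```

Random coefficients make a dependent set unlikely in practice. When one does occur, the receiver simply waits for one more coded packet rather than asking for a specific lost one.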

Between the electricity needed to power computers and the air conditioning required to cool them, data centers worldwide consume so much energy that by 2020 they will cause more greenhouse-gas emissions than global air travel, according to the consulting firm McKinsey.

Smarter software to manage them has already proved to be a huge boon (see “A New Net”). Many companies are building data centers that use renewable energy and smarter energy management systems (see “The Little Secrets Behind Apple’s Green Data Centers”). And there are a number of ways to make chips and software operate more efficiently (see “Rethinking Energy Use in Data Centers”). But network coding could make a big contribution by cutting down on the extra disk drives—each needing energy and cooling—that cloud storage providers now rely on to ensure reliability.

This is not the first time that network coding has been proposed for data centers. But past work was geared toward recovering lost data. In this case, Médard says, “we have considered the use of coding to improve performance under normal operating conditions, with enhanced reliability a natural by-product.”

Personal comment:

Another link related to our workshop at Tsinghua University, and to data storage at large.

The link between energy, algorithms and data storage made obvious. To be read in parallel with the previous repost from Kazys Varnelis, Into the Cloud (with zombies).

-

Along the same lines, another piece of code could cut flight delays and thereby save a midsized airline approximately $1.2 million in annual crew costs and $5 million in annual fuel...

Monday, April 22. 2013

Via Computed.Blg via Open Compute Project

-----

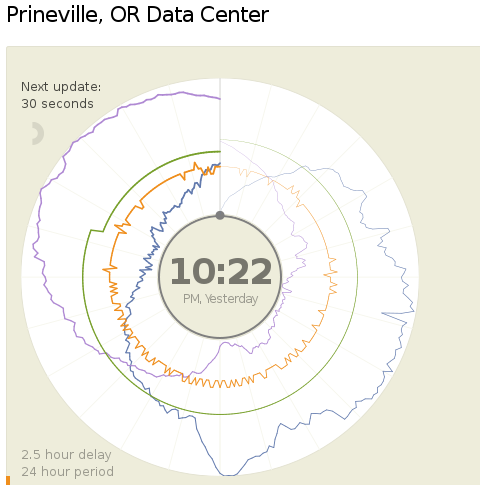

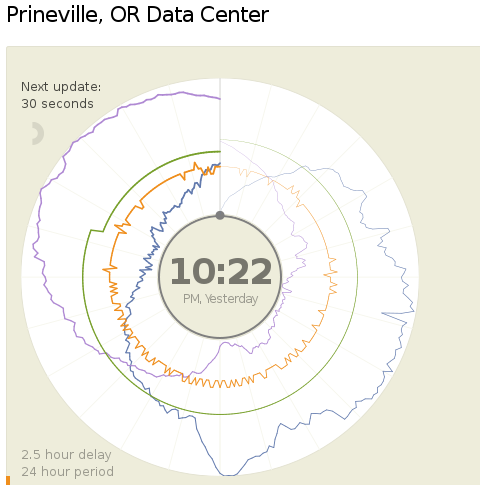

Today (18.04.2013), we at Facebook launched two public dashboards that report continuous, near-real-time data for key efficiency metrics, specifically PUE (power usage effectiveness) and WUE (water usage effectiveness), for our data centers in Prineville, OR and Forest City, NC. These dashboards include both a granular look at the past 24 hours of data and a historical view of the past year’s values. In the historical view, trends within each data set and correlations between different metrics become visible. Once our data center in Luleå, Sweden, comes online, we’ll begin publishing for that site as well.

We began sharing PUE for our Prineville data center at the end of Q2 2011 and released our first Prineville WUE in the summer of 2012. Now we’re pulling back the curtain to share some of the same information that our data center technicians view every day. We’ll continue updating our annualized averages as we have in the past, and you’ll be able to find them on the Prineville and Forest City dashboards, right below the real-time data.

Why are we doing this? Well, we’re proud of our data center efficiency, and we think it’s important to demystify data centers and share more about what our operations really look like. Through the Open Compute Project (OCP), we’ve shared the building and hardware designs for our data centers. These dashboards are the natural next step, since they answer the question, “What really happens when those servers are installed and the power’s turned on?”

Creating these dashboards wasn’t a straightforward task. Our data centers aren’t completed yet; we’re still in the process of building out suites and finalizing the parameters for our building management systems. All our data centers are literally still construction sites, with new data halls coming online at different points throughout the year. Since we’ve created dashboards that visualize an environment with so many shifting variables, you’ll probably see some weird numbers from time to time. That’s OK. These dashboards are about surfacing raw data, and sometimes raw data looks messy. But we believe in iteration, in getting projects out the door and improving them over time. So we welcome you behind the curtain, wonky numbers and all. As our data centers near completion and our load evens out, we expect these inevitable fluctuations to correspondingly decrease.

We’re excited about sharing this data, and we encourage others to do the same. Working together with AREA 17, the company that designed these visualizations, we’ve decided to open-source the front-end code for these dashboards so that any organization interested in sharing PUE, WUE, temperature, and humidity at its data center sites can use these dashboards to get started. Sometime in the coming weeks we’ll publish the code on the Open Compute Project’s GitHub repository. All you have to do is connect your own CSV files to get started. And in the spirit of all other technologies shared via OCP, we encourage you to poke through the code and make updates to it. Do you have an idea to make these visuals even more compelling? Great! We encourage you to treat this as a starting point and use these dashboards to make everyone’s ability to share this data even more interesting and robust.

Lyrica McTiernan is a program manager for Facebook’s sustainability team.

Personal comment:

The Open Compute Project is definitely an interesting one, all the more so now that it comes with open data about its centers' consumption. Still, PUE and WUE should be questioned further, to know whether they are the right measures of a data center's effectiveness.

I'm not a specialist here, but it seems to me that these values don't give an idea of the overall energy used for a given task (data and services hosting, remote computing). They only show how efficient the center is (whether or not it makes good use of energy and water).

To sum up: I could spend a huge amount of energy and water, but if I do it efficiently, my PUE and WUE will be good, and it will look fine on paper and in brand communication.

That's certainly a good start (better to have a good PUE and WUE than not), and in fact all factories should publish such numbers, but it is probably not enough. How much energy is used for what type of service might, or should, become a crucial question in the near future, at least until we have an "abundance" of renewable energy!
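The point about efficiency versus total consumption can be made concrete with the metrics as commonly defined. PUE is total facility energy divided by IT equipment energy, and WUE is liters of site water per kWh of IT energy. This is a sketch with hypothetical yearly figures for two imaginary sites. The "kWh per million requests" figure is an assumed, non-standard measure of the kind of "energy per service" question raised above.

```python
from dataclasses import dataclass


@dataclass
class SiteYear:
    """One year of hypothetical data-center figures."""
    name: str
    it_energy_kwh: float        # energy delivered to servers, storage and network gear
    facility_energy_kwh: float  # total energy entering the site (IT + cooling + losses)
    water_liters: float         # water consumed on site
    requests_served: float      # hypothetical unit of "service" delivered

    @property
    def pue(self) -> float:
        # Power usage effectiveness: total facility energy / IT equipment energy.
        return self.facility_energy_kwh / self.it_energy_kwh

    @property
    def wue(self) -> float:
        # Water usage effectiveness: liters of site water per kWh of IT energy.
        return self.water_liters / self.it_energy_kwh

    @property
    def kwh_per_million_requests(self) -> float:
        # Not a standard metric: total energy per unit of service actually delivered.
        return self.facility_energy_kwh / (self.requests_served / 1e6)


small = SiteYear("small", 10e6, 10.8e6, 2e6, 5e9)
large = SiteYear("large", 100e6, 108e6, 20e6, 10e9)
for site in (small, large):
    print(f"{site.name}: PUE={site.pue:.2f} WUE={site.wue:.2f} L/kWh "
          f"energy={site.facility_energy_kwh:,.0f} kWh "
          f"per 1M requests={site.kwh_per_million_requests:,.0f} kWh")
```

Both imaginary sites report identical PUE and WUE. Yet the larger one uses ten times the energy and five times as much per request served, which is exactly what the two ratios cannot show.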

Via Architecture Source via Archinect

-----

soundscraper. Source: eVolo 2013 Skyscraper Competition

Soundscrapers could soon turn urban noise pollution into usable energy to power cities.

An honourable mention-winning entry in the 2013 eVolo Skyscraper Competition, dubbed Soundscraper, looked into ways to convert the ambient noise in urban centres into a renewable energy form.

Noise pollution is currently a negative element of urban life but it could soon be valued and put to good use.

Acoustic architecture, or design to minimise noise, has long been an important facet of the architecture industry, but design aimed at maximising and capturing noise for beneficial reasons is an untapped area with great potential.

The Soundscraper concept is based on constructing the buildings near major highways and railroad junctions to capture noise vibrations and turn them into energy. The intensity and direction of urban noise dictate the vibrations captured by the building’s facade.

Covering a wide array of frequencies, everyday noise from trains, cars, planes and pedestrians would be picked up by 84,000 electro-active lashes covering a Soundscraper’s light metallic frame. The lashes would carry Parametric Frequency Increased Generators (sound sensors), and the vibrations would then be converted to kinetic energy through an energy harvester.

The energy would be converted to electricity through transducer cells, at which point that power could be stored or sent to the grid for regular electricity usage.

The Soundscraper team of Julien Bourgeois, Savinien de Pizzol, Olivier Colliez, Romain Grouselle and Cédric Dounval estimate that one Soundscraper could produce 150 megawatts of power, meaning that a single tower could produce enough energy to meet 10 percent of Los Angeles’ lighting needs.

Constructing several 100-metre-high Soundscrapers throughout a city near major motorways could help offset the electrical needs of the urban population. This form of renewable energy would also help lower the city’s CO2 emissions.

The energy-producing towers could become city landmarks and give interstitial spaces an important function. The electricity needs of an entire city could be met solely by Soundscrapers if enough were constructed at appropriate locations, also helping to minimise the city’s carbon footprint.

Saturday, April 20. 2013

-----

In less than 20 years, experts predict, we will reach the physical limit of how much processing capability can be squeezed out of silicon-based processors in the heart of our computing devices. But a recent scientific finding that could completely change the way we build computing devices may simply allow engineers to sidestep any obstacles.

The breakthrough from materials scientists at IBM Research doesn't sound like a big deal. In a nutshell, they claim to have figured out how to convert metal oxide materials, which act as natural insulators, to a conductive metallic state. Even better, the process is reversible.

Shifting materials from insulator to conductor and back is not exactly new, according to Stuart Parkin, IBM Fellow at IBM Research. What is new is that these changes in state are stable even after you shut off the power flowing through the materials.

And that's huge.

Power On… And On And On And On…

When it comes to computing — mobile, desktop or server — all devices have one key problem: they're inefficient as hell with power.

As users, we experience this every day with phone batteries dipping into the red, hot notebook computers burning our laps or noisily whirring PC fans grating our ears. System administrators and hardware architects in data centers are even more acutely aware of power inefficiency, since they run huge collections of machines that mainline electricity while generating tremendous amounts of heat (which in turn eats more power for the requisite cooling systems).

Here's one basic reason for all the inefficiency: Silicon-based transistors must be powered all the time, and as current runs through these very tiny transistors inside a computer processor, some of it leaks. Both the active transistors and the leaking current generate heat — so much that without heat sinks, water lines or fans to cool them, processors would probably just melt.

Enter the IBM researchers. Computers process information by switching transistors on or off, generating binary 1s and 0s, and all of that processing happens while power is flowing. But suppose you could switch a transistor with just a microburst of electricity instead of supplying it constantly with current. The power savings would be enormous, and the heat generated far, far lower.

That's exactly what the IBM team says it can now accomplish with its state-changing metal oxides. This kind of ultra-low power use is similar to the way neurons in our own brains fire to make connections across synapses, Parkin explained. The human brain is more powerful than the processors we use today, he added, but "it uses a millionth of the power."

The implications are clear. Assuming this technology can be refined and actually manufactured for use in processors and memory, it could form the basis of an entirely new class of electronic devices that would barely sip power. Imagine a smartphone with that kind of technology. The screen, speakers and radios would still need power, but the processor and memory hardware would barely touch the battery.

Moore's Law? What Moore's Law?

There's a lot more research ahead before this technology sees practical applications. Parkin explained that the fluid used to help achieve the steady state changes in these materials needs to be more efficiently delivered using nano-channels, which is what he and his fellow researchers will be focusing on next.

Ultimately, this breakthrough is one among many that we have seen and will see in computing technology. Put in that perspective, it's hard to get that impressed. But stepping back a bit, it's clear that the so-called end of the road for processors due to physical limits is probably not as big a deal as one would think. True, silicon-based processing may see its time pass, but there are other technologies on the horizon that should take its place.

Now all we have to do is think of a new name for Silicon Valley.

Personal comment:

Thanks, Christophe, for the link! Following my previous post, and still in the context of our workshop at Tsinghua, this is interesting news as well (even if it is entirely at the research stage for now, and even if it somewhat contradicts my previous post). It means that in the not-so-distant future, data centers might no longer consume so much energy, nor produce heat.

There's still some work to be done, but within 15-20 years, at the end of the silicon era, this could become reality. In any case, a huge change in computing is to be expected around that time (the end of the Moore's law period) or a bit before.

Friday, April 19. 2013

Via Le Parisien via @chrstphggnrd

-----

A gentle warmth fills the offices of 4 MTec, in Montrouge. There is no central heating here, but some fifteen Q.rads, the “digital radiators” of the start-up Qarnot Computing, which is hosted on the premises of this engineering firm. Behind the invention is Paul Benoît, a Polytechnique-trained engineer with a passing resemblance to Harry Potter, who has great faith in his “economical and ecological” system.

Together with his three partners, including the high-profile lawyer Jérémie Assous, who is heavily involved in promoting the project, some twenty people are now working on putting it into practice.

The principle, which anyone who has ever worked with a laptop on their knees will understand, is to use the heat given off by computer processors installed in the radiator. Their computing power is sold to companies, individuals and research centers to process data or run intensive calculations, and this use more than covers the cost of the electricity. The advantage for the occupant of the home heated this way: it’s free! And for the customers of the computer servers, the guarantee of prices well below those of costly data centers. But the advantage is also ecological: “our system wastes five times less energy for the same result,” says Paul Benoît.

For its inventor, the Q.rad knows no limits. Qarnot Computing has an answer for everything: “You set your heating however you like, with a thermostat. Depending on demand, we regulate the flow of computing clients across the different radiators.” And if those clients run short? No risk of a winter breakdown, according to Paul Benoît: “We could offer researchers free use of the servers.” And in summer? “A low-power mode keeps half of the computing power while producing very little heat.” And if that is still too much, Qarnot Computing plans to redeploy the computing power to specific places, for example by equipping schools that are closed during the summer holidays with Q.rads. But the engineer admits that, for its rollout, the company has every interest in favoring the coldest areas...
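The thermostat-driven regulation Paul Benoît describes can be pictured as a simple scheduler. Heat demand sets how much computing each radiator may run, and any surplus work goes elsewhere. The article does not describe Qarnot Computing’s actual software. The function, the fixed heat-per-job figure and the room names below are hypothetical.

```python
def allocate_jobs(heat_demand_w, watts_per_job, pending_jobs):
    """Split pending compute jobs across radiators according to each thermostat's heat demand.

    Assumes every job dissipates roughly `watts_per_job` of heat. Returns the per-radiator
    allocation and the number of jobs left over (to be sent to another site).
    """
    allocation = {}
    remaining = pending_jobs
    # Serve the rooms asking for the most heat first.
    for radiator, demand_w in sorted(heat_demand_w.items(), key=lambda kv: kv[1], reverse=True):
        slots = int(demand_w // watts_per_job)
        allocation[radiator] = min(slots, remaining)
        remaining -= allocation[radiator]
    return allocation, remaining


demand = {"living_room": 500, "bedroom": 200, "kitchen": 0}  # watts requested by thermostats
print(allocate_jobs(demand, watts_per_job=100, pending_jobs=6))
# ({'living_room': 5, 'bedroom': 1, 'kitchen': 0}, 0)
print(allocate_jobs(demand, watts_per_job=100, pending_jobs=10))
# ({'living_room': 5, 'bedroom': 2, 'kitchen': 0}, 3)
```

The leftover count in the second call corresponds to the case where clients bring more work than the homes need heat. In the article, that work would be redeployed to other locations.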

300 Q.rads to be installed this summer in the hundred or so apartments of an HLM (social housing) building in Paris's 15th arrondissement

On the computing users’ side, security is the main concern, and here again Paul Benoît is confident of the outcome: “Our systems don’t store data; it only passes through them in encrypted form, across computers scattered all over.” And if someone tries to open the machine, “it shuts down,” the inventor warns.

These arguments have already won people over: next month, 25 radiators will heat the Télécom Paris Tech engineering school. And starting this summer, some 300 Q.rads will be installed in the hundred or so apartments of an HLM (social housing) building in Paris's 15th arrondissement, at Balard. “A first large-scale experiment,” hails Jean-Louis Missika, the Paris deputy mayor in charge of innovation and research, “for a project that could be revolutionary!”

Personal comment:

We are still in Beijing for the moment (and therefore not publishing much on the blog, sorry for that), working with some students at Tsinghua around the idea of "inhabiting the data center" (transposed to a much smaller context, which in this case becomes "inhabiting the servers' cabinet"). This news about servers becoming heaters is directly related to our project and could certainly be used.

Wednesday, March 20. 2013

Via Kazys Varnelis blog

-----

Today's New York Times carries a front-page piece by James Glanz on the massive energy waste and pollution produced by data centers. The lovely cloud that we've all been seeing icons for lately turns out to be made not of data but of smog.

The basics here aren't very new. Already six years ago, we heard the apocryphal story of a Second Life avatar consuming as much energy as the average Brazilian. That data centers consume huge amounts of energy and contribute to pollution is well known.

On the other hand, Glanz does make a few critical observations. First, much of this energy use and pollution comes from our need to have data instantly accessible. Underscoring this, the article ends with the following quote:

“That’s what’s driving that massive growth — the end-user expectation of anything, anytime, anywhere,” said David Cappuccio, a managing vice president and chief of research at Gartner, the technology research firm. “We’re what’s causing the problem.”

Second, much of this data is rarely, if ever, used, and resides on unused "zombie" servers. To return to our Second Life avatars: like many of my readers, I created a few avatars half a decade ago and haven't been back since. Do these avatars continue consuming energy, making Second Life an Internet version of the Zombie Apocalypse?

So the ideology of automobility, the idea that freedom consists of the ability to go anywhere at any time, is now reborn, in zombie form, on the Net. Of course, it also exists in terms of global travel. I've previously mentioned the incongruity of individuals proudly declaring that they live in the city so they don't drive, yet bragging about how much they fly.

For the 5% or so who make up the world's jet-setting, cloud-dwelling élite, gratification is as much the rule as it ever was for the much-condemned postwar suburbanites, only now it has to be instantaneous and has to demonstrate their ever-more total power. To mix my pop culture references, perhaps that is the lesson we can take away from Mad Men. As Don Draper moves from the suburb to the city, his life loses its trappings of familial responsibility, damaged and conflicted though they may have been, in favor of a designed lifestyle, unbridled sexuality, and his position at a creative workplace. Ever upwards with gratification, ever downwards with responsibility, ever upwards with existential risk.

Survival depends on us ditching this model once and for all.

Wednesday, March 06. 2013

Via MIT Technology Review

-----

Belgium has plans for an artificial “energy atoll” to store excess wind power in the North Sea.

This illustration shows how the artificial island would use pumped hydro energy storage, in which water is pumped to a reservoir during off-peak times and released to a lower reservoir later to generate electricity.

Perhaps it’s not surprising that people from countries with experience holding back the sea see the potential of building an artificial island to store wind energy.

Belgian cabinet member Johan Vande Lanotte has introduced a planning proposal for a man-made atoll placed in the North Sea to store energy.

The idea is to place the island a few kilometers offshore near a wind farm, according to Vande Lanotte’s office. When the wind farm produces more energy than the local electricity grid needs, such as during off-peak overnight hours, the island will store the energy and release it later during peak times.

It would use the oldest and most cost-effective bulk energy storage there is: pumped hydro. During off-peak times, power from the turbines would pump water up 15 meters to a reservoir. To generate electricity during peak times, the water is released to turn a generator, according to a representative.
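The physics behind the 15-meter figure is the potential energy of lifted water, E = ρ·g·h·V. The sketch below works it through. The seawater density, the 80% efficiency in the example and the function names are assumptions for illustration. The article gives no reservoir volume, so the sketch implies no figure for the atoll's total capacity.

```python
RHO_SEAWATER = 1025.0  # kg/m^3, typical seawater density (assumption)
G = 9.81               # m/s^2, gravitational acceleration
J_PER_KWH = 3.6e6      # joules in one kilowatt-hour


def stored_energy_kwh(volume_m3, head_m, efficiency=1.0):
    """Potential energy E = rho * g * h * V, scaled by an assumed generating efficiency."""
    return RHO_SEAWATER * G * head_m * volume_m3 * efficiency / J_PER_KWH


def volume_for_energy_m3(energy_kwh, head_m, efficiency=1.0):
    """Volume of water that must move through `head_m` to deliver `energy_kwh`."""
    return energy_kwh / stored_energy_kwh(1.0, head_m, efficiency)


per_m3 = stored_energy_kwh(1.0, 15.0)
print(f"{per_m3:.4f} kWh per cubic meter at a 15 m head (lossless)")
print(f"{volume_for_energy_m3(1000.0, 15.0, efficiency=0.8):,.0f} m^3 per MWh at an assumed 80% efficiency")
```

The small per-cubic-meter figure (about 0.04 kWh) explains the size of the proposal. A low head of 15 meters needs a very large reservoir, which is why the drawing shows an island 2.4 kilometers wide.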

The Belgian government doesn’t propose building the facility itself and would rely on private industry instead. But there is sufficient interest in energy storage that it should be part of planning exercises and weighed against other activities in the North Sea, the representative said. It would be placed three kilometers offshore and be 2.4 kilometers wide, according to a drawing provided by Vande Lanotte’s office.

The plan underscores some of the challenges associated with energy storage for the electricity grid. Pumped hydro, which contributes a significant portion of energy supply in certain countries, is by far the cheapest form of multi-hour energy storage. It costs about $100 per kilowatt-hour, a fraction of the cost of batteries, flywheels, and other methods, according to the Electricity Storage Association. And grid storage is considered critical to using intermittent solar and wind power more widely.

The idea of using an “energy atoll” may seem outlandish on the surface, but it’s really not, says Haresh Kamath, program manager for energy storage at the Electric Power Research Institute (EPRI). This approach, first proposed by a Dutch company, uses cheap materials: water and dirt. On the other hand, the engineering challenges of building in the ocean and technical issues, such as using salt water with a generator, are significant.

“It’s not totally crazy—it’s within the realm of reason. The question isn’t whether we can do it,” he says. “It’s whether it makes sense and that’s the thing that needs further studies and understanding.”

Thursday, February 07. 2013

Note: Here again I'm combining two recent posts: first, what each additional degree of average global warming could mean climatically, and therefore spatially, geographically, energetically, socially, and so on; and second, an information map of our warming world.

It is an unsigned paper, so it certainly needs to be cross-checked, which I haven't done (time, time...)! I am posting it nevertheless, as it points out some believable, if very dark, consequences. As many people now say, we don't have much time left to start acting strongly (7-10 years).

Via Berens Finance (!)

-----

A degree by degree explanation of what will happen when the earth warms


Even if greenhouse emissions stopped overnight, the concentrations already in the atmosphere would still mean a global rise of between 0.5 and 1C. A shift of a single degree is barely perceptible to human skin, but it’s not human skin we’re talking about. It’s the planet; and an average increase of one degree across its entire surface means huge changes in climatic extremes.

Six thousand years ago, when the world was one degree warmer than it is now, the American agricultural heartland around Nebraska was desert. It suffered a short reprise during the dust-bowl years of the 1930s, when the topsoil blew away and hundreds of thousands of refugees trailed through the dust to an uncertain welcome further west. The effect of one-degree warming, therefore, requires no great feat of imagination.

“The western United States once again could suffer perennial droughts, far worse than the 1930s. Deserts will reappear particularly in Nebraska, but also in eastern Montana, Wyoming and Arizona, northern Texas and Oklahoma. As dust and sandstorms turn day into night across thousands of miles of former prairie, farmsteads, roads and even entire towns will be engulfed by sand.”

What’s bad for America will be worse for poorer countries closer to the equator. It has been calculated that a one-degree increase would eliminate fresh water from a third of the world’s land surface by 2100. Again, we have seen what this means. In the summer of 2005, one tributary fell so low that miles of exposed riverbank dried out into sand dunes, with winds whipping up thick sandstorms. As desperate villagers looked out onto baking mud instead of flowing water, the army was drafted in to ferry precious drinking water up the river – by helicopter, since most of the river was too low to be navigable by boat. The river in question was not some small, insignificant trickle in Sussex. It was the Amazon.

While tropical lands teeter on the brink, the Arctic already may have passed the point of no return. Warming near the pole is much faster than the global average, with the result that Arctic icecaps and glaciers have lost 400 cubic kilometres of ice in 40 years. Permafrost – ground that has lain frozen for thousands of years – is dissolving into mud and lakes, destabilising whole areas as the ground collapses beneath buildings, roads and pipelines. As polar bears and the Inuit are being pushed off the top of the planet, previous predictions are starting to look optimistic. Earlier snowmelt means more summer heat goes into the air and ground rather than into melting snow, raising temperatures in a positive feedback effect. More dark shrubs and forest on formerly bleak tundra means still more heat is absorbed by vegetation.

Out at sea the pace is even faster. Whilst snow-covered ice reflects more than 80% of the sun’s heat, the darker ocean absorbs up to 95% of solar radiation. Once sea ice begins to melt, in other words, the process becomes self-reinforcing. More ocean surface is revealed, absorbing solar heat, raising temperatures and making it unlikelier that ice will re-form next winter. The disappearance of 720,000 square kilometres of supposedly permanent ice in a single year testifies to the rapidity of planetary change. If you have ever wondered what it will feel like when the Earth crosses a tipping point, savour the moment.
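Taking the two figures in this paragraph at face value (snow-covered ice reflecting 80% of incoming sunlight, open ocean absorbing 95%), a short sketch shows how much more energy a patch of water takes up than the ice that used to cover it. The 100 W/m² of incoming sunlight is a hypothetical round number chosen only to make the arithmetic visible, and light not reflected by the ice is simply treated as absorbed.

```python
# Compare solar energy absorbed by snow-covered ice vs. open ocean, using the article's figures.
ICE_REFLECTED_FRACTION = 0.80   # "more than 80%": taken here as exactly 80%
OCEAN_ABSORBED_FRACTION = 0.95  # "up to 95%": taken here as the upper bound

def absorbed_w_per_m2(incoming_w_per_m2: float, absorbed_fraction: float) -> float:
    """Return the absorbed flux (W/m^2) for a surface absorbing absorbed_fraction of incoming light."""
    return incoming_w_per_m2 * absorbed_fraction

incoming = 100.0  # hypothetical incoming sunlight, W/m^2
# Simplification: whatever the ice does not reflect is counted as absorbed.
ice = absorbed_w_per_m2(incoming, 1.0 - ICE_REFLECTED_FRACTION)
ocean = absorbed_w_per_m2(incoming, OCEAN_ABSORBED_FRACTION)
print(f"ice absorbs {ice:.1f} W/m^2, open water absorbs {ocean:.1f} W/m^2")
print(f"open water takes up {ocean / ice:.2f}x as much energy")
```

Each square metre of ice that melts therefore switches from absorbing about a fifth of the incoming sunlight to nearly all of it, roughly 4.75 times as much, which is the self-reinforcing loop described above.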

Mountains, too, are starting to come apart. In the Alps, most ground above 3,000 metres is stabilised by permafrost. In the summer of 2003, however, the melt zone climbed right up to 4,600 metres, higher than the summit of the Matterhorn and nearly as high as Mont Blanc. With the glue of millennia melting away, rocks showered down and 50 climbers died. As temperatures go on edging upwards, it won’t just be mountaineers who flee. Whole towns and villages will be at risk. Some towns, like Pontresina in eastern Switzerland, have already begun building bulwarks against landslides.

At the opposite end of the scale, low-lying atoll countries such as the Maldives will be preparing for extinction as sea levels rise, and mainland coasts – in particular the eastern US and Gulf of Mexico, the Caribbean and Pacific islands and the Bay of Bengal – will be hit by stronger and stronger hurricanes as the water warms. Hurricane Katrina, which in 2005 hit New Orleans with the combined impacts of earthquake and flood, was a nightmare precursor of what the future holds.

Most striking of all was seeing how people behaved once the veneer of civilisation had been torn away. Most victims were poor and black, left to fend for themselves as the police either joined in the looting or deserted the area. Four days into the crisis, survivors were packed into the city’s Superdome, living next to overflowing toilets and rotting bodies as gangs of young men with guns seized the only food and water available. Perhaps the most memorable scene was a single military helicopter landing for just a few minutes, its crew flinging food parcels and water bottles out onto the ground before hurriedly taking off again as if from a war zone. In scenes more like a Third World refugee camp than an American urban centre, young men fought for the water as pregnant women and the elderly looked on with nothing. Don’t blame them for behaving like this, I thought. It’s what happens when people are desperate.

Chance of avoiding one degree of global warming: zero.

BETWEEN ONE AND TWO DEGREES OF WARMING

At this level, expected within 40 years, the hot European summer of 2003 will be the annual norm. Anything that could be called a heatwave thereafter will be of Saharan intensity. Even in average years, people will die of heat stress.

The first symptoms may be minor. A person will feel slightly nauseous, dizzy and irritable. It needn’t be an emergency: an hour or so lying down in a cooler area, sipping water, will cure it. But in Paris, August 2003, there were no cooler areas, especially for elderly people.

Once body temperature reaches 41C (106F), the body’s thermoregulatory system begins to break down. Sweating ceases and breathing becomes shallow and rapid. The pulse quickens, and the victim may lapse into a coma. Unless drastic measures are taken to reduce the body’s core temperature, the brain is starved of oxygen and vital organs begin to fail. Death will be only minutes away unless the emergency services can quickly get the victim into intensive care.
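For readers working in Fahrenheit, the conversion behind the heat-stroke threshold is F = C × 9/5 + 32. A tiny helper makes it easy to check: 41C works out to 105.8F (about 106F), while 104F corresponds to 40C.

```python
def celsius_to_fahrenheit(c: float) -> float:
    """Convert degrees Celsius to degrees Fahrenheit."""
    return c * 9 / 5 + 32

def fahrenheit_to_celsius(f: float) -> float:
    """Convert degrees Fahrenheit to degrees Celsius."""
    return (f - 32) * 5 / 9

print(f"41C = {celsius_to_fahrenheit(41):.1f}F")
print(f"104F = {fahrenheit_to_celsius(104):.1f}C")
```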

These emergency services failed to save more than 10,000 French people in the summer of 2003. Mortuaries ran out of space as hundreds of dead bodies were brought in each night. Across Europe as a whole, the heatwave is believed to have cost between 22,000 and 35,000 lives. Agriculture, too, was devastated. Farmers lost $12 billion worth of crops, and Portugal alone suffered $12 billion of forest-fire damage. The flows of the River Po in Italy, Rhine in Germany and Loire in France all shrank to historic lows. Barges ran aground, and there was not enough water for irrigation and hydroelectricity. Melt rates in the Alps, where some glaciers lost 10% of their mass, were not just a record – they doubled the previous record of 1998. According to the Hadley centre, more than half the European summers by 2040 will be hotter than this. Extreme summers will take a much heavier toll of human life, with body counts likely to reach hundreds of thousands. Crops will bake in the fields, and forests will die off and burn. Even so, the short-term effects may not be the worst:

From the beech forests of northern Europe to the evergreen oaks of the Mediterranean, plant growth across the whole landmass in 2003 slowed and then stopped. Instead of absorbing carbon dioxide, the stressed plants began to emit it. Around half a billion tonnes of carbon was added to the atmosphere from European plants, equivalent to a twelfth of global emissions from fossil fuels. This is a positive feedback of critical importance, because it suggests that, as temperatures rise, carbon emissions from forests and soils will also rise. If these land-based emissions are sustained over long periods, global warming could spiral out of control.

In the two-degree world, nobody will think of taking Mediterranean holidays. The movement of people from northern Europe to the Mediterranean is likely to reverse, switching eventually into a mass scramble as Saharan heatwaves sweep across the Med. People everywhere will think twice about moving to the coast. When temperatures were last between 1 and 2C higher than they are now, 125,000 years ago, sea levels were five or six metres higher too. All this “lost” water is in the polar ice that is now melting. Forecasters predict that the “tipping point” for Greenland won’t arrive until average temperatures have risen by 2.7C. The snag is that Greenland is warming much faster than the rest of the world – 2.2 times the global average. “Divide one figure by the other,” says Lynas, “and the result should ring alarm bells across the world. Greenland will tip into irreversible melt once global temperatures rise past a mere 1.2C.” The ensuing sea-level rise will be far more than the half-metre that the IPCC has predicted for the end of the century. Scientists point out that sea levels at the end of the last ice age shot up by a metre every 20 years for four centuries, and that Greenland’s ice, in the words of one glaciologist, is now “thinning like mad and flowing much faster than it ought to”. Its biggest outflow glacier, Jakobshavn Isbrae, has thinned by 15 metres every year since 1997, and its speed of flow has doubled. At this rate the whole Greenland ice sheet would vanish within 140 years. Miami would disappear, as would most of Manhattan. Central London would be flooded. Bangkok, Bombay and Shanghai would lose most of their area. In all, half of humanity would have to move to higher ground.
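The “divide one figure by the other” step is simple enough to spell out. The sketch below assumes, as Lynas’s argument implies, that the 2.7C tipping point refers to warming over Greenland itself, and that Greenland keeps warming at a constant 2.2 times the global rate; both are simplifications, and the function name is illustrative only.

```python
def global_threshold_c(local_tipping_point_c: float, amplification: float) -> float:
    """Global-mean warming at which a region reaches its local tipping point,
    assuming the region warms `amplification` times faster than the globe (constant ratio)."""
    if amplification <= 0:
        raise ValueError("amplification must be positive")
    return local_tipping_point_c / amplification

threshold = global_threshold_c(2.7, 2.2)
print(f"Greenland tips at about {threshold:.2f}C of global warming")
```

The result, about 1.23C, rounds to the 1.2C quoted above.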

Not only coastal communities will suffer. As mountains lose their glaciers, so people will lose their water supplies. The entire Indian subcontinent will be fighting for survival. As the glaciers disappear from all but the highest peaks, their runoff will cease to power the massive rivers that deliver vital freshwater to hundreds of millions. Water shortages and famine will be the result, destabilising the entire region. And this time the epicentre of the disaster won’t be India, Nepal or Bangladesh, but nuclear-armed Pakistan.

Everywhere, ecosystems will unravel as species either migrate or fall out of synch with each other. By the time global temperatures reach two degrees of warming in 2050, more than a third of all living species will face extinction.

Chance of avoiding two degrees of global warming: 93%, but only if emissions of greenhouse gases are reduced by 60% over the next 10 years.

BETWEEN TWO AND THREE DEGREES OF WARMING

Up to this point, assuming that governments have planned carefully and farmers have converted to more appropriate crops, not too many people outside subtropical Africa need have starved. Beyond two degrees, however, preventing mass starvation will be as easy as halting the cycles of the moon. First millions, then billions, of people will face an increasingly tough battle to survive.

To find anything comparable we have to go back to the Pliocene – the last epoch of the Tertiary period, 3m years ago. There were no continental glaciers in the northern hemisphere (trees grew in the Arctic), and sea levels were 25 metres higher than today’s. In this kind of heat, the death of the Amazon is as inevitable as the melting of Greenland. The paper spelling it out is the very one whose apocalyptic message caused such a shock in 2000. Scientists at the Hadley centre feared that earlier climate models, which showed global warming as a straightforward linear progression, were too simplistic in their assumption that land and the oceans would remain inert as their temperatures rose. Correctly as it would turn out, they predicted positive feedback.

Warmer seas absorb less carbon dioxide, leaving more to accumulate in the atmosphere and intensify global warming. On land, matters would be even worse. Huge amounts of carbon are stored in the soil, the half-rotted remains of dead vegetation. The generally accepted estimate is that the soil carbon reservoir contains some 1600 gigatonnes, more than double the entire carbon content of the atmosphere. As soil warms, bacteria accelerate the breakdown of this stored carbon, releasing it into the atmosphere.

The end of the world is nigh. A three-degree increase in global temperature – possible as early as 2050 – would throw the carbon cycle into reverse. Instead of absorbing carbon dioxide, vegetation and soils start to release it. So much carbon pours into the atmosphere that it pumps up atmospheric concentrations by 250 parts per million by 2100, boosting global warming by another 1.5C. In other words, the Hadley team had discovered that carbon-cycle feedbacks could tip the planet into runaway global warming by the middle of this century – much earlier than anyone had expected.

Confirmation came from the land itself. Climate models are routinely tested against historical data. In this case, scientists checked 25 years’ worth of soil samples from 6,000 sites across the UK. The result was another black joke. As temperatures gradually rose the scientists found that huge amounts of carbon had been released naturally from the soils. They totted it all up and discovered – irony of ironies – that the 13m tonnes of carbon British soils were emitting annually was enough to wipe out all the country’s efforts to comply with the Kyoto Protocol. All soils will be affected by the rising heat, but none as badly as the Amazon’s. “Catastrophe” is almost too small a word for the loss of the rainforest. Its 7m square kilometres produce 10% of the world’s entire photosynthetic output from plants. Drought and heat will cripple it; fire will finish it off. In human terms, the effect on the planet will be like cutting off oxygen during an asthma attack.

In the US and Australia, people will curse the climate-denying governments of Bush and Howard. No matter what later administrations may do, it will not be enough to keep the mercury down. With new “super-hurricanes” growing from the warming sea, Houston could be destroyed by 2045, and Australia will be a death trap. Farming and food production will tip into irreversible decline. Salt water will creep up the stricken rivers, poisoning ground water. Higher temperatures mean greater evaporation, further drying out vegetation and soils, and causing huge losses from reservoirs. In state capitals, heat every year is likely to kill between 8,000 and 15,000 mainly elderly people.

It is all too easy to visualise what will happen in Africa. In Central America, too, tens of millions will have little to put on their tables. Even a moderate drought there in 2001 meant hundreds of thousands had to rely on food aid. This won’t be an option when world supplies are stretched to breaking point (grain yields decline by 10% for every degree of heat above 30C, and at 40C they are zero). Nobody need look to the US, which will have problems of its own. As the mountains lose their snow, so cities and farms in the west will lose their water and dried-out forests and grasslands will perish at the first spark.
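The rule of thumb in brackets can be written as a small function. The only way both halves of it hold at once (10% lost per degree above 30C, and nothing left at 40C) is if each degree removes ten percentage points of the 30C yield, i.e. a linear decline; a compounding 10% loss per degree would still leave about a third of the crop at 40C. This is a sketch of the stated rule only, not a crop model, and the function names are hypothetical.

```python
def yield_fraction(temp_c: float) -> float:
    """Fraction of the 30C baseline grain yield remaining at temp_c, under the
    linear rule of thumb: -10 percentage points per degree above 30C, zero at 40C."""
    if temp_c <= 30.0:
        return 1.0  # the rule says nothing about losses at or below 30C; treated as none
    return max(0.0, 1.0 - 0.10 * (temp_c - 30.0))

def compounding_fraction(temp_c: float) -> float:
    """Contrast case: a 10% loss compounding per degree above 30C (never reaches zero)."""
    return 0.9 ** max(0.0, temp_c - 30.0)

for t in (30, 33, 35, 38, 40):
    print(f"{t}C: linear {yield_fraction(t):.0%}, compounding {compounding_fraction(t):.0%}")
```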

The Indian subcontinent meanwhile will be choking on dust. All of human history shows that, given the choice between starving in situ and moving, people move. In the latter part of the century tens of millions of Pakistani citizens may be facing this choice. Pakistan may find itself joining the growing list of failed states, as civil administration collapses and armed gangs seize what little food is left.

As the land burns, so the sea will go on rising. Even by the most optimistic calculation, 80% of Arctic sea ice by now will be gone, and the rest will soon follow. New York will flood; the catastrophe that struck eastern England in 1953 will become an unremarkable regular event; and the map of the Netherlands will be torn up by the North Sea. Everywhere, starving people will be on the move – from Central America into Mexico and the US, and from Africa into Europe, where resurgent fascist parties will win votes by promising to keep them out.

Chance of avoiding three degrees of global warming: poor if the rise reaches two degrees and triggers carbon-cycle feedbacks from soils and plants.

BETWEEN THREE AND FOUR DEGREES OF WARMING

The stream of refugees will now include those fleeing from coasts to safer interiors – millions at a time when storms hit. Where they persist, coastal cities will become fortified islands. The world economy, too, will be threadbare. As direct losses, social instability and insurance payouts cascade through the system, the funds to support displaced people will be increasingly scarce. Sea levels will be rampaging upwards – in this temperature range, both poles are certain to melt, causing an eventual rise of 50 metres. “I am not suggesting it would be instantaneous. In fact it would take centuries, and probably millennia, to melt all of the Antarctic’s ice. But it could yield sea-level rises of a metre or so every 20 years – far beyond our capacity to adapt.” Oxford would sit on one of many coastlines in a UK reduced to an archipelago of tiny islands.

More immediately, China is on a collision course with the planet. By 2030, if its people are consuming at the same rate as Americans, they will eat two-thirds of the entire global harvest and burn 100m barrels of oil a day, or 125% of current world output. That prospect alone contains all the ingredients of catastrophe. But it’s worse than that: “By the latter third of the 21st century, if global temperatures are more than three degrees higher than now, China’s agricultural production will crash. It will face the task of feeding 1.5bn much richer people – 200m more than now – on two thirds of current supplies.” For people throughout much of the world, starvation will be a regular threat; but it will not be the only one.
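Two figures in this paragraph imply others that are not stated. If 100m barrels a day is 125% of current world output, current output is 100 / 1.25 = 80m barrels a day. And if 1.5bn people are 200m more than now, today's population is taken as 1.3bn, so feeding 1.5bn on two-thirds of current supplies leaves each person with roughly 58% of today's share. The sketch below just does that arithmetic with the article's numbers; it assumes supplies are shared evenly, which they will not be, and its function names are hypothetical.

```python
def implied_base(value: float, share_of_base: float) -> float:
    """If `value` equals `share_of_base` times some base quantity, return that base."""
    return value / share_of_base

def per_capita_ratio(supply_ratio: float, pop_now: float, pop_future: float) -> float:
    """Future per-person supply relative to today, assuming supplies are shared evenly."""
    return supply_ratio * pop_now / pop_future

world_output_now = implied_base(100e6, 1.25)  # barrels per day
china_pop_now = 1.5e9 - 200e6                  # "1.5bn ... 200m more than now"
share = per_capita_ratio(2 / 3, china_pop_now, 1.5e9)
print(f"implied current world oil output: {world_output_now / 1e6:.0f}m barrels/day")
print(f"food per person vs today: {share:.0%}")
```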

The summer will get longer still, as soaring temperatures reduce forests to tinderwood and cities to boiling morgues. Temperatures in the Home Counties could reach 45C – the sort of climate experienced today in Marrakech. Droughts will put the south-east of England on the global list of water-stressed areas, with farmers competing against cities for dwindling supplies from rivers and reservoirs.

Air-conditioning will be mandatory for anyone wanting to stay cool. This in turn will put ever more stress on energy systems, which could pour more greenhouse gases into the air if coal and gas-fired power stations ramp up their output, hydroelectric sources dwindle and renewables fail to take up the slack. The abandonment of the Mediterranean will send even more people north to “overcrowded refuges in the Baltic, Scandinavia and the British Isles”.

Britain will have problems of its own. As flood plains are more regularly inundated, a general retreat out of high risk areas is likely. Millions of people will lose their lifetime investments in houses that become uninsurable and therefore unsaleable. The Lancashire/Humber corridor is expected to be among the worst affected regions, as are the Thames Valley, eastern Devon and towns around the already flood-prone Severn estuary like Monmouth and Bristol. The entire English coast from the Isle of Wight to Middlesbrough is classified as at ‘very high’ or ‘extreme’ risk, as is the whole of Cardigan Bay in Wales.

One of the most dangerous of all feedbacks will now be kicking in – the runaway thaw of permafrost. Scientists believe at least 500 billion tonnes of carbon are waiting to be released from the Arctic ice, though none yet has put a figure on what it will add to global warming. One degree? Two? Three? The pointers are ominous.

As with Amazon collapse and the carbon-cycle feedback in the three-degree world, stabilising global temperatures at four degrees above current levels may not be possible. If we reach three degrees, therefore, that leads inexorably to four degrees, which leads inexorably to five?

Chance of avoiding four degrees of global warming: poor if the rise reaches three degrees and triggers a runaway thaw of permafrost.

BETWEEN FOUR AND FIVE DEGREES OF WARMING

We are looking now at an entirely different planet. Ice sheets have vanished from both poles; rainforests have burnt up and turned to desert; the dry and lifeless Alps resemble the High Atlas; rising seas are scouring deep into continental interiors. One temptation may be to shift populations from dry areas to the newly thawed regions of the far north, in Canada and Siberia. Even here, though, summers may be too hot for crops to be grown away from the coasts; and there is no guarantee that northern governments will admit southern refugees. Lynas recalls James Lovelock’s suspicion that Siberia and Canada would be invaded by China and the US, each hammering another nail into humanity’s coffin. Any armed conflict, particularly involving nuclear weapons, would of course further increase the planetary surface area uninhabitable for humans.

When temperatures were at a similar level 55m years ago, following a very sudden burst of global warming in the early Eocene, alligators and other subtropical species were living high in the Arctic. What had caused the climate to flip? Suspicion rests on methane hydrate – “an ice-like combination of methane and water that forms under the intense cold and pressure of the deep sea”, and which escapes with explosive force when tapped. Evidence of a submarine landslide off Florida, and of huge volcanic eruptions under the North Atlantic, raises the possibility of trapped methane – a greenhouse gas 20 times more potent than carbon dioxide – being released in a giant belch that pushed global temperatures through the roof.

Summer heatwaves scorched the vegetation out of continental Spain, leaving a desert terrain which was heavily eroded by winter rainstorms. Palm mangroves grew as far north as England and Belgium, and the Arctic Ocean was so warm that Mediterranean algae thrived. In short, it was a world much like the one we are heading into this century. Although the total amount of carbon in the atmosphere during the Paleocene-Eocene thermal maximum, or PETM, as scientists call it, was more than today’s, the rate of increase in the 21st century may be 30 times faster. It may well be the fastest increase the world has ever seen – faster even than the episodes that caused catastrophic mass extinctions.

Globalism in the five-degree world will break down into something more like parochialism. Customers will have nothing to buy because producers will have nothing to sell. With no possibility of international aid, migrants will have to force their way into the few remaining habitable enclaves and fight for survival.

Where no refuge is available, civil war and a collapse into racial or communal conflict seems the likely outcome. Isolated survivalism, however, may be as impracticable as dialling for room service. How many of us could really trap or kill enough game to feed a family? Even if large numbers of people did successfully manage to fan out into the countryside, wildlife populations would quickly dwindle under the pressure. Supporting a hunter-gatherer lifestyle takes 10 to 100 times the land per person that a settled agricultural community needs. A large-scale resort to survivalism would turn into a further disaster for biodiversity as hungry humans killed and ate anything that moved. Including, perhaps, each other. Invaders do not take kindly to residents denying them food. History suggests that if a stockpile is discovered, the householder and his family may be tortured and killed. Look for comparison to the experience of present-day Somalia, Sudan or Burundi, where conflicts over scarce land and food are at the root of lingering tribal wars and state collapse.

Chance of avoiding five degrees of global warming: negligible if the rise reaches four degrees and releases trapped methane from the sea bed.

BETWEEN FIVE AND SIX DEGREES OF WARMING

Although warming on this scale lies within the IPCC’s officially endorsed range of 21st-century possibilities, climate models have little to say about what Lynas, echoing Dante, describes as “the Sixth Circle of Hell”. To see the most recent climatic lookalike, we have to turn the geological clock back to between 144m and 65m years ago, to the Cretaceous, which ended with the extinction of the dinosaurs. There was an even closer fit at the end of the Permian, 251m years ago, when global temperatures rose by – yes – six degrees, and 95% of species were wiped out.

That episode was the worst ever endured by life on Earth, the closest the planet has come to ending up a dead and desolate rock in space. On land, the only winners were fungi that flourished on dying trees and shrubs. At sea there were only losers. Warm water is a killer. Less oxygen can dissolve, so conditions become stagnant and anoxic. Oxygen-breathing water-dwellers – all the higher forms of life from plankton to sharks – face suffocation. Warm water also expands, and sea levels rose by 20 metres. The resulting “super-hurricanes” hitting the coasts would have triggered flash floods that no living thing could have survived.

There are aspects of the so-called “end-Permian extinction” that are unlikely to recur – most importantly, the vast volcanic eruption in Siberia that spread magma hundreds of metres thick over an area bigger than western Europe and shot billions of tonnes of CO2 into the atmosphere. That is small comfort, however, for beneath the oceans, another monster stirred – the same that would bring a devastating end to the Palaeocene nearly 200m years later, and that still lies in wait today. Methane hydrate.

Here is what happens when warming water releases pent-up gas from the sea bed. First, a small disturbance drives a gas-saturated parcel of water upwards. As it rises, bubbles begin to appear, as dissolved gas fizzles out with reducing pressure – just as a bottle of lemonade overflows if the top is taken off too quickly. These bubbles make the parcel of water still more buoyant, accelerating its rise through the water. As it surges upwards, reaching explosive force, it drags surrounding water up with it. At the surface, water is shot hundreds of metres into the air as the released gas blasts into the atmosphere. Shockwaves propagate outwards in all directions, triggering more eruptions nearby.

The eruption is more than just another positive feedback in the quickening process of global warming. Unlike CO2, methane is flammable. Even in air-methane concentrations as low as 5%, the mixture could ignite from lightning or some other spark and send fireballs tearing across the sky. The effect would be much like that of the fuel-air explosives used by the US and Russian armies – so-called “vacuum bombs” that ignite fuel droplets above a target. According to the CIA, “those near the ignition point are obliterated. Those at the fringes are likely to suffer many internal injuries, including burst eardrums, severe concussion, ruptured lungs and internal organs, and possibly blindness.” Such tactical weapons, however, are squibs when set against methane-air clouds from oceanic eruptions. Scientists calculate that they could “destroy terrestrial life almost entirely” (251m years ago, only one large land animal, the pig-like Lystrosaurus, survived). It has been estimated that a large eruption in future could release energy equivalent to 10^8 megatonnes of TNT – 100,000 times more than the world’s entire stockpile of nuclear weapons. Not even Lynas, for all his scientific propriety, can avoid the Hollywood ending. “It is not too difficult to imagine the ultimate nightmare, with oceanic methane eruptions near large population centres wiping out billions of people – perhaps in days. Imagine a ‘fuel-air explosive’ fireball racing towards a city – London, say, or Tokyo – the blast wave spreading out from the explosive centre with the speed and force of an atomic bomb. Buildings are flattened, people are incinerated where they stand, or left blind and deaf by the force of the explosion. Mix Hiroshima with post-Katrina New Orleans to get some idea of what such a catastrophe might look like: burnt survivors battling over food, wandering far and wide from empty cities.”

Then would come hydrogen sulphide from the stagnant oceans. “It would be a silent killer: imagine the scene at Bhopal following the Union Carbide gas release in 1984, replayed first at coastal settlements, then continental interiors across the world. At the same time, as the ozone layer came under assault, we would feel the sun’s rays burning into our skin, and the first cell mutations would be triggering outbreaks of cancer among anyone who survived.” Dante’s hell was a place of judgment, where humanity was for ever punished for its sins. With all the remaining forests burning, and the corpses of people, livestock and wildlife piling up in every continent, the six-degree world would be a harsh penalty indeed for the mundane crime of burning fossil energy.

------------

Via Information Aesthetics

-----

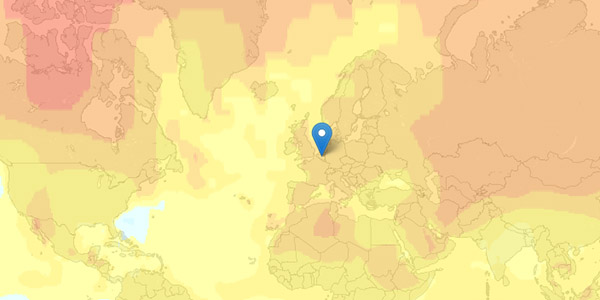

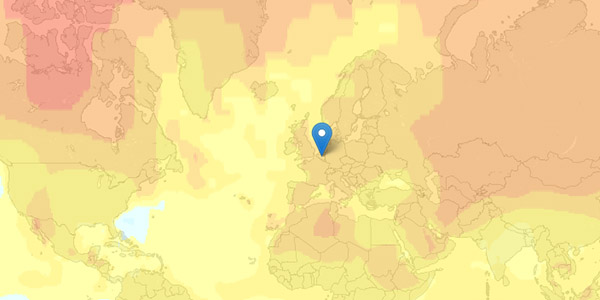

Warming World [newscientistapps.com], developed by Chris Amico and Peter Aldhous for the New Scientist, shows the distribution of ambient temperatures around the world, ranging from 1951 to now. The graphs and maps highlight the changes relative to the average temperatures measured between 1951 and 1980.

Users can click anywhere on the map to investigate the entire temperature record for that grid cell, retrieved from NASA's surface temperature analysis, GISTEMP, which is based on 6000 monitoring stations, ships and satellite measurements worldwide. Via the drop-down list at the top, users can also switch between map overlays that summarize the average temperatures for different 20-year periods. Climate change thus becomes visible as the cool blue hues of earlier decades are replaced with warm red and yellow hues around the start of the 20th Century.
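The maps show temperature anomalies: each year's value minus the average for the same place over the 1951–1980 baseline, then averaged over 20-year periods for the overlays. A minimal sketch of that bookkeeping for a single grid cell follows. It is not GISTEMP's actual pipeline (which also combines and adjusts station, ship and satellite records before gridding), and the record used here is made up purely to exercise the functions.

```python
from statistics import mean

def anomalies(record: dict[int, float], base_start: int = 1951,
              base_end: int = 1980) -> dict[int, float]:
    """Return each year's temperature minus the mean over the baseline years (inclusive)."""
    baseline = [temp for year, temp in record.items() if base_start <= year <= base_end]
    if not baseline:
        raise ValueError("record has no years inside the baseline period")
    reference = mean(baseline)
    return {year: temp - reference for year, temp in record.items()}

def period_means(values: dict[int, float], start: int = 1951,
                 width: int = 20) -> dict[int, float]:
    """Average values over consecutive `width`-year periods, keyed by each period's first year."""
    buckets: dict[int, list[float]] = {}
    for year, value in values.items():
        if year < start:
            continue  # ignore years before the first period
        period_start = start + (year - start) // width * width
        buckets.setdefault(period_start, []).append(value)
    return {p: mean(vs) for p, vs in sorted(buckets.items())}

# Made-up record for one grid cell: 14.0C in 1951, warming 0.01C per year through 2010.
record = {year: 14.0 + 0.01 * (year - 1951) for year in range(1951, 2011)}
for period, value in period_means(anomalies(record)).items():
    print(f"{period}-{period + 19}: {value:+.2f}C")
```

By construction the baseline years average to zero, so any later period that comes out positive is warmer than 1951–1980 at that location, which is what the shift from blue to red hues encodes.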

In this way, the tool aims to communicate the reality and variability of recorded climate change, and to compare each local picture with the trend in global average temperature.

The accompanying article can be found here.

See also Cal-Adapt and Climate Change Media Watch.

----

Read also "An Alarm in the Offing on Climate Change", The New York Times.

Friday, December 07. 2012

Via MIT Technology Review

-----

Apple doubles the size of the fuel cell installation at its new data center, a potential new energy model for cloud computing.

One of the ways Apple’s new data center will save energy is by using a white roof that reflects heat. Credit: Apple.

Apple is doubling the size of its fuel cell installation at its new North Carolina data center, making it a proving ground for large-scale on-site energy at data centers.

In papers filed with the state’s utilities commission last month, Apple indicated that it intends to expand capacity from five megawatts of fuel cells, which are now running, to a maximum of 10 megawatts. The filing was originally spotted by the Charlotte News Observer.

Apple says the much-watched project (Wired actually hired a pilot to take photos of it) will be one of the most environmentally benign data centers ever built because it will use several energy-efficiency tricks and run on biogas-powered fuel cells and a giant 20-megawatt solar array.

Beyond Apple’s eco-bragging rights, this data center (and one being built by eBay) should provide valuable insights to the rest of the cloud computing industry. Apple likely won’t give hard numbers on expenses but, if all works as planned, it will validate data center fuel cells for reliable power generation at this scale.

Stationary fuel cells are certainly well proven, but multi-megawatt installations are pretty rare. Data center customers for Bloom Energy, which is supplying Apple in North Carolina, typically have far less than a megawatt installed. Each Bloom Energy Server, which takes up about a full parking space, produces 200 kilowatts.
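At 200 kilowatts per server, the capacity figures translate directly into unit counts. A quick Python sketch, assuming every unit is the 200-kilowatt model quoted above and ignoring derating or spare units; `servers_needed` is a hypothetical helper, not a figure from the filing.

```python
import math

SERVER_KW = 200  # output per Bloom Energy Server, as quoted in the article

def servers_needed(capacity_mw: float, server_kw: float = SERVER_KW) -> int:
    """Hypothetical helper: units needed to reach a nameplate capacity, rounded up."""
    return math.ceil(capacity_mw * 1000 / server_kw)

# Apple's current installation and its planned maximum.
for mw in (5, 10):
    print(f"{mw} MW -> {servers_needed(mw)} servers")
```

By this arithmetic, the existing 5 megawatts corresponds to about 25 servers and the planned 10 megawatts to about 50, or roughly 50 parking spaces' worth of equipment.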

By going to 10 megawatts of capacity, Apple can claim the largest fuel cell-powered data center, overtaking eBay, which earlier this year announced plans for six megawatts’ worth of fuel cells at a data center in Utah. (See “EBay Goes All-in With Fuel Cell-Powered Data Center.”) It also opens up new ways of doing business.

Using fuel cells at this scale potentially changes how data center operators use grid power and traditional backup diesel generators. With its combination of solar power and fuel cells, the facility appears able to produce more than the 20 megawatts it needs at full steam. That means Apple could sell power back to the utility, or even operate independently and use the grid as backup power: a completely new configuration.
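The surplus claim is simple arithmetic on nameplate figures, but solar output varies through the day. A rough Python sketch, assuming the 10-megawatt fuel cell plan, the 20-megawatt solar array and a constant 20-megawatt load; the solar output fractions are illustrative assumptions, not figures from Apple's filing, and `net_export_mw` is a hypothetical helper.

```python
FUEL_CELL_MW = 10.0   # planned fuel cell capacity from the filing
SOLAR_PEAK_MW = 20.0  # nameplate size of the solar array
LOAD_MW = 20.0        # assumed constant facility demand at full steam

def net_export_mw(solar_fraction: float, load_mw: float = LOAD_MW) -> float:
    """Positive: surplus that could be sold to the utility. Negative: shortfall drawn from the grid."""
    if not 0.0 <= solar_fraction <= 1.0:
        raise ValueError("solar_fraction must be between 0 and 1")
    return FUEL_CELL_MW + SOLAR_PEAK_MW * solar_fraction - load_mw

# Illustrative solar output levels (fraction of the array's nameplate capacity).
for label, fraction in [("full sun", 1.0), ("half output", 0.5), ("night", 0.0)]:
    print(f"{label}: {net_export_mw(fraction):+.1f} MW")
```

Under these assumptions, the site would export 10 megawatts in full sun but draw 10 megawatts from the grid at night, which is one reason the grid would still serve as backup.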

Bloom Energy’s top data center executive, Peter Gross, told Data Center Insider that data center servers could have two power cords, one from the grid and one from the fuel cells. In the event of a power failure, the fuel cells, rather than backup diesel generators, could keep the servers humming.

Apple hasn’t disclosed how much it’s paying for all this, but the utility commission filing indicates it plans to monetize its choice of biogas over natural gas. The documents show that Apple is contracting with a separate company to procure biogas, the methane given off by landfills. Because biogas is a renewable source, Apple can receive compensation for renewable energy credits.

Proving that fuel cells and solar can handle a mission-critical workload at this scale is one thing. Whether it makes economic sense for companies other than cash-rich Apple and eBay is something different. Apple and eBay could save some money by installing fewer diesel generators. Investing in solar also gives companies a fixed electricity cost for years ahead, shielding them from spikes in utilities’ power prices.

But some of the most valuable information from these projects will be how the numbers pencil out. That might encourage conservative data center designers to take these technologies, which are substantially cleaner than the grid, more seriously.

Both operationally and financially, there’s a lot to learn down in Maiden. Let’s hope Apple is a bit more forthcoming about its data center than it is about what’s in the next iPhone.

Personal comment:

This looks like one of several (but far from enough) implementations of "the third industrial revolution" (J. Rifkin), definitely a book worth reading for a glimpse of a path toward a new (economic) model of clean energy and society, one in which the information-based Internet will (or might) merge with an energy-based Internet, and energy will become an (abundant) solution rather than a problem.

We've seen computer/Internet industries take over the music industry, and now the book industry, etc. Will we see them take over the energy industry? We can witness several "little things" going in that direction. Google energy in your Google+ "task bar" by 2030?

But the main point of all this is that if we manage, hopefully not too late, to move toward a clean energy model (fuel cells, solar, wind, etc.), that model should remain decentralized rather than concentrated as it is now. Note that we no longer have any choice but to make that move: an increase of 6°C in average temperature means massive ecosystem extinctions by the end of the century, and like it or not, we are part of those ecosystems. On the decentralization point, we should stay as vigilant as we are with today's Internet. It is important that the system remains participatory in some way, and that anybody can produce their own energy and share the surplus.
