Monday, August 31. 2020

fabric | ch, Satellite Daylight Pavilion | #luminosity #artificiality #interference

Note: during the long closure of Swiss museums last spring, fabric | ch nevertheless had the chance to see Public Platform of Future Past (pdf), one of its latest architectural investigations, integrated into the permanent collection of the Haus der elektronischen Künste (HeK) in Basel.

We are pleased that our work has been recognized by innovative, risk-taking curators (Sabine Himmelsbach, Boris Magrini) and has become part of the museum's collection, alongside several other works (by Jodi, !Mediengruppe Bitnik, Olia Lialina, Christina Kubisch, Zimoun, etc.).

It is also the first of our works whose certificate of authenticity has been issued by a blockchain! (datadroppers)

A second work, currently in production, will enter the collection in spring 2021 and will be documented at that time.

-----

Via Haus der elektronischen Künste

By Sabine Himmelsbach

HeK Sammlung - Sabine Himmelsbach über fabric | ch from HeK on Vimeo.

---

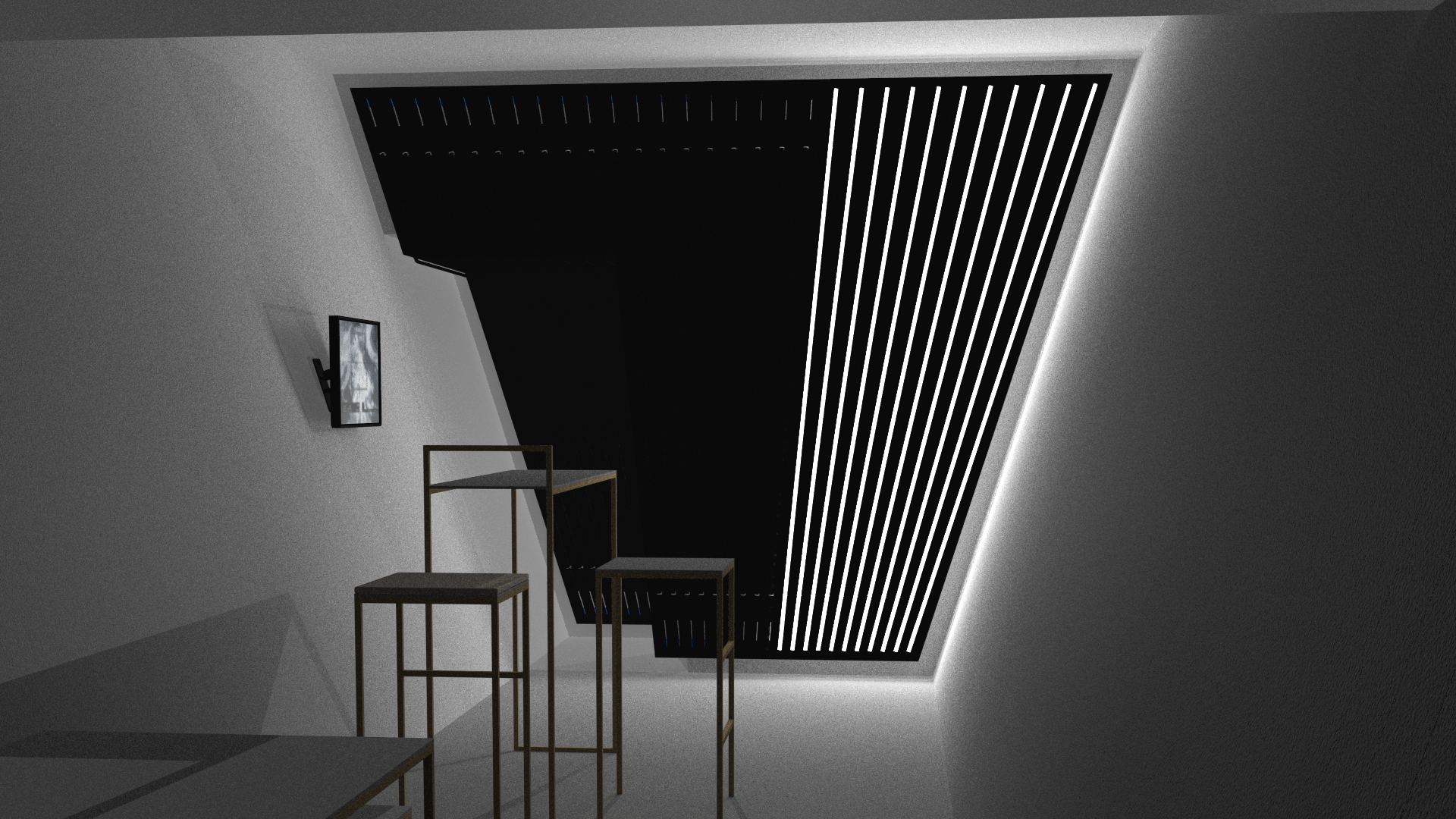

The video work consists of four separate videos, each offering a distinct, fixed view of a timelapse of the pavilion and its evolving light.

All four videos must be displayed simultaneously, following minimal instructions.

Wednesday, April 18. 2018

A Turing Machine Handmade Out of Wood | #history #computing #openculture

Note: Turing Machines are now undoubtedly part of pop culture, aren't they?

Via Open Culture (via Boing Boing)

-----

It took Richard Ridel six months of tinkering in his workshop to create this contraption: a mechanical Turing machine made out of wood. The silent video above shows how the machine works. But if you're left hanging, wanting to know more, I'd recommend reading Ridel's fifteen-page paper, where he carefully documents why he built the wooden Turing machine and what pieces and steps went into its construction.

If this video prompts you to ask "what exactly is a Turing machine?", also consider adding this short primer by philosopher Mark Jago to your media diet.
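As a complement to the primer, the ingredients of any Turing machine (a tape, a read/write head, a current state and a table of rules) fit in a few lines of code. The sketch below is an illustration only: Ridel's own program is not described here, so the toy program (adding 1 to a binary number) and the names `INCREMENT` and `run` are assumptions chosen for the example.

```python
from collections import defaultdict

# Transition table: (state, symbol read) -> (symbol to write, head move, next state).
# Toy program chosen for illustration: add 1 to a binary number; "_" is the blank symbol.
INCREMENT = {
    ("right", "0"): ("0", +1, "right"),  # scan right across the digits
    ("right", "1"): ("1", +1, "right"),
    ("right", "_"): ("_", -1, "carry"),  # past the last digit: step back and start adding
    ("carry", "1"): ("0", -1, "carry"),  # 1 + 1 = 0, carry one to the left
    ("carry", "0"): ("1", 0, "halt"),    # 0 + 1 = 1, nothing left to carry
    ("carry", "_"): ("1", 0, "halt"),    # carried past the leftmost digit
}

def run(program, tape_text, state="right", max_steps=10_000):
    """Run a one-tape Turing machine and return the non-blank part of the tape."""
    tape = defaultdict(lambda: "_", enumerate(tape_text))  # unbounded in both directions
    head, steps = 0, 0
    while state != "halt":
        if steps >= max_steps:
            raise RuntimeError("machine did not halt within max_steps")
        write, move, state = program[(state, tape[head])]
        tape[head] = write
        head += move
        steps += 1
    used = [i for i, symbol in tape.items() if symbol != "_"]
    if not used:
        return ""
    return "".join(tape[i] for i in range(min(used), max(used) + 1))

print(run(INCREMENT, "1011"))  # 11 + 1 = 12, prints 1100
```

Whatever the material (wood, LEGO or silicon), a physical Turing machine has to embody these same parts; only the rule table changes from one program to the next.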

Related Content:

Free Online Computer Science Courses

The Books on Young Alan Turing’s Reading List: From Lewis Carroll to Modern Chromatics

The LEGO Turing Machine Gives a Quick Primer on How Your Computer Works

The Enigma Machine: How Alan Turing Helped Break the Unbreakable Nazi Code

Saturday, February 17. 2018

Environmental Devices retrospective exhibition by fabric | ch until today! | #fabric|ch #accrochage #book

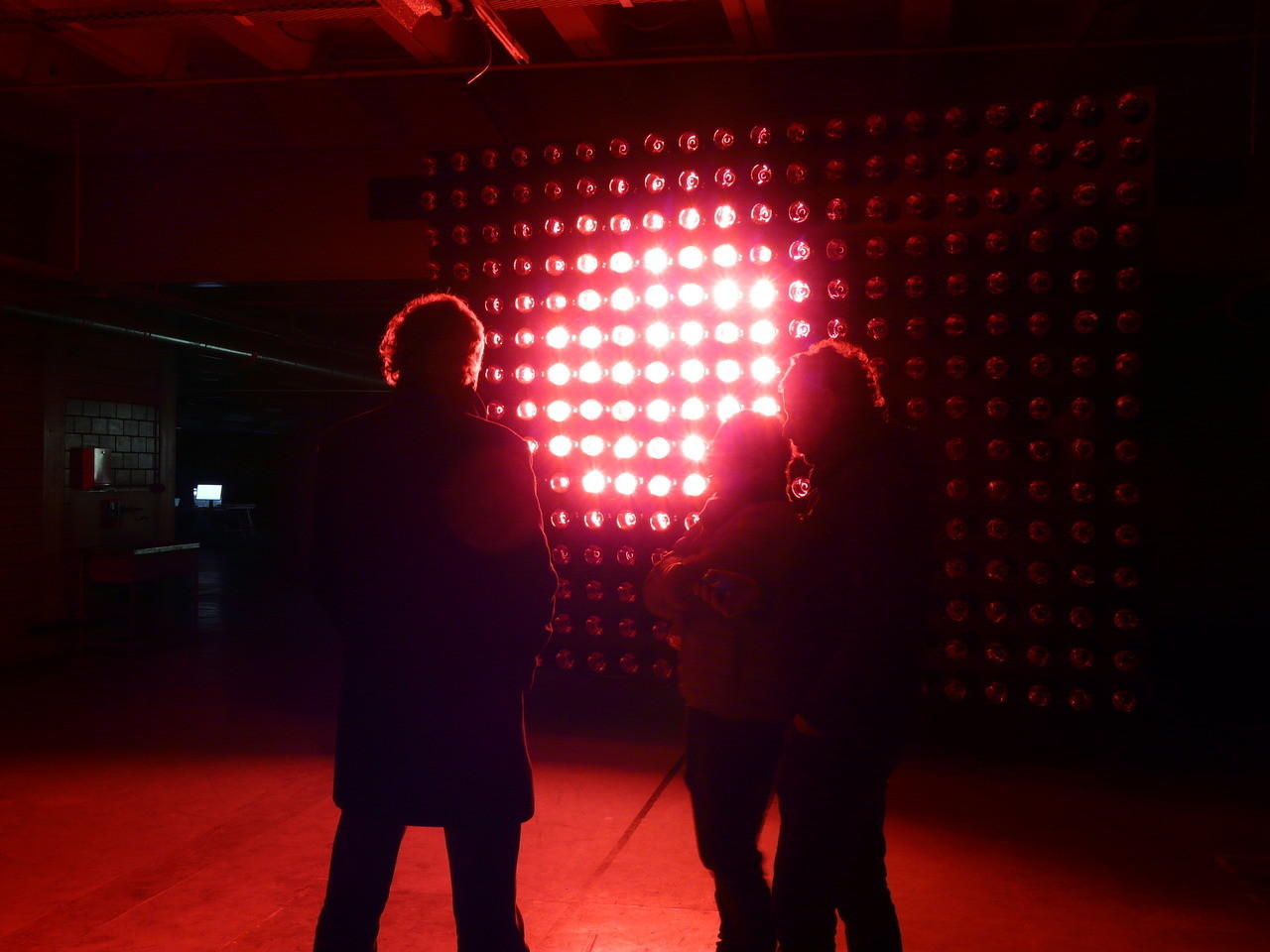

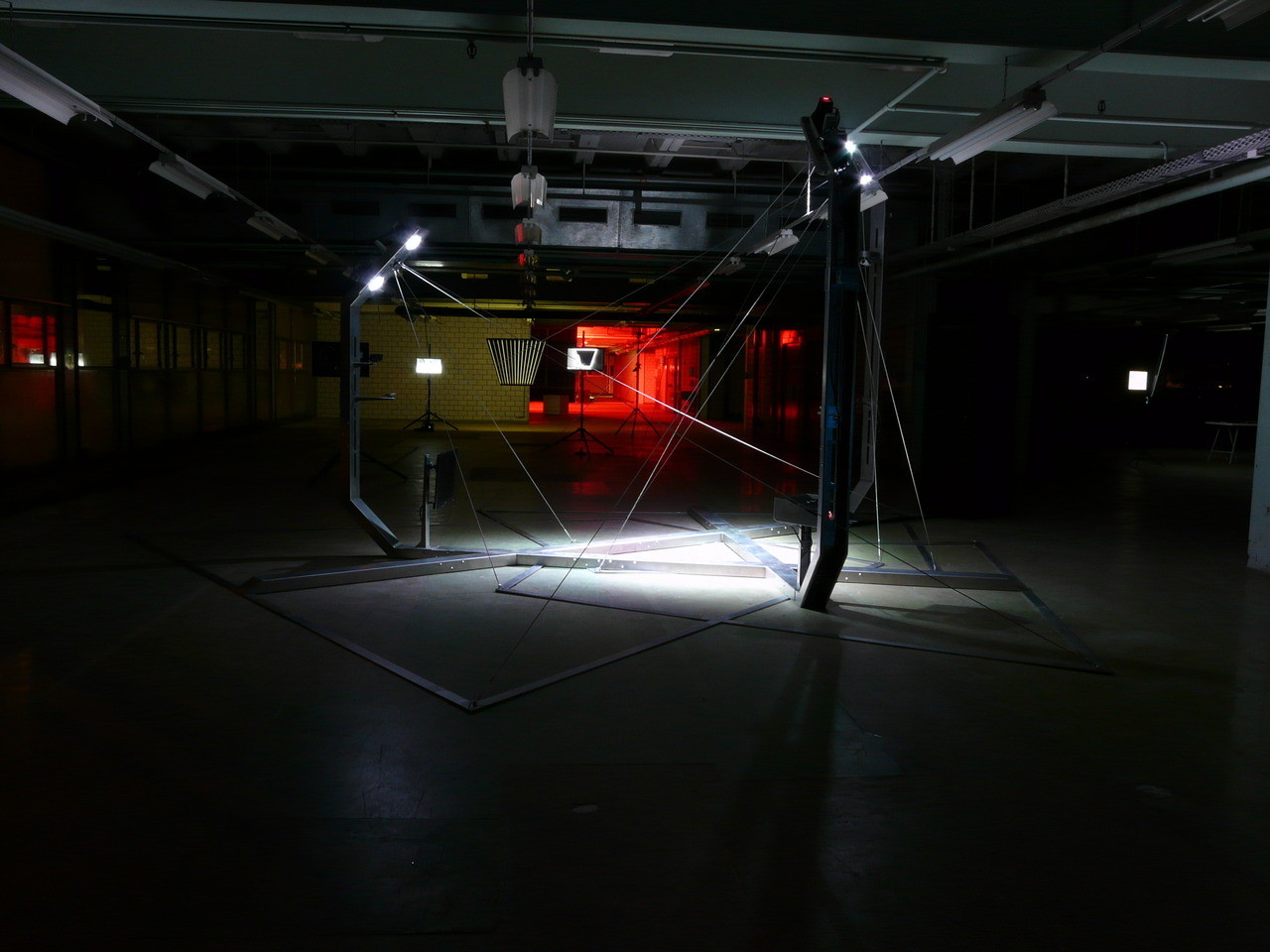

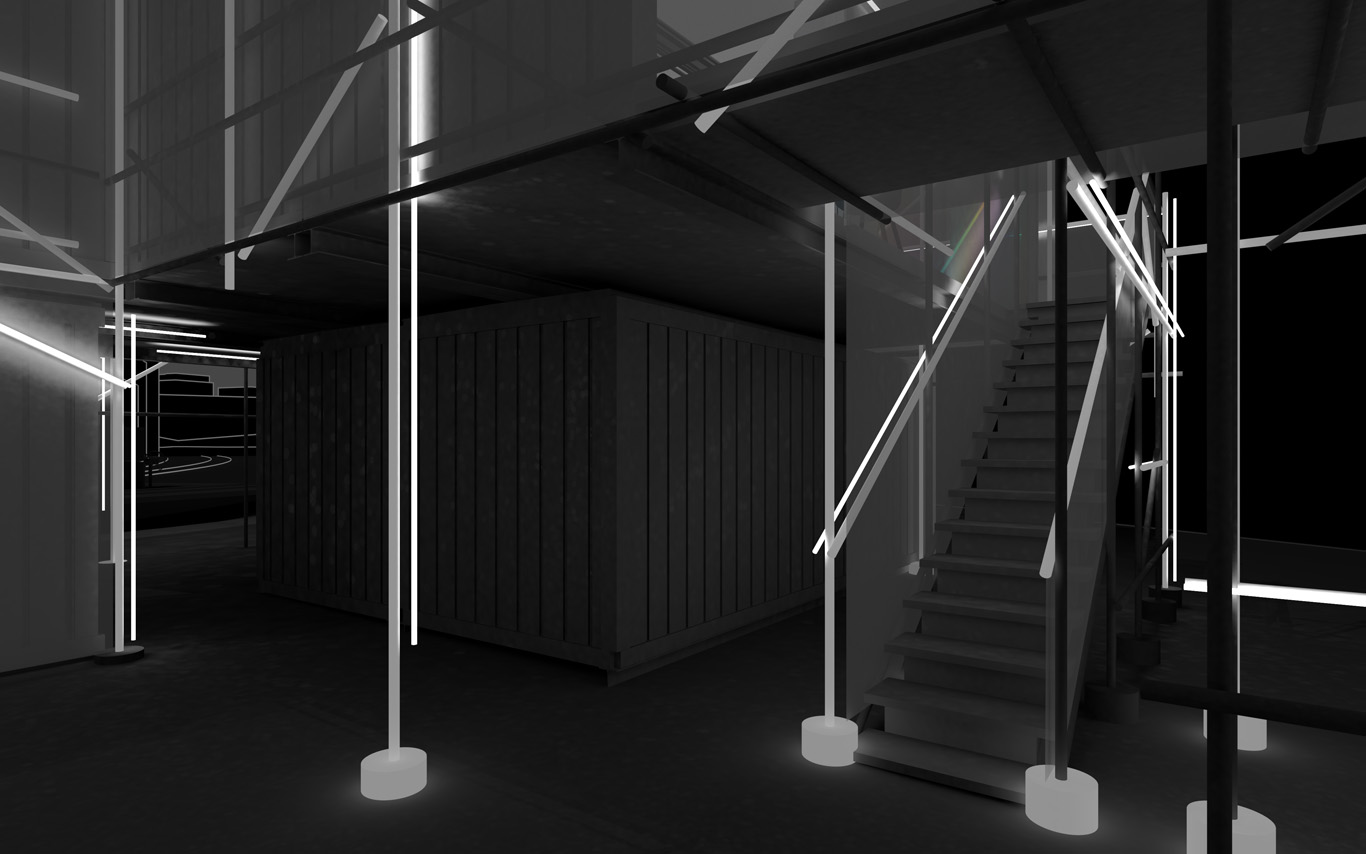

Note: a few pictures from the fabric | ch retrospective at #EphemeralKunsthalleLausanne (the disused Mayer & Soutter factory in Renens, near Lausanne).

The exhibition was set up as part of the production of a monographic book and is still open today (Saturday 17.02, 5.00-8.00pm)!

By fabric | ch

-----


All images Ch. Guignard.

Monday, February 05. 2018

Environmental Devices · Projets & expérimentations (1997-2017) | #fabric|ch #exhibition

Note: 2017 was very busy (which is why I wasn't able to post much on | rblg...), and the start of 2018 is turning out the same. Fortunately and unfortunately!

I hope things will calm down a bit next spring, but in the meantime, we're setting up an exhibition with fabric | ch: a selection of works retracing 20 years of activity, which will also serve as the setting for a photo shoot for a forthcoming book.

The event will take place in a disused factory near Lausanne (a historical monument from the Second Industrial Revolution).

If you are around, do not hesitate to knock at the door!

By fabric | ch

-----

Environmental Devices · Projets & expérimentations (1997-2017)

Image: Daniela & Tonatiuh.

For a few days, as part of the preparation of a book, a selection of works retracing 20 years of fabric | ch's activities will be on display in a disused factory close to Lausanne.

·

Information: http://www.fabric.ch/xx/

·

Opening on February 9, 5.00-11.00pm

·

Visiting hours:

Saturday - Sunday 10-11.02, 4.00-8.00pm

Wednesday 14.02, 5.00-8.00pm

Friday-Saturday 16-17.02, 5.00-8.00pm.

·

Or by appointment: 021.3511021

Guided tours at 6.00pm

-----

For a few days, as part of the making of a monographic book, a selection of works retracing 20 years of fabric | ch's activities is on display.

·

Information: http://www.fabric.ch/xx

·

Opening on February 9, 5.00-11.00pm

·

Visiting hours:

Saturday - Sunday 10-11.02, 4.00-8.00pm

Wednesday 14.02, 5.00-8.00pm

Friday-Saturday 16-17.02, 5.00-8.00pm

·

Or by appointment: 021.3511021

Guided tours at 6.00pm.


Monday, June 19. 2017

Sucking carbon from the air | #device #environment

Note: the following post has been widely reblogged recently, which is why I waited a bit before archiving it on | rblg.

It interests me as a kind of "device" that can handle environmental parameters. In this sense, it undoubtedly has architectural characteristics and could extend into an "architectural device". Think, for example, of the ongoing Jade Eco Park by Philippe Rahm architectes, whose competition proposal was filled with devices. Or, closer to home, of our own work: small "environmental devices" like Perpetual (Tropical) SUNSHINE, Satellite Daylight, etc.; architecture as device, like Public Platform of Future Past, I-Weather as Deep Space Public Lighting or Heterochrony; or even data tools like Deterritorialized Living.

In fact, there is a "devices" tag on this blog for precisely this reason: to collect references for these kinds of architectures that trigger modifications in the environment.

Via Science

-----

In Switzerland, a giant new machine is sucking carbon directly from the air

The world's first commercial plant for capturing carbon dioxide directly from the air opened yesterday, refueling a debate about whether the technology can truly play a significant role in removing greenhouse gases already in the atmosphere. The Climeworks AG facility near Zurich becomes the first ever to capture CO2 at industrial scale from air and sell it directly to a buyer.

Developers say the plant will capture about 900 tons of CO2 annually — or the approximate level released from 200 cars — and pipe the gas to help grow vegetables.

While the amount of CO2 is a small fraction of what firms and climate advocates hope to trap at large fossil fuel plants, Climeworks says its venture is a first step toward its goal of capturing 1 percent of the world's global CO2 emissions with similar technology. To do so, there would need to be about 250,000 similar plants, the company says.
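The figures quoted above can be cross-checked with simple arithmetic. This sketch only multiplies and divides the article's own numbers; the last value is what the "1 percent" claim implies, not an independent emissions statistic.

```python
ANNUAL_CAPTURE_T = 900            # tons of CO2 per year, as quoted for the Zurich plant
CAR_EQUIVALENT = 200              # cars, as quoted
PLANTS_FOR_1_PERCENT = 250_000    # plants the company says would be needed

per_car_t = ANNUAL_CAPTURE_T / CAR_EQUIVALENT                # implied tons per car per year
fleet_capture_t = ANNUAL_CAPTURE_T * PLANTS_FOR_1_PERCENT    # yearly capture of all plants
implied_global_t = fleet_capture_t * 100                     # if that is 1 percent, the total is 100x

print(f"{per_car_t} t per car per year")
print(f"{fleet_capture_t / 1e6:.0f} Mt per year for 250,000 plants")
print(f"{implied_global_t / 1e9:.1f} Gt of global emissions implied")
```

This prints 4.5 t per car per year, 225 Mt per year for the whole fleet of plants, and 22.5 Gt of implied global emissions.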

"Highly scalable negative emission technologies are crucial if we are to stay below the 2-degree target [for global temperature rise] of the international community," said Christoph Gebald, co-founder and managing director of Climeworks. The plant sits on top of a waste heat recovery facility that powers the process. Fans push air through a filter system that collects CO2. When the filter is saturated, CO2 is separated at temperatures above 100 degrees Celsius.

The gas is then sent through an underground pipeline to a greenhouse operated by Gebrüder Meier Primanatura AG to help grow vegetables, like tomatoes and cucumbers.

Gebald and Climeworks co-founder Jan Wurzbacher said the CO2 could have a variety of other uses, such as carbonating beverages. They established Climeworks in 2009 after working on air capture during postgraduate studies in Zurich.

The new plant is intended to run as a three-year demonstration project, they said. In the next year, the company said it plans to launch additional commercial ventures, including some that would bury gas underground to achieve negative emissions.

"With the energy and economic data from the plant, we can make reliable calculations for other, larger projects," said Wurzbacher.

Note: the article continues below with interesting critical comments by Howard Herzog (MIT) concerning the real sustainable effect of the technology.

'Sideshow'

There are many critics of air capture technology who say it would be much cheaper to perfect carbon capture directly at fossil fuel plants and keep CO2 out of the air in the first place. Among the skeptics is Massachusetts Institute of Technology senior research engineer Howard Herzog, who called it a "sideshow" during a Washington event earlier this year. He estimated that total system costs for air capture could be as much as $1,000 per ton of CO2, or about 10 times the cost of carbon removal at a fossil fuel plant.
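Herzog's two figures can be turned into rough orders of magnitude. Note the hedges: Herzog did not comment on Climeworks specifically, and Climeworks did not release its costs, so applying his upper estimate to the plant's quoted 900 tons per year is a hypothetical upper bound, not a reported figure.

```python
AIR_CAPTURE_USD_PER_T = 1_000   # Herzog's upper estimate for air capture (from the text)
COST_RATIO = 10                 # "about 10 times" the cost at a fossil fuel plant
PLANT_CAPACITY_T = 900          # Climeworks plant's quoted annual capture

fossil_plant_usd_per_t = AIR_CAPTURE_USD_PER_T / COST_RATIO    # implied capture cost at a plant
annual_cost_usd = AIR_CAPTURE_USD_PER_T * PLANT_CAPACITY_T     # hypothetical yearly upper bound

print(f"implied capture cost at a fossil plant: about ${fossil_plant_usd_per_t:.0f} per ton")
print(f"upper-bound yearly cost for 900 t: about ${annual_cost_usd:,}")
```

This gives about $100 per ton at a fossil fuel plant and at most about $900,000 per year for the plant's capacity.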

"At that price, it is ridiculous to think about right now. We have so many other ways to do it that are so much cheaper," Herzog said. He did not comment specifically on Climeworks but noted that the cost for air capture is high partly because CO2 is diffuse in the air, while it is more concentrated in the stream from a fossil fuel plant. Climeworks did not immediately release detailed information on its costs but said in a statement that the Swiss Federal Office of Energy would assist in financing. The European Union also provided funding.

In 2015, the National Academies of Sciences, Engineering and Medicine released a report saying climate intervention technologies like air capture were not a substitute for reducing emissions. Last year, two European scientists wrote in the journal Science that air capture and other "negative emissions" technologies are an "unjust gamble," distracting the world from viable climate solutions (Greenwire, Oct. 14, 2016).

Engineers have been toying with the technology for years, and many say it is a needed option to keep temperatures to controllable levels. It's just a matter of lowering costs, supporters say. More than a decade ago, entrepreneur Richard Branson launched the Virgin Earth Challenge and offered $25 million to the builder of a viable air capture design.

Climeworks was a finalist in that competition, as were companies like Carbon Engineering, which is backed by Microsoft Corp. co-founder Bill Gates and is testing air capture at a pilot plant in British Columbia.

-----

...

And while we're here, let's also mention a similar device ("smog removal" for Chinese cities) by Studio Roosegaarde: the Smog Free Project.

Friday, May 12. 2017

The World’s Largest Artificial Sun Could Help Generate Clean Fuel | #conditioning #energy

Note: an amazing climatic device.

Here it serves clean energy experiments. We would have loved to have that kind of device (and budget ;)) when we set up Perpetual Tropical Sunshine!

-----

Don’t lean against the light switch at the Synlight building in Jülich, Germany—if you do, things might get rather hotter than you can cope with.

The new facility is home to what researchers at the German Aerospace Center, known as DLR, have called the “world's largest artificial Sun.” Across a single wall in the building sit a series of Xenon short-arc lamps—the kind used in large cinemas to project movies. But in a huge cinema there would be one lamp. Here, spread across a surface 45 feet high and 52 feet wide, there are 140.

When all those lamps are switched on and focused on the same 20 by 20 centimeter spot, they create light that’s 10,000 times more intense than solar radiation anywhere on Earth. At the center, temperatures reach over 3,000 °C.
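To put the quoted figures into more familiar units, here is a rough order-of-magnitude sketch. It assumes a "one sun" reference of 1 kW/m² (a common convention for peak irradiance at the Earth's surface, not a number from the article), so the resulting power is an estimate, not a DLR specification.

```python
FT_TO_M = 0.3048          # exact length of the international foot in meters
ONE_SUN_W_M2 = 1_000      # assumption: "one sun" taken as a 1 kW/m² reference irradiance
CONCENTRATION = 10_000    # "10,000 times more intense" than sunlight (from the text)

wall_h_m = 45 * FT_TO_M           # lamp wall height
wall_w_m = 52 * FT_TO_M           # lamp wall width
spot_area_m2 = 0.20 * 0.20        # the 20 x 20 cm target spot

flux_w_m2 = CONCENTRATION * ONE_SUN_W_M2             # irradiance on the spot
power_on_spot_kw = flux_w_m2 * spot_area_m2 / 1_000  # power landing on the spot, in kW

print(f"lamp wall: about {wall_h_m:.1f} m x {wall_w_m:.1f} m")
print(f"flux: about {flux_w_m2 / 1e6:.0f} MW/m², power on the spot: about {power_on_spot_kw:.0f} kW")
```

Under this assumption, the wall is roughly 13.7 m x 15.8 m and the spot receives about 10 MW/m², or some 400 kW on 0.04 m².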

The setup is being used to mimic large concentrated solar power plants, which use a field full of adjustable mirrors to focus sunlight onto a small, incredibly hot area, where it melts salt that is then used to create steam and generate electricity.

Researchers at DLR, though, think that a similar mirror setup could be used to power a high-energy reaction where hydrogen is extracted from water vapor. In theory, that process could supply a constant and affordable source of liquid hydrogen fuel—something that clean energy researchers continue to lust after, because it creates no carbon emissions when burned.

Trouble is, folks at DLR don’t quite yet know how to make it happen. So they built a laboratory rig to allow them to tinker with the process using artificial light instead of reflected sunlight—a setup which, as Gizmodo notes, uses the equivalent of a household's entire year of electricity during just four hours of operation, somewhat belying its green aspirations.

Of course, it’s far from the first project to aim to create hydrogen fuel cheaply: artificial photosynthesis, seawater electrolysis, biomass reactions, and many other projects have all tried—and so far failed—to make it a cost-effective exercise. So now it’s over to the Sun. Or a fake one, for now.

(Read more: DLR, Gizmodo, “World’s Largest Solar Thermal Power Plant Delivers Power for the First Time,” “A Big Leap for an Artificial Leaf,” "A New Source of Hydrogen for Fuel-Cell Vehicles")

Tuesday, July 05. 2016

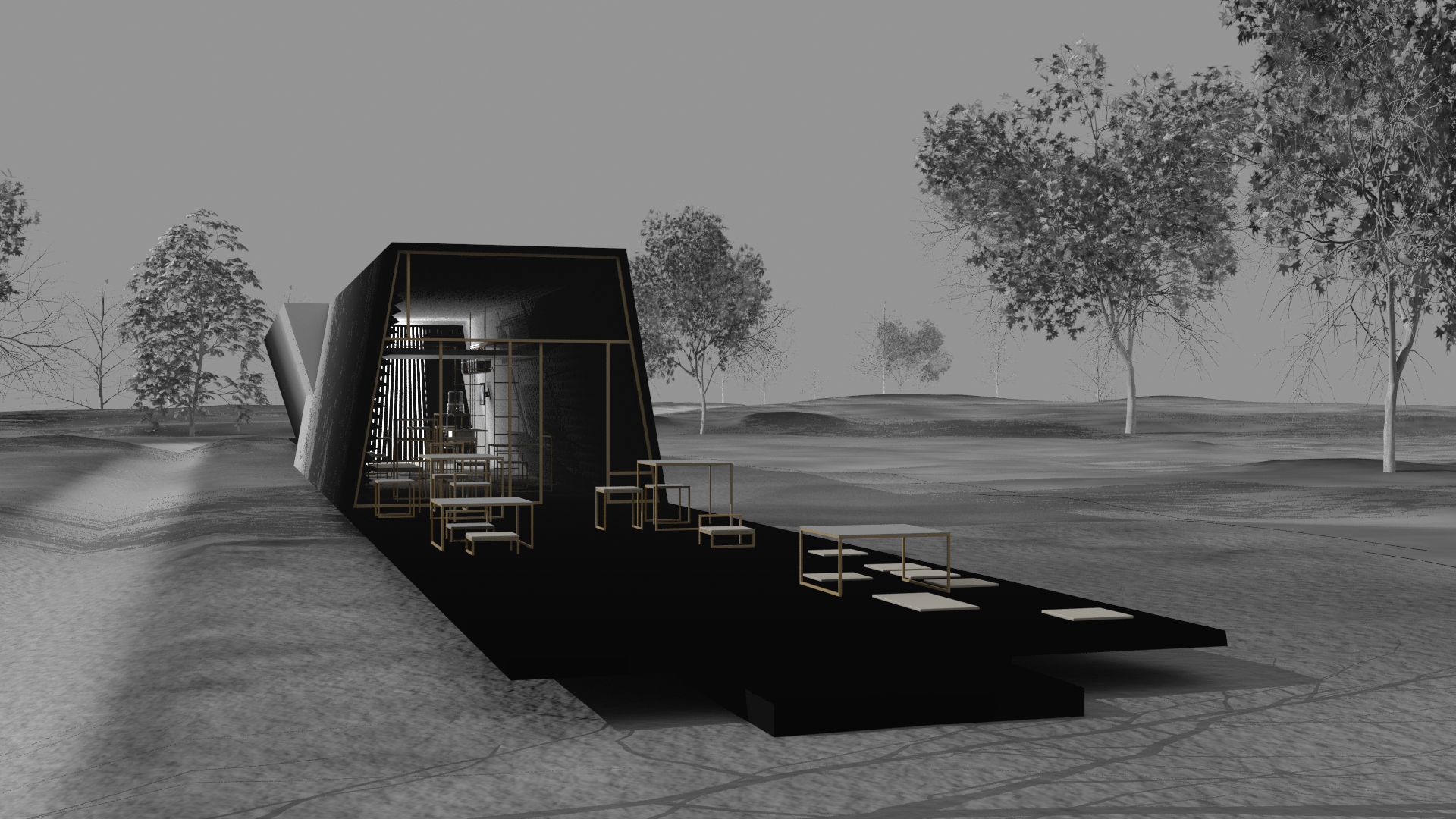

Public Platform of Future-Past, extended study phase. Bots, "Ar.I." & tools? | #data #monitoring #architecture

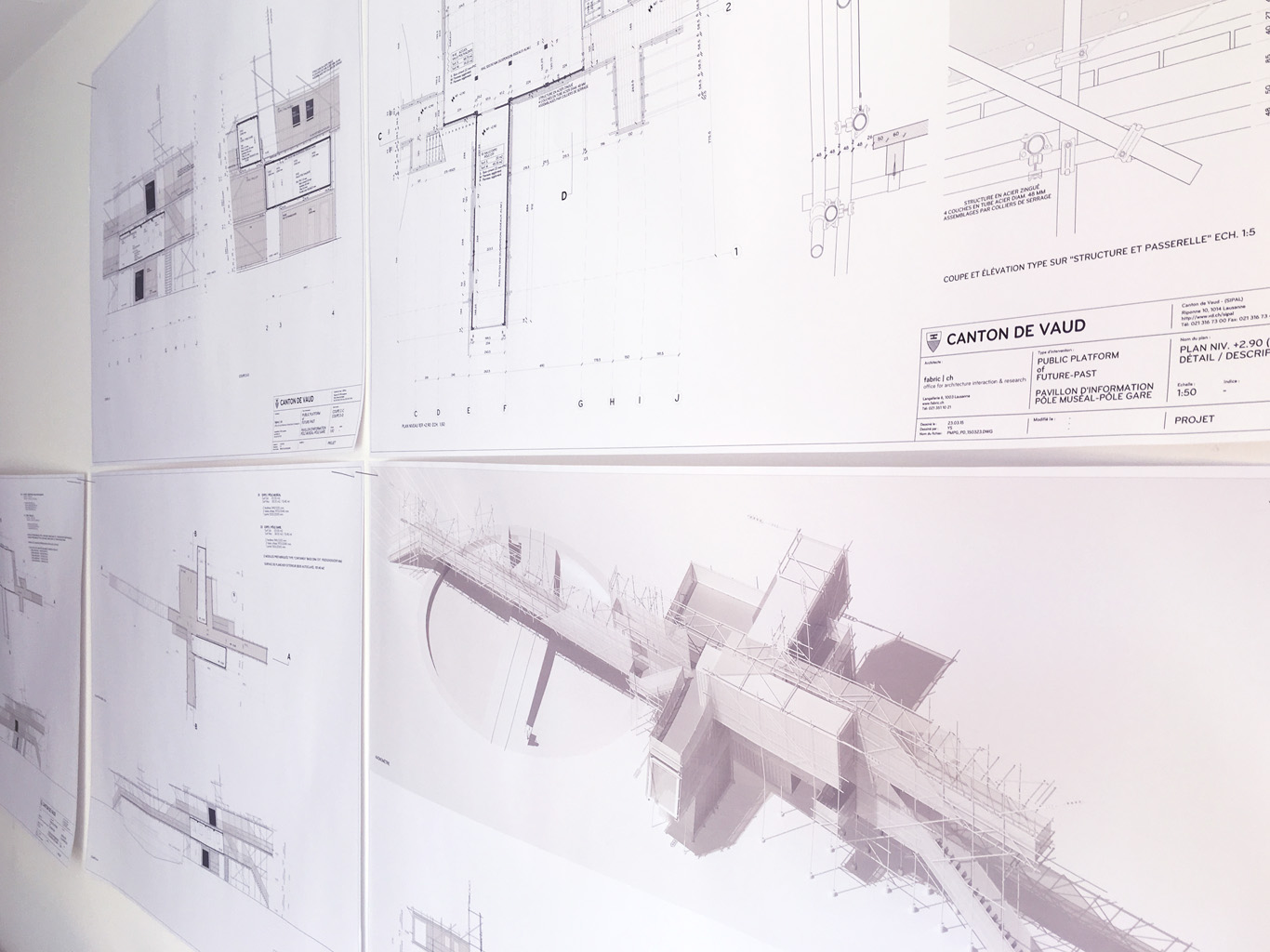

Note: following my previous post documenting the project Platform of Future-Past (fabric | ch's recent winning competition proposal), I am publishing several additional images and explanations about the second phase of the project, for which we were mandated by the Canton de Vaud (SiPAL).

The first part of this article gives complementary explanations about the project, but I am also taking the opportunity to post related works and research we've done in parallel on particular implications of the platform proposal. This will hopefully give a clearer understanding of the way we try to combine experiments and exhibitions, the creation of "tools" and the design of larger proposals in our open work process.

Notably, these related works concerned the approach to data, the breaking down of the environment into computable elements and the inevitable questions raised by their use as part of a public architecture project.

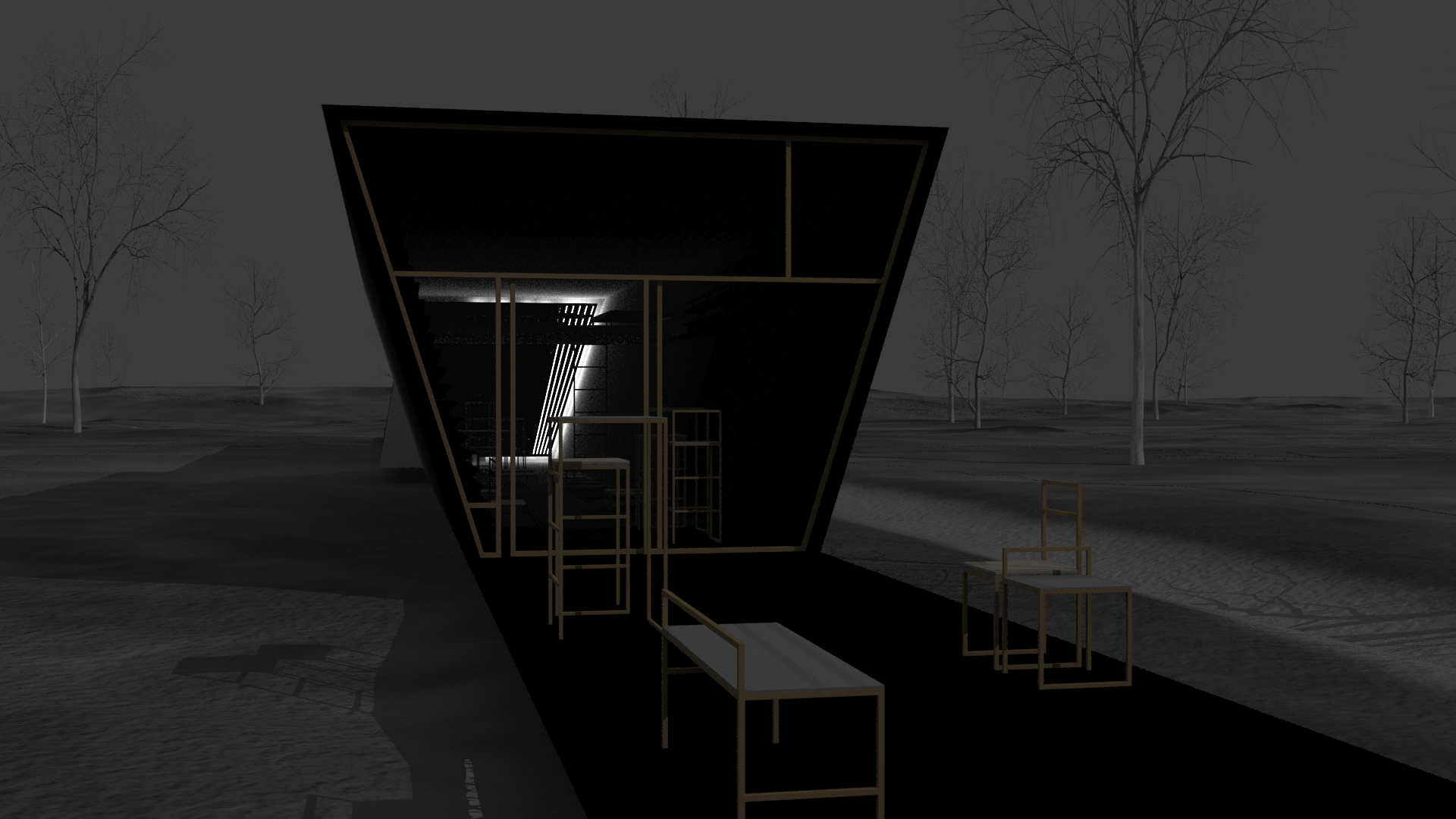

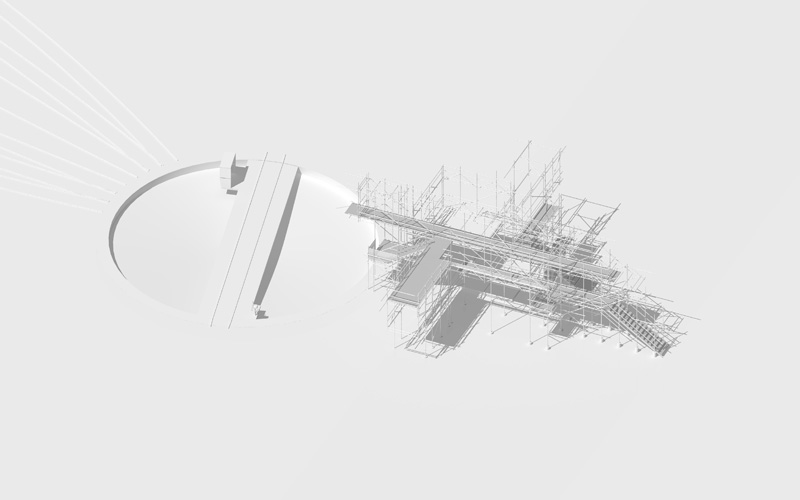

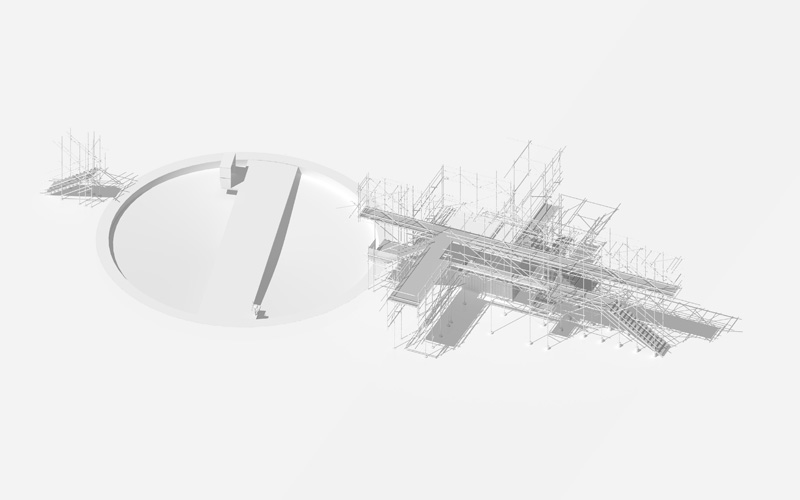

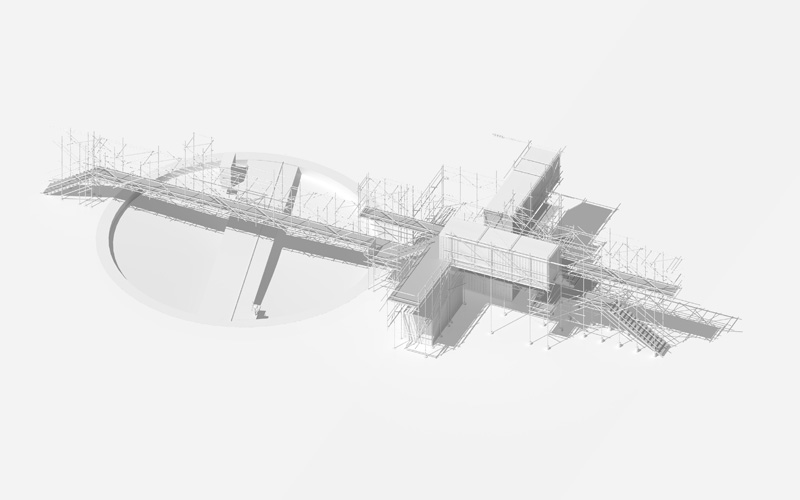

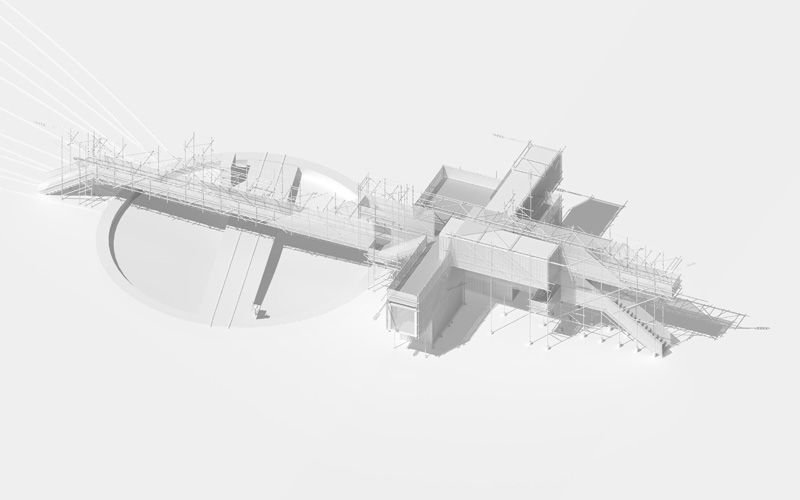

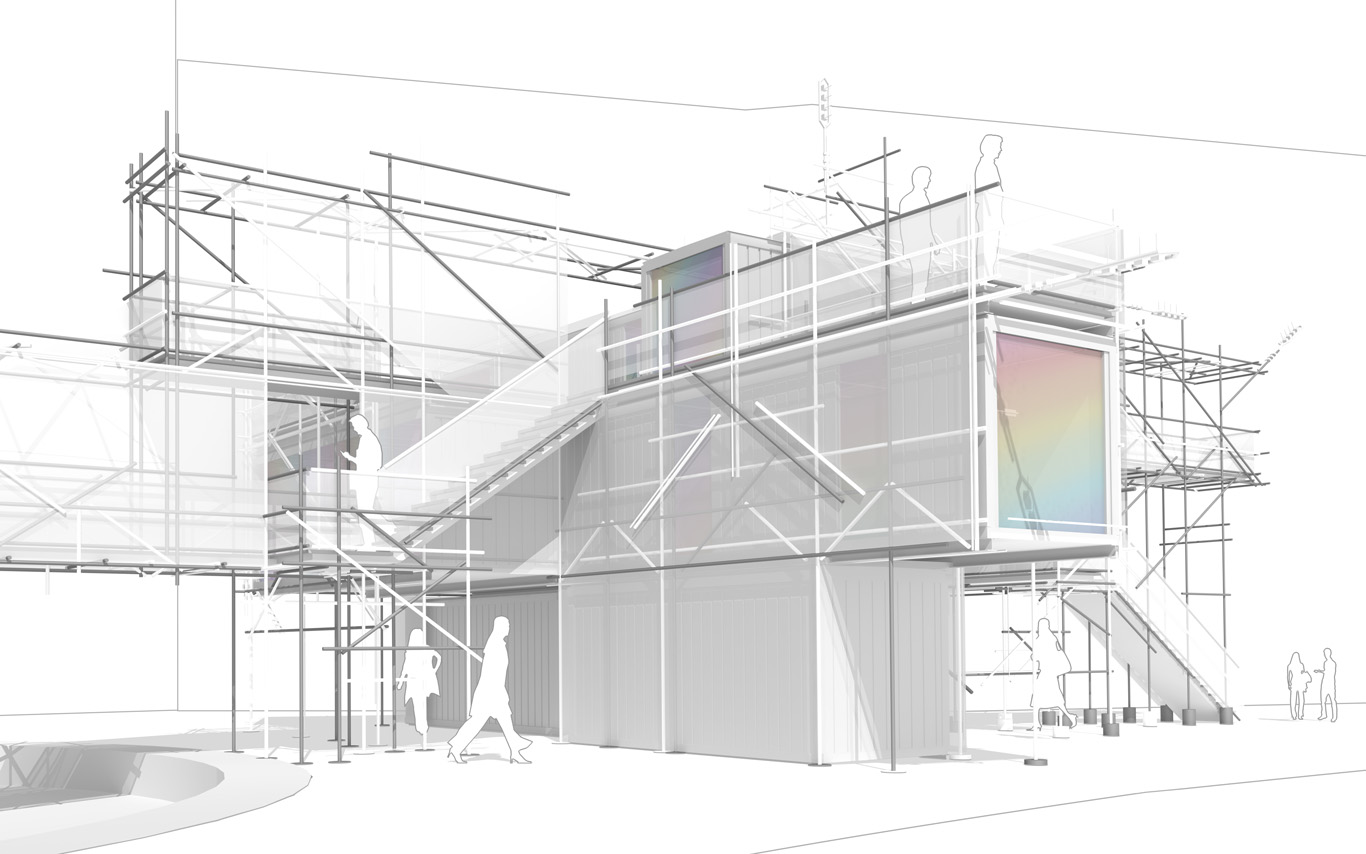

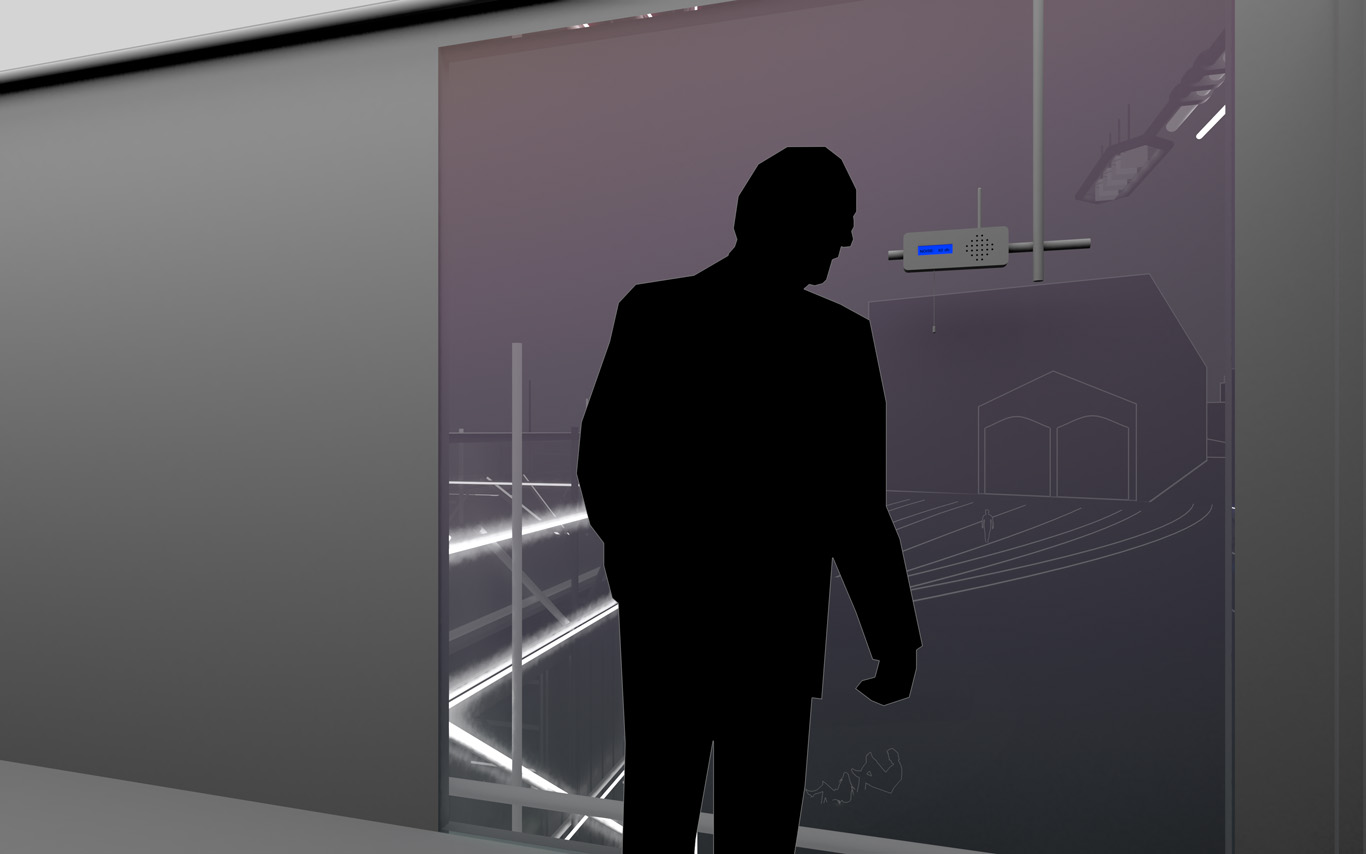

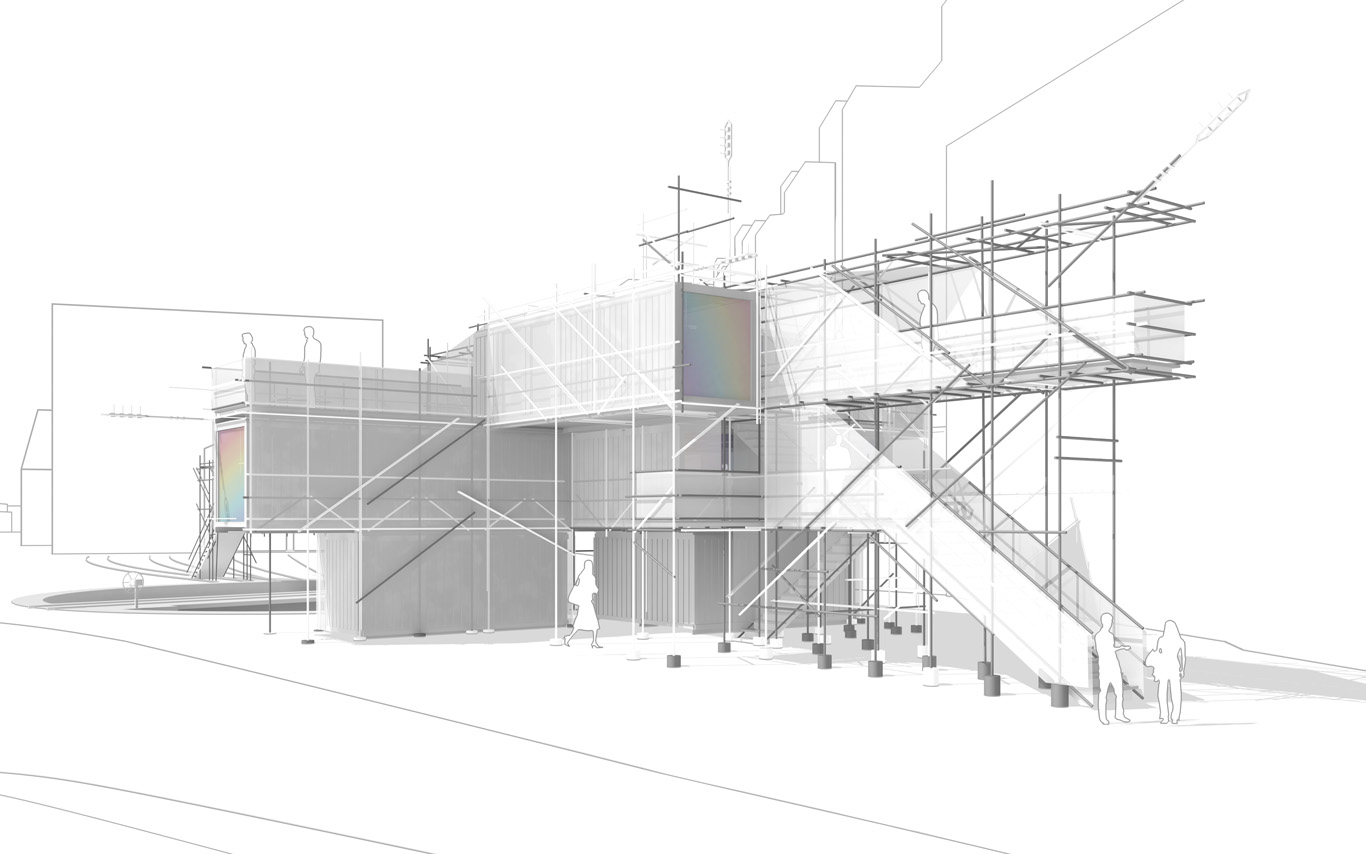

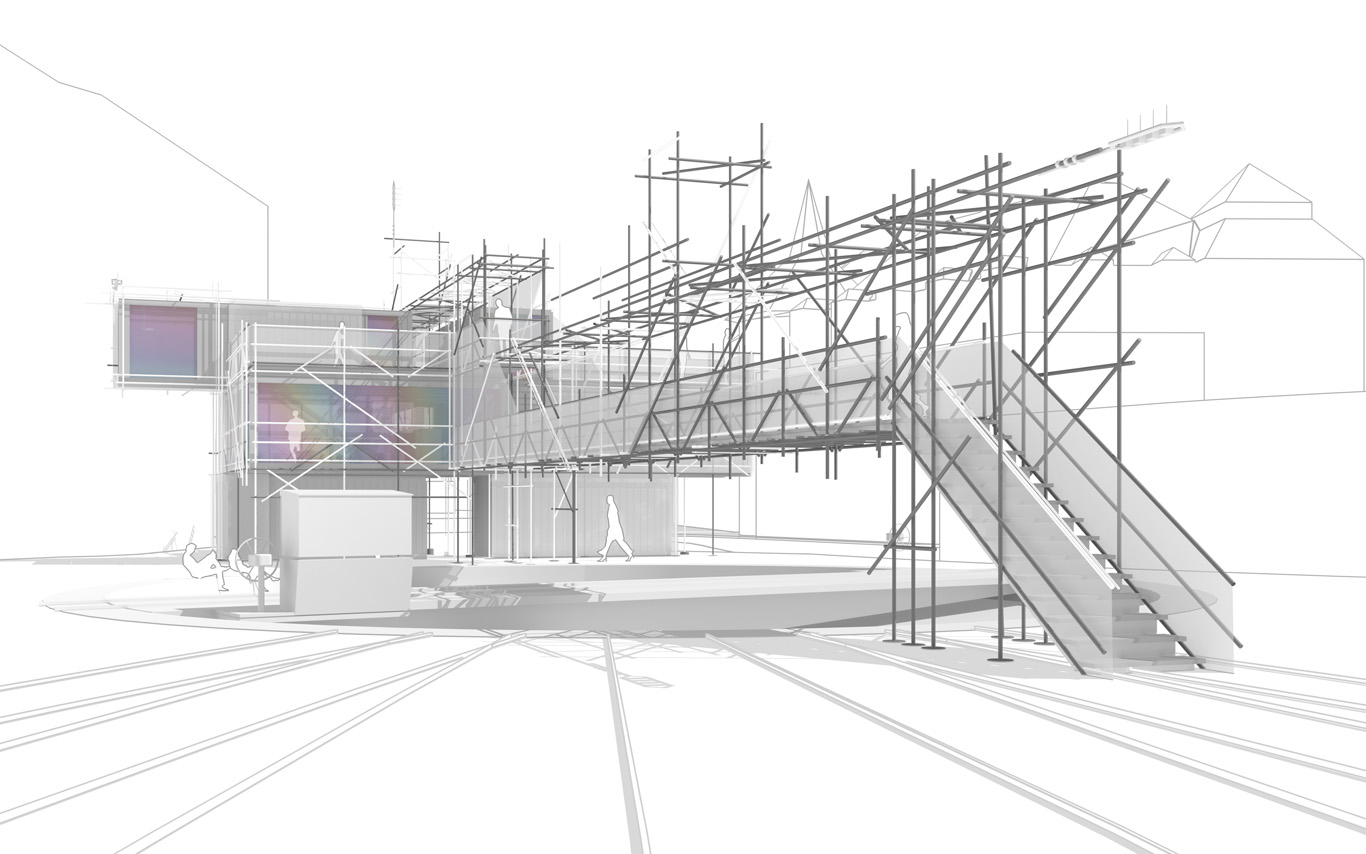

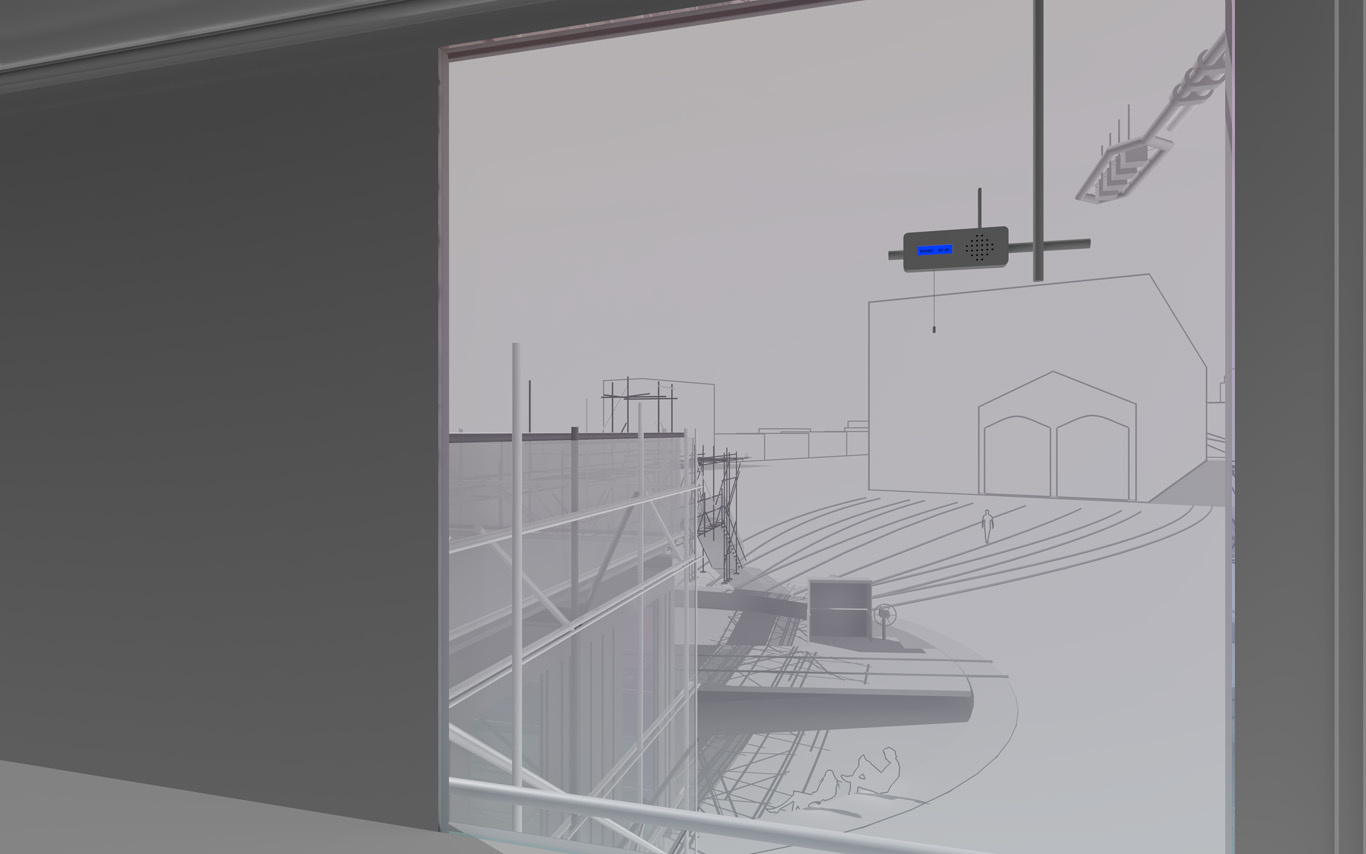

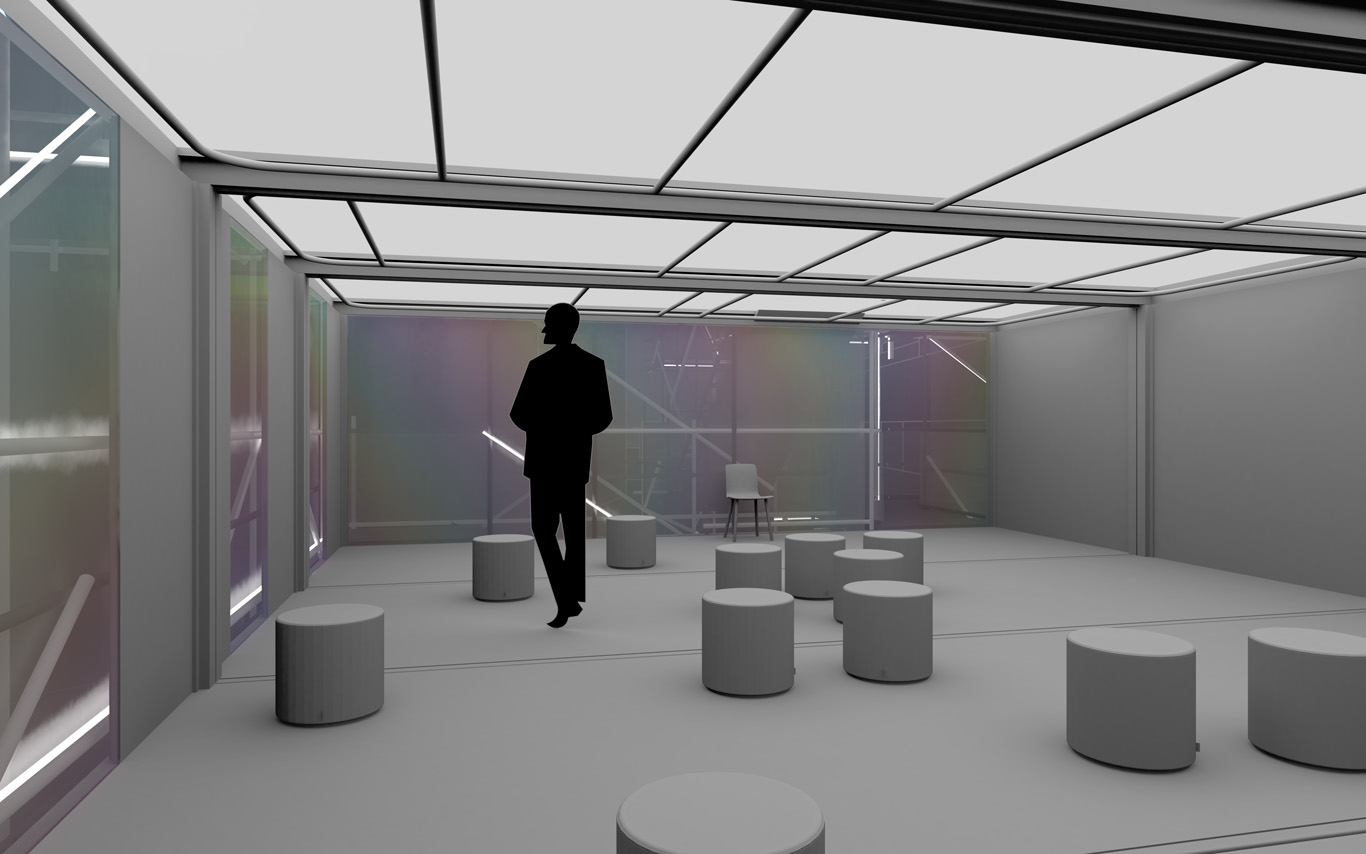

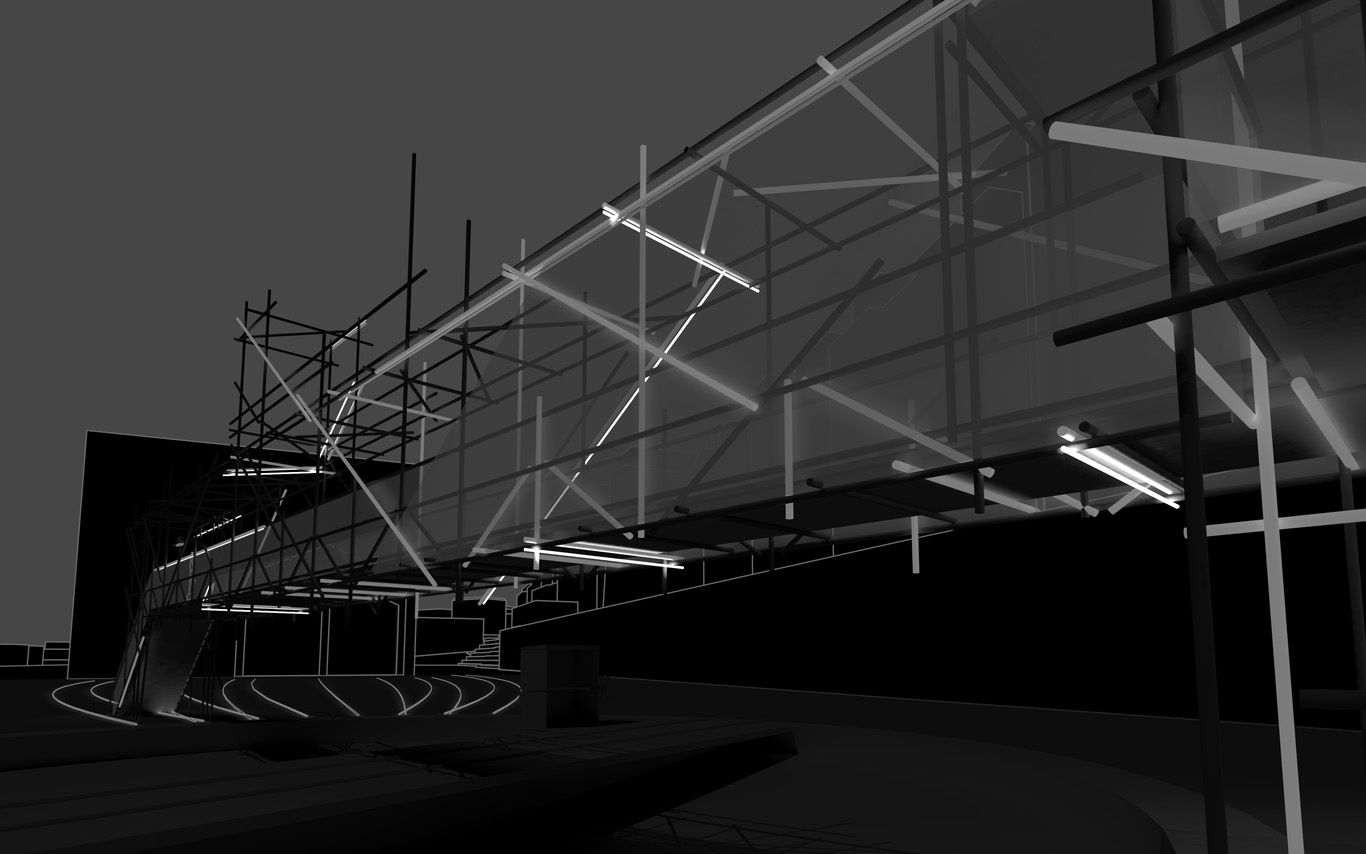

The information pavilion was potentially a slow, analog and digital "shape/experience shifter", as it was planned to be built in several successive steps over the years and to possibly "reconfigure" itself to sense and observe its changing surroundings.

The pavilion therefore kept an unfinished, almost sketched flavour as part of its DNA, inspired by old meshed constructions such as bamboo scaffolding. This construction principle was meant to help it "shift" if and when necessary.

In general terms, the pavilion answered the conventional public program of an observation deck overlooking a construction site, which often comes with the task of documenting the ongoing building process. We turned this "monitoring dimension" (the production of data) of such a program into a base element of our proposal. That's where a former experimental installation, Heterochrony, helped us.

As you may notice, the word "Public" was added to the project's title between the two phases, making it Public Platform of Future-Past (PPoFP), an addition we believe was important. This is because the PPoFP was envisioned to monitor and use environmental data concerning the direct surroundings of the information pavilion (but NO DATA about uses or users). We declared these data Public, while the treatment of the monitored data would also become part of the project, in other words "architectural" (more on this below).

For these monitored data to stay public, just like the space of the pavilion itself, which would be part of the public domain and physically extend it, we had to ensure that the data wouldn't be used by a private third-party service. We needed to keep an eye on the algorithms that would process the spatial data or, better still, write them ourselves according to our design goals (more on this below).

That's where architecture meets code and data (again), obviously...

By fabric | ch

-----

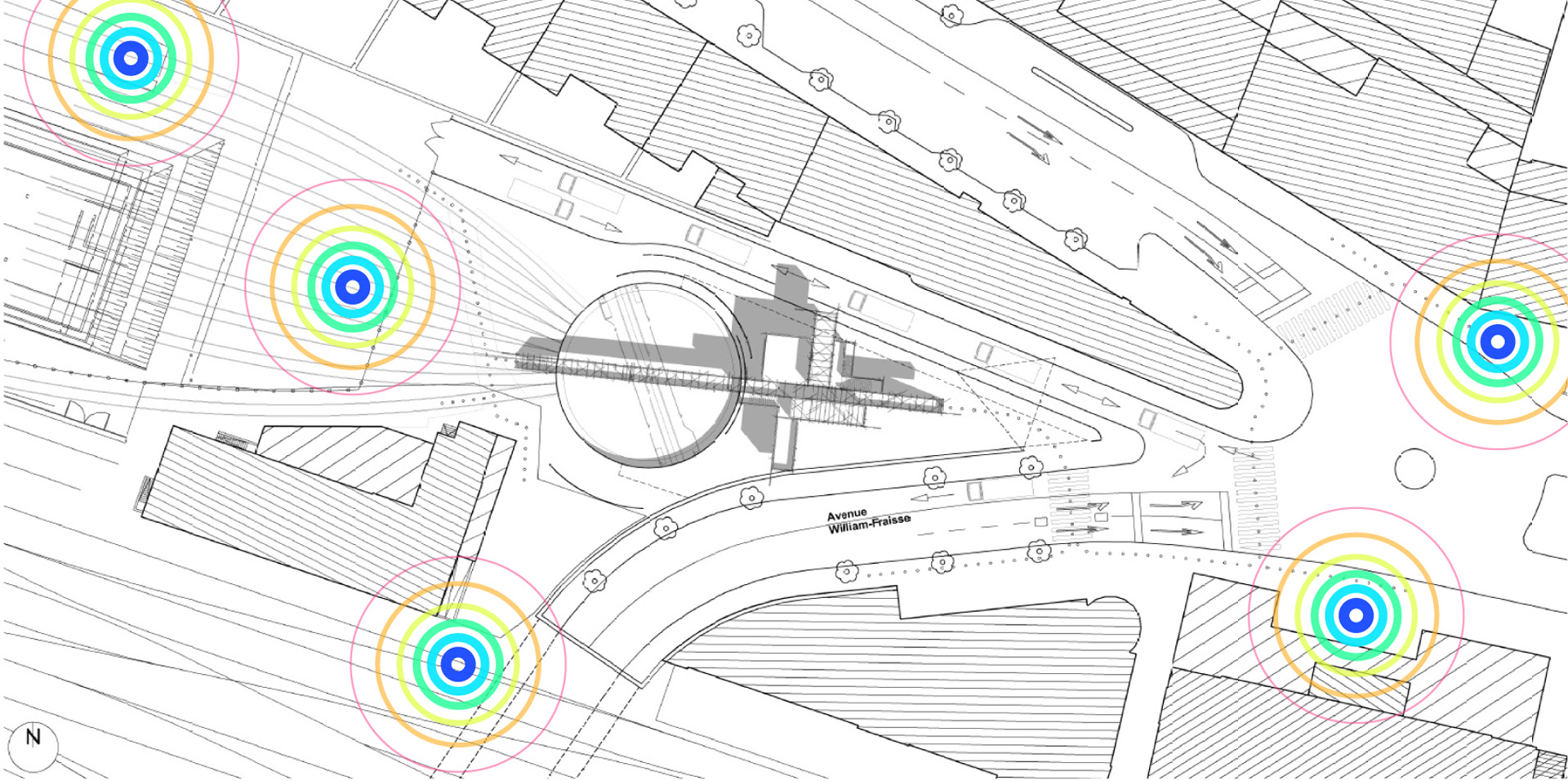

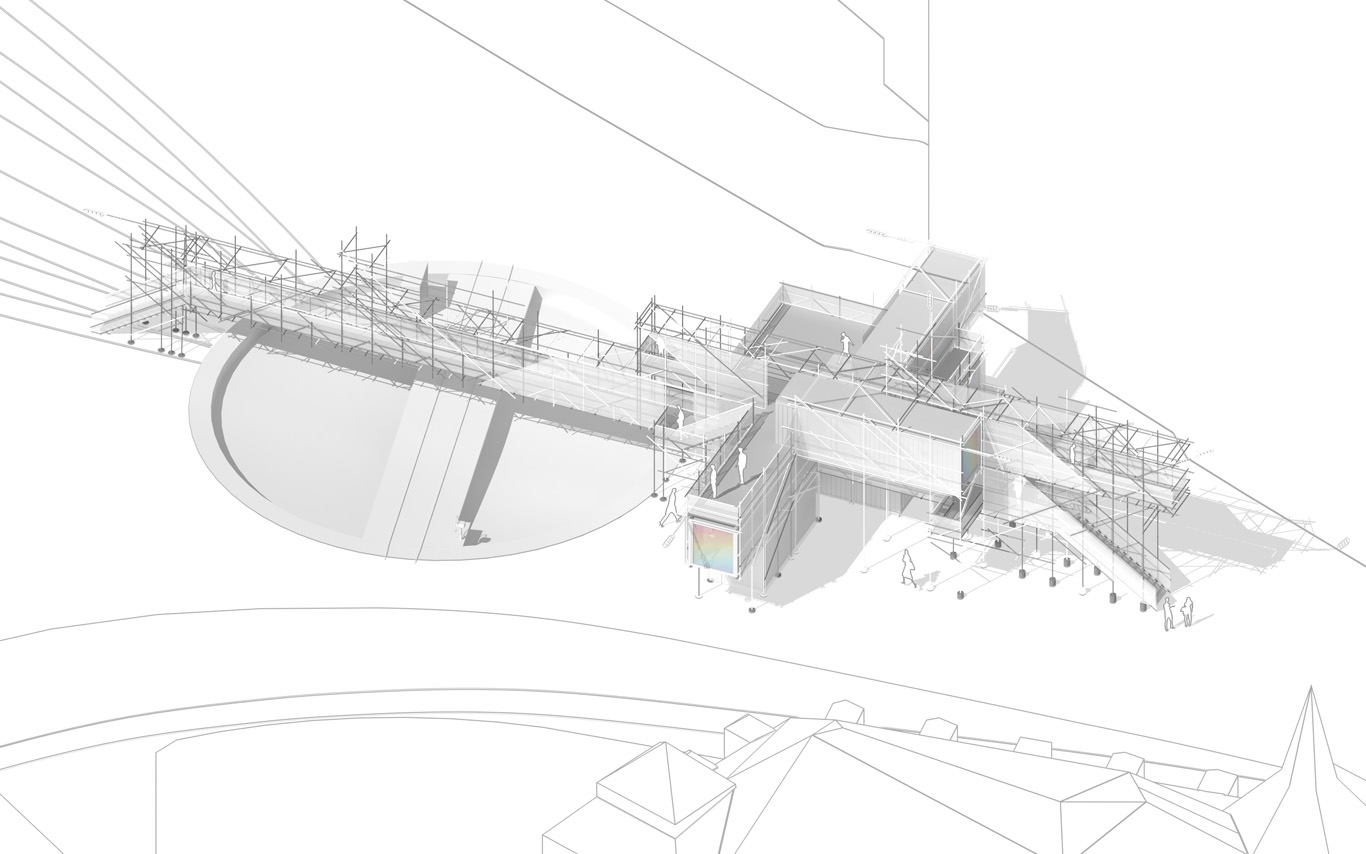

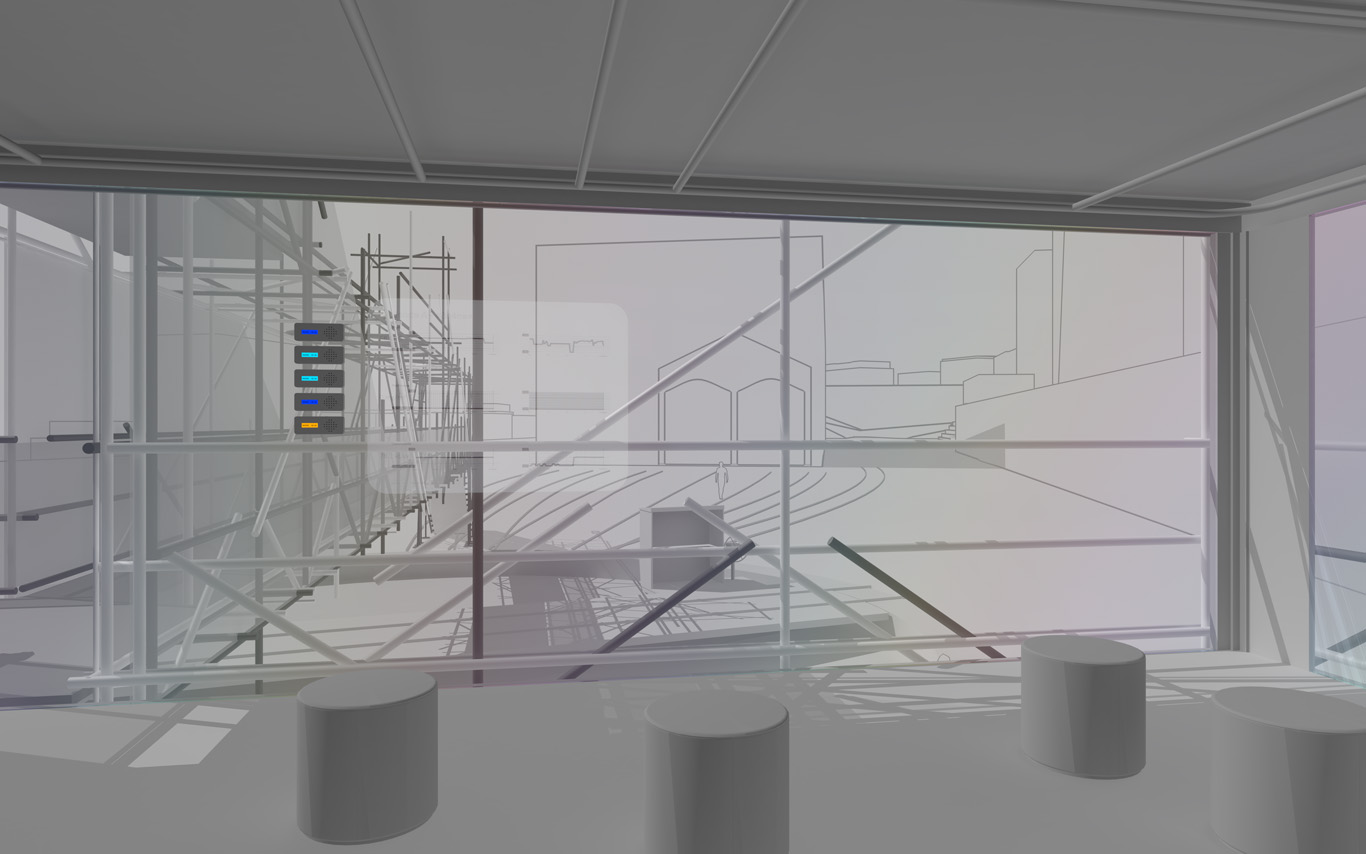

The Public Platform of Future-Past is a structure (an information and sightseeing pavilion), a Platform that overlooks an existing Public site while basically taking it as it is, in a similar way to an archeological platform over an excavation site.

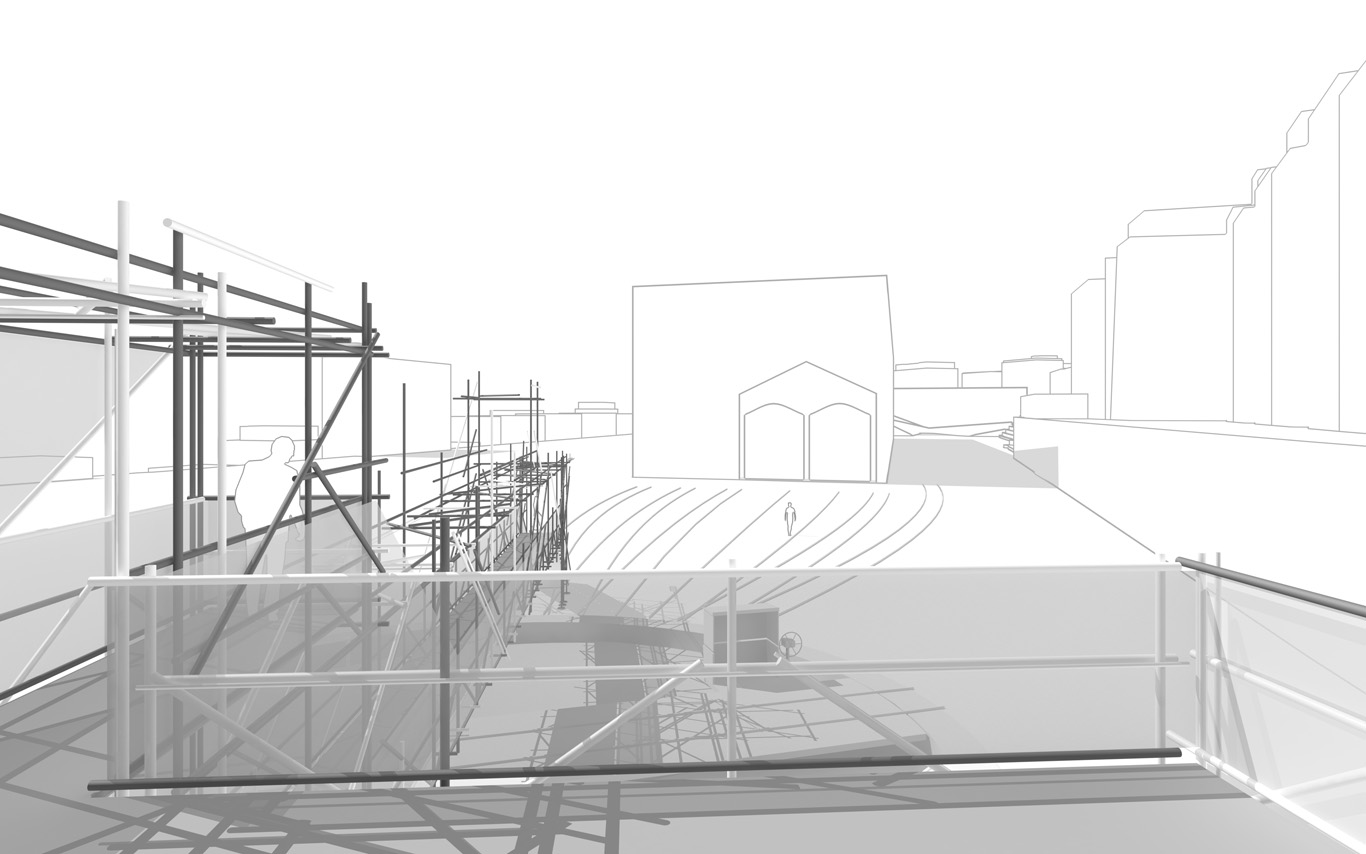

The asphalt ground floor remains virtually untouched, with traces of former uses kept as they are, some quite old (a train platform linked to an early 20th-century locomotive hall), some less so (painted parking spaces). The surrounding environment will move and change considerably over the years as new constructions go up. The pavilion will monitor and document these changes, hence the last part of its name: "Future-Past".

By nonetheless touching the site at a few points, the pavilion slightly reorganizes the area and creates space for a small new outdoor cafe and a bike parking area. This enhanced ground-floor program can work on its own, separately from the upper floors.

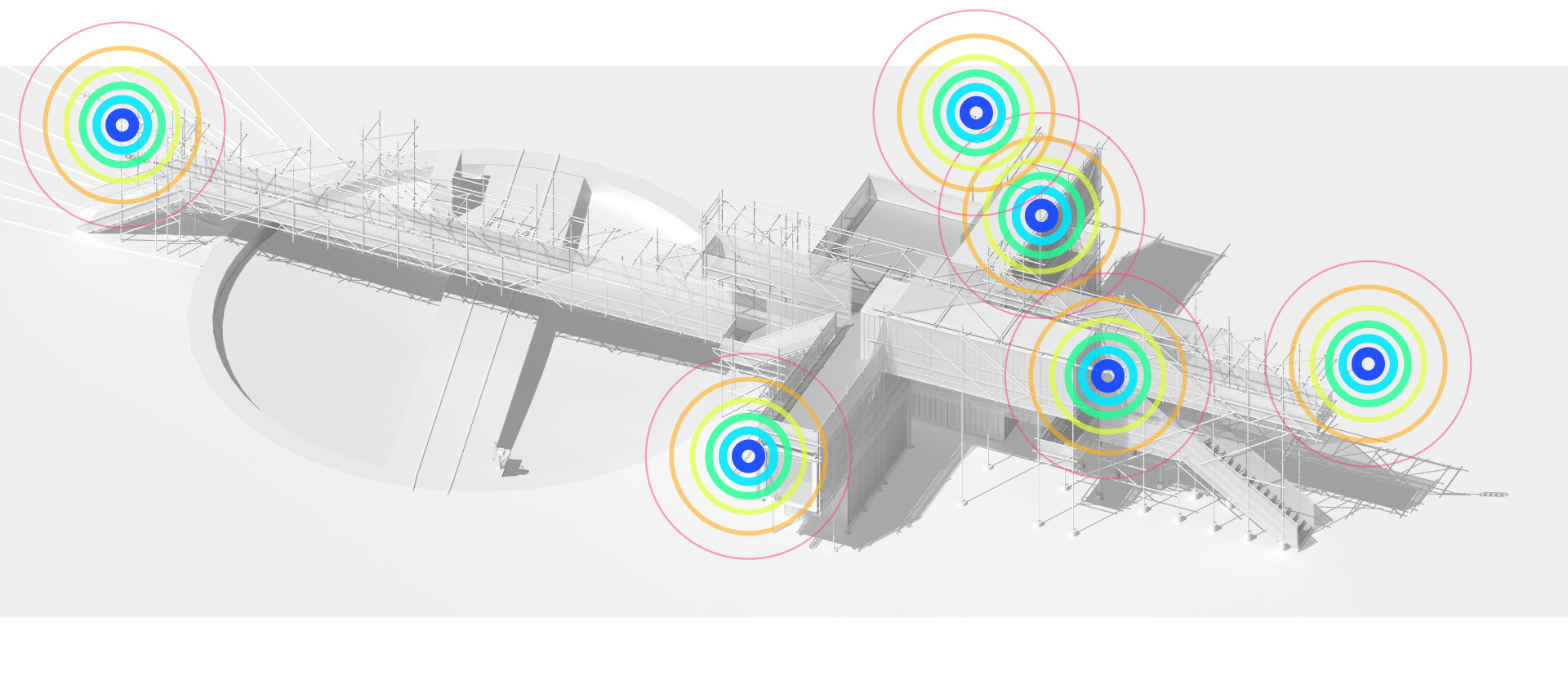

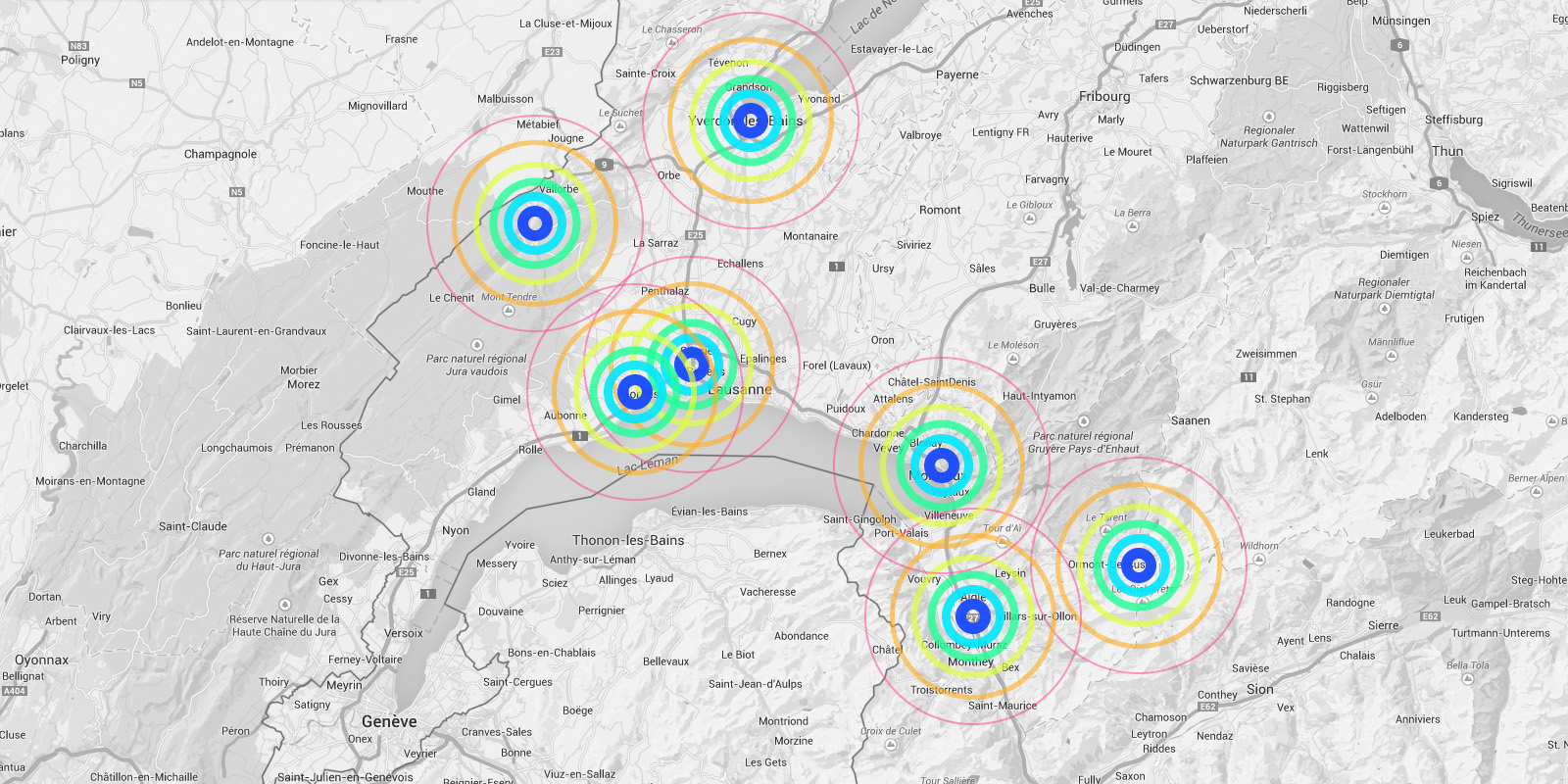

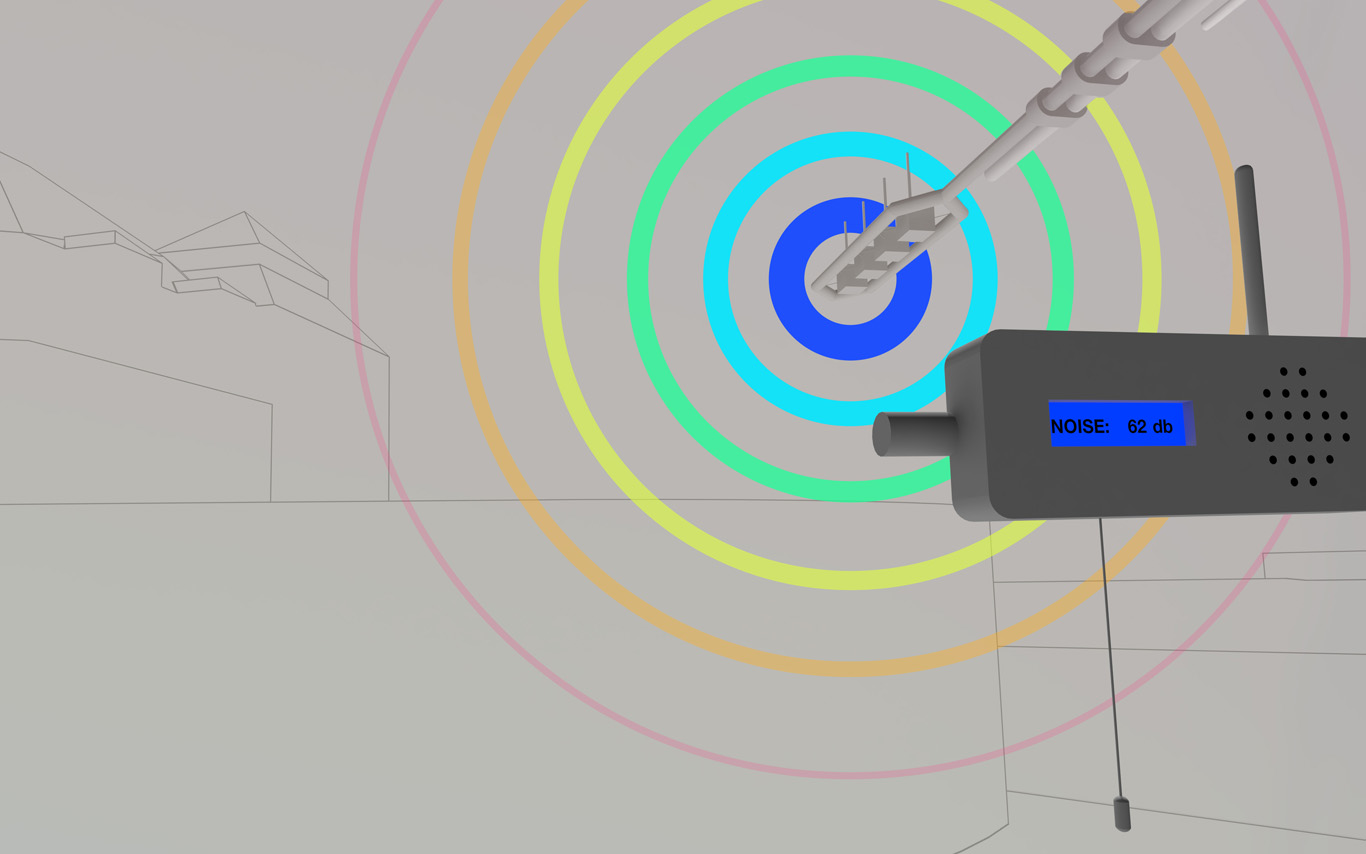

Several areas are linked to monitoring activities (input devices) and/or displays (in red, top image); these concern points of interest and views from the platform or elsewhere. They consist of localized devices on the platform itself (5 locations), satellite devices implemented directly in the three construction sites, and even devices in distant cities of the larger political area concerned by the new constructions (three museums, two large new public squares, a new railway station and a new metro); the latter are rather output devices. As in a similar earlier installation in a public park during a festival, Heterochrony (bottom image), the raw data can be of different kinds: visual, audio, numeric values from sensors (%, °C, ppm, dB, lm, mb, etc.), ...

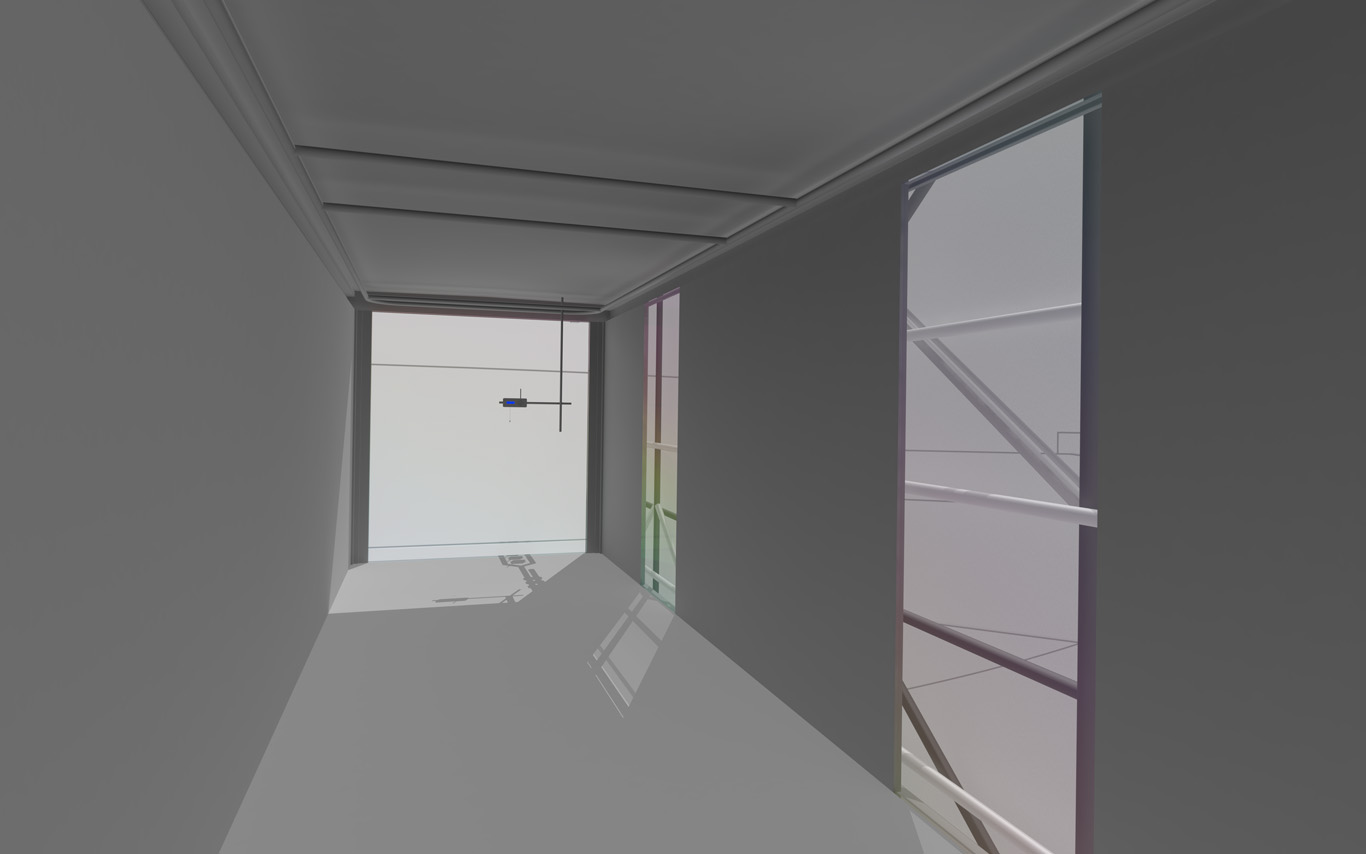

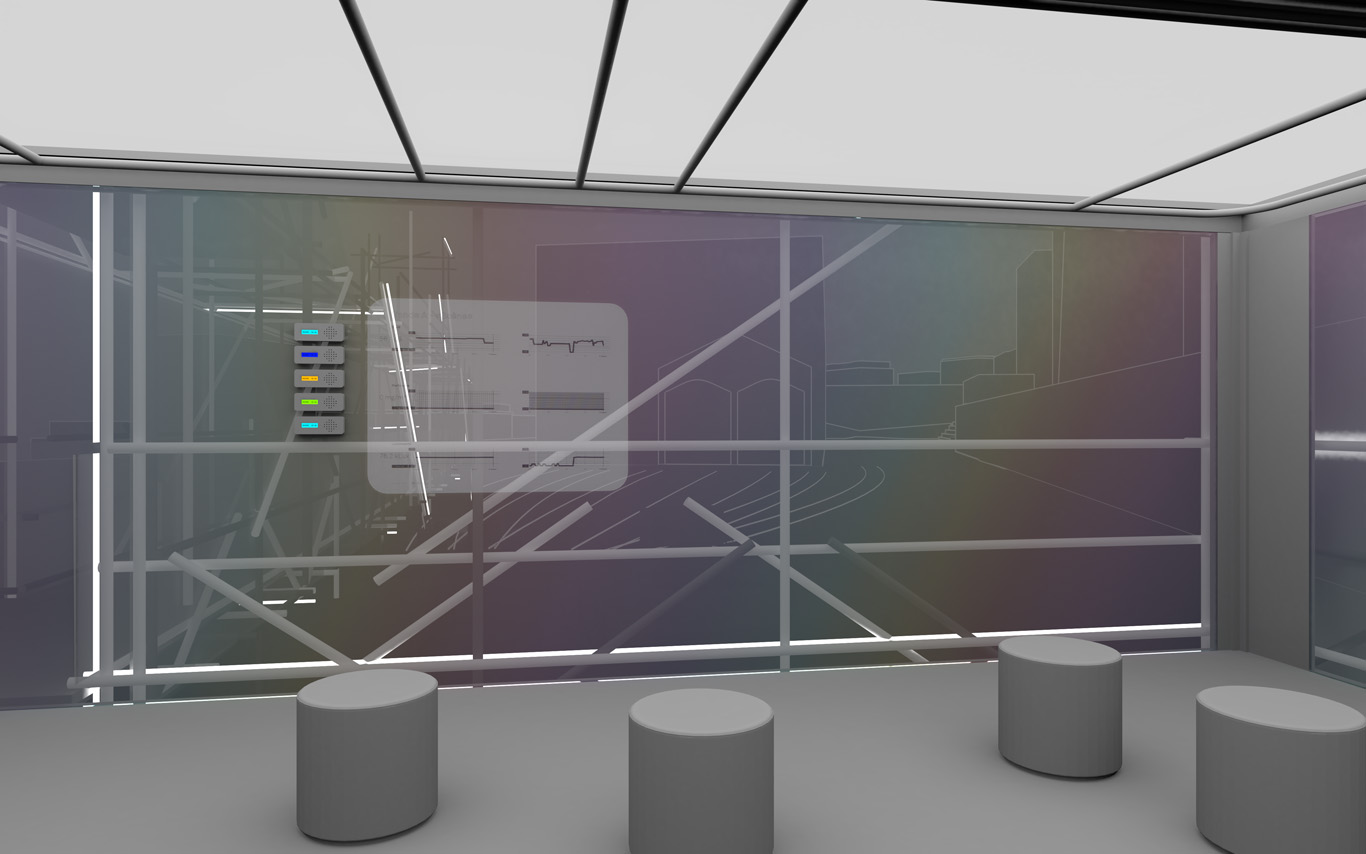

Input and output devices remain low-cost and simple in their expression: several input devices (sensors) are placed outside the pavilion, in the structural elements, and point toward areas of interest (construction sites or specific parts of them). Inside, directly related to these sensors and to the sightseeing spots, are output devices with recognizable blue screens. These are mainly voice interfaces: voice outputs driven by a bot according to architectural "scores" or algorithmic rules (middle image). Once the rules are designed, the "architectural system" runs on its own. That's why we've named this system of automated bots "Ar.I.", which could stand for "Architectural Intelligence", as it is entirely part of the architectural project.

The coding of "Ar.I." and the use of data could easily become more experimental, transformative and performative over the life of the PPoFP.

Observers (users) and their natural "curiosity" play a central role: the first observations and monitoring are indeed those they produce in an analog way (with their eyes and ears) at each of the 5 points of interest and through their wanderings. Extending this natural interest, a simple cord in front of each "output device" can be pulled, triggering a set of new measurements by all the related sensors outside. This new set of data enters the database and becomes readable by "Ar.I."
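To make the idea of an architectural "score" more concrete, here is a minimal sketch, assuming each rule pairs a condition on the latest sensor readings with a sentence for the voice interface. The actual Ar.I. rules are not documented in this post: the rule names, thresholds, sentences and the `perform` function are hypothetical, chosen only to illustrate a rule-driven bot.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List

Readings = Dict[str, float]   # e.g. {"db": 72.0, "ppm": 450.0, "temp_c": 18.5}

@dataclass
class Rule:
    name: str
    condition: Callable[[Readings], bool]   # when the bot should speak
    utterance: Callable[[Readings], str]    # what the voice interface says

# Hypothetical "score": thresholds and sentences are illustrative only.
SCORE: List[Rule] = [
    Rule("noise",
         lambda r: r.get("db", 0.0) > 70,
         lambda r: f"The construction site is loud right now: {r['db']:.0f} dB."),
    Rule("co2",
         lambda r: r.get("ppm", 0.0) > 800,
         lambda r: f"CO2 near the site reaches {r['ppm']:.0f} ppm."),
    Rule("calm",
         lambda r: r.get("db", 0.0) <= 70 and r.get("ppm", 0.0) <= 800,
         lambda r: "The site is calm at the moment."),
]

def perform(score: List[Rule], readings: Readings) -> List[str]:
    """Return, in score order, the sentences triggered by the latest readings."""
    return [rule.utterance(readings) for rule in score if rule.condition(readings)]

print(perform(SCORE, {"db": 82.0, "ppm": 450.0}))
# ['The construction site is loud right now: 82 dB.']
```

Keeping the rules as plain data means the "score" can be rewritten over the life of the pavilion without touching the code that runs it.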
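The cord mechanism can be sketched as an event handler: pulling the cord at one point of interest asks every sensor attached to that point for a fresh reading and appends the resulting set to the database the bot reads from. This is a sketch under assumptions: the `Pavilion` class, point names and in-memory list standing in for the database are all hypothetical.

```python
import time
from collections import defaultdict
from typing import Callable, Dict, List

Sensor = Callable[[], float]   # a function returning one fresh measurement

class Pavilion:
    def __init__(self) -> None:
        # point of interest -> {measured quantity: sensor}
        self.sensors: Dict[str, Dict[str, Sensor]] = defaultdict(dict)
        self.database: List[dict] = []   # stand-in for the store read by the bot

    def attach(self, point: str, quantity: str, sensor: Sensor) -> None:
        self.sensors[point][quantity] = sensor

    def pull_cord(self, point: str) -> dict:
        """Trigger a new set of measurements by all sensors linked to one point."""
        record = {
            "point": point,
            "time": time.time(),
            "values": {q: read() for q, read in self.sensors[point].items()},
        }
        self.database.append(record)   # the new set becomes readable by the bot
        return record

pavilion = Pavilion()
pavilion.attach("point-1", "db", lambda: 64.0)        # dummy sensors for the example
pavilion.attach("point-1", "temp_c", lambda: 21.5)
print(pavilion.pull_cord("point-1")["values"])        # {'db': 64.0, 'temp_c': 21.5}
```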

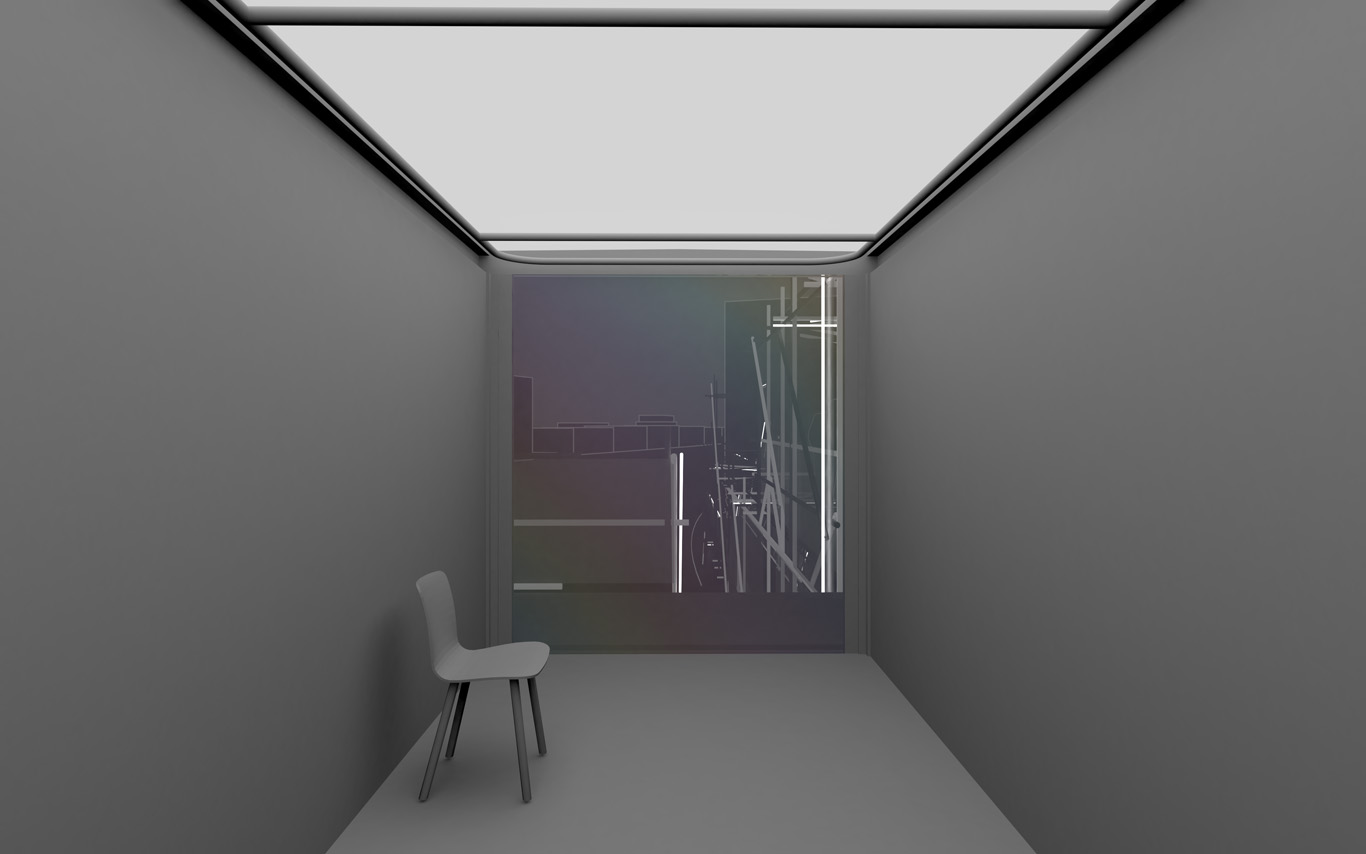

The entire interaction and data-processing part of the project was the subject of a short dedicated study (a document about this study can be accessed here, in French only). Its main design implication is that "Ar.I." takes part in the "filtering" that happens between the "outside" and the "inside" by contributing to the creation of a variable but specific "inside atmosphere" ("artificial artificial", since the outside has been artificial too since the Anthropocene, hasn't it?). In doing so, the "Ar.I." bot fully plays its part in the main architectural program: triggering the perception of an inside and proposing patterns of occupation.

"Ar.I." computes spatial elements and mixes times. It can organize configurations for the pavilion (data, displays, recorded sounds, lighting, clocks). It can set it to a past, a present, but also an estimated future disposition. "Ar.I." is mainly a set of open rules and a vocal interface, with the exception of the common access and conference space, which is also equipped with visual displays. At times, "Ar.I." simply spells out data, while at others, more intriguingly, it starts giving "spatial advice" about the configuration of environmental data.

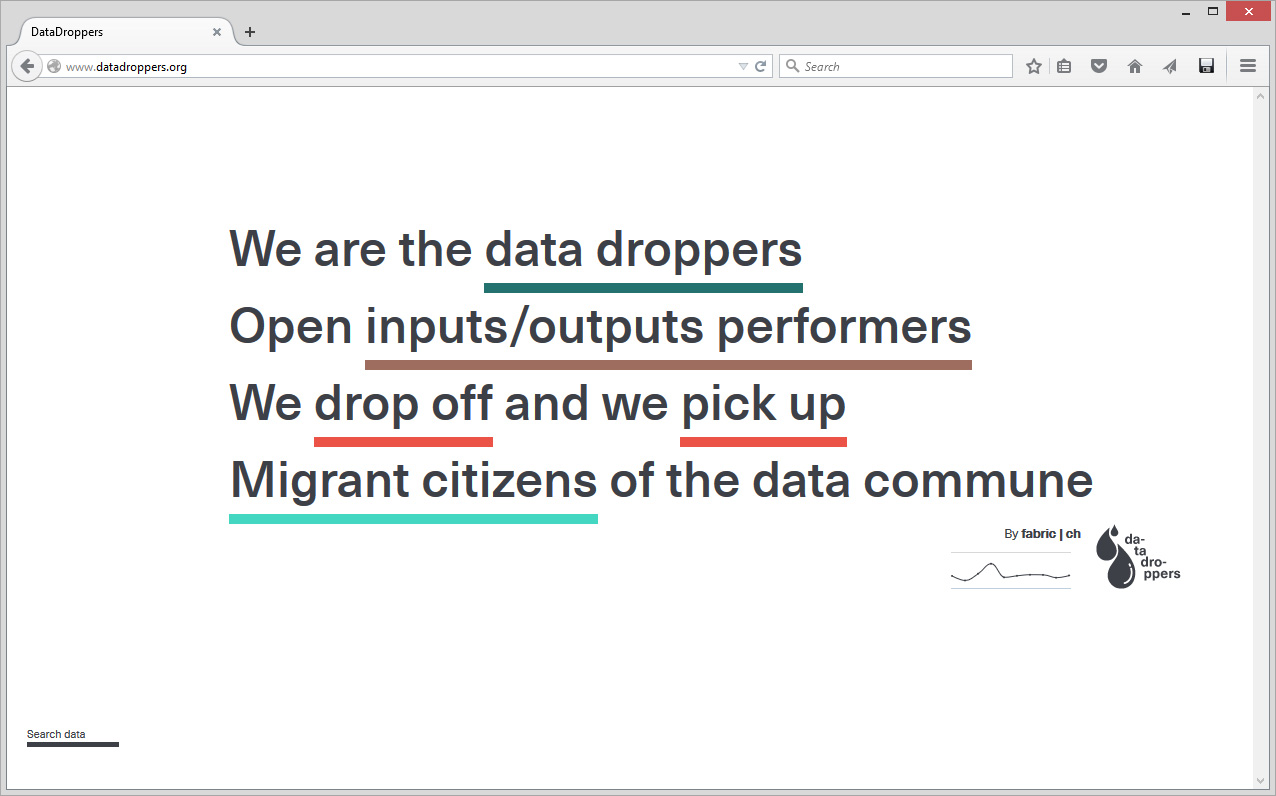

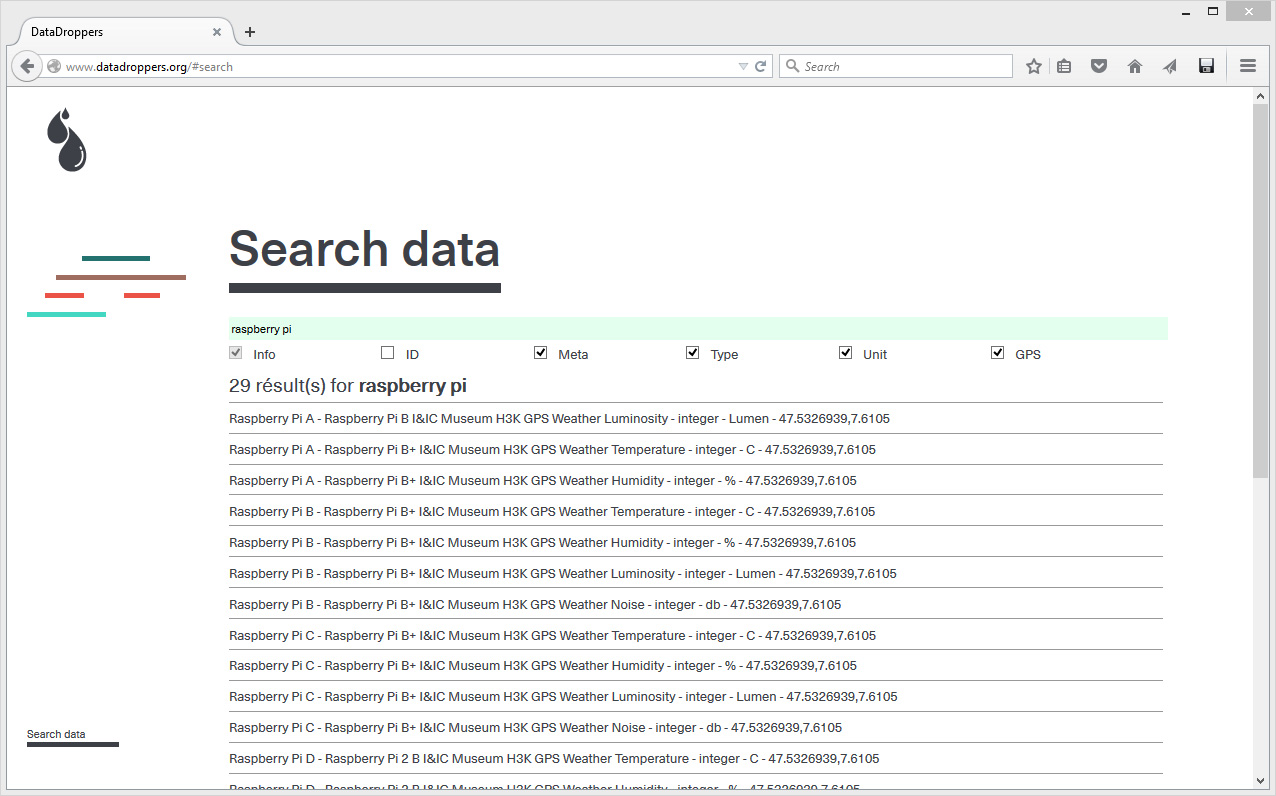

In parallel to Public Platform of Future Past and in the frame of various research or experimental projects, scientists and designers at fabric | ch have been working to set up their own platform for declaring and retrieving data (more about this project, Datadroppers, here): a simple platform, adequate to our needs, which we can develop as desired and where we know what happens to the data. To further guarantee the nature of the project, a "data commune" was created out of it, and we plan to release the code on Github.

In this context, we are also turning our own office into a test tube for various monitoring systems, so that we can assess the reliability and handling of different systems. It is also an occasion to "hack" some basic domestic equipment, turn it into sensors and try out new functions, in this case with the help of our 3D printer (middle image). Again, this experimental activity has become a side project, Studio Station (ongoing, with Pierre-Xavier Puissant), while keeping the general background goal of "concept-proofing" the different elements of the main project.

A common room (conference room) in the pavilion hosts and displays the various data: 5 small screen devices, 5 voice interfaces controlled for the 5 areas of interest, and a semi-transparent data screen. This is again inspired by what was tested and realized back in 2012 during Heterochrony (top image).
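As an illustration of the "declaring and retrieving" idea only, here is a minimal in-memory sketch of a drop/pick data store. The class name `DataCommune`, the methods `drop` and `pick` and the record layout are assumptions made for this example; they are not the Datadroppers API.

```python
import time
from collections import defaultdict
from typing import Dict, List, Optional, Tuple

class DataCommune:
    """Toy public data store: anyone can drop values under a key and pick them back."""

    def __init__(self) -> None:
        self._streams: Dict[str, List[Tuple[float, object]]] = defaultdict(list)

    def drop(self, key: str, value: object, timestamp: Optional[float] = None) -> None:
        """Declare a new value for a key (e.g. a sensor name or a place)."""
        ts = time.time() if timestamp is None else timestamp
        self._streams[key].append((ts, value))

    def pick(self, key: str, since: float = 0.0) -> List[Tuple[float, object]]:
        """Retrieve all values dropped for a key at or after `since`."""
        return [(ts, v) for ts, v in self._streams.get(key, []) if ts >= since]

commune = DataCommune()
commune.drop("lausanne/temp", 12.5, timestamp=100.0)
commune.drop("lausanne/temp", 13.0, timestamp=200.0)
print(commune.pick("lausanne/temp", since=150.0))   # [(200.0, 13.0)]
```

Because nothing leaves the store except through `pick`, it is easy to reason about what happens to the data, which is the property the paragraph above insists on.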

----- ----- -----

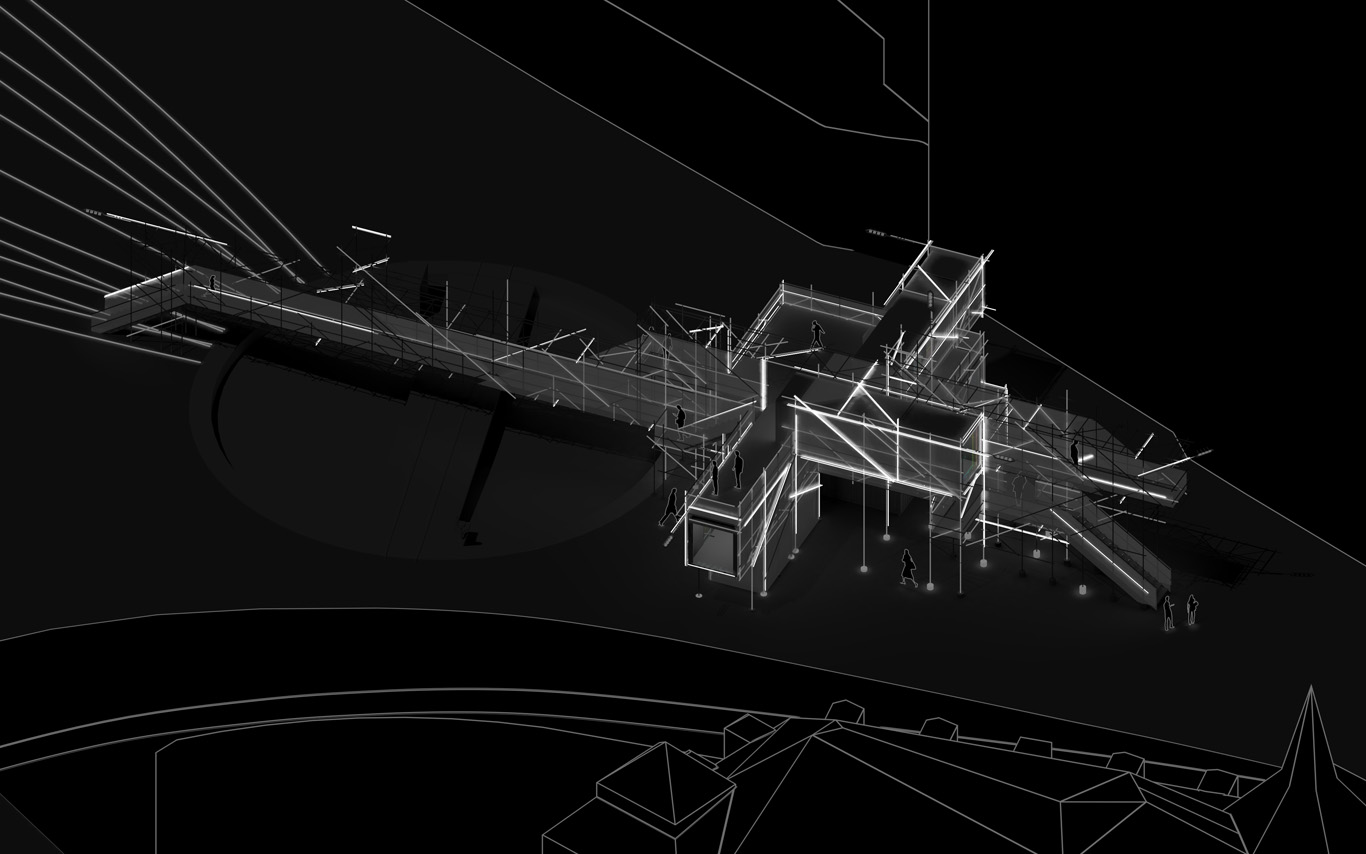

PPoFP, several images: day and night configurations & a few comments

Public Platform of Future-Past, axonometric views day/night.

An elevated walkway that overlooks the almost archaeological site (past-present-future). Circulations and views define and articulate the architecture and the five main "points of interest". These main points concentrate spatial events, infrastructures and monitoring technologies. Layer by layer, the surroundings are filtered by various means and become enclosed spaces.

Walks, views over transforming sites, ...

Data treatment, bots, voice and minimal visual outputs.

Night views, circulations, points of view.

Night views, ground.

Random yet controllable lights at night. Highlighted areas of interest, points of "spatial density".

Project: fabric | ch

Team: Patrick Keller, Christophe Guignard, Christian Babski, Sinan Mansuroglu, Yves Staub, Nicolas Besson.

Monday, June 13. 2016

Wolfram Data Drop vs. Datadroppers | #data #drop #pick #compute

Note: after a few weeks of posts about Universal Income, here comes the "Universal data accumulator for devices, sensors, programs, humans & more" by Wolfram (best known for the Wolfram Alpha computational engine and the earlier Mathematica libraries, on which most of their other services seem to be built).

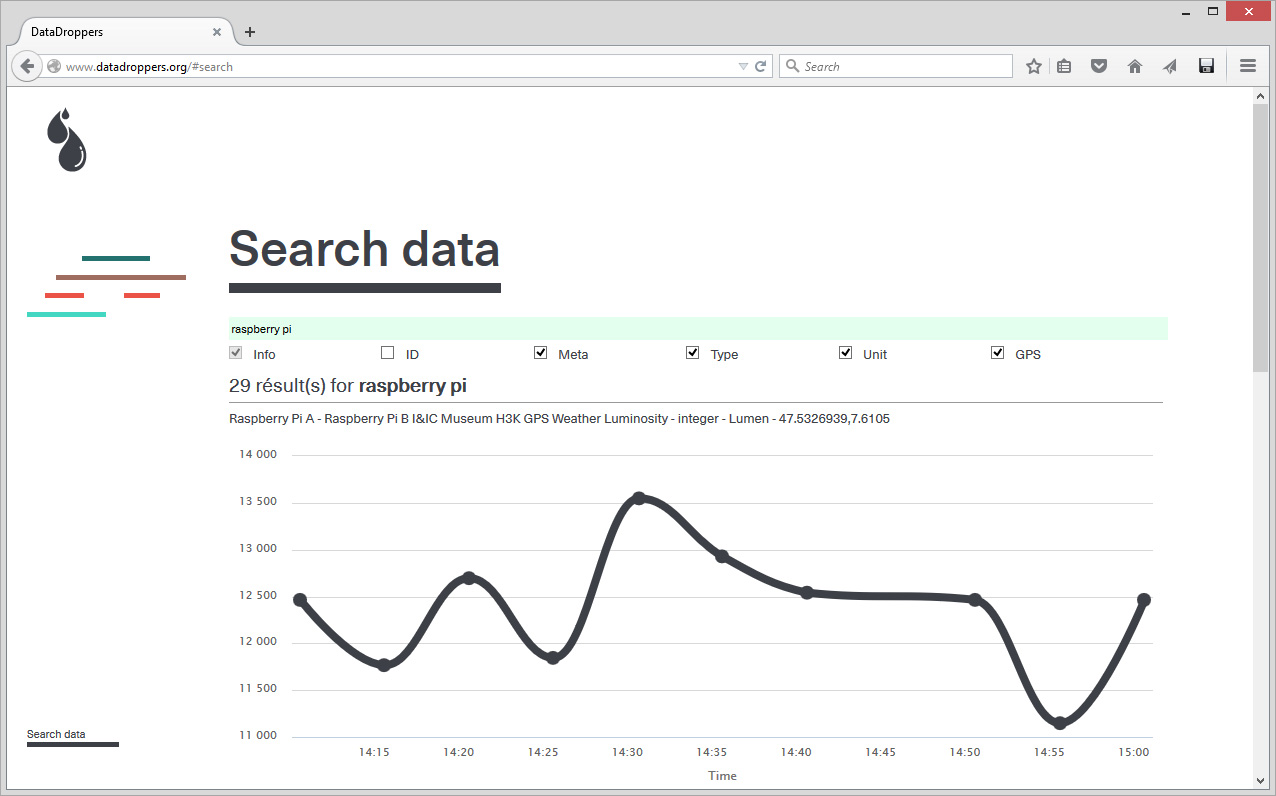

Funnily, we picked a very similar name for a very similar data service that we set up for ourselves and our friends last year, during an exhibition at H3K: Datadroppers (!), with a different set of references in mind (Drop City? From which we borrowed the colors. "Turn on, tune in, drop out"?). Our service is naturally much more grassroots and less developed, but therefore also quite light to use.

We developed this data dropping/picking project with another architectural project in mind, which I'll talk about in the coming days: Public Platform of Future-Past. The two were clearly and closely linked.

"Universal" is therefore back in the loop as a keyword... (I would rather adopt a different word for myself and our work, though: "Diversal", a word I've been using for 2 years now and naively thought I had "invented", but no...)

Via Wolfram

-----

"The Wolfram Data Drop is an open service that makes it easy to accumulate data of any kind, from anywhere—setting it up for immediate computation, visualization, analysis, querying, or other operations." The service looks more oriented toward data analysis than toward use in third-party designs and projects.

Via Datadroppers

-----

"Datadroppers is a public and open data commune, it is a tool dedicated to data collection and sharing that tries to remain as simple, minimal and easy to use as possible." A direct and light data tool for designers, belonging to designers (fabric | ch) who use it for their own projects...

Friday, March 13. 2015

Personal Cloud? | #data #participant

Via Rhizome

-----

"Computing has always been personal. By this I mean that if you weren't intensely involved in it, sometimes with every fiber in your body, you weren't doing computers, you were just a user."

Sunday, December 14. 2014

I&IC workshop #3 at ECAL: output > Networked Data Objects & Devices | #data #things

Via iiclouds.org

-----

The third workshop we ran in the frame of I&IC with our guest researcher Matthew Plummer-Fernandez (Goldsmiths University) and the 2nd & 3rd year students (Ba) in Media & Interaction Design (ECAL) ended last Friday (| rblg note: on the 21st of Nov.) with interesting results. The workshop focused on small situated computing technologies that could collect, aggregate and/or “manipulate” data in automated ways (bots) and that would certainly need to rely heavily on cloud technologies due to their low storage and computing capacities. In other words, “networked data objects” that will soon become very common, thanks to cheap new small computing devices (e.g. Raspberry Pis for DIY applications) or sensors (e.g. Arduino, etc.). The title of the workshop was “Botcave”, whose objective Matthew explained in a previous post.

The context of work was chosen according to our overall research objective, even though we knew it wouldn’t directly address the “cloud computing” apparatus (something we learned was a difficult approach during the second workshop), but that it would nonetheless question its interfaces and the way we experience the whole service, especially the evolution of this apparatus through new types of everyday interactions and data generation.

Matthew Plummer-Fernandez (#Algopop) during the final presentation at the end of the research workshop.

Through this workshop, Matthew and the students definitely raised the following points and questions:

1° Small situated technologies that will soon spread everywhere will become heavy users of cloud-based computing and data storage, as they have low storage and computing capacities. While they might just use and manipulate existing data (like some of the workshop projects, e.g. #Good vs. #Evil or Moody Printer), they will mainly also contribute to producing extra-large additional quantities of it (e.g. Robinson Miner). Yet the amount of meaningful data to be “pushed” and “treated” in the cloud remains a big question mark: as there will be (too) huge amounts of such data (Lucien will probably post something later about this subject: “fog computing”), interdisciplinary teams may end up needing to rethink cloud architectures.

2° Stored data become “alive” or significant only when “manipulated”. This can be done by “analog users”, of course, but it is now generally done by rules and algorithms of different sorts (in the frame of this workshop: automated bots). Are these rules “situated” as well and possibly context aware (context intelligent), e.g. Robinson Miner? Or are they somehow more abstract and located anywhere in the cloud? Both?

3° These “Networked Data Objects” (and soon “Network Data Everything”) will contribute to “babelizing” user interactions and interfaces in all directions, paving the way for new types of combinations and experiences (creolization processes): The Beast, The Like Hotline, Simon Coins and The Wifi Cracker could be considered early stages of such processes. Cloud interfaces and computing will then become everyday “things” and, at “home”, new domestic objects with which we’ll have totally different interactions (this last point still needs to be discussed, though, as domesticity might not exist anymore according to Space Caviar).

Moody Printer – (Alexia Léchot, Benjamin Botros)

Moody Printer remains a basic conceptual proposal at this stage: a hacked printer, connected to a hidden Raspberry Pi (it would be located inside the printer), has access to weather information. Like a human being, its “mood” can be affected by such inputs, following some basic rules (good/bad, hot/cold, sunny/cloudy/rainy, etc.). The automated process then searches Google Images according to its current “mood” (a direct link between “mood”, weather conditions and an exhaustive list of words) and autonomously starts printing the results.

A different kind of printer combined with weather monitoring.
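The “mood” logic can be sketched as a small rule table, assuming weather arrives as a temperature and a sky condition. The thresholds, moods and search words below are invented for illustration (the students' actual rules are not documented here), and the image search and printing steps are left out.

```python
from typing import Dict, List

# Hypothetical mapping: mood -> words used for the image search.
MOOD_WORDS: Dict[str, List[str]] = {
    "cheerful": ["sunflower", "beach", "ice cream"],
    "gloomy": ["fog", "empty street", "umbrella"],
    "grumpy": ["storm", "broken glass", "traffic jam"],
    "sleepy": ["blanket", "fireplace", "snow"],
}

def mood(temperature_c: float, sky: str) -> str:
    """Derive the printer's 'mood' from basic weather inputs (hot/cold, sky)."""
    if sky == "rainy":
        return "grumpy" if temperature_c > 15 else "gloomy"
    if sky == "sunny":
        return "cheerful" if temperature_c > 5 else "sleepy"
    return "sleepy" if temperature_c <= 5 else "gloomy"   # cloudy or unknown sky

def search_terms(temperature_c: float, sky: str) -> List[str]:
    """Words the printer would send to an image search for this weather."""
    return MOOD_WORDS[mood(temperature_c, sky)]

print(mood(22, "sunny"), search_terms(22, "sunny"))
# cheerful ['sunflower', 'beach', 'ice cream']
```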

The Beast – (Nicolas Nahornyj)

Top: Nicolas Nahornyj is presenting his project to the assembly. Bottom: the laptop and “the beast”.

The Beast is a device that asks to be fed with money at random times… It is your new laptop companion. To calm it down for a while, you must insert a coin in the slot provided for that purpose. If you don’t comply, not only will it keep asking for money on a more frequent basis, but it will also randomly pick an image lying around on your hard drive, post it on a popular social network (e.g. Facebook, Pinterest, etc.) and then erase the image from your local disk. Slowly, The Beast will remove all the images from your hard drive and post them online…

A different kind of slot machine combined with private file theft.

Robinson – (Anne-Sophie Bazard, Jonas Lacôte, Pierre-Xavier Puissant)

Top: Pierre-Xavier Puissant is looking at the autonomous “minecrafting” of his bot. Bottom: the proposed bot container, which takes up the idea of cubic construction. It could be placed in your garden, in one of your rooms, then in your fridge, etc.

Robinson automates the procedural construction of MineCraft environments. To do so, the bot uses local weather information monitored by a weather sensor inside the cubic box, attached to a Raspberry Pi also located within the box. This sensor looks for changes in temperature, humidity, etc., which then change the building blocks and construction rules inside MineCraft (put your cube inside your fridge and it will start to build icy blocks; put it in a wet environment and it will build with grass, etc.).

A different kind of thermometer combined with a construction game.
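Robinson's rule, linking sensor readings to building materials, can be sketched as a simple function. The thresholds, the extra "sand" and "stone" cases and the one-block-per-reading stacking are assumptions for illustration; block names are plain strings, not Minecraft identifiers, and the part that actually places blocks in MineCraft is left out.

```python
from typing import List, Tuple

def block_for(temperature_c: float, humidity_pct: float) -> str:
    """Pick a building block from local readings (hypothetical thresholds)."""
    if temperature_c < 4:       # e.g. the cube was put in the fridge
        return "ice"
    if humidity_pct > 70:       # wet environment
        return "grass"
    if temperature_c > 30:      # hot and dry
        return "sand"
    return "stone"

def build_column(readings: List[Tuple[float, float]]) -> List[str]:
    """Stack one block per (temperature, humidity) reading, oldest first."""
    return [block_for(t, h) for t, h in readings]

print(build_column([(21, 40), (3, 40), (22, 90)]))   # ['stone', 'ice', 'grass']
```

Stacking one block per reading turns the construction into a physical-looking record of how conditions around the box changed over time.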

Note: Matthew Plummer-Fernandez also produced two (auto)MineCraft bots during the workshop week. The first one builds an environment according to fluctuations in different market indexes, while the second one tries to build “shapes” to escape this first environment. These two bots can be downloaded from the Github repository that was created during the workshop.

#Good vs. #Evil – (Maxime Castelli)

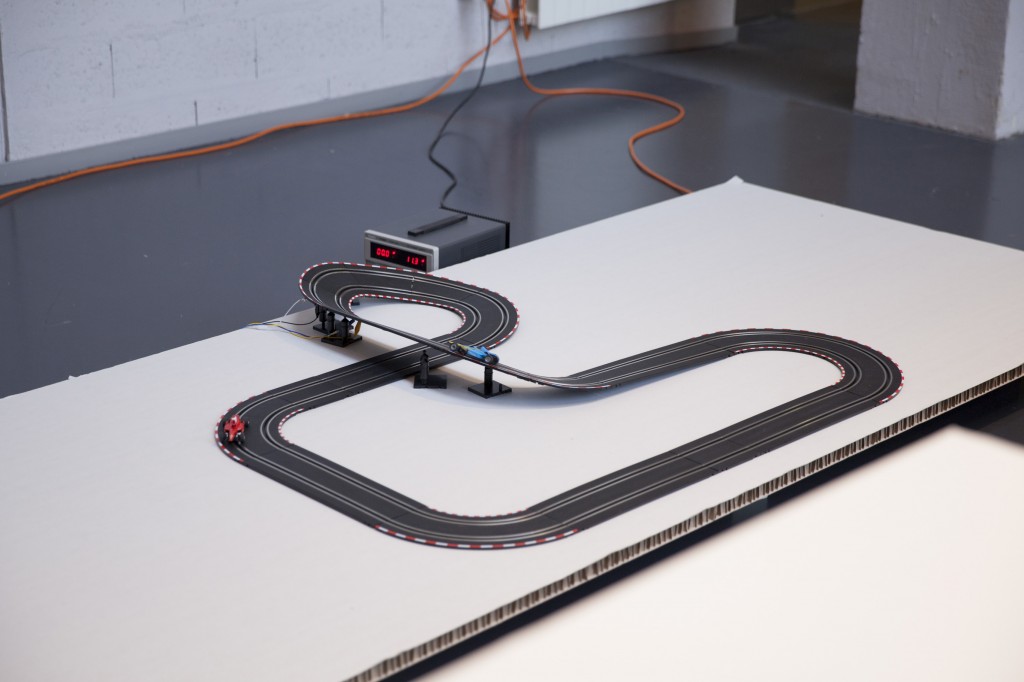

Top: a transformed car racing game. Bottom: a race is going on between two Twitter hashtags, materialized by two cars.

#Good vs. #Evil is a fairly straightforward project: a hack of an existing two-car racing game. In this case, the bot counts occurrences of two hashtags on Twitter, #Good and #Evil. Each time one or the other appears, the device sends an electrical pulse to the associated car. The result is a slow, perpetual race between "good" and "evil", driven by how often each hashtag is posted online.

A different kind of data visualization combined with racing cars.
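The counting logic can be sketched without any network access. Given a stream of post texts, it counts each occurrence of #Good or #Evil and emits one pulse for the matching car. The `race` function and the representation of pulses as a list of car names are assumptions for illustration. Fetching posts from Twitter and driving the track's electrical input are not shown.

```python
import re
from collections import Counter
from typing import Iterable

# Whole-hashtag match, case-insensitive: "#good" counts, "#goodness" does not.
HASHTAG = re.compile(r"#(good|evil)\b", re.IGNORECASE)


def race(posts: Iterable[str]) -> tuple[list[str], Counter]:
    """Return the sequence of pulses sent to the cars and the total per car."""
    pulses: list[str] = []
    totals: Counter = Counter()
    for text in posts:
        for match in HASHTAG.finditer(text):
            car = match.group(1).lower()  # "good" or "evil"
            pulses.append(car)            # one electrical pulse per occurrence
            totals[car] += 1
    return pulses, totals


pulses, totals = race(["#Good morning", "pure #evil", "#goodness gracious", "#Good and #Evil and #good"])
print(pulses)  # ['good', 'evil', 'good', 'evil', 'good']
print(totals)  # Counter({'good': 3, 'evil': 2})
```

Each car's position on the track is then simply its running total, which is why the race never ends: it only reflects which word is being posted more often.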

The “Like” Hotline – (Mylène Dreyer, Caroline Buttet, Guillaume Cerdeira)

Top: Caroline Buttet and Mylène Dreyer are explaining their project. The laptop screen, showing a Facebook account, is projected on the left edge of the image. Bottom: Caroline Buttet is using a hacked phone to "like" pages.

The "Like" Hotline hacks a regular phone and installs a hotline bot on it. Connected to its own Facebook account, which follows a few personalities and their posts, the bot asks the caller questions that can be answered using the phone's keypad. After navigating through a few choices, the hotline bot helps you like a post on the social network.

A different kind of hotline combined with a social network.

Simoncoin – (Romain Cazier)

Top: Romain Cazier introducing his "coin" project. Bottom: the device combines an old "Simon" memory game with the production of digital coins.

Simoncoin was unfortunately not finished by the end of the workshop week, but it was thought out in great detail, too much to explain in this short presentation. The main idea was to use the game logic of Simon to generate coins. Just as Bitcoins become harder and harder to mine, Simoncoins become more and more difficult to generate because of the game logic.

Another different kind of money combined with a memory game.
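One possible reading of "more and more difficult to generate" is that each new coin requires repeating a longer Simon sequence. The sketch below follows that reading. The `challenge` and `try_mint` functions, the four-step starting length and the "one extra step per coin already mined" rule are all hypothetical, since the project's actual rules were not presented.

```python
import random

COLOURS = ("green", "red", "yellow", "blue")  # the four Simon pads


def challenge(coins_mined: int, rng: random.Random, base_length: int = 4) -> list[str]:
    """Sequence the player must repeat to mint the next coin (hypothetical rule).

    Every coin already mined adds one step, so each coin is harder than the last.
    """
    return [rng.choice(COLOURS) for _ in range(base_length + coins_mined)]


def try_mint(coins_mined: int, answer: list[str], seq: list[str]) -> int:
    """Return the new coin count: +1 only if the player reproduced the sequence."""
    return coins_mined + 1 if answer == seq else coins_mined


rng = random.Random(42)
seq = challenge(3, rng)
print(len(seq))                     # 7
print(try_mint(3, list(seq), seq))  # 4
```

With four pads and independent random steps, a blind guess succeeds with probability (1/4)^n for a sequence of length n. Each extra step therefore divides the chance by four, a loose analogue of a rising mining difficulty.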

The Wifi Cracker – (Bastien Girshig, Martin Hertig)

Top: Bastien Girshig and Martin Hertig (left of Matthew Plummer-Fernandez) presenting. Middle and Bottom: the wifi password cracker slowly displays the letters of the wifi password.

The Wifi Cracker is an object you can leave on its own in a space. It looks a little like a clock, but it won't display the time. Instead, it looks for available wifi networks in the area and tries to find their protected passwords (Bastien and Martin found a ready-made process for that). The bot tests all possible combinations, which takes time. Once the device has found the working password, it uses its round display to reveal it, letter by letter and slowly as well.

A different kind of cuckoo clock combined with a password cracker.
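The phrase "it will take time" is easy to quantify. In the worst case, an exhaustive search must try every candidate, and the number of candidates is the alphabet size raised to the password length. For reference, a WPA2 passphrase is at least 8 characters long. The helper names and the guess rate below are assumptions for illustration only. They say nothing about the ready-made process the students used, which is not described.

```python
def keyspace(alphabet_size: int, length: int) -> int:
    """Number of candidate passwords of exactly `length` characters."""
    return alphabet_size ** length


def worst_case_days(alphabet_size: int, length: int, guesses_per_second: float) -> float:
    """Days needed to try every candidate at a constant guess rate."""
    return keyspace(alphabet_size, length) / guesses_per_second / 86_400


print(keyspace(26, 8))                       # 208827064576
print(round(worst_case_days(26, 8, 1_000)))  # 2417
```

With lowercase letters only and exactly 8 characters, there are 26^8 = 208,827,064,576 candidates. At an assumed 1,000 guesses per second, trying them all would take about 2,417 days, roughly six and a half years. Larger alphabets or longer passwords push this up very quickly.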

Acknowledgments:

Lots of thanks to Matthew Plummer-Fernandez for his involvement and great workshop direction; to Lucien Langton for his involvement, technical digging into Raspberry Pis, pictures and documentation; and to Nicolas Nova and Charles Chalas (from HEAD), as well as Christophe Guignard, Christian Babski and Alain Bellet, for taking part in or helping during the final presentation. Special thanks to the ECAL students involved in the project for the energy they put into it: Anne-Sophie Bazard, Benjamin Botros, Maxime Castelli, Romain Cazier, Guillaume Cerdeira, Mylène Dreyer, Bastien Girshig, Jonas Lacôte, Alexia Léchot, Nicolas Nahornyj, Pierre-Xavier Puissant.

From left to right: Bastien Girshig, Martin Hertig (The Wifi Cracker project), Nicolas Nova, Matthew Plummer-Fernandez (#Algopop), a "mystery girl", Christian Babski (in the background), Patrick Keller, Sebastian Vargas, Pierre-Xavier Puissant (Robinson Miner), Alain Bellet and Lucien Langton (taking the pictures…) during the final presentation on Friday.

fabric | rblg

This blog is the survey website of fabric | ch - studio for architecture, interaction and research.

We curate and reblog articles, research, writings, exhibitions and projects that we notice and find interesting during our everyday practice and readings.

Most articles concern the intertwined fields of architecture, territory, art, interaction design, thinking and science. From time to time, we also publish documentation about our own work and research, immersed among these related resources and inspirations.

This website is used by fabric | ch as an archive, a reference and a resource. It is shared with all those interested in the same topics as we are, in the hope that they will also find valuable references and content in it.
